Abstract

The QLogic 2-port 10Gb Converged Network Adapter (CFFh) for BladeCenter offers robust 8Gb Fibre Channel storage connectivity and 10Gb networking over a single Converged Enhanced Ethernet (CEE) link. Because this adapter combines the functions of a Network Interface Card and a Host Bus Adapter on a single converged adapter, clients can realize potential benefits in cost, power and cooling, and data center footprint by deploying less hardware.

Note: This adapter is withdrawn from marketing.

Introduction


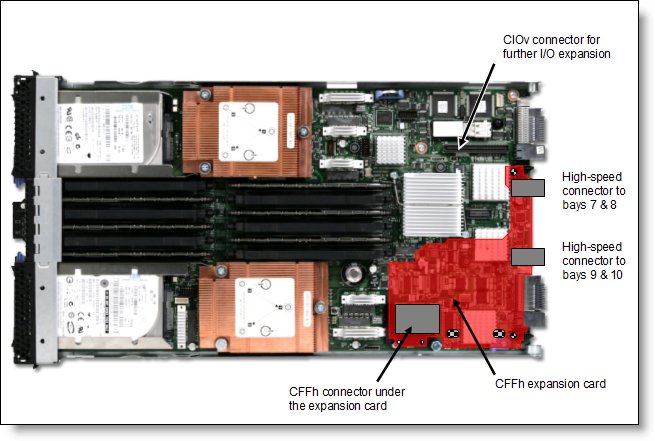

Figure 1 shows the QLogic 2-port 10Gb Converged Network Adapter (CFFh).

Figure 1. The QLogic 2-port 10Gb Converged Network Adapter (CFFh)

Did you know?

These Converged Network Adapters are backward compatible with many 4Gb storage targets and work with most existing LAN and SAN infrastructures, providing investment protection when consolidating data centers. You can use this adapter with the 10Gb Pass-Thru Module and connect to a converged top-of-rack switch such as the Brocade 8000 or Cisco Nexus 5000. With this setup, you can reduce hardware, as well as power and cooling costs, while boosting performance by operating at 10Gb bandwidth.

The adapter connects to the midplane directly, without cables or SFP modules. Eliminating these components for up to 14 servers can yield savings that alone cover the BladeCenter chassis investment.

Part number information

Table 1. Ordering part number and feature code

| Description | Part number | Feature code (x-config / e-config) |
|---|---|---|
| QLogic 2-port 10Gb CNA (CFFh) for BladeCenter | 00Y3280* | A3JB / 8275 |
| QLogic 2-port 10Gb Converged Network Adapter (CFFh) | 42C1830* | 3592 / 8275 |

* Withdrawn from marketing

These part numbers include the following items:

- One QLogic 2-port 10Gb Converged Network Adapter (CFFh)

- Documentation package

Features

The expansion card has the following features:

- Combo Form Factor (CFFh) PCI Express 2.0 x8 adapter

- Communication module: QLogic ISP8112

- Support for up to two CEE high-speed switch modules (HSSMs) in a BladeCenter H or HT chassis

- Support for 10Gb Converged Enhanced Ethernet (CEE)

- Support for Fibre Channel over Converged Enhanced Ethernet (FCoCEE)

- Full hardware offload for FCoCEE protocol processing

- Support for IPv4 and IPv6

- Support for SAN boot over CEE, PXE boot, and iSCSI boot

- Support for Wake on LAN

- Support for Jumbo Frames

- Support for BladeCenter Open Fabric Manager for BIOS, UEFI, and FCode

Stateless offload features include:

- IP, TCP, and UDP checksum offloads

- Large and Giant Send Offload (LSO, GSO)

- Receive Side Scaling (RSS)

- Header-data split

- Interrupt coalescing

- NetQueue

Standards compliance (IEEE and IETF):

- 802.1Qbb rev. 0 (Priority-based flow control)

- 802.1Qaz rev. 0 (Enhanced transmission selection)

- 802.1Qaz rev. 0 (DCBX protocol)

- 802.3ae (10Gb Ethernet)

- 802.1Q (VLAN)

- 802.3ad (Link Aggregation)

- 802.1p (Priority Encoding)

- 802.3x (Flow Control)

- 802.3ap (KX/KX4)

- IEEE 1149.1 (JTAG)

- IPv4 Specification (RFC 791)

- IPv6 Specification (RFC 2460)

- TCP/UDP Specification (RFC 793/768)

- ARP Specification (RFC 826)

Operating environment

The expansion card has the following physical specifications:

- Temperature:

  - 0 to 55 °C (32 to 131 °F) at 0 to 914 m (0 to 3000 ft.) operating

  - -43 to 73 °C (-40 to 163 °F) at 0 to 914 m (0 to 3000 ft.) storage

- Relative humidity: 5% to 93% (non-condensing)

Supported servers and I/O modules

The QLogic 2-port 10Gb Converged Network Adapter (CFFh) is supported in the BladeCenter servers that are listed in Table 2.

Table 2. Supported servers

| Part number | Product description | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| 00Y3280 | QLogic 2-port 10Gb CNA (CFFh) for BladeCenter | Y | Y | Y | Y | Y | Y | Y | Y |

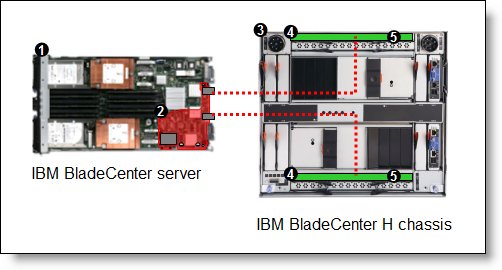

Figure 2 shows where the CFFh card is installed in a BladeCenter server.

Figure 2. Location on the BladeCenter server planar where the CFFh card is installed

BladeCenter chassis support is based on the blade server type in which the expansion card is installed. Consult ServerProven to see in which chassis each blade server type is supported: http://ibm.com/servers/eserver/serverproven/compat/us/.

Table 3 lists the I/O modules that can be used to connect to the QLogic 2-port 10Gb Converged Network Adapter (CFFh).

Table 3. I/O modules supported with the QLogic 2-port 10Gb Converged Network Adapter (CFFh)

| Description | Part number | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| IBM Virtual Fabric 10Gb Switch Module | 46C7191 | N | N | Y | N | Y | N | N |
| 10Gb Ethernet Pass-Thru Module | 46M6181 | N | N | Y | N | Y | N | N |
| Cisco Nexus 4001I Switch Module | 46M6071 | N | N | Y | N | Y | N | N |
| Brocade Converged 10GbE Switch Module | 69Y1909 | N | N | Y | N | Y | N | N |
| BNT 6-port 10 Gb High Speed Switch* | 39Y9267 | N | N | N | N | N | N | N |

* Withdrawn from marketing

These I/O modules are supported in BladeCenter H and BladeCenter HT chassis only.

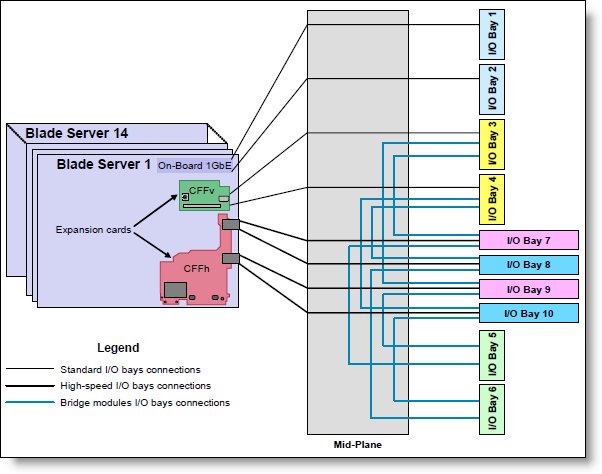

In BladeCenter H, the ports of CFFh cards are routed through the midplane to I/O bays 7, 8, 9, and 10, as shown in Figure 3. The BladeCenter HT is similar in that the CFFh cards are also routed through the midplane to I/O bays 7, 8, 9, and 10.

Figure 3. BladeCenter H I/O topology showing the I/O paths from CFFh expansion cards

One I/O module must be installed in the chassis for each Ethernet port that you want to use on the expansion card. Table 4 lists the specific I/O bays in the chassis.

Table 4. Locations of I/O modules required to connect to the expansion card

| Expansion card | I/O bay 7 | I/O bay 8 | I/O bay 9 | I/O bay 10 |
|---|---|---|---|---|
| QLogic 2-port 10Gb Converged Network Adapter (CFFh) | Supported I/O module | Not used | Supported I/O module | Not used |
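The bay requirement in Table 4 can be expressed as a small lookup. The following Python sketch is illustrative only. The function name `required_io_bays` and the assignment of port 1 to bay 7 and port 2 to bay 9 are assumptions made for the example; the table states only that bays 7 and 9 are used and that bays 8 and 10 are not.

```python
# Illustrative sketch: which I/O bays need a supported I/O module.
# ASSUMPTION: port 1 -> bay 7 and port 2 -> bay 9. Table 4 only states that
# bays 7 and 9 are used; the per-port assignment here is hypothetical.
PORT_TO_BAY = {1: 7, 2: 9}


def required_io_bays(ports_in_use):
    """Return the sorted I/O bays that need a supported I/O module."""
    bays = set()
    for port in ports_in_use:
        if port not in PORT_TO_BAY:
            raise ValueError(f"adapter has no port {port}")
        bays.add(PORT_TO_BAY[port])
    return sorted(bays)


if __name__ == "__main__":
    # Using both adapter ports requires a module in each of the two bays.
    print(required_io_bays([1, 2]))
```

Using a single port in this sketch returns a single bay, which reflects the rule that one I/O module is needed per Ethernet port in use.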

Popular configurations

Figure 4 shows the use of 10Gb Ethernet Pass-Thru Modules to route the two Ethernet ports from the QLogic 2-port 10Gb Converged Network Adapter (CFFh) that is installed in each server. Two 10Gb Ethernet Pass-Thru Modules are installed in bay 7 and bay 9 of the BladeCenter H chassis. All connections between the controller, the card, and the I/O modules are internal to the chassis, so no internal cabling is needed. External top-of-rack (TOR) switches that support FCoCEE, together with external cabling, are required for the pass-thru modules to operate.

Figure 4. A converged 20Gb solution using two 10Gb Ethernet Pass-Thru Modules

The components used in this configuration are listed in Table 5.

Table 5. Components used when connecting QLogic 2-port 10Gb Converged Network Adapter (CFFh) to two 10Gb Ethernet Pass-Thru Modules

| Diagram reference | Part number/machine type | Description | Quantity |
|---|---|---|---|
| | Varies | BladeCenter HS22 or other supported server | 1 to 14 |
| | 00Y3280 | QLogic 2-port 10Gb CNA (CFFh) for BladeCenter | 1 per server |
| | 8852 or 8740/8750 | BladeCenter H or BladeCenter HT | 1 |
| | 46M6181 | 10Gb Ethernet Pass-Thru Module | 2 |
| | 44W4408 | IBM 10GBase-SR SFP+ Transceiver | Up to 28* |

* The 10Gb Ethernet Pass-Thru Module has 14 external 10Gb ports. You must have one transceiver for each 10Gb port in an I/O module.
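The "Up to 28" quantity follows from two pass-thru modules with 14 external ports each. As a rough sizing aid, the following Python sketch computes the transceiver count for a partially populated chassis. The function names are hypothetical, and the sketch assumes that each installed server uses exactly one external port on each pass-thru module.

```python
# Illustrative sizing sketch for the pass-thru configuration in Table 5.
# ASSUMPTION: each server uses one external port on every pass-thru module.
PORTS_PER_PASS_THRU = 14  # external 10Gb ports per module (table footnote)
ADAPTER_PORTS = 2         # ports on each adapter
PORT_SPEED_GB = 10        # speed of each adapter port


def transceivers_needed(servers, modules=2):
    """One transceiver per external port in use on each pass-thru module."""
    if not 1 <= servers <= PORTS_PER_PASS_THRU:
        raise ValueError("servers must be between 1 and 14")
    if not 1 <= modules <= ADAPTER_PORTS:
        raise ValueError("modules must be 1 or 2")
    return servers * modules


def bandwidth_per_server_gb(modules=2):
    """Converged bandwidth per server when each port has its own module."""
    if not 1 <= modules <= ADAPTER_PORTS:
        raise ValueError("modules must be 1 or 2")
    return PORT_SPEED_GB * modules


if __name__ == "__main__":
    print(transceivers_needed(14))    # fully populated chassis
    print(bandwidth_per_server_gb())  # both adapter ports in use
```

With 14 servers and two modules, the sketch yields the 28 transceivers listed in Table 5 and the 20Gb per-server figure named in the Figure 4 caption.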

Operating system support

The expansion card supports the following operating systems on the HS and LS blades:

- Microsoft Windows Server 2003, Web Edition

- Microsoft Windows Server 2003/2003 R2, Datacenter Edition

- Microsoft Windows Server 2003/2003 R2, Datacenter x64 Edition

- Microsoft Windows Server 2003/2003 R2, Enterprise Edition

- Microsoft Windows Server 2003/2003 R2, Enterprise x64 Edition

- Microsoft Windows Server 2003/2003 R2, Standard Edition

- Microsoft Windows Server 2003/2003 R2, Standard x64 Edition

- Microsoft Windows Server 2008 R2

- Microsoft Windows Server 2008, Datacenter x64 Edition

- Microsoft Windows Server 2008, Datacenter x86 Edition

- Microsoft Windows Server 2008, Enterprise x64 Edition

- Microsoft Windows Server 2008, Enterprise x86 Edition

- Microsoft Windows Server 2008, Standard x64 Edition

- Microsoft Windows Server 2008, Standard x86 Edition

- Microsoft Windows Server 2008, Web x64 Edition

- Microsoft Windows Server 2008, Web x86 Edition

- Microsoft Windows Small Business Server 2003/2003 R2 Premium Edition

- Microsoft Windows Small Business Server 2003/2003 R2 Standard Edition

- Red Hat Enterprise Linux 5 Server Edition

- Red Hat Enterprise Linux 5 Server Edition with Xen

- Red Hat Enterprise Linux 5 Server with Xen x64 Edition

- Red Hat Enterprise Linux 5 Server x64 Edition

- Red Hat Enterprise Linux 6 Server Edition

- Red Hat Enterprise Linux 6 Server x64 Edition

- SUSE LINUX Enterprise Server 10 for AMD64/EM64T

- SUSE LINUX Enterprise Server 10 for x86

- SUSE LINUX Enterprise Server 10 with Xen for AMD64/EM64T

- SUSE LINUX Enterprise Server 10 with Xen for x86

- SUSE LINUX Enterprise Server 11 for AMD64/EM64T

- SUSE LINUX Enterprise Server 11 for x86

- SUSE LINUX Enterprise Server 11 with Xen for AMD64/EM64T

- VMware ESX 4.0

- VMware ESX 4.1

- VMware ESXi 4.0

- VMware ESXi 4.1

- VMware vSphere 5

- VMware vSphere 5.1

Support for operating systems is based on the combination of the expansion card and the blade server in which it is installed. See IBM ServerProven for the latest information about the specific versions and service packs supported: http://ibm.com/servers/eserver/serverproven/compat/us/. Select the blade server and then select the expansion card to see the supported operating systems.

The expansion card supports the following operating systems on the Power Systems blades:

- IBM AIX 5L for POWER Version 5.3

- IBM AIX Version 6.1

- IBM Virtual I/O Server

- IBM i 6.1

- IBM i 7.1

- Red Hat Enterprise Linux 5 for IBM POWER

- SUSE LINUX Enterprise Server 10 for IBM POWER

- SUSE LINUX Enterprise Server 11 for IBM POWER

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

BladeCenter®

ServerProven®

The following terms are trademarks of other companies:

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

IBM®, AIX®, and ibm.com® are trademarks of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.