Abstract

The 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter is a dual port InfiniBand Host Channel Adapter (HCA) based on proven Mellanox ConnectX IB technology. This HCA, when combined with the QDR switch, delivers end-to-end 40 Gb bandwidth per port. This solution is ideal for low latency, high bandwidth, performance-driven server and storage clustering applications in a High Performance Compute environment.

Note: This adapter is withdrawn from marketing.

Introduction

The 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter is a dual port InfiniBand Host Channel Adapter (HCA) based on proven Mellanox ConnectX IB technology. This HCA, when combined with the QDR switch, delivers end-to-end 40 Gb bandwidth per port. This solution is ideal for low latency, high bandwidth, performance-driven server and storage clustering applications in a High Performance Compute environment. The adapter uses the CFFh form factor and can be combined with a CIOv or CFFv adapter to get additional SAS, Fibre Channel, or Ethernet ports.

Figure 1 shows the expansion card.

Figure 1. 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter

Did you know?

InfiniBand is a scalable, high-performance fabric that has been used for petascale computing. Roadrunner, once the largest supercomputer in the world, was the first to break the barrier of 1,000 trillion operations per second. Roadrunner is based on Mellanox ConnectX DDR adapters. QDR is the next generation, offering twice the bandwidth per port.

Part number information

Table 1 shows the part numbers to order the 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter.

Table 1. Part number and feature code for ordering

| Description | Part number | Feature codes |
|---|---|---|
| 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter | 46M6001* | 0056 |

* Withdrawn from marketing

The part number includes the following items:

- One 2-Port 40 Gb InfiniBand Expansion Card (CFFh)

- Documentation package

Features and specifications

The 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter includes the following features and specifications:

- The 2-Port 40 Gb InfiniBand Expansion Card features include:

  - Form factor: CFFh

  - Host interface: PCI Express x8 Gen 2 (5.0 GT/s), 40+40 Gbps bidirectional bandwidth

  - Dual 4X InfiniBand ports at 10 Gbps, 20 Gbps, or 40 Gbps per port

  - 6.5 GBps bidirectional performance

  - RDMA, Send/Receive semantics

  - Hardware-based congestion control

  - Atomic operations

  - 16 million I/O channels

  - MTU from 256 bytes to 4 KB

  - 1 GB messages

  - 9 virtual lanes: 8 data + 1 management

  - 1 µs MPI ping latency

  - CPU offload of transport operations

  - End-to-end QoS and congestion control

  - TCP/UDP/IP stateless offload

- Enhanced InfiniBand features

  - Hardware-based reliable transport

  - Hardware-based reliable multicast

  - Extended Reliable Connected transport

  - Enhanced Atomic operations

  - Fine-grained end-to-end QoS

- Hardware-based I/O virtualization features

  - Single Root I/O Virtualization (SR-IOV)

  - Address translation and protection

  - Multiple queues per virtual machine

  - VMware NetQueue support

- Protocol support

  - Open MPI, OSU MVAPICH, HP MPI, Intel MPI, MS MPI, Scali MPI

  - IPoIB, SDP, RDS

  - SRP, iSER, FCoIB, and NFS RDMA
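The 40 Gbps figures in the feature list are signaling rates. PCI Express Gen 2 and QDR InfiniBand both use 8b/10b line encoding, so only 8 of every 10 transmitted bits carry data. The following Python sketch works through that arithmetic, plus the number of MTU-sized packets needed to carry a 1 GB message. The 8b/10b factor is standard for these link generations; the function names are illustrative and not part of any driver or library API.

```python
# Worked arithmetic for the link rates quoted in the feature list.
# Assumption: 8b/10b line encoding, as used by PCI Express Gen 2 and QDR InfiniBand.

ENCODING_EFFICIENCY = 8 / 10  # 8 data bits carried per 10 transmitted bits


def pcie_gen2_data_rate_gbps(lanes: int, gt_per_s: float = 5.0) -> float:
    """Usable data rate in one direction, in Gbps, for a PCIe Gen 2 link."""
    return lanes * gt_per_s * ENCODING_EFFICIENCY


def ib_data_rate_gbps(lanes: int = 4, signal_gbps_per_lane: float = 10.0) -> float:
    """Usable data rate in one direction, in Gbps, for an InfiniBand port.

    10 Gbps per lane corresponds to QDR; use 5.0 for DDR or 2.5 for SDR.
    """
    return lanes * signal_gbps_per_lane * ENCODING_EFFICIENCY


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


def packets_per_message(message_bytes: int, mtu_bytes: int) -> int:
    """MTU-sized packets needed to carry one message (ceiling division)."""
    return -(-message_bytes // mtu_bytes)


if __name__ == "__main__":
    pcie = pcie_gen2_data_rate_gbps(lanes=8)
    qdr = ib_data_rate_gbps()
    print(f"PCIe Gen 2 x8: {pcie:.0f} Gbps per direction, "
          f"{2 * gbps_to_gbytes_per_s(pcie):.0f} GBps bidirectional")
    print(f"QDR 4X port: {qdr:.0f} Gbps per direction, "
          f"{2 * gbps_to_gbytes_per_s(qdr):.0f} GBps bidirectional")
    print(f"1 GB message at 4 KB MTU: {packets_per_message(2**30, 4096)} packets")
```

Under these assumptions both the host interface and a QDR port carry 32 Gbps of data per direction (4 GBps), or 8 GBps bidirectional. The measured 6.5 GBps figure sits below that theoretical ceiling, which is expected once protocol overhead is included; this sketch does not model that overhead.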

Operating environment

The 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter supports the following operating environment:

- Temperature

  - 10 to 52 °C (50 to 125.6 °F) at an altitude of 0 to 914 m (0 to 3,000 ft)

  - 10 to 49 °C (50 to 120.2 °F) at an altitude of 0 to 3,000 m (0 to 10,000 ft)

- Relative humidity

  - 8% to 80% (noncondensing)
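The limits above can be checked mechanically. The following Python sketch is hypothetical: it assumes the two temperature ranges mean that the 52 °C ceiling applies from 0 to 914 m and that the 49 °C ceiling applies above 914 m up to 3,000 m. It also reproduces the Fahrenheit conversions listed above. Condensation is not modelled.

```python
from typing import Optional

# Hypothetical helpers for checking an operating point against the limits above.
# Assumption: the 52 °C ceiling applies from 0 to 914 m; above 914 m and up to
# 3,000 m the ceiling is 49 °C. Condensation is not modelled.

MIN_TEMP_C = 10.0
MIN_RH_PERCENT = 8.0
MAX_RH_PERCENT = 80.0


def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9 / 5 + 32


def max_temp_c(altitude_m: float) -> Optional[float]:
    """Maximum supported temperature at an altitude, or None if out of range."""
    if 0 <= altitude_m <= 914:
        return 52.0
    if 914 < altitude_m <= 3000:
        return 49.0
    return None


def within_envelope(temp_c: float, altitude_m: float, rh_percent: float) -> bool:
    """True if temperature, altitude, and relative humidity are all within limits."""
    ceiling = max_temp_c(altitude_m)
    if ceiling is None:
        return False
    return (MIN_TEMP_C <= temp_c <= ceiling
            and MIN_RH_PERCENT <= rh_percent <= MAX_RH_PERCENT)


if __name__ == "__main__":
    for limit_c in (10, 49, 52):
        print(f"{limit_c} °C = {celsius_to_fahrenheit(limit_c):.1f} °F")
    print(within_envelope(temp_c=50, altitude_m=500, rh_percent=40))
    print(within_envelope(temp_c=50, altitude_m=2000, rh_percent=40))
```

With this interpretation, 50 °C is acceptable at 500 m but not at 2,000 m, where the lower 49 °C ceiling applies.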

Supported servers and I/O modules

Table 2 lists the BladeCenter servers that the 2-Port 40 Gb InfiniBand Expansion Card for BladeCenter supports.

Table 2. Supported servers

| 2-Port 40 Gb InfiniBand Expansion Card (CFFh) | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | N | N | N | N |

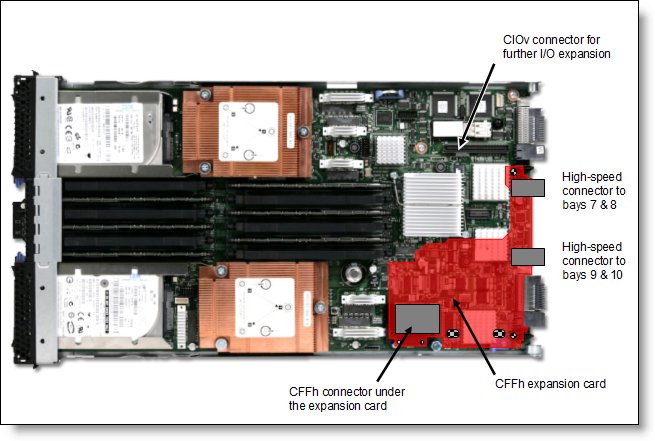

Figure 2 shows where the CFFh card is installed in a BladeCenter server.

Figure 2. Location on the BladeCenter server planar where the CFFh card is installed

BladeCenter chassis support depends on the blade server type in which the expansion card is installed. Consult ServerProven to see which chassis support each blade server type: http://ibm.com/servers/eserver/serverproven/compat/us/.

Table 3 lists the I/O modules that can be used to connect to the 2-Port 40 Gb InfiniBand Expansion Card (CFFh) for BladeCenter. The I/O modules listed in Table 3 are supported in BladeCenter H chassis only.

Table 3. I/O modules supported with the 2-Port 40 Gb InfiniBand Expansion Card (CFFh)

| I/O module | Part number | |||||||

| Voltaire 40 Gb InfiniBand Switch Module | 46M6005 | N | N | Y | N | N | N | N |

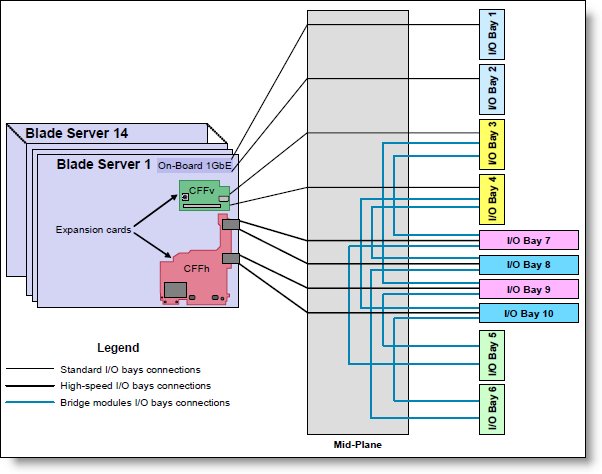

In BladeCenter H, the ports of CFFh cards are routed through the midplane to I/O bays 7, 8, 9, and 10, as shown in Figure 3.

Figure 3. BladeCenter H I/O topology showing the I/O paths from CFFh expansion cards

One I/O module must be installed in the chassis for each 4X InfiniBand port that you want to use on the expansion card. Table 4 lists the specific I/O bays. For the 2-Port 40 Gb InfiniBand Expansion Card (CFFh), install one I/O module in I/O bays 7/8 and another in I/O bays 9/10 (that is, two I/O modules, each occupying two adjacent high-speed bays).

Table 4. Locations of I/O modules required to connect to the expansion card

| Expansion card | I/O bays 7 and 8 | I/O bays 9 and 10 |
|---|---|---|
| 2-Port 40 Gb InfiniBand Expansion Card (CFFh) | Supported I/O module* | Supported I/O module* |

* A single Voltaire 40 Gb InfiniBand Switch Module occupies two adjacent high-speed bays (7 and 8, or 9 and 10). Because the expansion card has only two ports, each port connects to one InfiniBand switch module.
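The bay rule above can be expressed as a small configuration check. The following Python sketch is hypothetical: the data model (bay pairs mapped to module part numbers) and the function names are illustrative, and it only encodes what this guide states, namely one switch module per card port, with each Voltaire module occupying bays 7/8 or 9/10. It does not assume which card port is wired to which bay pair.

```python
from typing import Dict, List, Tuple

# Hypothetical configuration check for BladeCenter H. Encodes the rule that
# each 4X port on the CFFh card needs one switch module, and each Voltaire
# 40 Gb InfiniBand Switch Module occupies a pair of adjacent high-speed bays.

BayPair = Tuple[int, int]

VOLTAIRE_40G_SWITCH = "46M6005"
HIGH_SPEED_BAY_PAIRS: List[BayPair] = [(7, 8), (9, 10)]
CARD_PORTS = 2


def missing_bay_pairs(installed: Dict[BayPair, str]) -> List[BayPair]:
    """Bay pairs that do not yet hold a supported switch module."""
    return [pair for pair in HIGH_SPEED_BAY_PAIRS
            if installed.get(pair) != VOLTAIRE_40G_SWITCH]


def usable_card_ports(installed: Dict[BayPair, str]) -> int:
    """Number of card ports that have a supported switch module behind them."""
    populated = len(HIGH_SPEED_BAY_PAIRS) - len(missing_bay_pairs(installed))
    return min(populated, CARD_PORTS)


if __name__ == "__main__":
    chassis = {(7, 8): VOLTAIRE_40G_SWITCH}
    print(usable_card_ports(chassis), missing_bay_pairs(chassis))
```

With only bays 7/8 populated, one port per card is usable and bays 9/10 are reported as missing a module.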

Popular configurations

Figure 4 shows how Voltaire 40 Gb InfiniBand Switch Modules route the two 4X InfiniBand ports of the 2-Port 40 Gb InfiniBand Expansion Card (CFFh) installed in each server. Two Voltaire 40 Gb InfiniBand Switch Modules are installed in bays 7/8 and bays 9/10 of the BladeCenter H chassis. All connections between the expansion cards and the switch modules are internal to the chassis, so no cabling is needed.

Figure 4. A 40 Gb solution using 2-Port 40 Gb InfiniBand Expansion Card (CFFh) and Voltaire 40 Gb InfiniBand Switch Modules

Table 5 lists the components that this configuration uses.

Table 5. Components used when connecting 2-Port 40 Gb InfiniBand Expansion Card (CFFh) to two Voltaire 40 Gb InfiniBand Switch Modules

| Diagram reference | Part number/machine type | Description | Quantity |
|---|---|---|---|
| | Varies | BladeCenter HS22 or other supported server | 1 to 14 |
| | 46M6001 | 2-Port 40 Gb InfiniBand Expansion Card (CFFh) | 1 per server |
| | 8852 | BladeCenter H | 1 |
| | 46M6005 | Voltaire 40 Gb InfiniBand Switch Module | 2 |
| | 49Y9980 | 3 m Copper QDR InfiniBand QSFP Cable | Up to 32* |

* The Voltaire 40 Gb InfiniBand Switch Module has 16 external ports. To communicate outside of the chassis, you must connect QSFP cables. You can scale external bandwidth by using from 1 to 16 connections per switch, for up to 32 cables with two switches.
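The quantities in Table 5 follow simple rules: one card per server, one BladeCenter H chassis, two switch modules, and up to 16 external cables per switch. The following Python sketch turns those rules into a hypothetical bill-of-materials helper. It assumes the same number of QSFP cables on each switch and reports the aggregate external signaling rate at 40 Gbps per QDR port (the raw signaling rate, not the 8b/10b-decoded data rate). The names are illustrative, not part of any configurator tool.

```python
from dataclasses import dataclass

# Hypothetical bill-of-materials helper for the configuration in Table 5.
# Assumption: the same number of external QSFP cables is attached to each switch.
# cables_per_switch = 0 means the chassis has no external InfiniBand connectivity.

MAX_SERVERS_PER_CHASSIS = 14
SWITCHES_PER_CHASSIS = 2
EXTERNAL_PORTS_PER_SWITCH = 16
QDR_PORT_SIGNALING_GBPS = 40


@dataclass(frozen=True)
class BillOfMaterials:
    servers: int
    expansion_cards: int  # 46M6001, one per server
    chassis: int          # BladeCenter H (8852)
    switch_modules: int   # 46M6005
    qsfp_cables: int      # 49Y9980, 3 m copper


def build_bom(servers: int, cables_per_switch: int) -> BillOfMaterials:
    """Build the component list for one BladeCenter H chassis."""
    if not 1 <= servers <= MAX_SERVERS_PER_CHASSIS:
        raise ValueError(f"servers must be 1 to {MAX_SERVERS_PER_CHASSIS}")
    if not 0 <= cables_per_switch <= EXTERNAL_PORTS_PER_SWITCH:
        raise ValueError(
            f"cables_per_switch must be 0 to {EXTERNAL_PORTS_PER_SWITCH}")
    return BillOfMaterials(
        servers=servers,
        expansion_cards=servers,
        chassis=1,
        switch_modules=SWITCHES_PER_CHASSIS,
        qsfp_cables=cables_per_switch * SWITCHES_PER_CHASSIS,
    )


def external_signaling_gbps(bom: BillOfMaterials) -> int:
    """Aggregate external signaling rate, one direction, across all QSFP cables."""
    return bom.qsfp_cables * QDR_PORT_SIGNALING_GBPS


if __name__ == "__main__":
    full = build_bom(servers=14, cables_per_switch=16)
    print(full, external_signaling_gbps(full))
```

A fully populated chassis with all external ports cabled uses 14 cards and 32 cables, matching the "Up to 32" quantity in Table 5.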

Operating system support

The 2-Port 40 Gb InfiniBand Expansion Card (CFFh) supports the following operating systems:

- Microsoft Windows Server 2008 R2

- Microsoft Windows Server 2008, Datacenter x64 Edition

- Microsoft Windows Server 2008, Datacenter x86 Edition

- Microsoft Windows Server 2008, Enterprise x64 Edition

- Microsoft Windows Server 2008, Enterprise x86 Edition

- Microsoft Windows Server 2008, Standard x64 Edition

- Microsoft Windows Server 2008, Standard x86 Edition

- Microsoft Windows Server 2008, Web x64 Edition

- Microsoft Windows Server 2008, Web x86 Edition

- Red Hat Enterprise Linux 4 AS for AMD64/EM64T

- Red Hat Enterprise Linux 4 AS for x86

- Red Hat Enterprise Linux 5 Server Edition

- SUSE LINUX Enterprise Server 10 for AMD64/EM64T

- SUSE LINUX Enterprise Server 11 for AMD64/EM64T

Support for operating systems is based on the combination of the expansion card and the blade server in which it is installed. See IBM ServerProven for the latest information about the specific versions and service packs supported: http://ibm.com/servers/eserver/serverproven/compat/us/. Select the blade server, and then select the expansion card to see the supported operating systems.


Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

BladeCenter®

ServerProven®

The following terms are trademarks of other companies:

Intel® and the Intel logo are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

IBM® and ibm.com® are trademarks of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.