Published: 9 Sep 2022

Form Number: LP1644

PDF size: 5 pages, 232 KB

Abstract

Lenovo’s MLPerf Inference v2.1 results demonstrate a diverse portfolio of compute platforms for AI workloads.

Lenovo AI continues to perform

MLPerf™ is a noble endeavor; without it, Artificial Intelligence (AI) performance would be lost in translation between expectation and reality. AI is often misunderstood, incorporating diverse workflows and architectures. Due to its complexity, it can be difficult for organizations to make informed decisions as to how systems should be configured and how best to invest in improving AI performance. MLPerf was born to address this need and Lenovo is an active participant in these efforts.

MLPerf’s mission is to "build fair and useful benchmarks" that provide unbiased evaluations of training and inference performance for hardware, software, and services—all conducted under prescribed conditions. This is not about bragging rights as to who builds the fastest servers; it's about helping our customers make informed decisions about how to best configure the infrastructure they buy from us and showing where to invest in areas they will see the biggest returns.

To be transparent and help our customers make better-informed decisions, we publish results quarterly. This is important because it provides our customers with a better understanding of how the latest versions of technical components perform, and it sometimes involves us testing entirely new types of technology. In this case, our latest round of MLPerf Inference v2.1 involved testing new versions of technologies, as well as new classes of technology.

MLPerf Inference v2.1 highlights

At a high level, here’s what we attempted and discovered in this quarter’s MLPerf testing:

- We won 3 benchmarks, and tied 1, using the same GPUs and infrastructure as the previous round of testing. This indicates that even existing AI infrastructure can perform better over time, presumably through firmware and software updates.

- We won more ResNet benchmarks than any other technology vendor. Although the ResNet family includes some of the earliest MLPerf benchmarks, it is widely perceived as the industry standard, so this is a meaningful accomplishment.

- We made our first Qualcomm submission and will likely be carrying that torch forward.

- We made our first submission into the power category, and it’s encouraging to see these benchmarks moving beyond sheer performance to incorporate capabilities organizations truly care about, like power efficiency.

Advancements in performance

This was our second run with some of the same servers, which indicates that the performance improvements we are seeing come from software and/or driver updates and are therefore obtainable by organizations that have already deployed these systems. Our winning results, by server and accelerator configuration:

- ThinkSystem SR670 V2 with 8x NVIDIA A100-PCIe-80GB – ResNet Offline 318,162 images/s

- ThinkSystem SR670 V2 with 8x NVIDIA A100-PCIe-80GB – RNNT Offline 107,881 samples/s

- ThinkSystem SR670 V2 with 4x NVIDIA A100-SXM-80GB – ResNet Server 150,027 images/s (tie with Dell)

- ThinkSystem SR670 V2 with 4x NVIDIA A100-SXM-80GB – ResNet Offline 174,180 images/s
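The figures above are system totals for configurations with different accelerator counts (8x versus 4x). As a rough aid to reading them side by side, the sketch below divides each total by its GPU count. This per-GPU normalization is an illustrative assumption rather than an MLPerf metric, it ignores scaling effects, and the names `RESULTS` and `per_accelerator` are hypothetical.

```python
# Throughput figures copied from the list above.
# Tuple layout: (accelerator, gpu_count, benchmark, scenario, throughput, unit)
RESULTS = [
    ("NVIDIA A100-PCIe-80GB", 8, "ResNet", "Offline", 318_162, "images/s"),
    ("NVIDIA A100-PCIe-80GB", 8, "RNNT", "Offline", 107_881, "samples/s"),
    ("NVIDIA A100-SXM-80GB", 4, "ResNet", "Server", 150_027, "images/s"),
    ("NVIDIA A100-SXM-80GB", 4, "ResNet", "Offline", 174_180, "images/s"),
]


def per_accelerator(throughput, gpu_count):
    """Naive normalization (assumption): system throughput / accelerator count."""
    return throughput / gpu_count


for accel, count, bench, scenario, tput, unit in RESULTS:
    share = per_accelerator(tput, count)
    print(f"{count}x {accel} {bench} {scenario}: {share:,.2f} {unit} per GPU")
```

Treat the per-GPU values only as a reading aid: the Offline and Server scenarios measure different things, so they should not be compared directly with each other.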

Fundamentally, Lenovo is making continual progress with supporting more models. This momentum includes extending and enhancing our AI infrastructure portfolio so that customers can make more informed decisions from faster insights. Our goal through MLPerf is to bring clarity to infrastructure decisions so customers can focus on the success of their AI deployment overall.

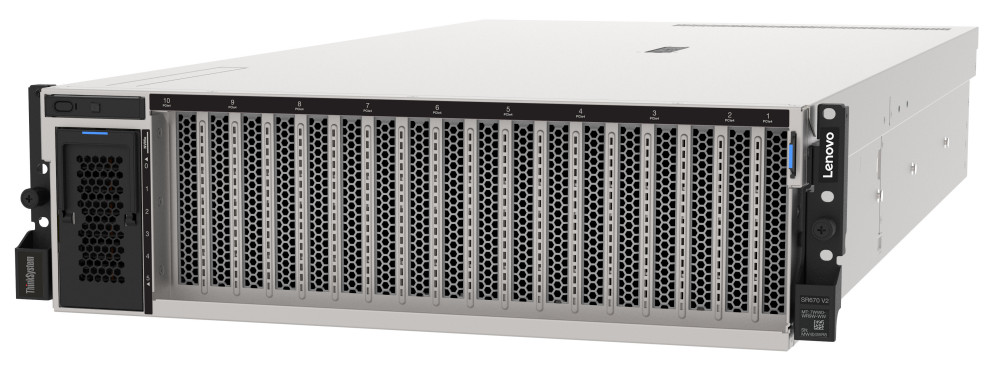

Figure 1. Lenovo ThinkSystem SR670 V2 configured to support eight NVIDIA A100 GPUs

Lenovo collaborates with NVIDIA

Lenovo demonstrated AI performance across various infrastructure configurations, including NVIDIA A16 GPUs running on Lenovo ThinkSystem platforms. We showcased the efficiency and performance of our air-cooled systems, providing both PCIe and HGX deployment options in a standard data center platform that enterprises of all sizes can quickly deploy.

Lenovo collaborates extensively with NVIDIA in the AI realm. Through our Lenovo AI Innovation Centers, we’re working with NVIDIA to ensure the success of our mutual customers’ AI initiatives. This gives customers access to Lenovo and NVIDIA AI experts for project consulting, the proper infrastructure to run a proof of concept, and proof of ROI before deployment. As the AI world continues to evolve, collaborations make coming to market an easier and more effective process.

For more information

For more information, see the following resources:

Explore Lenovo AI solutions:

https://www.lenovo.com/us/en/servers-storage/solutions/analytics-ai/

Engage the Lenovo AI Center of Excellence:

https://lenovoaicodelab.atlassian.net/servicedesk/customer/portal/3

MLCommons®, the open engineering consortium and leading force behind MLPerf, has now released new results for MLPerf benchmark suites:

- Benchmark results: https://mlcommons.org/en/inference-datacenter-21/

- Latest news about MLCommons: https://mlcommons.org/en/news/mlperf-inference-v21/

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ThinkSystem®

Other company, product, or service names may be trademarks or service marks of others.
