Author

Published

14 Apr 2023Form Number

LP1716PDF size

5 pages, 428 KBAbstract

The future is an ever-changing landscape that we are witnessing in real time, such as the development of truly autonomous vehicles on the roadways over the past 10 years. These vehicles are run by computers utilizing Machine Learning (ML) which requires data analysis at compute speeds, but one drawback for these vehicles are environmental changes the vehicle may experience and the effect on the ML.

To address and overcome these challenges of Continual Learning (CL), Lenovo AI partnered with Intel and Barcelona Supercomputing Centre to research and develop ways to overcome machine learning deficiencies and make autonomous vehicles not only more intelligent, but also safer.

What does data have to do with vehicles?

Every moment of our lives we are internally collecting and processing data points of the world around us, but this process has exceeded being unique to only the biological existence and grown to include the silicon version simultaneously. Like the biological brain in its developmental years, AI is learning how to process enormous amounts of visual data. One of the most fascinating and popular areas of this is within autonomous vehicles.

According to Gartner (gartner.com/en/information-technology/glossary/autonomous-vehicles):

“An autonomous vehicle is one that can drive itself from a starting point to a predetermined destination in “autopilot” mode using various in-vehicle technologies and sensors, including adaptive cruise control, active steering (steer by wire), anti-lock braking systems (brake by wire), GPS navigation technology, lasers and radar.”

The technological feat is powered by the consumption of data at complex and varying degrees, while evolving with changes in human nature. Remember watching shows and movies like Batman, Knight Rider, and Total Recall, sitting with fasciation of the idea that a vehicle could drive you around? That awe-inspiring notion of what the future could hold, well that future is truly here now.

Benchmarks = Standards = Measurements of Growth

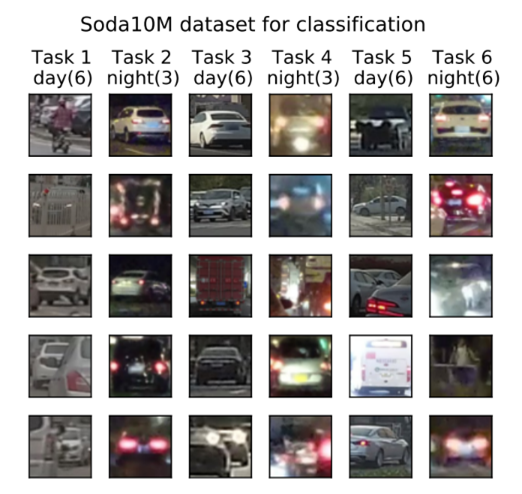

Working together with Intel® and the Barcelona Supercomputing Centre (BSC), Lenovo researched the various ways in which autonomous driving data, known as SODA 10M, are consumed, and processed. SODA 10M is “a large-scale object detection benchmark for standardizing the evaluation of different self-supervised and semi-supervised approaches by learning from raw data,” as shown in the image below. (from paperswithcode.com)

Machine Learning Continuously from New Data

These processes are powered by Machine Learning (ML), which assumes that all data consumed is the same within the process, meaning that data is assumed to be independent and identically distributed (iid), but that is not really the case. One example of this is when data is assumed to be iid but is correlated in different contexts. This is called a domain shift, which is extremely common in ML. To provide a bit more context around how to understand a domain shift, consider the drive from New York City into rural Pennsylvania. Our brains notice and interpret the context as the scenario changes around us. How we drive and interact with that new environment is seamless and fluid, allowing us to continue onward without needing to reset or reprogram ourselves. Even though MLs have the ability to shift, which is all too common in autonomous driving data, how can it do so to continually advance its capabilities?

Continual Learning (CL) is the study to keep an ML system learning post-deployment, e.g., within an autonomous vehicle. Traditionally, this is done by retaining all learned data and then retraining the system frequently. However, due to various guard rails, this can pose problems around data privacy, storage, or compute restrictions. Knowing these limitations provide the problem known as “catastrophic forgetting” in which a neural network is unable to make a correlation between what it previously learned and the new data it was provided. Again, we can bring this back to our human experience.

Consider an activity that you enjoy, whether it be sports, reading, language, art, music, etc. In the moment, we focus on learning the new skills within that task. Time progresses and we go on to learn other skills, but one day come back to that original skill and simply forget the old skills or techniques after learning new ones. This is catastrophic failure—forgetting old rules after learning new rules—is something that machine learning is susceptible to, just like a human. Through the creation of algorithms, these challenges have been addressed, but often assume rigid task boundaries and known new tasks.

Putting the right team together is where it all begins.

Gusseppe Bravo-Rocca, MS, Peini Liu, MS, Jordi Guitart, PhD, Ajay Dholakia PhD, and David Ellison PhD wrote a paper titled, “Efficient Domain-Incremental Learning through Task-ID Inference using Transformer Nearest-Centroid Embeddings,” hosted in Lenovo’s Center of Discovery and Excellence. The research was run on the Lenovo ThinkSystem SR650 V2 server, while the testbed used in the experiments is as follows:

- Server: Lenovo ThinkSystem SR650 V2

- Processors: 2x Intel 3rd Gen Xeon Platinum 8360Y CPU @ 2.40GHz, 256 GB RAM

- Operating System: Ubuntu 22.04 (64 bit)

- Software:

- Docker image intel/oneapi-aikit:develubuntu22.04 1 (Intel AI Analytics Toolkit)

- avalanche-lib 0.3.1 2 (CL library)

- torch 1.12.0 and torchvision 0.13.0 3 (DL library)

- intel-extension-for-pytorch 1.12.100+cpu 4 (Intel acceleration for Pytorch)

- scikit-learn 1.2.2 5 (ML library)

- scikit-learn-intelex 2023.0.1 6 (Intel acceleration for Sklearn)

The research will show the steps in which the participants conducted group embeddings, trained classifiers, and created an algorithm that can decide if the program should or should not learn a new class.

A task detector was developed that can operate unsupervised and did not have to be informed that any data is changing or carrying a new label. Explaining such a task detector, we can go back to our example of driving from NYC to a rural area, which are two different classes. Much like our brains, this task detector allows the ML to operate without catastrophic failure and sense change while adapting to the new without forgetting the old. Most importantly with this development, the ML within the vehicle did not need to update, but rather was able to learn and run within the context switch it was experiencing first-hand.

Autonomous vehicles are progressing to advance and become a regular occurrence in day-to-day life for society. Lenovo, alongside our partners and fellow scientists will continue to help advance this technology and continue to drive smarter technology for all. This being the first stage of the project we are very excited to share our findings and look forward to bringing you along for the journey.

For more information

For more information, see the following resources:

- Explore Lenovo AI solutions:

https://www.lenovo.com/us/en/servers-storage/solutions/analytics-ai/ - Engage the Lenovo AI Center of Excellence:

https://lenovoaicodelab.atlassian.net/servicedesk/customer/portal/3

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ThinkSystem®

The following terms are trademarks of other companies:

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Other company, product, or service names may be trademarks or service marks of others.

Configure and Buy

Full Change History

Course Detail

Employees Only Content

The content in this document with a is only visible to employees who are logged in. Logon using your Lenovo ITcode and password via Lenovo single-signon (SSO).

The author of the document has determined that this content is classified as Lenovo Internal and should not be normally be made available to people who are not employees or contractors. This includes partners, customers, and competitors. The reasons may vary and you should reach out to the authors of the document for clarification, if needed. Be cautious about sharing this content with others as it may contain sensitive information.

Any visitor to the Lenovo Press web site who is not logged on will not be able to see this employee-only content. This content is excluded from search engine indexes and will not appear in any search results.

For all users, including logged-in employees, this employee-only content does not appear in the PDF version of this document.

This functionality is cookie based. The web site will normally remember your login state between browser sessions, however, if you clear cookies at the end of a session or work in an Incognito/Private browser window, then you will need to log in each time.

If you have any questions about this feature of the Lenovo Press web, please email David Watts at dwatts@lenovo.com.