Author

Published

18 Jul 2023

Form Number

LP1772

PDF size

9 pages, 392 KB

Abstract

The ThinkSystem Qualcomm Cloud AI 100 accelerator is designed for AI inference acceleration in the data center and at the edge. It supports more than 150 neural networks across categories including image classification, object detection, semantic segmentation, and natural language processing.

This product guide provides essential presales information to understand the Qualcomm Cloud AI 100 and its key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the Qualcomm Cloud AI 100 and consider its use in IT solutions.

Introduction

The Qualcomm Cloud AI 100 is designed for AI inference acceleration and addresses the unique requirements of the cloud, including power efficiency, scale, process node advancements, and signal processing. The AI 100 enables data centers to run inference on the edge cloud faster and more efficiently. It is designed to be a leading solution for data centers that increasingly rely on infrastructure at the edge cloud.

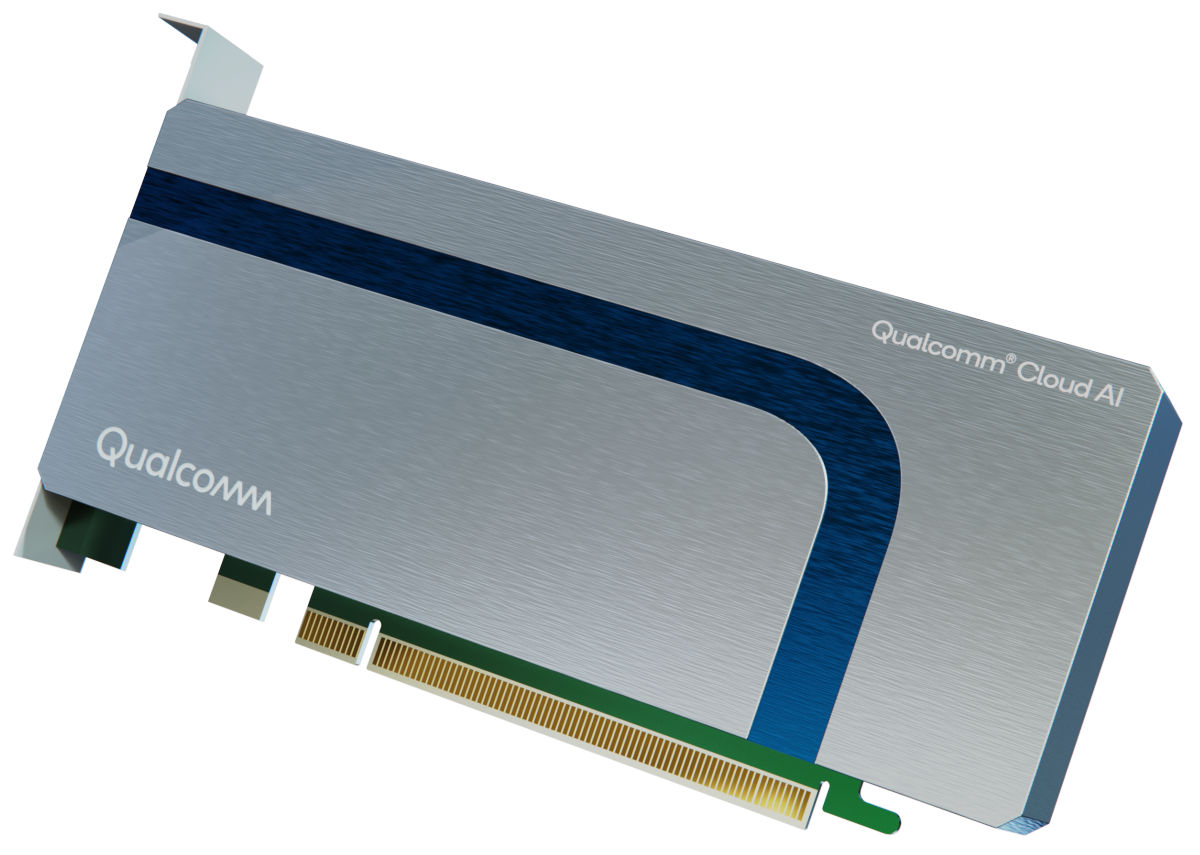

The following figure shows the Qualcomm Cloud AI 100.

Did you know?

The ThinkSystem Qualcomm Cloud AI 100 accelerator is offered on ThinkEdge servers to enable customers to deploy AI workloads at the edge of their network. The AI 100 supports over 150 neural networks across multiple categories, including image classification, object detection, semantic segmentation, and natural language processing.

Part number information

The following table shows the part number for the accelerator.

| Part number | Feature code | Description | Vendor part number |
|---|---|---|---|
| 4X67A84009 | BS49 | ThinkSystem Qualcomm Cloud AI 100 | QAIC-100P-0-MPA001-MT-01-0-BE |

The option part number includes the following:

- One Qualcomm Cloud AI 100 (PCIe HHHL-Standard)

- Full height (3U) and Low Profile (2U) adapter brackets

- Documentation

Features

The Qualcomm Cloud AI 100 accelerator supports more than 150 deep learning networks, with strong emphasis on computer vision use cases and natural language processing.

Target applications include:

- Image classification

- Object detection and monitoring

- Semantic segmentation

- Face detection

- Point cloud

- Pose estimation

- Natural language processing (NLP)

- Recommendation systems

The Qualcomm Cloud AI 100 accelerator and accompanying software development kits (SDKs) are designed to deliver the power efficiency and performance needed to meet the growing inference needs of cloud data centers, edge deployments, and other machine learning (ML) applications. The Cloud AI 100 card is powered by the AIC100 system-on-chip (SoC), which is designed for ML inference workloads.

The Qualcomm Apps and Platform SDKs provide the ability to compile, optimize, and run deep learning models from popular frameworks including:

- PyTorch

- TensorFlow

- ONNX

- Caffe

- Caffe2

Technical specifications

The Qualcomm Cloud AI 100 has the following specifications:

- Low profile form factor

- PCIe 4.0 x8 host interface

- Supported data types: FP32, FP16, INT16, INT8

- Security features include Hardware Root of Trust, Secure boot, Firmware rollback protection
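To put the host interface and data types in the list above in context, the following is a back-of-envelope sketch, not a measurement and not part of the Qualcomm SDKs. It computes the theoretical one-direction bandwidth of a PCIe 4.0 x8 link (16 GT/s per lane with 128b/130b encoding) and estimates how long it would take to move a model's weights across that link at each supported data type. The model size of 25 million parameters and all function names are assumptions chosen for illustration. Real throughput is lower because of protocol overhead.

```python
# Back-of-envelope estimate only. It uses published PCIe 4.0 link parameters
# and ignores packet and protocol overhead. Nothing here calls the Qualcomm SDK.

PCIE4_TRANSFERS_PER_S = 16e9   # PCIe 4.0 raw rate per lane (16 GT/s)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding
LANES = 8                      # x8 host interface, from the specification list

# Storage size of one element for each data type in the specification list.
BYTES_PER_ELEMENT = {"FP32": 4, "FP16": 2, "INT16": 2, "INT8": 1}


def link_bandwidth_bytes_per_s(lanes: int = LANES) -> float:
    """Theoretical one-direction bandwidth of a PCIe 4.0 link, in bytes/s."""
    bits_per_s = PCIE4_TRANSFERS_PER_S * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8


def weight_footprint_bytes(num_params: int, dtype: str) -> int:
    """Bytes needed to store num_params weights in the given data type."""
    return num_params * BYTES_PER_ELEMENT[dtype]


def transfer_time_ms(num_params: int, dtype: str, lanes: int = LANES) -> float:
    """Idealized time to move the weights across the link, in milliseconds."""
    seconds = weight_footprint_bytes(num_params, dtype) / link_bandwidth_bytes_per_s(lanes)
    return seconds * 1e3


if __name__ == "__main__":
    params = 25_000_000  # hypothetical model size, for illustration only
    print(f"Link bandwidth: {link_bandwidth_bytes_per_s() / 1e9:.2f} GB/s per direction")
    for dtype in BYTES_PER_ELEMENT:
        size_mb = weight_footprint_bytes(params, dtype) / 1e6
        print(f"{dtype}: {size_mb:.0f} MB, {transfer_time_ms(params, dtype):.2f} ms")
```

The estimate shows why lower-precision data types matter for inference: an INT8 model occupies a quarter of the space of the same model in FP32, so it takes a quarter of the idealized transfer time.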

The following table lists the processing specifications and performance of the Qualcomm Cloud AI 100.

Server support

The following tables list the ThinkSystem servers that are compatible.

Operating system support

The following table lists the supported operating systems.

Tip: These tables are automatically generated based on data from Lenovo ServerProven.

Regulatory approvals

The Qualcomm Cloud AI 100 has the following regulatory approvals:

- International: IEC 62368-1, EN 62368-1 2nd and 3rd Ed.

- United States of America: FCC

- Canada: ICES-003

- EU/UK: EN 55032, EN 55024, EN 55035, EN 61000-3-2, EN 61000-3-3, EN 62368-1 2nd and 3rd Ed.

- Taiwan: BSMI

- Korea: KN32 / KN35

- Japan: VCCI

- China: CNS 15663, RoHS

- Australia / New Zealand: AS/NZS CISPR 32

- Logos: cUL, FCC, ICES, RCM, VCCI

Operating environment

The Qualcomm Cloud AI 100 has the following operating characteristics:

- Ambient temperature:

  - Operational: 0°C to 50°C (-5°C to 55°C for short term*)

  - Storage: -40°C to 85°C

- Relative humidity:

  - Operational: 5-90%

  - Storage: 5-93%
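The operational limits above lend themselves to a simple monitoring check. The following is a minimal sketch, assuming temperature and humidity readings are already available from some sensor source. The names `Range` and `classify_environment` and the three status strings are hypothetical. The guide does not define how long "short term" lasts, so the sketch only flags that condition and does not enforce a time limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Closed interval [low, high]."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Operational limits taken from the operating environment list.
OPERATING_TEMP_C = Range(0, 50)
SHORT_TERM_TEMP_C = Range(-5, 55)
OPERATING_HUMIDITY_PCT = Range(5, 90)


def classify_environment(temp_c: float, humidity_pct: float) -> str:
    """Classify a reading as 'ok', 'short_term_only', or 'out_of_range'.

    The status names are illustrative and not defined by the product guide.
    """
    if not OPERATING_HUMIDITY_PCT.contains(humidity_pct):
        return "out_of_range"
    if OPERATING_TEMP_C.contains(temp_c):
        return "ok"
    if SHORT_TERM_TEMP_C.contains(temp_c):
        return "short_term_only"
    return "out_of_range"


if __name__ == "__main__":
    for reading in [(25, 40), (52, 40), (60, 40), (25, 95)]:
        print(reading, classify_environment(*reading))
```

A reading classified as `short_term_only` falls outside the normal 0°C to 50°C band but inside the -5°C to 55°C short-term band, so a monitoring script might raise a warning for it and reserve alerts for `out_of_range`.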

Warranty

One year limited warranty. When installed in a Lenovo server, the adapter assumes the server’s base warranty and any warranty upgrades.

Related publications

For more information, refer to these documents:

- ThinkSystem and ThinkAgile GPU Summary:
  https://lenovopress.lenovo.com/lp0768-thinksystem-thinkagile-gpu-summary

- ServerProven compatibility:
  https://serverproven.lenovo.com/

- Qualcomm Cloud AI 100 product page:
  https://www.qualcomm.com/products/technology/processors/cloud-artificial-intelligence/cloud-ai-100

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ServerProven®

ThinkAgile®

ThinkEdge®

ThinkSystem®

The following terms are trademarks of other companies:

AMD is a trademark of Advanced Micro Devices, Inc.

Intel® and the Intel logo are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Other company, product, or service names may be trademarks or service marks of others.
