Author

Updated

15 Sep 2024

Form Number

LP1774

PDF size

20 pages, 1.5 MB

Abstract

The NVIDIA A30 offers versatile compute acceleration for mainstream enterprise servers. With NVIDIA Ampere architecture Tensor Cores and Multi-Instance GPU (MIG), it delivers speedups securely across diverse workloads, including AI inference at scale and HPC applications. The A30 combines fast memory bandwidth and low-power consumption in a PCIe form factor to enable an elastic data center and delivers maximum value for enterprises.

This product guide provides essential presales information to understand the NVIDIA A30 GPU and its key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the NVIDIA A30 GPU and consider its use in IT solutions.

Change History

Changes in the September 15, 2023 update:

- Added the Controlled status column to Table 1 - Part number information section

Introduction

The NVIDIA A30 offers versatile compute acceleration for mainstream enterprise servers. With NVIDIA Ampere architecture Tensor Cores and Multi-Instance GPU (MIG), it delivers speedups securely across diverse workloads, including AI inference at scale and HPC applications. The A30 combines fast memory bandwidth and low-power consumption in a PCIe form factor to enable an elastic data center and delivers maximum value for enterprises.

The third-generation Tensor Core technology supports a broad range of math precisions providing a unified workload accelerator for data analytics, AI training, AI inference, and HPC. Accelerating both scale-up and scale-out workloads on one platform enables elastic data centers that can dynamically adjust to shifting application workload demands. This simultaneously boosts throughput and drives down the cost of data centers.

Did you know?

The NVIDIA A30 Tensor Core GPU delivers a versatile platform for mainstream enterprise workloads, like AI inference, training, and HPC. With TF32 and FP64 Tensor Core support, as well as an end-to-end software and hardware solution stack, A30 ensures that mainstream AI training and HPC applications can be rapidly addressed.
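As a rough illustration of what TF32 support means numerically, the sketch below emulates TF32 precision in plain Python. It assumes the publicly documented TF32 layout (1 sign bit, the same 8 exponent bits as FP32, and 10 mantissa bits), which this guide does not spell out. It also truncates instead of rounding, which is a simplification and may differ from what the hardware does. The function name `to_tf32` is hypothetical.

```python
import struct

def to_tf32(x: float) -> float:
    """Emulate TF32 precision: keep the top 10 of FP32's 23 mantissa bits.

    Assumption: simple truncation; real Tensor Core rounding may differ.
    """
    # Reinterpret the value as a 32-bit IEEE 754 pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Clear the 13 low mantissa bits; sign and exponent are untouched,
    # so the representable range stays the same as FP32.
    bits &= 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

print(to_tf32(1.0 + 2**-10))  # within TF32 resolution: survives unchanged
print(to_tf32(1.0 + 2**-11))  # below TF32 resolution: truncated to 1.0
```

The point of the sketch is that TF32 keeps the FP32 exponent range while reducing mantissa precision, which is consistent with the claim that existing FP32 code can use it without changes.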

Part number information

The following table shows the part numbers for the NVIDIA A30 GPU.

The NVIDIA A30 GPU is Controlled, which means the GPU is not offered in certain markets, as determined by the US Government.

The PCIe option part numbers include the following:

- One NVIDIA A30 GPU with full-height (3U) adapter bracket attached

- Documentation

GPUs without a CEC chip: The NVIDIA A30 GPU is offered without a CEC chip (look for "w/o CEC" in the name). The CEC is a secondary Hardware Root of Trust (RoT) module that provides an additional layer of security, which can be used by customers who have high regulatory requirements or high security standards. NVIDIA uses a multi-layered security model, so the protection offered by the primary Root of Trust embedded in the GPU is expected to be sufficient for most customers. GPUs without the CEC chip still offer Secure Boot, Secure Firmware Update, Firmware Rollback Protection, and In-Band Firmware Update Disable. However, without the CEC chip, the GPU does not support Key Revocation or Firmware Attestation. CEC and non-CEC GPUs of the same type can be mixed in field upgrades.
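The feature split described above can be summarized as a small lookup. This is only an illustrative sketch: the names `BASE_FEATURES`, `CEC_ONLY_FEATURES`, and `security_features` are hypothetical, and it assumes that GPUs with a CEC chip offer the base features as well, which the text implies but does not state outright.

```python
# Features the guide lists as available without the CEC chip.
BASE_FEATURES = {
    "Secure Boot",
    "Secure Firmware Update",
    "Firmware Rollback Protection",
    "In-Band Firmware Update Disable",
}

# Features the guide lists as unavailable without the CEC chip.
CEC_ONLY_FEATURES = {"Key Revocation", "Firmware Attestation"}

def security_features(has_cec: bool) -> set:
    """Return the security features expected for a GPU with or without CEC."""
    if has_cec:
        # Assumption: a CEC-equipped GPU offers the base set plus the extras.
        return BASE_FEATURES | CEC_ONLY_FEATURES
    return set(BASE_FEATURES)

print(sorted(security_features(has_cec=False)))
```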

Features

The ThinkSystem NVIDIA A30 24GB PCIe Gen4 Passive GPU offers the following features:

- Third-Generation NVIDIA Tensor Core: Performance and Versatility

Compared to the NVIDIA T4 Tensor Core GPU, the third-generation Tensor Cores on NVIDIA A30 deliver over 20X more AI training throughput using TF32 without any code changes and over 5X more inference performance. In addition, A30 adds BFLOAT16 to support a full range of AI precisions.

- TF32: Higher Performance for AI Training, Zero Code Changes

A30 supports a new precision, TF32, which works just like FP32 while providing 11X higher floating point operations per second (FLOPS) over the prior-generation V100 for AI without requiring any code changes. NVIDIA’s automatic mixed precision (AMP) feature enables a further 2X boost to performance with just one additional line of code using FP16 precision. A30 Tensor Cores also include support for BFLOAT16, INT8, and INT4 precision, making A30 an incredibly versatile accelerator for both AI training and inference.

- Double-Precision Tensor Cores: The Biggest Milestone Since FP64 for HPC

A30 brings the power of Tensor Cores to HPC, providing the biggest milestone since the introduction of double-precision GPU computing for HPC. The third generation of Tensor Cores in A30 enables matrix operations in full, IEEE-compliant, FP64 precision. Through enhancements in NVIDIA CUDA-X™ math libraries, a range of HPC applications that need double-precision math can now see boosts of up to 30% in performance and efficiency compared to prior generations of GPUs.

- Multi-Instance GPU: Multiple Accelerators in One GPU

With Multi-Instance GPU (MIG), each A30 can be partitioned into as many as four GPU instances, fully isolated at the hardware level with their own high-bandwidth memory, cache, and compute cores. MIG works on NVIDIA AI Enterprise with VMware vSphere and NVIDIA Virtual Compute Server (vCS) with hypervisors such as Red Hat RHEL/RHV.

- PCIe Gen 4: Double the Bandwidth and NVLink Between GPU Pairs

A30 supports PCIe Gen 4, which doubles the bandwidth of PCIe Gen 3 from 15.75 GB/sec to 31.5 GB/sec, improving data transfer speeds from CPU memory for data-intensive tasks and datasets. For additional communication bandwidth, A30 supports NVIDIA NVLink between pairs of GPUs, which provides data transfer rates up to 200 GB/sec.

- 24 GB of GPU Memory

A30 features 24 GB of HBM2 memory with 933 GB/s of memory bandwidth, delivering 1.5X more memory and 3X more bandwidth than T4 to accelerate AI, data science, engineering simulation, and other GPU memory-intensive workloads.

- Structural Sparsity: 2X Higher Performance for AI

Modern AI networks are big, having millions and in some cases billions of parameters. Not all of these parameters are needed for accurate predictions, and some can be converted to zeros to make the models “sparse” without compromising accuracy. Tensor Cores in A30 can provide up to 2X higher performance for sparse models. While the sparsity feature more readily benefits AI inference, it can also improve the performance of model training.
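To illustrate the idea of converting some parameters to zeros, the sketch below prunes a flat list of weights to a 2-out-of-4 pattern. It assumes the 2:4 fine-grained structured sparsity scheme that NVIDIA documents for the Ampere architecture (at most two non-zero values in every group of four); this guide does not name the pattern. The function `prune_2_of_4` is hypothetical, and real workflows would use NVIDIA's tooling and typically retrain after pruning.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest magnitudes; the sort is stable,
        # so ties keep the earlier index.
        keep = sorted(range(4), key=lambda i: -abs(group[i]))[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned

print(prune_2_of_4([0.1, -0.9, 0.5, 0.05]))
```

Because exactly half of each group is zero, hardware that understands the pattern can skip those multiplications, which is where the "up to 2X" figure comes from.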

Technical specifications

The following table lists the NVIDIA A30 GPU specifications.

* With structural sparsity enabled
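The PCIe bandwidth figures quoted in the Features section (15.75 GB/sec for Gen 3 and 31.5 GB/sec for Gen 4) can be reproduced from the link parameters. The sketch below assumes an x16 link, per-lane rates of 8 GT/s (Gen 3) and 16 GT/s (Gen 4), and 128b/130b encoding; these values come from the PCIe specifications, not from this guide, and the function name is hypothetical.

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int = 16) -> float:
    """Usable one-direction bandwidth in GB/s for a 128b/130b-encoded link."""
    encoding_efficiency = 128 / 130  # 128 payload bits per 130 bits sent
    return gt_per_s * lanes * encoding_efficiency / 8  # 8 bits per byte

gen3 = pcie_bandwidth_gb_s(8.0)   # assumed Gen 3 rate per lane
gen4 = pcie_bandwidth_gb_s(16.0)  # assumed Gen 4 rate per lane
print(f"Gen 3 x16: {gen3:.2f} GB/s, Gen 4 x16: {gen4:.2f} GB/s")
```

The doubling follows directly from the doubled per-lane transfer rate, since lane count and encoding overhead are the same in both generations.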

Server support

The following tables list the ThinkSystem servers that are compatible.

- Double-wide GPUs are only supported in the SE450 with the 360mm chassis; not supported in the 300mm chassis

Operating system support

The following table lists the supported operating systems:

Tip: These tables are automatically generated based on data from Lenovo ServerProven.

1 ISG does not sell or preload this OS; it is listed for compatibility and certification only.

2 The OS is not supported with EPYC 7003 processors.

3 Ubuntu 22.04.3 LTS/Ubuntu 22.04.4 LTS

NVIDIA GPU software

This section lists the NVIDIA software that is available from Lenovo.

NVIDIA vGPU Software (vApps, vPC, RTX vWS)

Lenovo offers the following virtualization software for NVIDIA GPUs:

- Virtual Applications (vApps)

For organizations deploying Citrix XenApp, VMware Horizon RDSH or other RDSH solutions. Designed to deliver PC Windows applications at full performance. NVIDIA Virtual Applications allows users to access any Windows application at full performance on any device, anywhere. This edition is suited for users who would like to virtualize applications using XenApp or other RDSH solutions. Windows Server hosted RDSH desktops are also supported by vApps.

- Virtual PC (vPC)

This product is ideal for users who want a virtual desktop but need a great user experience with PC Windows® applications, browsers, and high-definition video. NVIDIA Virtual PC delivers a native experience to users in a virtual environment, allowing them to run all their PC applications at full performance.

- NVIDIA RTX Virtual Workstation (RTX vWS)

NVIDIA RTX vWS is the only virtual workstation that supports NVIDIA RTX technology, bringing advanced features like ray tracing, AI-denoising, and Deep Learning Super Sampling (DLSS) to a virtual environment. Supporting the latest generation of NVIDIA GPUs unlocks the best performance possible, so designers and engineers can create their best work faster. IT can virtualize any application from the data center with an experience that is indistinguishable from a physical workstation — enabling workstation performance from any device.

The following license types are offered:

- Perpetual license

A non-expiring, permanent software license that can be used on a perpetual basis without the need to renew. For each perpetual license, customers are also required to purchase a 5-year Support, Upgrade and Maintenance (SUMS) contract. Without this contract, the perpetual license cannot be ordered.

- Annual subscription

A software license that is active for a fixed period as defined by the terms of the subscription license, typically yearly. The subscription includes Support, Upgrade and Maintenance (SUMS) for the duration of the license term.

- Concurrent User (CCU)

A method of counting licenses based on active user VMs. If the VM is active and the NVIDIA vGPU software is running, then this counts as one CCU. A vGPU CCU is independent of the connection to the VM.
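The CCU counting rule above can be sketched as follows. The `VM` record, its field names, and `count_ccu` are hypothetical and exist only for illustration; the rule encoded is the one stated in the text: a VM counts when it is active and the vGPU software is running, regardless of whether a user is connected.

```python
from dataclasses import dataclass

@dataclass
class VM:
    name: str
    active: bool
    vgpu_running: bool
    user_connected: bool  # kept only to show that it does not affect the count

def count_ccu(vms):
    """Count concurrent users: active VMs with the vGPU software running."""
    return sum(1 for vm in vms if vm.active and vm.vgpu_running)

fleet = [
    VM("vm-a", active=True, vgpu_running=True, user_connected=True),
    VM("vm-b", active=True, vgpu_running=True, user_connected=False),
    VM("vm-c", active=True, vgpu_running=False, user_connected=True),
    VM("vm-d", active=False, vgpu_running=False, user_connected=False),
]
print(count_ccu(fleet))
```

In this example, `vm-b` still consumes a license even though nobody is connected to it, while `vm-c` does not because the vGPU software is not running.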

The following table lists the ordering part numbers and feature codes.

NVIDIA Omniverse Software (OVE)

NVIDIA Omniverse™ Enterprise is an end-to-end collaboration and simulation platform that fundamentally transforms complex design workflows, creating a more harmonious environment for creative teams.

NVIDIA and Lenovo offer a robust, scalable solution for deploying Omniverse Enterprise, accommodating a wide range of professional needs. This document details the critical components, deployment options, and support available, ensuring an efficient and effective Omniverse experience.

Deployment options cater to varying team sizes and workloads. Using Lenovo NVIDIA-Certified Systems™ and Lenovo OVX nodes, which are designed to manage scale and complexity, ensures optimal performance for Omniverse tasks.

Deployment options include:

- Workstations: NVIDIA-Certified Workstations with RTX 6000 Ada GPUs for desktop environments.

- Data Center Solutions: Deployment with Lenovo OVX nodes or NVIDIA-Certified Servers equipped with A40, L40, or L40S GPUs for centralized, high-capacity needs.

NVIDIA Omniverse Enterprise includes the following components and features:

- Platform Components: Kit, Connect, Nucleus, Simulation, RTX Renderer.

- Foundation Applications: USD Composer, USD Presenter.

- Omniverse Extensions: Connect Sample & SDK.

- Integrated Development Environment (IDE)

- Nucleus Configuration: Workstation, Enterprise Nucleus Server (supports up to 8 editors per scene); Self-Service Public Cloud Hosting using Containers.

- Omniverse Farm: Supports batch workloads up to 8 GPUs.

- Enterprise Services: Authentication (SSO/SSL), Navigator Microservice, Large File Transfer, User Accounts SAML/Account Directory.

- User Interface: Workstation & IT Managed Launcher.

- Support: NVIDIA Enterprise Support.

- Deployment Scenarios: Desktop to Data Center: Workstation deployment for building and designing, with options for physical or virtual desktops. For batch tasks, rendering, and synthetic data generation (SDG) workloads that require headless compute, Lenovo OVX nodes are recommended.

The following part numbers are for a subscription license, which is active for a fixed period as noted in the description. The license is for a named user, which means it is assigned to named authorized users who may not reassign or share it with any other person.

NVIDIA AI Enterprise Software

Lenovo offers the NVIDIA AI Enterprise (NVAIE) cloud-native enterprise software. NVIDIA AI Enterprise is an end-to-end, cloud-native suite of AI and data analytics software, optimized, certified, and supported by NVIDIA to run on VMware vSphere and bare-metal with NVIDIA-Certified Systems™. It includes key enabling technologies from NVIDIA for rapid deployment, management, and scaling of AI workloads in the modern hybrid cloud.

NVIDIA AI Enterprise is licensed on a per-GPU basis. NVIDIA AI Enterprise products can be purchased as either a perpetual license with support services, or as an annual or multi-year subscription.

- The perpetual license provides the right to use the NVIDIA AI Enterprise software indefinitely, with no expiration. NVIDIA AI Enterprise with perpetual licenses must be purchased in conjunction with one-year, three-year, or five-year support services. A one-year support service is also available for renewals.

- The subscription offerings are an affordable option that gives IT departments more flexibility in managing license volumes. NVIDIA AI Enterprise software products with a subscription include support services for the duration of the software’s subscription license.

The features of NVIDIA AI Enterprise Software are listed in the following table.

Note: Maximum 10 concurrent VMs per product license

The following table lists the ordering part numbers and feature codes.

Find more information in the NVIDIA AI Enterprise Sizing Guide.

NVIDIA HPC Compiler Software

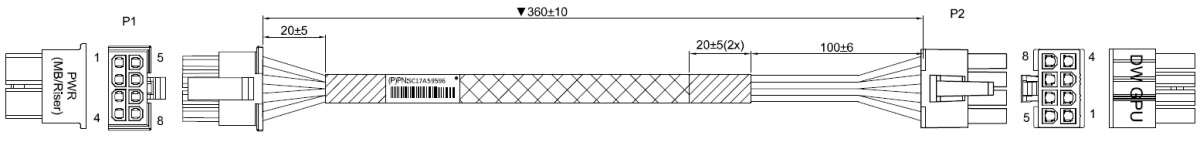

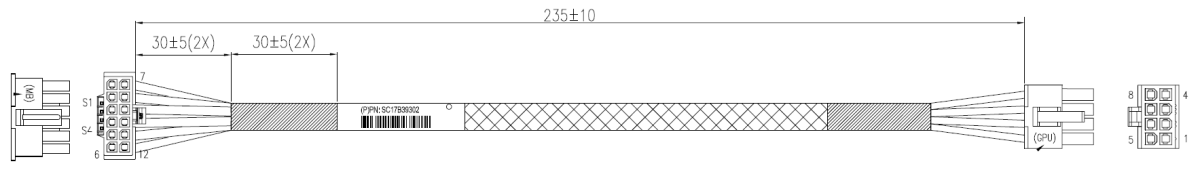

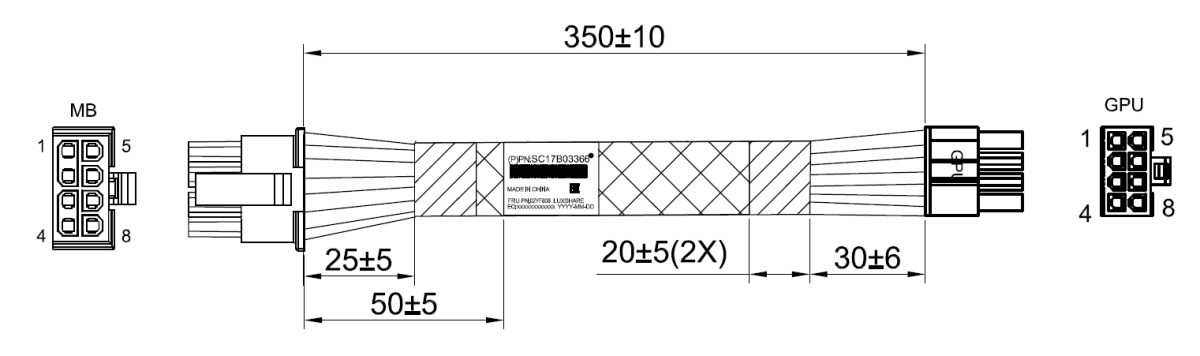

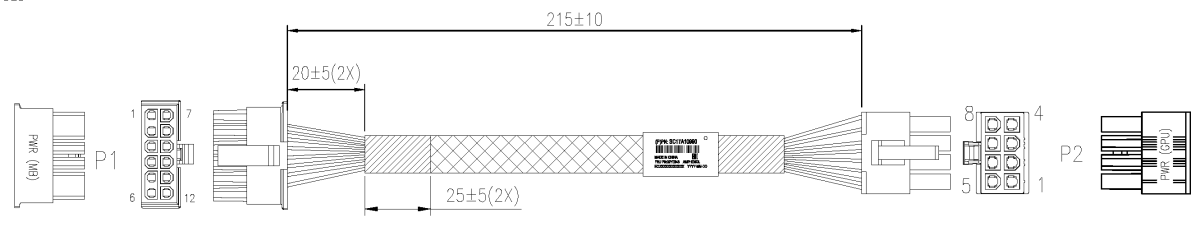

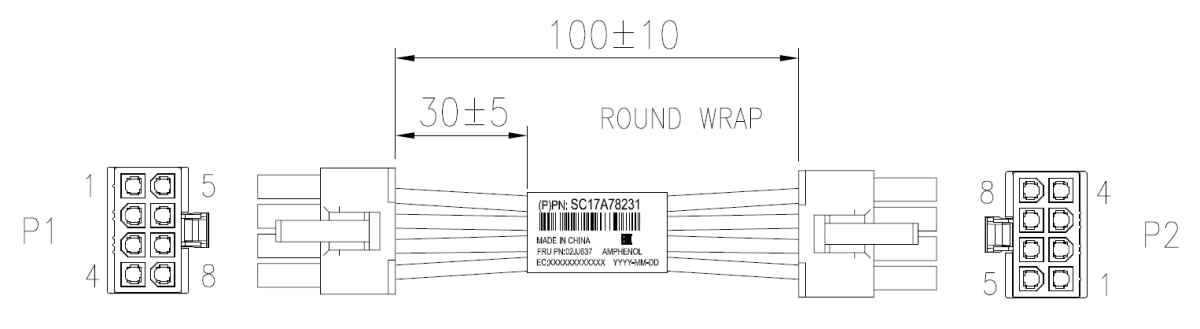

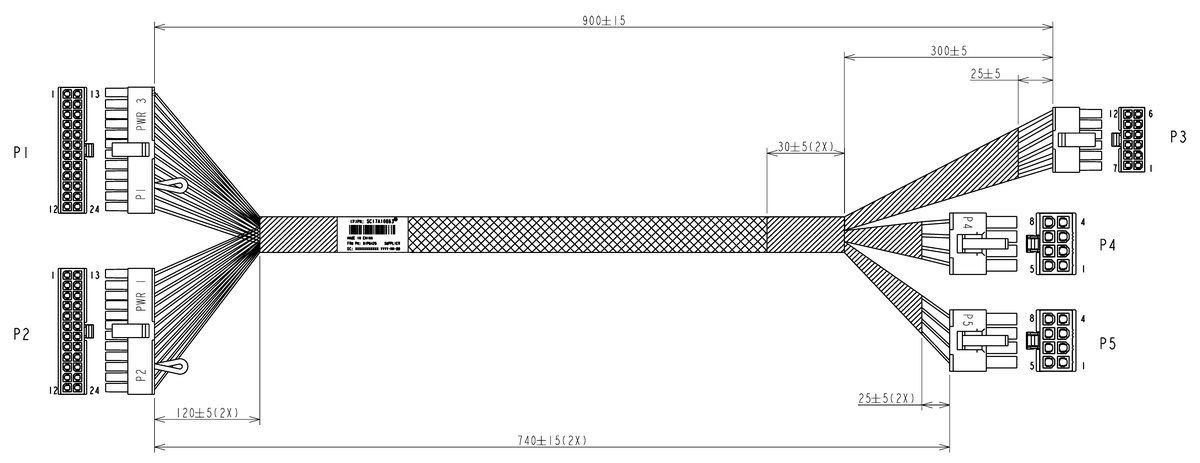

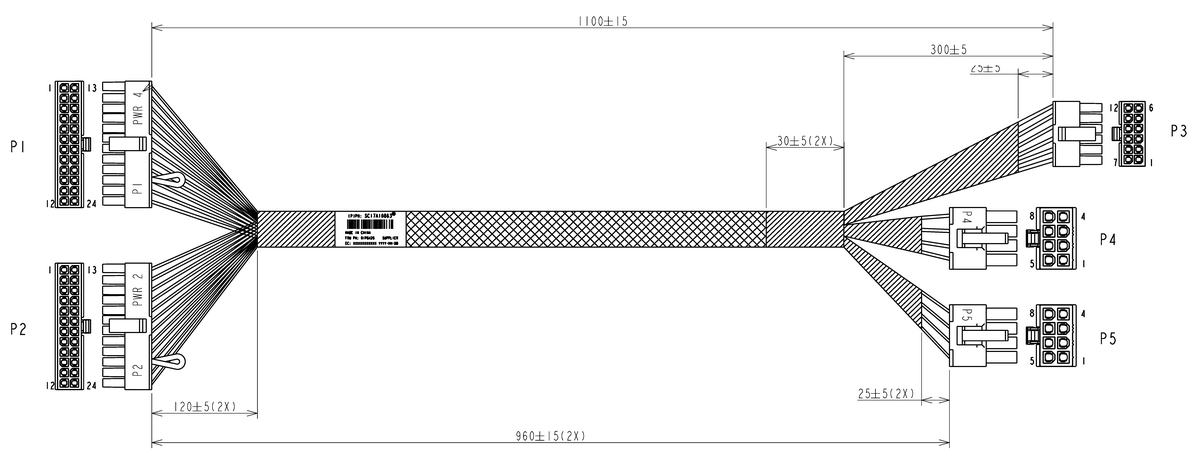

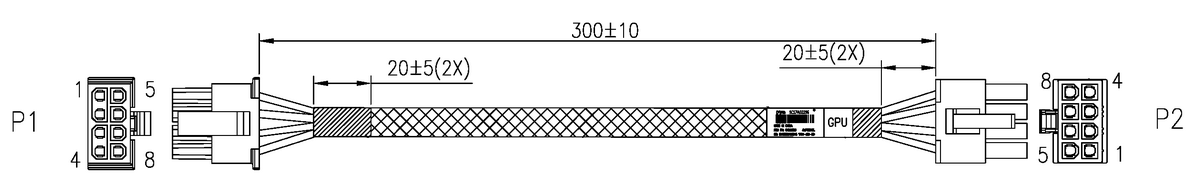

Auxiliary power cables

The A30 option part number does not ship with auxiliary power cables. Cables are server-specific due to length requirements. For CTO orders, auxiliary power cables are derived by the configurator. For field upgrades, cables will need to be ordered separately as listed in the table below.

Regulatory approvals

The NVIDIA A30 GPU has the following regulatory approvals:

- RCM

- BSMI

- CE

- FCC

- ICES

- KCC

- cUL, UL

- VCCI

Operating environment

The NVIDIA A30 GPU has the following operating characteristics:

- Ambient temperature

- Operational: 0°C to 50°C (-5°C to 55°C for short term*)

- Storage: -40°C to 75°C

- Relative humidity:

- Operational: 5-85% (5-93% short term*)

- Storage: 5-95%

* A period of not more than 96 consecutive hours, not to exceed 15 days per year.
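The operating limits above can be captured in a small check, for example as part of a monitoring script. This is a sketch under stated assumptions: the limits are treated as inclusive, the names `OPERATIONAL`, `SHORT_TERM`, and `classify_conditions` are hypothetical, and the duration limits on short-term operation (96 consecutive hours, 15 days per year) are not tracked here.

```python
# Limits taken from the list above; inclusive bounds are an assumption.
OPERATIONAL = {"temp_c": (0, 50), "humidity_pct": (5, 85)}
SHORT_TERM = {"temp_c": (-5, 55), "humidity_pct": (5, 93)}

def classify_conditions(temp_c: float, humidity_pct: float) -> str:
    """Return 'operational', 'short-term', or 'out-of-range'."""
    def within(limits) -> bool:
        t_lo, t_hi = limits["temp_c"]
        h_lo, h_hi = limits["humidity_pct"]
        return t_lo <= temp_c <= t_hi and h_lo <= humidity_pct <= h_hi

    if within(OPERATIONAL):
        return "operational"
    if within(SHORT_TERM):
        # Allowed only for limited periods; duration is not checked here.
        return "short-term"
    return "out-of-range"

print(classify_conditions(25, 50))
print(classify_conditions(52, 50))
```

A reading classified as `short-term` would still need to be checked against the duration limits in the footnote before it can be considered acceptable.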

Warranty

One year limited warranty. When installed in a Lenovo server, the GPU assumes the server’s base warranty and any warranty upgrades.

Related publications

For more information, refer to these documents:

- ThinkSystem and ThinkAgile GPU Summary:

https://lenovopress.lenovo.com/lp0768-thinksystem-thinkagile-gpu-summary

- ServerProven compatibility:

https://serverproven.lenovo.com/

- NVIDIA A30 product page:

https://www.nvidia.com/en-us/data-center/products/a30-gpu/

- NVIDIA Ampere Architecture page:

https://www.nvidia.com/en-us/data-center/ampere-architecture/

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ServerProven®

ThinkAgile®

ThinkSystem®

The following terms are trademarks of other companies:

AMD is a trademark of Advanced Micro Devices, Inc.

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.

Full Change History

Adapter announced: May 3, 2021
