Published

17 Jan 2024

Form Number

LP1863

PDF size

19 pages, 643 KB

Abstract

This document serves as a reference design, planning and implementation guide for the infrastructure required for machine learning (ML) use cases. It focuses specifically on open-source ML software, including MicroK8s and Charmed Kubeflow, both delivered by Canonical and running on Lenovo ThinkEdge Servers.

The information is intended for readers with a strong background in Artificial Intelligence (AI), open-source software frameworks, and troubleshooting techniques, including CIOs, CTOs, IT architects, system administrators, and other professionals actively engaged in AI and technology. It is aimed at those who want to explore the details of AI infrastructure within their respective roles and responsibilities.

Overview

Canonical MicroK8s (pronounced "micro kates") is a Kubernetes distribution certified by the Cloud Native Computing Foundation (CNCF). Ongoing collaboration between NVIDIA and Canonical ensures continuous validation of test suites, enabling data scientists to benefit from infrastructure designed for AI at scale using their preferred MLOps (Machine Learning Operations) tooling, such as Charmed Kubeflow.

From a business use case perspective, this architecture offers several important advantages:

- Faster iteration and experimentation: The increased flexibility provided by this infrastructure allows data scientists to iterate faster on AI/ML models and accelerates the experimentation process.

- Scalability: The architecture enables quick scaling of AI initiatives by providing infrastructure that is compatible and tested with various MLOps tooling options.

- Security: Secure workloads can run on Ubuntu-optimized infrastructure, benefiting from regular patching, upgrades, and updates.

- AI-specific Requirements: The architecture meets the specific needs of AI workloads by efficiently handling large datasets on an optimized hardware and software stack.

- End-to-end stack: The architecture leverages NVIDIA's EGX offerings and uses Canonical's MLOps platform, Charmed Kubeflow, to provide a stack for the end-to-end machine learning lifecycle.

- Reproducibility: The solution offers a clear guide that professionals across the organization can follow and expect the same outcome.

Data scientists and machine learning engineers are the primary beneficiaries, as they can now easily run ML workloads on high-end hardware with powerful computing capabilities. Other key stakeholders who can benefit from this architecture include infrastructure builders, solution architects, DevOps engineers, and CTOs who are looking to swiftly advance their AI initiatives while addressing the challenges that arise when working with AI at scale. In addition, the Lenovo ThinkEdge server line is designed to virtualize traditional IT applications as well as new transformative AI systems, providing the processing power, storage, accelerator, and networking technologies required for today’s edge workloads.

The guide covers hardware specifications, tools, and services, and provides step-by-step instructions for setting up the hardware and software required to run ML workloads. It also covers other tools used for cluster monitoring and management, explaining how all these components work together in the system. By the end, users will have a stack that is able to run AI at the edge.

Solution components

The solution architecture includes Canonical Ubuntu running on Lenovo ThinkEdge Servers, MicroK8s, and Charmed Kubeflow to provide a comprehensive solution for developing, deploying and managing AI workloads in edge computing environments, using the NVIDIA EGX platform.

The NVIDIA EGX platform forms the foundation of the architecture, offering high-performance server builds that are approved by NVIDIA. These servers are equipped with NVIDIA GPU cards, enabling powerful GPU-accelerated computing capabilities for AI workloads. The platform accelerates project delivery and allows professionals to iterate faster.

By combining these components, this reference architecture enables organizations to leverage the power of Lenovo ThinkEdge Servers with the NVIDIA EGX platform for AI workloads at the edge. Ubuntu ensures a reliable and secure operating system, while MicroK8s provides efficient container orchestration. Charmed Kubeflow simplifies the deployment and management of AI workflows, providing an extensive ecosystem of tools and frameworks.

By leveraging enterprise support from both NVIDIA and Canonical, users of the stack can significantly enhance security and availability. The close engineering collaboration between the two companies ensures expedited bug fixes and prompt security updates, often before public patch releases.

Benefits

NVIDIA and Canonical work together across the stack to get the best performance from your hardware, ensuring the fastest and most efficient operations.

- Support for Lenovo ThinkEdge Systems and Ubuntu LTS releases

- Wide range of GPU driver support for NVIDIA GPUs - whether you want a robust production-ready version, or the bleeding-edge experimental latest versions.

- Enterprise-ready GPU drivers from NVIDIA, signed by Canonical for use with Secure Boot.

- Deep integration with NVIDIA engineering, resulting in integrated solutions that offer the highest performance and ‘just work’ out of the box.

- Enterprise support, backed by NVIDIA. A deep engineering relationship means that we are often able to get bugs fixed with NVIDIA faster, and at times even before they are public.

- All of this builds on the underlying security and LTS value proposition of Ubuntu.

- Leverage the familiarity and efficiency of Ubuntu, already embraced by AI/ML developers, by adopting it as your unified production environment.

- Take advantage of Canonical's comprehensive support offerings to meet all your AI/ML requirements with confidence.

- Secure open source software for machine learning operations as part of a growing portfolio of applications that include Charmed Kubeflow, Charmed MLFlow, or Spark.

- Monitor production-grade infrastructure using Canonical’s Observability stack.

Canonical software components

The standards-based APIs are the same between all Kubernetes deployments, and they enable customer and vendor ecosystems to operate across multiple clouds. The site-specific infrastructure combines open and proprietary software, NVIDIA and Canonical certified hardware, and operational processes to deliver cloud resources as a service.

The implementation choices for each cloud infrastructure are highly specific to the requirements of each site. Many of these choices can be standardized and automated using the tools in this reference architecture. Conforming to best practices helps reduce operational risk by leveraging the accumulated experience of NVIDIA and Canonical.

The primary components of the solution are as follows:

- Ubuntu Server

Ubuntu Pro is a subscription-based offering that extends the standard Ubuntu distribution with additional features and support for enterprise environments. With Ubuntu Pro, organizations gain access to an expanded security maintenance coverage that spans over 30,000 packages for a duration of 10 years, and optional enterprise-grade phone and ticket support by Canonical.

- MicroK8s

Canonical MicroK8s is a CNCF-certified Kubernetes distribution that offers a lightweight and streamlined approach to deploying and managing Kubernetes clusters. It is delivered as a snap, the universal Linux app packaging format, which dramatically simplifies the installation and upgrade of its components. MicroK8s installs the NVIDIA operator, which allows you to take advantage of the available GPU hardware.

- Charmed Kubeflow

Charmed Kubeflow is an enterprise-grade distribution of Kubeflow, a popular open-source machine learning toolkit built for Kubernetes environments. Developed by Canonical, Charmed Kubeflow offers a comprehensive and reliable solution for deploying and managing machine learning workflows.

Charmed Kubeflow is a full set of Kubernetes operators to deliver the 30+ applications and services that make up the latest version of Kubeflow, for easy operations anywhere, from workstations to on-prem, to public cloud and edge.

Canonical delivers Kubeflow components in an automated fashion, using the same approach and toolset as for deploying the infrastructure and Kubernetes cluster, with the help of Juju.

- Juju

Juju is an open-source framework that helps you move from configuration management to application management across your hybrid cloud estate through sharable, reusable, tiny applications called Charmed Operators.

A Charmed Operator is Juju's expansion and generalization of the Kubernetes notion of an operator. In the Kubernetes tradition, an Operator is an application packaged with all the operational knowledge required to install, maintain and upgrade it on a Kubernetes cluster, container, virtual machine, or bare metal machine, running on public or private cloud.

Canonical has developed and tested the Kubeflow charms for automating the delivery of its components.

Software versions

The following versions of software are part of this reference architecture.
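The channels and image tag used in the tutorial steps later in this document can be pinned in one place so that the install commands stay consistent. The following is a minimal sketch: the variable names and the `print_install_plan` helper are illustrative assumptions, while the values are taken from the tutorial commands. The helper only prints the commands and does not run them.

```shell
# Versions taken from the tutorial commands in this document.
MICROK8S_CHANNEL="1.24/stable"
JUJU_CHANNEL="2.9/stable"
KUBEFLOW_CHANNEL="1.7/stable"
TRITON_IMAGE="nvcr.io/nvidia/tritonserver:23.05-py3"

# print_install_plan: print (do not execute) the version-pinned commands.
# The function name is hypothetical and exists only for this sketch.
print_install_plan() {
  printf 'sudo snap install microk8s --classic --channel=%s\n' "$MICROK8S_CHANNEL"
  printf 'sudo snap install juju --classic --channel=%s\n' "$JUJU_CHANNEL"
  printf 'juju deploy kubeflow --trust --channel=%s\n' "$KUBEFLOW_CHANNEL"
  printf 'Triton image: %s\n' "$TRITON_IMAGE"
}
```

Calling `print_install_plan` before starting gives a quick review of what will be installed.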

Lenovo hardware specifications

The reference architecture is based on testing the solution on the EGX-ready system represented by Lenovo ThinkEdge SE450.

Figure 2. Lenovo ThinkEdge SE450

The ThinkEdge SE450 is a purpose-built server that is significantly shorter than a traditional server, making it ideal for deployment in tight spaces. It can be mounted on a wall, placed vertically in a floor stand, or mounted in a rack.

The ThinkEdge SE450 puts increased processing power, storage and network closer to where data is generated, allowing actions resulting from the analysis of that data to take place more quickly.

Since these edge servers are typically deployed outside of secure data centers, they include technology that encrypts the data stored on the device if it is tampered with, only enabling authorized users to access it.

The server is equipped with a single Intel Xeon Gold processor, 128GB of RAM, a single 480GB drive, and two NVIDIA L40 GPUs.
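As a quick check that a host matches this configuration, the GPU inventory can be compared against the expected count. The following is a minimal sketch, assuming the NVIDIA driver and `nvidia-smi` are already installed; the helper names `count_gpus` and `check_gpu_count` are illustrative, and the expected value of 2 is taken from the configuration above.

```shell
# Expected number of GPUs, taken from the tested SE450 configuration above.
EXPECTED_GPUS=2

# count_gpus: count the non-empty lines on stdin (one line per GPU).
# "|| true" keeps the exit status at 0 when the count is zero.
count_gpus() {
  grep -c . || true
}

# check_gpu_count ACTUAL: succeed only if ACTUAL equals EXPECTED_GPUS.
check_gpu_count() {
  [ "$1" -eq "$EXPECTED_GPUS" ]
}
```

A possible usage is `check_gpu_count "$(nvidia-smi --query-gpu=name --format=csv,noheader | count_gpus)"`, which relies on `nvidia-smi` printing one line per GPU.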

NVIDIA software specifications

NVIDIA GPU Cloud

NVIDIA GPU Cloud (NGC) is a comprehensive platform that provides a hub for GPU-optimized software, tools, and pre-trained models for deep learning, machine learning, and accelerated computing. It offers a curated collection of software containers, models, and industry-specific SDKs, enabling developers and researchers to accelerate their AI and data science workflows.

Triton Inference Server Software

NVIDIA Triton is high-performance inference serving software that simplifies the deployment of AI models in production environments. NVIDIA Triton is part of NVIDIA AI Enterprise and, within the scope of this reference architecture, is used as an enhancement of the Charmed Kubeflow platform, allowing inference at the edge to be performed more robustly than with other inference servers.

Triton's automatic compatibility with diverse model frameworks and formats, including PyTorch, TensorFlow, ONNX, and others, simplifies the integration of models developed using different frameworks. This compatibility ensures smooth and efficient deployment of models without the need for extensive conversion or compatibility adjustments.

It delivers enhanced efficiency in terms of response time and throughput by utilizing dynamic batching and load balancing techniques. Triton's ability to employ multiple model instances enables efficient distribution of the workload, ensuring effective load balancing and maximizing performance. Written in C++ and optimized by NVIDIA, Triton delivers exceptional performance and resource efficiency without the need for additional optimizations. It is designed to be faster, consume less memory, and require fewer GPU and CPU resources compared to other alternatives.
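Dynamic batching and multiple model instances are set per model in Triton's model configuration file (`config.pbtxt`). The following sketch prints such a fragment. The helper name `triton_scaling_fragment`, the instance count, and the queue delay are illustrative assumptions, not values from this reference architecture. Dynamic batching generally applies only to models that support batching, so the fragment may not suit every model.

```shell
# triton_scaling_fragment COUNT DELAY_US
# Print a config.pbtxt fragment that requests COUNT GPU instances of a model
# and enables dynamic batching with a maximum queue delay of DELAY_US
# microseconds. The helper name and both values are illustrative.
triton_scaling_fragment() {
  cat <<EOF
instance_group [
  {
    count: $1
    kind: KIND_GPU
  }
]
dynamic_batching {
  max_queue_delay_microseconds: $2
}
EOF
}
```

For example, `triton_scaling_fragment 2 100` prints a fragment that could be appended to the configuration of a hypothetical model directory such as `model_repository/my_model/`.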

Triton provides a streamlined and lightweight solution for serving models. Rather than requiring multiple dependencies and an entire runtime framework to be installed, Triton is a single implementation, often packaged as a container. This packaging approach makes it smaller, more lightweight, and easier to manage. By focusing solely on serving models, Triton eliminates unnecessary components and provides a dedicated environment for model serving.

Canonical and NVIDIA have worked together to deliver full integration between NVIDIA Triton and Charmed Kubeflow for an end-to-end AI workflow.

Tutorial: Deploying an object detection model

This section covers the steps for deploying the software components on the ThinkEdge SE450 Server. We assume that the user has experience deploying, installing, and setting up open-source frameworks.

- Install Ubuntu 22.04 LTS on a machine with an NVIDIA GPU.

- Update system

    sudo apt update && sudo apt upgrade -y

- Install MicroK8s

    sudo snap install microk8s --classic --channel=1.24/stable

- Add current user to the microk8s group and give access to the .kube directory

    sudo usermod -a -G microk8s $USER
    sudo chown -f -R $USER ~/.kube

- Log out and re-enter the session for the changes to take effect.

- Enable MicroK8s add-ons for Charmed Kubeflow (replace IP addresses accordingly)

    microk8s enable dns hostpath-storage ingress gpu metallb:192.168.1.10-192.168.1.16

- Check MicroK8s status until the output shows “microk8s is running” and the add-ons installed are listed under “enabled”

    microk8s status --wait-ready

- Add alias for omitting microk8s when running commands (the alias is appended to ~/.bash_aliases so that existing aliases are kept)

    alias kubectl='microk8s kubectl'
    echo "alias kubectl='microk8s kubectl'" >> ~/.bash_aliases

- (Optional) Set forward IP address in CoreDNS:

    microk8s kubectl -n kube-system edit configmap coredns

- Install Juju

    sudo snap install juju --classic --channel=2.9/stable

- Deploy Juju controller to MicroK8s

    juju bootstrap microk8s

- Add model for Kubeflow

    juju add-model kubeflow

- Deploy Charmed Kubeflow

The first two commands below raise the inotify limits: MicroK8s uses inotify to interact with the filesystem, and Kubeflow may exceed the default limits.

    sudo sysctl fs.inotify.max_user_instances=1280
    sudo sysctl fs.inotify.max_user_watches=655360
    juju deploy kubeflow --trust --channel=1.7/stable

- Check Juju status until all statuses become active

    watch -c 'juju status --color | grep -E "blocked|error|maintenance|waiting|App|Unit"'

- If tensorboard-controller is stuck with the status message “Waiting for gateway relation”, run the following command. This is a known issue; see the tensorboard-controller GitHub issue for more info.

    juju run --unit istio-pilot/0 -- "export JUJU_DISPATCH_PATH=hooks/config-changed; ./dispatch"

- Get the IP address of Istio ingress gateway load balancer

    IP=$(microk8s kubectl -n kubeflow get svc istio-ingressgateway-workload -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

- Configure authentication for dashboard

    juju config dex-auth public-url=http://$IP.nip.io
    juju config oidc-gatekeeper public-url=http://$IP.nip.io
    juju config dex-auth static-username=admin
    juju config dex-auth static-password=admin

- Log in to the Charmed Kubeflow dashboard with a browser and accept default settings

    http://$IP.nip.io

- Create pipeline.yaml file on your local machine using the code listing in Appendix: pipeline.yaml

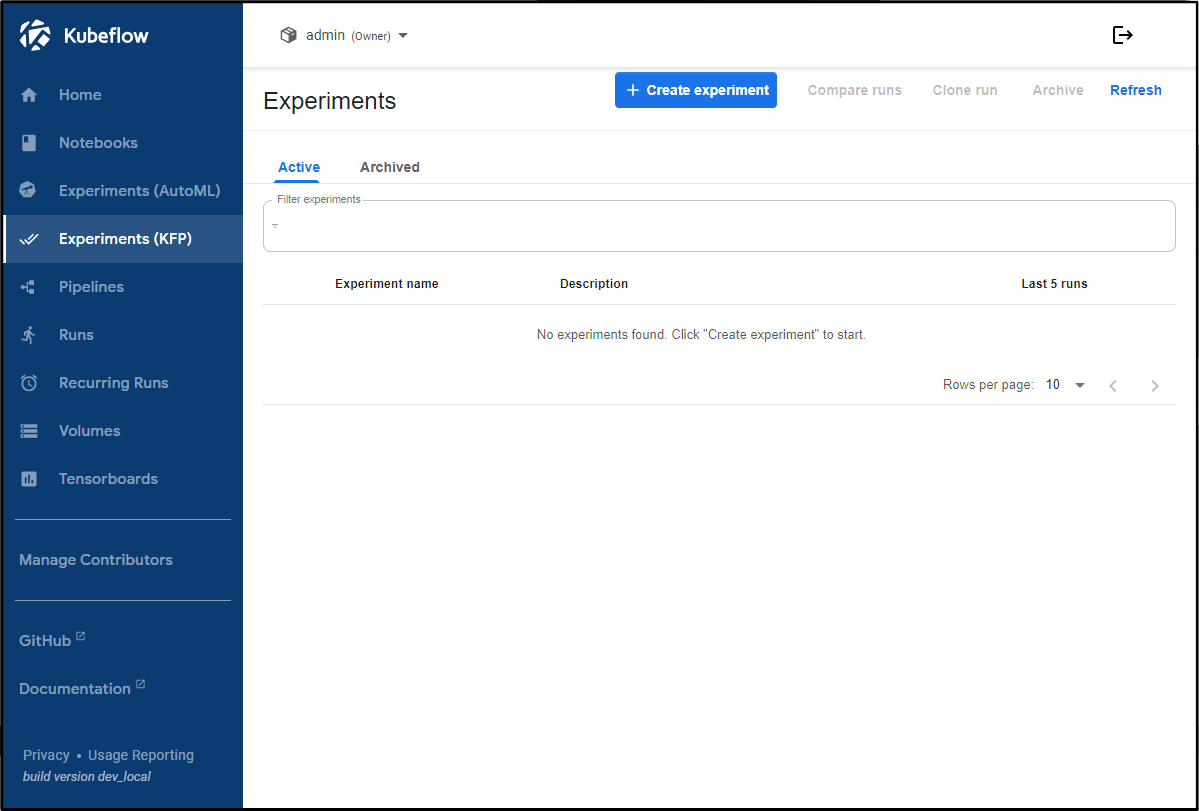

- Click the Create experiment button

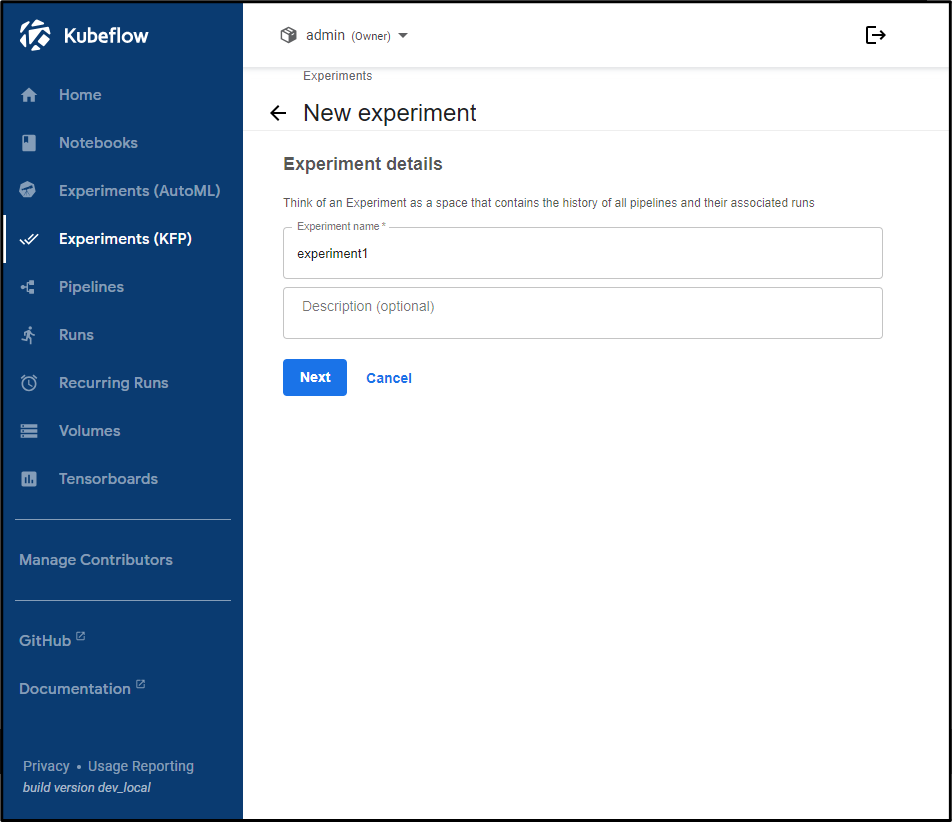

- Enter a name for the experiment and click Next.

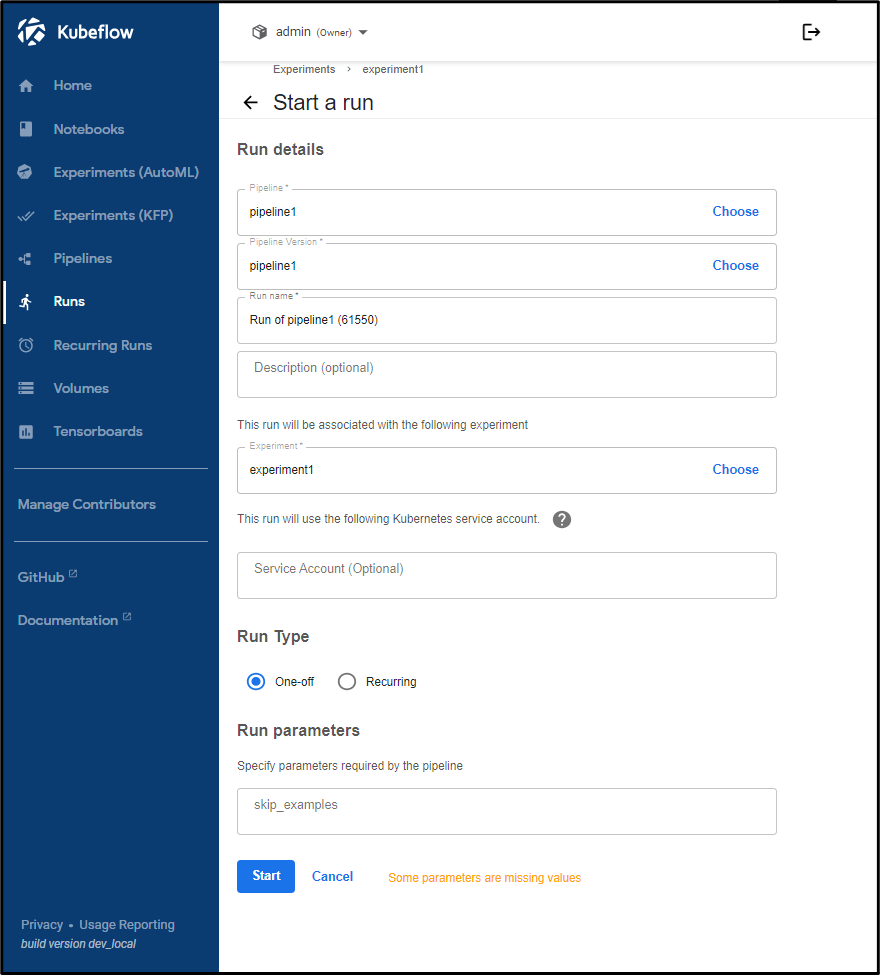

- Enter the run details as shown below.

- Create run and upload pipeline.yaml

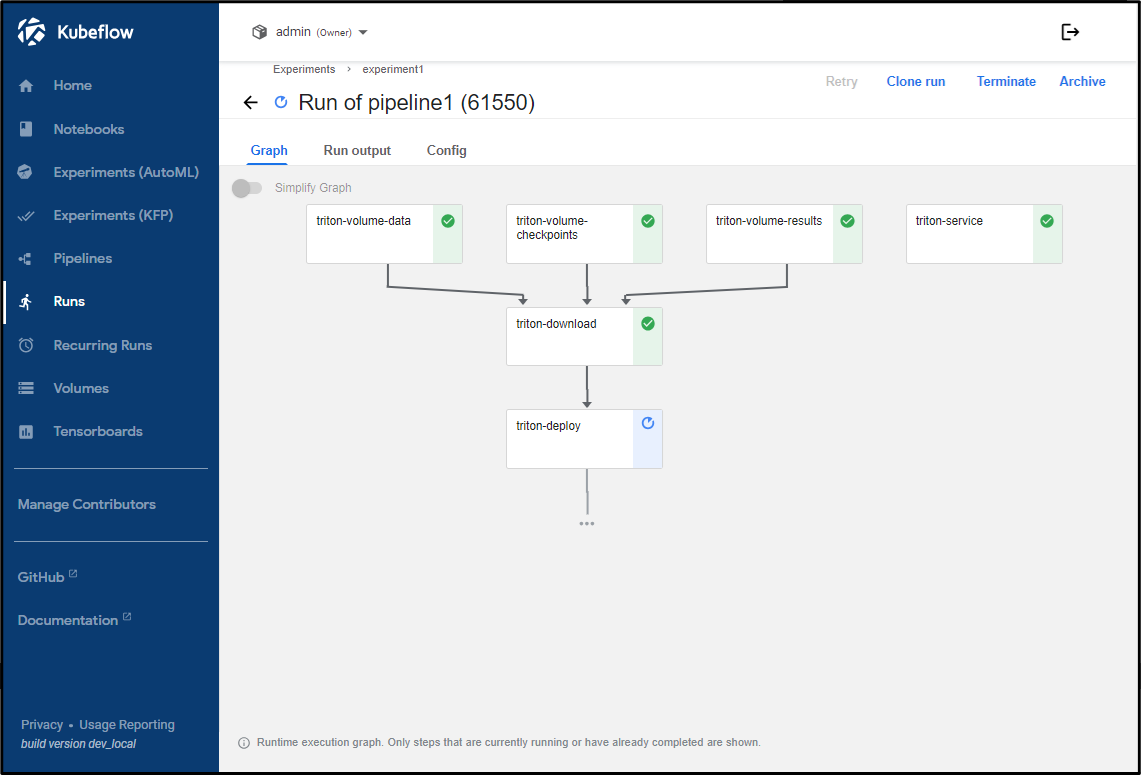

- Let the pipeline run and download the Triton container. It will eventually look like this

- Verify the Triton Inference server can be reached and has loaded the models

    IP=$(kubectl get service triton-kubeflow -n admin -o jsonpath='{.spec.clusterIP}')
    curl http://$IP:8000/v2/models/densenet_onnx

Successful output will look like the following:

    {"name":"densenet_onnx","versions":["1"],"platform":"onnxruntime_onnx","inputs":[{"name":"data_0","datatype":"FP32","shape":[3,224,224]}],"outputs":[{"name":"fc6_1","datatype":"FP32","shape":[1000]}]}

- Test the image classification

    sudo snap install docker
    sudo docker run -it --rm nvcr.io/nvidia/tritonserver:23.05-py3-sdk /workspace/install/bin/image_client -u $IP:8000 -m densenet_onnx -c 3 -s INCEPTION /workspace/images/mug.jpg

A successful output will look like the following:

    Request 0, batch size 1
    Image '/workspace/images/mug.jpg':
        15.349561 (504) = COFFEE MUG
        13.227463 (968) = CUP
        10.424892 (505) = COFFEEPOT
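The verification steps at the end of the tutorial can be wrapped in small helpers so they are easy to repeat. The following is a minimal sketch under stated assumptions: the function names are illustrative, Triton's HTTP endpoint listens on port 8000 as in the pipeline definition, and the metadata response is the compact single-line JSON shown above. The `sed` expression is not a general JSON parser.

```shell
# triton_model_url HOST MODEL: print the URL of the model metadata endpoint.
# Port 8000 is the HTTP port used in the pipeline definition.
triton_model_url() {
  printf 'http://%s:8000/v2/models/%s\n' "$1" "$2"
}

# model_platform: read a compact model metadata JSON document on stdin and
# print the value of its "platform" field (prints nothing if it is absent).
model_platform() {
  sed -n 's/.*"platform":"\([^"]*\)".*/\1/p'
}
```

With the `IP` variable set as in the tutorial, `curl -s "$(triton_model_url "$IP" densenet_onnx)" | model_platform` would print the platform reported for the model.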

Conclusion

The solution outlined above is suitable for running AI at the edge and supports enterprise workloads across a broad set of industries, from telco to healthcare to HPC. Open source machine learning tooling, such as Charmed Kubeflow running on MicroK8s, deployed as part of an accelerated computing stack on Lenovo hardware, helps professionals deliver projects faster, reduce operational costs, and have an end-to-end experience within the same tool. This reference architecture is only one example of a larger implementation that can address the challenges of running ecosystem tools and frameworks together and keeping them compatible, while maintaining security features and optimizing compute efficiency.

Furthermore, by leveraging the combined expertise of Canonical, Lenovo and NVIDIA, organizations can enhance data analytics, optimize decision-making processes, and revolutionize customer experiences. Organizations can confidently embrace this solution to drive innovation, accelerate AI adoption, and unlock new opportunities in their respective domains.

For more information

For more information, visit these pages:

- kubeflow-pipeline-deploy on GitHub:

https://github.com/NVIDIA/deepops/tree/master/workloads/examples/k8s/kubeflow-pipeline-deploy

- Take your models to production with open source AI

https://ubuntu.com/ai

- Kubernetes MicroK8s

https://microk8s.io/

- What is Kubeflow

https://ubuntu.com/ai/what-is-kubeflow

- Kubeflow on NVIDIA:

https://ubuntu.com/engage/run-ai-at-scale

Appendix: pipeline.yaml

The contents of the file pipeline.yaml are as follows.

    apiVersion: argoproj.io/v1alpha1
    kind: Workflow
    metadata:
      generateName: tritonpipeline-
      labels: {pipelines.kubeflow.org/kfp_sdk_version: 1.8.22}
    spec:
      entrypoint: tritonpipeline
      templates:
      - name: condition-skip-examples-download-1
        dag:
          tasks:
          - {name: triton-download, template: triton-download}
      - name: triton-deploy
        container:
          args: ['echo Deploying: /results/model_repository;ls /data; ls /results; ls
              /checkpoints; tritonserver --model-store=/results/model_repository']
          command: [/bin/bash, -cx]
          image: nvcr.io/nvidia/tritonserver:23.05-py3
          ports:
          - {containerPort: 8000, hostPort: 8000}
          - {containerPort: 8001, hostPort: 8001}
          - {containerPort: 8002, hostPort: 8002}
          resources:
            limits: {nvidia.com/gpu: 1}
          volumeMounts:
          - {mountPath: /results/, name: triton-results, readOnly: false}
          - {mountPath: /data/, name: triton-data, readOnly: true}
          - {mountPath: /checkpoints/, name: triton-checkpoints, readOnly: true}
        metadata:
          labels:
            app: triton-kubeflow
            pipelines.kubeflow.org/kfp_sdk_version: 1.8.22
            pipelines.kubeflow.org/pipeline-sdk-type: kfp
            pipelines.kubeflow.org/enable_caching: "true"
        volumes:
        - name: triton-checkpoints
          persistentVolumeClaim: {claimName: triton-checkpoints, readOnly: false}
        - name: triton-data
          persistentVolumeClaim: {claimName: triton-data, readOnly: false}
        - name: triton-results
          persistentVolumeClaim: {claimName: triton-results, readOnly: false}
      - name: triton-download
        container:
          args: ['cd /tmp; git clone https://github.com/triton-inference-server/server.git;
              cd server/docs/examples; ./fetch_models.sh; cd model_repository; cp -a .
              /results/model_repository']
          command: [/bin/bash, -cx]
          image: nvcr.io/nvidia/tritonserver:23.05-py3
          volumeMounts:
          - {mountPath: /results/, name: triton-results, readOnly: false}
          - {mountPath: /data/, name: triton-data, readOnly: true}
          - {mountPath: /checkpoints/, name: triton-checkpoints, readOnly: true}
        metadata:
          labels:
            app: triton-kubeflow
            pipelines.kubeflow.org/kfp_sdk_version: 1.8.22
            pipelines.kubeflow.org/pipeline-sdk-type: kfp
            pipelines.kubeflow.org/enable_caching: "true"
        volumes:
        - name: triton-checkpoints
          persistentVolumeClaim: {claimName: triton-checkpoints, readOnly: false}
        - name: triton-data
          persistentVolumeClaim: {claimName: triton-data, readOnly: false}
        - name: triton-results
          persistentVolumeClaim: {claimName: triton-results, readOnly: false}
      - name: triton-service
        resource:
          action: create
          manifest: |
            apiVersion: v1
            kind: Service
            metadata:
              name: triton-kubeflow
            spec:
              ports:
              - name: http
                nodePort: 30800
                port: 8000
                protocol: TCP
                targetPort: 8000
              - name: grpc
                nodePort: 30801
                port: 8001
                targetPort: 8001
              - name: metrics
                nodePort: 30802
                port: 8002
                targetPort: 8002
              selector:
                app: triton-kubeflow
              type: NodePort
        outputs:
          parameters:
          - name: triton-service-manifest
            valueFrom: {jsonPath: '{}'}
          - name: triton-service-name
            valueFrom: {jsonPath: '{.metadata.name}'}
        metadata:
          labels:
            pipelines.kubeflow.org/kfp_sdk_version: 1.8.22
            pipelines.kubeflow.org/pipeline-sdk-type: kfp
            pipelines.kubeflow.org/enable_caching: "true"
      - name: triton-volume-checkpoints
        resource:
          action: apply
          manifest: |
            apiVersion: v1
            kind: PersistentVolumeClaim
            metadata:
              name: triton-checkpoints
            spec:
              accessModes:
              - ReadWriteMany
              resources:
                requests:
                  storage: 10Gi
              storageClassName: microk8s-hostpath
        outputs:
          parameters:
          - name: triton-volume-checkpoints-manifest
            valueFrom: {jsonPath: '{}'}
          - name: triton-volume-checkpoints-name
            valueFrom: {jsonPath: '{.metadata.name}'}
        metadata:
          labels:
            pipelines.kubeflow.org/kfp_sdk_version: 1.8.22
            pipelines.kubeflow.org/pipeline-sdk-type: kfp
            pipelines.kubeflow.org/enable_caching: "true"
      - name: triton-volume-data
        resource:
          action: apply
          manifest: |
            apiVersion: v1
            kind: PersistentVolumeClaim
            metadata:
              name: triton-data
            spec:
              accessModes:
              - ReadWriteMany
              resources:
                requests:
                  storage: 10Gi
              storageClassName: microk8s-hostpath
        outputs:
          parameters:
          - name: triton-volume-data-manifest
            valueFrom: {jsonPath: '{}'}
          - name: triton-volume-data-name
            valueFrom: {jsonPath: '{.metadata.name}'}
        metadata:
          labels:
            pipelines.kubeflow.org/kfp_sdk_version: 1.8.22
            pipelines.kubeflow.org/pipeline-sdk-type: kfp
            pipelines.kubeflow.org/enable_caching: "true"
      - name: triton-volume-results
        resource:
          action: apply
          manifest: |
            apiVersion: v1
            kind: PersistentVolumeClaim
            metadata:
              name: triton-results
            spec:
              accessModes:
              - ReadWriteMany
              resources:
                requests:
                  storage: 10Gi
              storageClassName: microk8s-hostpath
        outputs:
          parameters:
          - name: triton-volume-results-manifest
            valueFrom: {jsonPath: '{}'}
          - name: triton-volume-results-name
            valueFrom: {jsonPath: '{.metadata.name}'}
        metadata:
          labels:
            pipelines.kubeflow.org/kfp_sdk_version: 1.8.22
            pipelines.kubeflow.org/pipeline-sdk-type: kfp
            pipelines.kubeflow.org/enable_caching: "true"
      - name: tritonpipeline
        inputs:
          parameters:
          - {name: skip_examples}
        dag:
          tasks:
          - name: condition-skip-examples-download-1
            template: condition-skip-examples-download-1
            when: '"{{inputs.parameters.skip_examples}}" == ""'
            dependencies: [triton-volume-checkpoints, triton-volume-data, triton-volume-results]
          - name: triton-deploy
            template: triton-deploy
            dependencies: [condition-skip-examples-download-1]
          - {name: triton-service, template: triton-service}
          - {name: triton-volume-checkpoints, template: triton-volume-checkpoints}
          - {name: triton-volume-data, template: triton-volume-data}
          - {name: triton-volume-results, template: triton-volume-results}
      arguments:
        parameters:
        - {name: skip_examples}
      serviceAccountName: pipeline-runner
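The Service defined in the listing is of type NodePort and pins a node port for each Triton endpoint. The following sketch lists those ports from a saved copy of the file. The helper name `list_nodeports` is illustrative, and the `awk` filter is a simple line-based match that assumes each port entry starts with a `- name:` line, as in the listing. It is not a YAML parser.

```shell
# list_nodeports: read a manifest on stdin and print "<port name> <nodePort>"
# for every entry that sets a nodePort. The most recent "- name:" line is
# taken as the name of the port entry.
list_nodeports() {
  awk '
    $1 == "-" && $2 == "name:" { name = $3 }
    $1 == "nodePort:"          { print name, $2 }
  '
}
```

Running `list_nodeports < pipeline.yaml` against the listing should report the http, grpc, and metrics ports (30800, 30801, and 30802), which are the ports to use when reaching Triton through a node's IP address rather than the cluster IP.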

Authors

David Ellison is the Chief Data Scientist for Lenovo ISG. Through Lenovo’s US and European AI Discover Centers, he leads a team that uses cutting-edge AI techniques to deliver solutions for external customers while internally supporting the overall AI strategy for the Worldwide Infrastructure Solutions Group. Before joining Lenovo, he ran an international scientific analysis and equipment company and worked as a Data Scientist for the US Postal Service. Prior to that, he received a PhD in Biomedical Engineering from Johns Hopkins University. He has numerous publications in top-tier journals, including two in the Proceedings of the National Academy of Sciences.

Carlos Huescas is the Worldwide Product Manager for NVIDIA software at Lenovo. He specializes in High Performance Computing and AI solutions. He has more than 15 years of experience as an IT architect and in product management positions across several high-tech companies.

Mircea Troaca is an AI Technical Engineer leading the benchmarking and reference architectures for Lenovo's AI Discover Lab. He consistently delivers #1 results in MLPerf, the leading AI benchmark, and is also leading Lenovo and Intel's efforts in the TPCx-AI benchmark.

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ThinkEdge®

The following terms are trademarks of other companies:

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Other company, product, or service names may be trademarks or service marks of others.
