Author

Published: 11 Dec 2024

Form Number: LP2111

PDF size: 7 pages, 298 KB

Product Overview

Red Hat Enterprise Linux AI (RHEL AI) is an enterprise-grade gen AI foundation model platform to develop, test, and deploy LLMs for gen AI business use cases. RHEL AI brings together:

- The Granite family of open source LLMs.

- InstructLab model alignment tooling, which provides a community-driven approach to LLM fine-tuning.

- A bootable image of Red Hat Enterprise Linux, along with gen AI libraries and dependencies such as PyTorch and AI accelerator driver software for NVIDIA, Intel, and AMD.

- Enterprise-level technical support and model intellectual property indemnification provided by Red Hat.

RHEL AI gives you the trusted Red Hat Enterprise Linux platform and adds the necessary components for you to begin your gen AI journey and see results.

Red Hat Enterprise Linux AI allows portability across hybrid cloud environments, and makes it possible to then scale your AI workflows with Red Hat OpenShift® AI and to advance to IBM watsonx.ai with additional capabilities for enterprise AI development, data management, and model governance.

Key Benefits

Red Hat Enterprise Linux AI is a powerful tool that enables enterprises to harness the potential of artificial intelligence. It offers a comprehensive platform for developing, testing, and running generative AI foundation models, making it accessible to organizations at all stages of their AI journey. By leveraging open-source technologies and a community-driven approach, RHEL AI empowers businesses to innovate with trust and transparency, while reducing costs and removing barriers to entry.

One of its key advantages is its ability to facilitate collaboration between domain experts and data scientists, enabling the creation of purpose-built generative AI models tailored to specific business needs. RHEL AI's flexibility and scalability allow organizations to deploy and manage these models across hybrid cloud environments, ensuring seamless integration with existing infrastructure.

Furthermore, RHEL AI's focus on security and compliance provides a robust foundation for building and deploying AI solutions responsibly. By adhering to open-source principles and industry best practices, RHEL AI helps organizations mitigate risks and protect sensitive data.

Overall, RHEL AI offers a compelling solution for enterprises seeking to embrace the power of AI. Its ease of use, scalability, and focus on security make it a valuable tool for driving innovation and achieving business objectives.

Below is a summary of the key benefits of RHEL AI:

- Let users update and enhance large language models (LLMs) with InstructLab

- Align LLMs with proprietary data, safely and securely, to tailor the models to your business requirements

- Get started quickly with generative artificial intelligence (gen AI) and deliver results with a trusted, security-focused Red Hat® Enterprise Linux® platform

- Packaged as a bootable Red Hat Enterprise Linux container image for installation and updates

The future of AI is open and transparent

RHEL AI includes a subset of the open source Granite language and code models that are fully indemnified by Red Hat. The open source Granite models provide organizations with cost- and performance-optimized models that align with a wide variety of gen AI use cases. The Granite models are released under the Apache 2.0 license. In addition to the models being open source, the datasets used for training the models are also transparent and open.

Accessible gen AI model training for faster time to value

In addition to open source Granite models, RHEL AI also includes InstructLab model alignment tooling, based on the Large scale Alignment for chatBots (LAB) technique. InstructLab allows teams within organizations to efficiently contribute skills and knowledge to LLMs, customizing these models for the specific needs of their business.

- Skill: A capability domain intended to teach a model how to do something. Skills are classified into two categories:

  - Compositional skills

    - Let AI models perform specific tasks or functions.

    - Are either grounded (includes context) or ungrounded (does not include context).

      - A grounded example is adding a skill to provide a model the ability to read a markdown-formatted table

      - An ungrounded example is adding a skill to teach the model how to rhyme

  - Foundation skills

    - Skills like math, reasoning, and coding.

- Knowledge: Data and facts that provide a model with additional data and information to answer questions with greater accuracy.
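As a rough illustration of the distinction above, the following Python sketch models skill and knowledge contributions as plain data classes. The class and field names (`SkillExample`, `KnowledgeExample`, `is_grounded`, `source_document`) are hypothetical and chosen for this example only; they are not the InstructLab taxonomy schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SkillExample:
    """One seed example for a compositional skill (hypothetical structure)."""
    question: str
    answer: str
    context: Optional[str] = None  # supplied only for grounded skills

    @property
    def is_grounded(self) -> bool:
        # A grounded skill ships context with the example; an ungrounded one does not.
        return self.context is not None


@dataclass
class KnowledgeExample:
    """One fact-style question/answer pair tied to a source document (hypothetical)."""
    question: str
    answer: str
    source_document: str


# Grounded: the model must read the supplied markdown table to answer.
table_skill = SkillExample(
    question="Which product has the highest price?",
    answer="Widget B",
    context="| Product | Price |\n| --- | --- |\n| Widget A | 10 |\n| Widget B | 25 |",
)

# Ungrounded: no context is needed to produce a rhyme.
rhyme_skill = SkillExample(
    question="Give me a word that rhymes with 'cat'.",
    answer="hat",
)

# Knowledge: a fact the model should be able to recall (made-up sample data).
returns_fact = KnowledgeExample(
    question="How long is the return window?",
    answer="30 days",
    source_document="returns-policy.md",
)

print(table_skill.is_grounded, rhyme_skill.is_grounded)
```

The only structural difference between the two skill examples is whether `context` is present, which mirrors the grounded versus ungrounded split described in the list.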

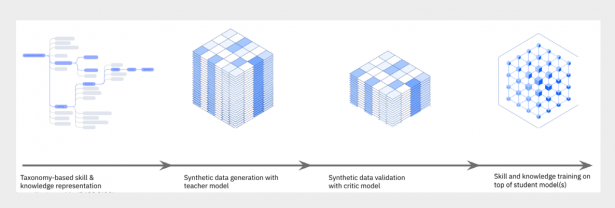

Figure 1. InstructLab model fine-tuning workflow

InstructLab is an open source project for enhancing large language models (LLMs) used in generative artificial intelligence (gen AI) applications. Created by IBM and Red Hat, the InstructLab community project provides a cost-effective solution for improving the alignment of LLMs and opens the door for those with minimal machine learning experience to contribute skills and knowledge. The workflow has four stages:

- Skills and knowledge contributions are placed into a taxonomy-based data repository.

- A significant quantity of synthetic data is generated, using the taxonomy data, in order to produce a large enough dataset to successfully update and change an LLM.

- The synthetic data output is reviewed, validated, and pruned by a critic model.

- The model is trained with synthetic data rooted in human-generated manual input.
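The four steps above can be sketched as a toy pipeline. This is a minimal illustration under stated assumptions, not InstructLab's implementation: `generate_synthetic`, `critic_accepts`, and `build_training_set` are hypothetical names, the taxonomy paths and seed data are made up, the "generator" only rephrases seed questions with fixed templates, and the "critic" is a simple duplicate and empty-answer filter standing in for a critic model.

```python
from typing import Dict, List

Example = Dict[str, str]

# Step 1: human-written seed examples, keyed by a taxonomy path (hypothetical layout).
taxonomy: Dict[str, List[Example]] = {
    "compositional_skills/writing/rhyme": [
        {"question": "What rhymes with 'cat'?", "answer": "hat"},
    ],
    "knowledge/products/returns": [
        {"question": "How long is the return window?", "answer": "30 days"},
    ],
}

# The repeated "{q}" template deliberately produces a duplicate for the critic to prune.
TEMPLATES = ["{q}", "Please answer: {q}", "Q: {q}", "{q}"]


def generate_synthetic(seeds: List[Example]) -> List[Example]:
    """Step 2: expand each seed into several variants (stand-in for a generator model)."""
    return [
        {"question": template.format(q=seed["question"]), "answer": seed["answer"]}
        for seed in seeds
        for template in TEMPLATES
    ]


def critic_accepts(example: Example, seen: set) -> bool:
    """Step 3: stand-in critic that rejects duplicates and empty answers."""
    key = (example["question"], example["answer"])
    if key in seen or not example["answer"].strip():
        return False
    seen.add(key)
    return True


def build_training_set(tax: Dict[str, List[Example]]) -> List[Example]:
    """Run steps 2 and 3; the returned list is what step 4 (training) would consume."""
    seen: set = set()
    kept: List[Example] = []
    for seeds in tax.values():
        for candidate in generate_synthetic(seeds):
            if critic_accepts(candidate, seen):
                kept.append(candidate)
    return kept


training_set = build_training_set(taxonomy)
print(len(training_set), "examples kept for training")
```

The point of the sketch is the shape of the data flow: a small amount of human input fans out into a larger candidate set, and a filtering stage prunes it before any training happens.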

InstructLab is accessible to developers and domain experts who may lack the data science expertise normally required to fine-tune LLMs. The InstructLab methodology lets teams collaboratively add data or skills suited to their business use case to their chosen model for training, which shortens time to value.

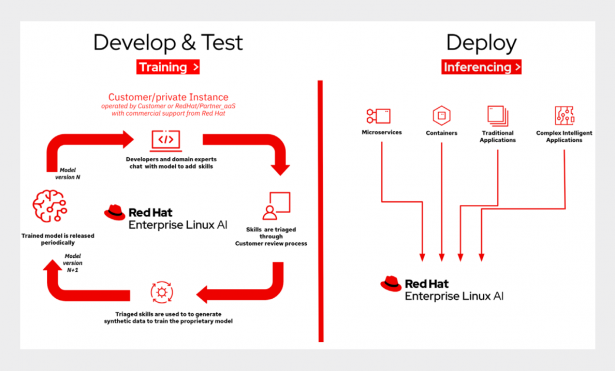

Train and deploy anywhere

RHEL AI helps organizations accelerate the process of going from proof of concept to production server-based deployments by providing all the tools needed and the ability to train, tune, and deploy these models where the data lives, anywhere across the hybrid cloud. The deployed models can then be used by various services and applications within your company.

Figure 2. InstructLab approach used in the RHEL AI deployment

When organizations are ready, RHEL AI also provides an on-ramp to Red Hat OpenShift® AI, for training, tuning, and serving these models at scale across a distributed cluster environment using the same Granite models and InstructLab approach used in the RHEL AI deployment.

Supported Platforms

Red Hat Enterprise Linux AI is supported on the following platforms:

Lenovo ThinkSystem SR675 V3

Lenovo ThinkSystem SR675 V3 delivers optimal performance for Artificial Intelligence (AI), High Performance Computing (HPC) and graphical workloads across an array of industries. As more workloads leverage the capabilities of accelerators, the demand for GPUs increases. The ThinkSystem SR675 V3 delivers an optimized enterprise-grade solution for deploying accelerated HPC and AI workloads in production, maximizing system performance.

The ThinkSystem SR675 V3 is built on one or two 4th or 5th Generation AMD EPYC™ Processors and is designed to support the vast NVIDIA Hopper, Lovelace and Ampere datacenter portfolio and AMD Instinct™ MI Series Accelerators. It delivers performance optimized for your workload, be it visualization, rendering or computationally intensive HPC and AI.

Lenovo ThinkSystem SR650a V4

The Lenovo ThinkSystem SR650a V4 is designed to accelerate GPU-intensive workloads like AI, deep learning, and HPC. Powered by Intel® Xeon® 6 processors, it delivers exceptional GPU density, advanced storage, and PCIe Gen5 connectivity for peak performance. It supports up to 4x double-width or 8x single-width GPUs and offers up to 8x E3.S NVMe drives for fast, low-latency data access.

The SR650a V4 is built for high-performance AI workloads, offering GPU density and efficiency to accelerate innovation. Features like SSD Predictive Failure Analysis reduce downtime, while enhanced security with a federated directory simplifies access and protects infrastructure. Outstanding reliability, availability, and serviceability (RAS) and a high-efficiency design can improve your business environment and help reduce operational costs.

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ThinkSystem®

The following terms are trademarks of other companies:

AMD, AMD EPYC™, and AMD Instinct™ are trademarks of Advanced Micro Devices, Inc.

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

IBM®, IBM Granite®, IBM watsonx®, watsonx®, and watsonx.ai® are trademarks of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.
