Published: 23 Jun 2025

Form Number: LP2240

PDF size: 7 pages, 658 KB

Abstract

MLCommons® announced new results for its industry-standard MLPerf® Inference v5.0 benchmark suite, which provides insight into the performance of machine learning (ML) systems. This article explores Lenovo’s contributions to MLPerf Inference v5.0. The combination of recent hardware and software advances optimized for generative AI has led to dramatic performance improvements over the past year.

Introduction

The release of the MLPerf Inference: Datacenter v5.0 benchmark suite by MLCommons marks another significant milestone in the evaluation of machine learning performance. By measuring how fast systems can process inputs and produce results using a trained model, these comprehensive benchmarks provide insight into datacenter AI capabilities, focusing on inference across diverse hardware configurations. We are proud to participate and are excited to share our outstanding results from the latest Lenovo ThinkSystem portfolio, including the SR650a V4, SR680a V3, and SR780a V3.

With groundbreaking performance results, Lenovo shines as a global leader in AI infrastructure. In the latest MLPerf Inference benchmarks, three of Lenovo’s powerful ThinkSystem servers (the SR650a V4, SR680a V3, and SR780a V3) delivered leading results across a wide spectrum of AI workloads. These systems, equipped with cutting-edge NVIDIA GPUs, achieved top-tier results in critical benchmarks using models such as GPT-J, Llama 2, Mixtral, Stable Diffusion, ResNet50, and more. Notably, the ThinkSystem SR650a V4 emerged as the top-performing general-purpose AI inferencing system, delivering unmatched versatility for both datacenter and colocation AI deployments.

Highlights from MLPerf Inference: Data Center Performance

Key highlights from the MLPerf results include benchmarks using these servers:

- SR650a V4 - 2U server with 4x NVIDIA H100 NVL PCIe GPUs

- SR680a V3 - 8U air-cooled server with 8x NVIDIA H200 SXM GPUs

- SR780a V3 - 5U water-cooled server with 8x NVIDIA H200 SXM GPUs

ThinkSystem SR650a V4 with 4x NVIDIA H100-NVL-94GB

Tested with GPT-J, Mixtral, Stable Diffusion, and other models, the SR650a V4 took 1st place among general-purpose AI inferencing systems and use cases. Key tests included:

- Vision Tasks: ResNet50 and RetinaNet (both server & offline)

- Medical Imaging: 3D-UNet (99 and 99.9 offline)

- NLP & Generative AI: GPT-J (99.9 both server & offline; 99 offline)

- Diffusion Models: Stable Diffusion XL (both server & offline)

- Mixture of Experts: Mixtral-8x7B (both server & offline)

Its consistent top-tier performance across diverse use cases demonstrates its versatility and efficiency, especially for enterprises seeking robust inferencing capabilities.

ThinkSystem SR680a V3 with 8x NVIDIA H200-SXM-141GB

One of Lenovo’s high-performance computing servers, the SR680a V3 excelled in large language model workloads, taking 1st place in:

- LLMs: Llama 2-70B (99 and 99.9 offline)

- Vision: ResNet50 offline

Designed to maximize GPU performance, the SR680a V3 also achieved 2nd- and 3rd-place results in interactive LLM inferencing, Stable Diffusion XL, Mixtral-8x7B, and RGAT. This balance of top-end performance and flexibility makes the SR680a V3 ideal for demanding AI-heavy enterprises and research environments.

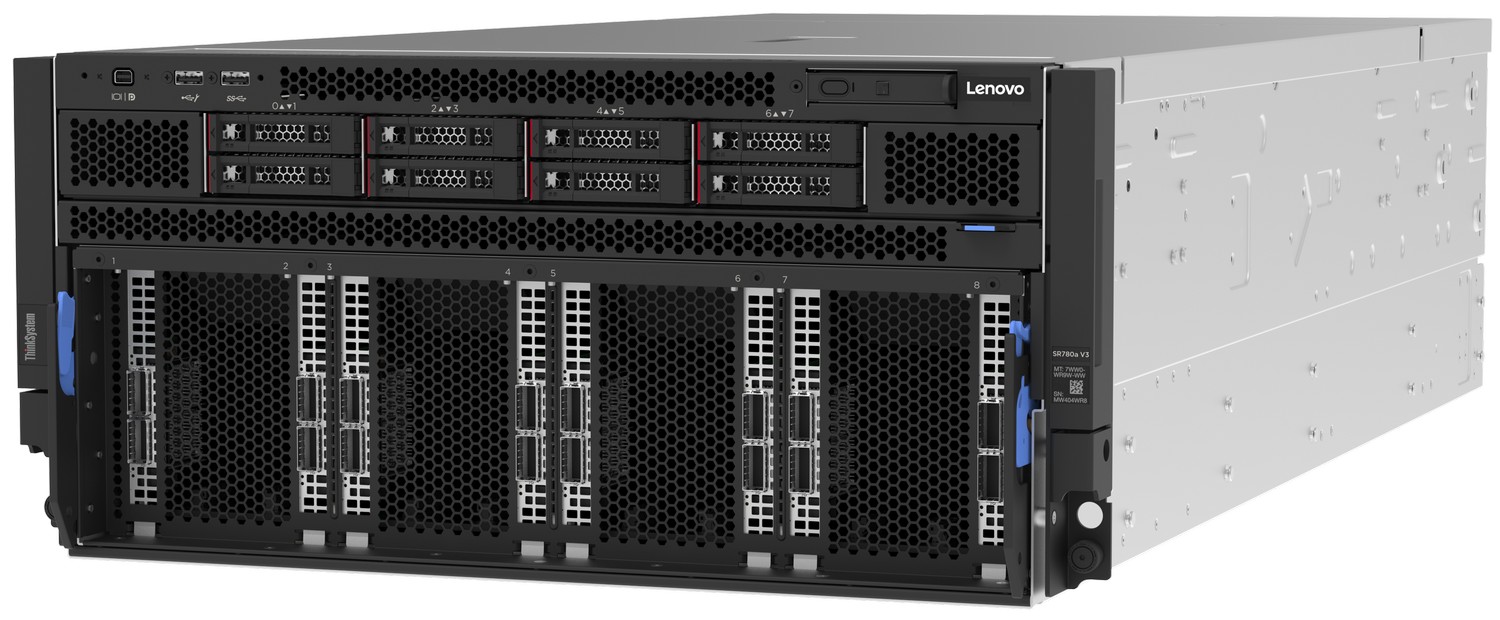

ThinkSystem SR780a V3 with 8x NVIDIA H200-SXM-141GB

With dual 5th Gen Intel® Xeon® Scalable processors, the ThinkSystem SR780a V3 offers the performance needed for compute-demanding AI and HPC workloads. It proudly took first place among the most advanced AI and HPC inferencing systems and impressed across a wide range of benchmarks, with 1st-place finishes in:

- NLP & LLMs: GPT-J and Llama 2-70B (interactive offline)

- Mixture of Experts: Mixtral-8x7B offline

- Graph Neural Networks: RGAT offline

It also performed strongly on vision and diffusion model workloads, achieving 2nd- and 3rd-place results, which underlines its strength as a general-purpose inferencing powerhouse for advanced AI applications.

Lenovo Hybrid AI Advantage with NVIDIA

Lenovo Hybrid AI Advantage™ with NVIDIA helps organizations improve productivity, increase agility, and innovate with trust through standardized and accelerated development and deployment of AI use case solutions. Lenovo Hybrid AI Advantage brings the power of the Lenovo AI library and validated, tested hybrid AI factories (hybrid AI platforms, workstations, servers, storage, network, software, models, services, and partner ecosystem) to enterprises.

The hybrid AI factory is designed to support hybrid deployments at the edge, in data centers and colocation facilities (colos), and at business locations, with cloud integration. It offers flexibility in model and infrastructure choice and enables a wide range of AI applications, agentic and machine learning workflows, and real-time data analysis. Lenovo’s hybrid AI platforms power the hybrid AI factory and can scale from a single server with just four GPUs as a starter environment to a rack scalable unit (SU) as a turnkey infrastructure solution with a choice of partner technology.

Future-focused AI with Lenovo

As generative AI, large language models, and inferencing continue to evolve, Lenovo is an established industry leader. These benchmark-topping results not only validate current capabilities but also demonstrate our commitment to AI readiness today and in the future, whether in the datacenter or the cloud. Through our partnership ecosystem with industry leaders like NVIDIA, Lenovo is building the foundation for a more intelligent, connected, and autonomous future.

Lenovo’s leadership in the latest MLPerf Inference results showcases more than just benchmark dominance: it emphasizes our vision for AI that is inclusive, scalable, and enterprise-ready. The ThinkSystem SR650a V4, SR680a V3, and SR780a V3 exemplify Lenovo’s commitment to delivering innovative, industry-leading infrastructure for every stage of the AI journey. Whether you're developing the next breakthrough in generative AI or deploying edge inferencing at scale, Lenovo offers future-focused solutions you can trust.

For more information

For more information, see the following resources:

- Explore Lenovo AI solutions: https://www.lenovo.com/us/en/servers-storage/solutions/ai/

- MLCommons®, the open engineering consortium and leading force behind MLPerf, has released new results for the MLPerf benchmark suites:

- Benchmark results: https://mlcommons.org/benchmarks/training/

- Latest news about MLCommons: https://mlcommons.org/news-blog

Author

Aaron Gilbert works in Worldwide AI Solutions Marketing at Lenovo. His focus is the Lenovo Hybrid AI Platform with NVIDIA.

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

Lenovo Hybrid AI Advantage

ThinkSystem®

The following terms are trademarks of other companies:

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Other company, product, or service names may be trademarks or service marks of others.
