Author: Carlos Huescas

Published: 10 Jul 2025

Form Number: LP2254

PDF size: 7 pages, 438 KB

Abstract

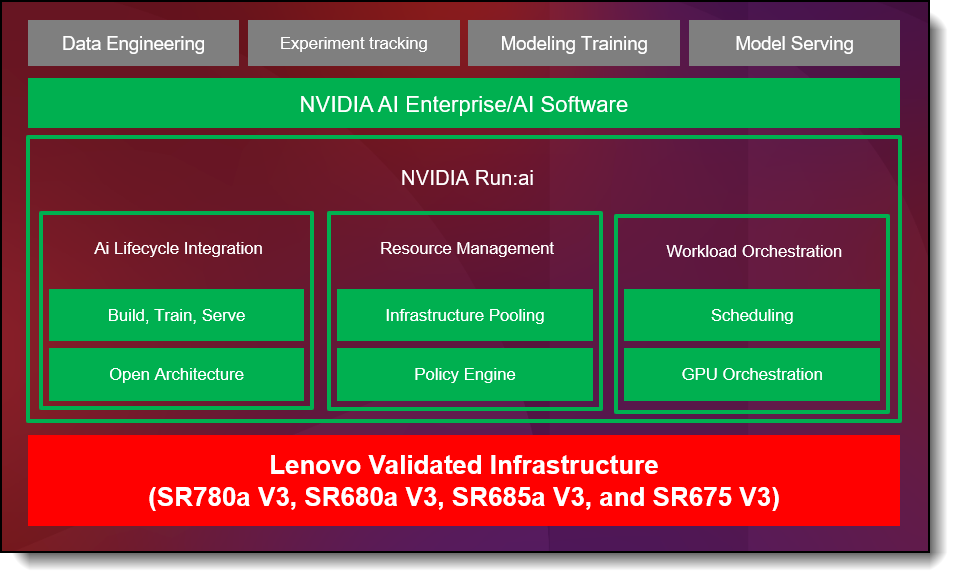

As AI initiatives evolve from small-scale experimentation to full-scale production, enterprises must overcome challenges in resource allocation, team scalability, and infrastructure efficiency. Lenovo, in collaboration with NVIDIA, offers a unified solution—NVIDIA Run:ai on Lenovo AI Platforms—that streamlines AI operations by orchestrating GPU workloads, optimizing infrastructure usage, and enabling seamless collaboration between IT and data science teams. Leveraging Lenovo’s robust AI infrastructure and NVIDIA Run:ai’s dynamic orchestration capabilities, organizations can accelerate time-to-value, enhance ROI, and scale AI initiatives with confidence.

Introduction

As AI workloads mature from pilot experimentation to enterprise-scale production, organizations face increased pressure to operationalize machine learning efficiently, maximize infrastructure ROI, and support ever-expanding AI teams. In partnership with NVIDIA, Lenovo introduces a unified solution that accelerates this journey: NVIDIA Run:ai on Lenovo AI Platforms.

This combination addresses common friction points across the AI lifecycle, from experimentation to deployment, by unifying GPU resource management, improving workload orchestration, and supporting collaboration between IT and data science teams.

By combining the Lenovo Hybrid AI 285 and 289 platforms with NVIDIA Run:ai’s intelligent GPU orchestration, enterprises can fully unlock the value of their AI investments, scale operations with confidence, and reduce time-to-insight for data-driven outcomes.

Business and Technical Challenges

Despite substantial investment in AI hardware and software, many organizations struggle to efficiently scale their AI initiatives.

Key challenges include:

- For AI Practitioners:

  - Inconsistent access to GPU resources hampers experimentation and training cycles.

  - Fragmented environments delay progress from proof of concept to deployment.

  - Contention between teams results in idle time and lost productivity.

- For IT Leaders:

  - GPU infrastructure is often overprovisioned or underutilized due to a lack of visibility.

  - Static resource allocation fails to align with dynamic AI workloads.

  - Usage policies are difficult to enforce across distributed teams and environments.

- For Executives:

  - AI investments yield diminishing returns without centralized orchestration.

  - Lack of observability across workloads delays AI roadmap execution.

  - Cloud overspend and infrastructure inefficiencies erode competitive advantage.

Solution Overview: NVIDIA Run:ai on Lenovo Infrastructure

NVIDIA Run:ai is a Kubernetes-native AI workload orchestration platform designed to maximize the efficiency, agility, and governance of GPU resources in hybrid and on-prem environments. When deployed on Lenovo’s purpose-built AI platforms, it delivers a scalable and flexible foundation for production-grade AI.

Core capabilities of the solution:

- Fractional GPU allocation to optimize resource utilization.

- Priority-based workload scheduling to ensure mission-critical jobs are completed on time.

- Elastic scaling of training and inference jobs across distributed compute clusters.

- Lifecycle support for AI development, from Jupyter Notebooks to model serving.

- Policy-based governance for access control, security, and compliance.
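To make the first two capabilities concrete, the following is a minimal sketch of fractional GPU allocation combined with priority ordering. It is an illustration under stated assumptions, not Run:ai's scheduling algorithm or API: the `Gpu`, `Job`, and `schedule` names are hypothetical, a lower `priority` number is assumed to mean a more urgent job, and placement uses a simple first-fit rule.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    gpu_id: str
    free: float = 1.0  # fraction of this GPU that is still unallocated


@dataclass
class Job:
    name: str
    priority: int        # hypothetical convention: lower number = more urgent
    gpu_fraction: float  # requested share of one GPU, e.g. 0.5


def schedule(jobs, gpus, eps=1e-9):
    """First-fit placement of fractional GPU requests, most urgent job first.

    Returns (placements, pending): placements maps job name -> GPU id,
    pending lists the names of jobs that could not be placed.
    """
    placements, pending = {}, []
    # sorted() is stable, so jobs with equal priority keep submission order.
    for job in sorted(jobs, key=lambda j: j.priority):
        for gpu in gpus:
            if gpu.free + eps >= job.gpu_fraction:
                gpu.free -= job.gpu_fraction
                placements[job.name] = gpu.gpu_id
                break
        else:  # no GPU had enough free capacity for this job
            pending.append(job.name)
    return placements, pending


gpus = [Gpu("gpu0"), Gpu("gpu1")]
jobs = [
    Job("notebook-a", priority=2, gpu_fraction=0.5),
    Job("train-critical", priority=0, gpu_fraction=1.0),
    Job("inference-b", priority=1, gpu_fraction=0.25),
    Job("notebook-c", priority=2, gpu_fraction=0.5),
    Job("batch-d", priority=3, gpu_fraction=0.5),
]
placements, pending = schedule(jobs, gpus)
# placements == {"train-critical": "gpu0", "inference-b": "gpu1", "notebook-a": "gpu1"}
# pending == ["notebook-c", "batch-d"]
```

The urgent training job receives a whole GPU first, while the smaller inference and notebook requests share the second GPU. A production scheduler would also need to handle preemption, quotas, and GPU memory isolation, none of which this sketch models.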

NVIDIA Run:ai System Components

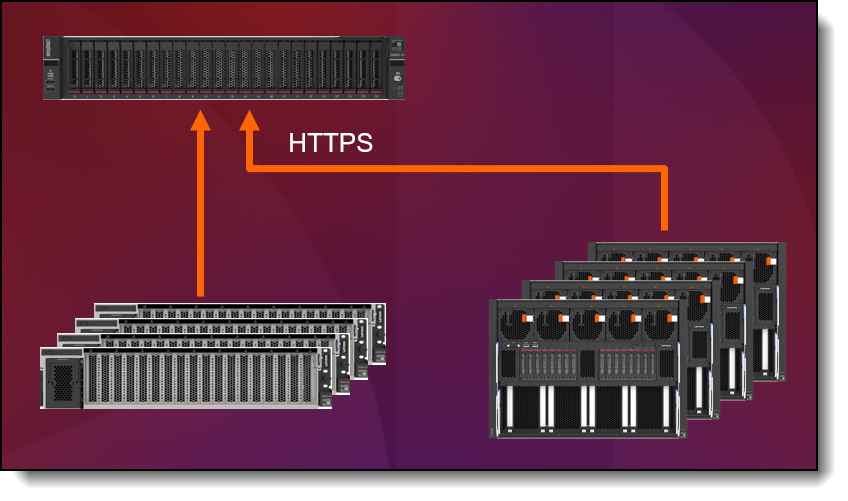

NVIDIA Run:ai consists of two components, both installed on Kubernetes. The NVIDIA Run:ai control plane provides resource management, handles workload submission, and provides cluster monitoring and analytics. The NVIDIA Run:ai cluster provides scheduling and workload management, extending native Kubernetes capabilities.

The components are as follows:

- Run:ai Control Plane: Centralized resource management, user access policies, and workload prioritization, built on Lenovo ThinkSystem servers. Refer to Control Plane System Requirements for specifications and recommendations.

- Run:ai Cluster: GPU scheduling, workload orchestration, and Kubernetes-native scalability, built on Lenovo AI servers. Refer to the Lenovo Hybrid AI 285 Platform Guide for specifications and recommendations.

Role-Based Value Proposition

NVIDIA Run:ai delivers distinct value to each stakeholder. The joint Lenovo and NVIDIA solution aligns with the priorities of IT managers, AI practitioners, and platform admins, driving technical efficiency, operational control, and strategic impact.

For IT Managers:

- Centralized Control: Manage multiple GPU clusters from a single console.

- Usage Analytics: Gain insights into GPU allocation, job performance, and bottlenecks.

- Policy Enforcement: Set consumption thresholds, scheduling rules, and user permissions.

- Authentication & RBAC: Integrate with enterprise identity platforms (e.g., LDAP, SSO).

- Kubernetes-Native Design: Install and manage using familiar cloud-native operations.
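Usage analytics and consumption thresholds work together: analytics reveal how much allocated GPU capacity sits idle, and thresholds flag projects that exceed an acceptable level. The following minimal sketch shows that calculation under stated assumptions. The `Sample` record, its fields, and the idle-share metric are hypothetical and do not reflect Run:ai's actual metrics or APIs; samples are assumed to be taken at a fixed interval.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    project: str
    allocated_gpus: float  # GPUs allocated to the project when the sample was taken
    utilization: float     # mean compute utilization of those GPUs, 0.0 to 1.0


def idle_share_by_project(samples):
    """Share of each project's allocated GPU time that went unused.

    Assumes samples are taken at a fixed interval, so summing allocated_gpus
    gives allocated GPU-intervals.
    """
    allocated, busy = {}, {}
    for s in samples:
        allocated[s.project] = allocated.get(s.project, 0.0) + s.allocated_gpus
        busy[s.project] = busy.get(s.project, 0.0) + s.allocated_gpus * s.utilization
    return {
        p: (1.0 - busy[p] / allocated[p]) if allocated[p] > 0 else 0.0
        for p in allocated
    }


def projects_over_threshold(samples, max_idle_share):
    """Projects whose idle share exceeds a (hypothetical) consumption threshold."""
    shares = idle_share_by_project(samples)
    return sorted(p for p, share in shares.items() if share > max_idle_share)


samples = [
    Sample("vision", allocated_gpus=4, utilization=0.9),
    Sample("vision", allocated_gpus=4, utilization=0.7),
    Sample("nlp", allocated_gpus=2, utilization=0.1),
    Sample("nlp", allocated_gpus=2, utilization=0.3),
]
shares = idle_share_by_project(samples)          # vision ~0.2, nlp ~0.8
flagged = projects_over_threshold(samples, 0.5)  # ["nlp"]
```

Weighting utilization by allocation is the key design choice: a project holding four GPUs at 10% utilization wastes more capacity than one holding a single GPU at the same rate.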

For AI Practitioners:

- Self-Service GPU Access: Launch training, fine-tuning, or inference jobs on-demand.

- Interactive Development: Run uninterrupted Jupyter Notebook sessions using fractional GPUs.

- Model Lifecycle Integration: Cover the path from data preparation to deployment, with support for key tools such as PyTorch, TensorFlow, Ray, and Kubeflow.

- Scalable Training & Serving: Leverage multiple GPUs with support for auto-scaling.

For Platform Admins:

- Team Structuring: Map projects, teams, and departments for intelligent resource allocation.

- User and Access Control: Assign permissions aligned to org structure and security policies.

- Scheduling and Monitoring: Allocate resources based on workload priority and urgency.

- Cost Optimization: Reduce idle GPU time and increase infrastructure ROI.
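Mapping departments and projects to GPU quotas is what lets admins combine guaranteed allocations with opportunistic use of idle capacity. The following minimal sketch illustrates that idea under stated assumptions: the `Project`, `Department`, and `Cluster` classes and the `admit` method are hypothetical, and the guaranteed versus over-quota split is a simplified model, not Run:ai's implementation. Requests within both the project and department quota are treated as guaranteed; requests beyond quota borrow idle GPUs and are treated as reclaimable.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    name: str
    quota: float                     # GPUs guaranteed to this project
    guaranteed_in_use: float = 0.0
    over_quota_in_use: float = 0.0   # borrowed, reclaimable GPUs


@dataclass
class Department:
    name: str
    quota: float                     # GPUs guaranteed to the department as a whole
    projects: dict = field(default_factory=dict)

    def guaranteed_in_use(self) -> float:
        return sum(p.guaranteed_in_use for p in self.projects.values())

    def total_in_use(self) -> float:
        return sum(p.guaranteed_in_use + p.over_quota_in_use
                   for p in self.projects.values())


@dataclass
class Cluster:
    total_gpus: float
    departments: dict = field(default_factory=dict)

    def free(self) -> float:
        return self.total_gpus - sum(d.total_in_use() for d in self.departments.values())

    def admit(self, dept_name: str, project_name: str, gpus: float) -> str:
        """Classify a request as 'guaranteed', 'over-quota', or 'pending'."""
        dept = self.departments[dept_name]
        project = dept.projects[project_name]
        if gpus > self.free():
            # Not enough idle GPUs; this sketch does not preempt borrowed capacity.
            return "pending"
        fits_project = project.guaranteed_in_use + gpus <= project.quota
        fits_dept = dept.guaranteed_in_use() + gpus <= dept.quota
        if fits_project and fits_dept:
            project.guaranteed_in_use += gpus
            return "guaranteed"
        # Beyond quota: borrow idle GPUs, tracked separately as reclaimable.
        project.over_quota_in_use += gpus
        return "over-quota"


research = Department("research", quota=8, projects={
    "vision": Project("vision", quota=4),
    "nlp": Project("nlp", quota=4),
})
product = Department("product", quota=8, projects={"search": Project("search", quota=8)})
cluster = Cluster(total_gpus=16, departments={"research": research, "product": product})

statuses = [
    cluster.admit("research", "vision", 4),  # "guaranteed"
    cluster.admit("research", "vision", 2),  # "over-quota": exceeds the vision quota
    cluster.admit("research", "nlp", 4),     # "guaranteed"
    cluster.admit("product", "search", 8),   # "pending": only 6 GPUs are free
]
```

The last request stays pending even though it is within the product department's quota, because the two GPUs borrowed by `vision` are never reclaimed here. Reclaiming borrowed capacity when a team needs its guaranteed share is the job of the scheduler's preemption logic, which this sketch intentionally leaves out.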

Subscription Model and Part Number Information

Run:ai is licensed per GPU with options for education, enterprise, and public sector usage. The following table lists the ordering part numbers from Lenovo.

Author

Carlos Huescas is the Worldwide Product Manager for NVIDIA software at Lenovo. He specializes in High Performance Computing and AI solutions. He has more than 15 years of experience as an IT architect and in product management positions across several high-tech companies.

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ThinkSystem®

Other company, product, or service names may be trademarks or service marks of others.
