Abstract

High-performance computing (HPC) solutions require high-bandwidth, low-latency components with CPU offloads to achieve the highest server efficiency and application productivity. The Mellanox ConnectX-3 and ConnectX-3 Pro network adapters for Lenovo servers deliver the I/O performance that meets these requirements.

This product guide provides essential presales information to understand the ConnectX-3 offerings and their key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about ConnectX-3 network adapters and consider their use in IT solutions.

Withdrawn: The adapters described in this product guide are now withdrawn from marketing.

Introduction

High-performance computing (HPC) solutions require high-bandwidth, low-latency components with CPU offloads to achieve the highest server efficiency and application productivity. The Mellanox ConnectX-3 and ConnectX-3 Pro network adapters for System x® servers deliver the I/O performance that meets these requirements.

Mellanox's ConnectX-3 and ConnectX-3 Pro ASICs deliver low latency, high bandwidth, and computing efficiency for performance-driven server applications. Efficiency comes from offloading routine activities from the CPU, which leaves more processor power available for the application. Network protocol processing and data movement, such as InfiniBand RDMA and Send/Receive semantics, are completed in the adapter without CPU intervention. RDMA support extends to virtual servers when SR-IOV is enabled. Mellanox's ConnectX-3 advanced acceleration technology enables higher cluster efficiency and scalability of up to tens of thousands of nodes.

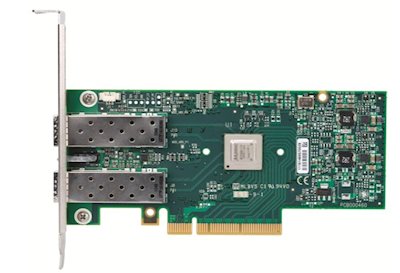

Figure 1. Mellanox ConnectX-3 10GbE Adapter for System x (3U bracket shown)

Did you know?

Mellanox Ethernet and InfiniBand network server adapters provide a high-performing interconnect solution for enterprise data centers, Web 2.0, cloud computing, and HPC environments, where low latency and interconnect efficiency are paramount. In addition, Virtual Protocol Interconnect (VPI) offers flexibility in designating ports as InfiniBand or Ethernet.

With the ConnectX-3 Pro adapter, you can use the VXLAN and NVGRE hardware offload engines to accelerate overlay network processing, which is ideal for public and private cloud configurations.

Part number information

Table 1 shows the part numbers and feature codes for the adapters.


| Part number | Feature code | Description |

|---|---|---|

| 00D9690 | A3PM | Mellanox ConnectX-3 10 GbE Adapter |

| 00D9550 | A3PN | Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter |

| 00FP650 | A5RK | Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter |

| 7ZT7A00501 | AUKR | ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter |

The part numbers include the following:

- One adapter with a full-height (3U) bracket attached

- Additional low-profile (2U) bracket included in the box

- Documentation

Figure 2 shows the Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter. The shipping adapter includes a heatsink over the ASIC, which the figure does not show.

Figure 2. Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter for System x with 3U bracket (attached heatsink removed)

Supported transceivers and cables

The 10GbE Adapter (00D9690) supports the direct-attach copper (DAC) twin-ax cables, transceivers, and optical cables that are listed in the following table.

| Part number | Feature code | Description |

|---|---|---|

| Direct Attach Copper (DAC) cables | ||

| 00D6288 | A3RG | 0.5 m Passive DAC SFP+ Cable |

| 90Y9427 | A1PH | 1 m Passive DAC SFP+ Cable |

| 90Y9430 | A1PJ | 3 m Passive DAC SFP+ Cable |

| 90Y9433 | A1PK | 5 m Passive DAC SFP+ Cable |

| 00D6151 | A3RH | 7 m Passive DAC SFP+ Cable |

| 95Y0323 | A25A | 1 m Active DAC SFP+ Cable |

| 95Y0326 | A25B | 3 m Active DAC SFP+ Cable |

| 95Y0329 | A25C | 5 m Active DAC SFP+ Cable |

| SFP+ Transceivers | ||

| 46C3447 | 5053 | SFP+ SR Transceiver |

| 49Y4216 | 0069 | Brocade 10Gb SFP+ SR Optical Transceiver |

| 49Y4218 | 0064 | QLogic 10Gb SFP+ SR Optical Transceiver |

| 4TC7A78615 | BNDR | ThinkSystem Accelink 10G SR SFP+ Ethernet transceiver |

| Optical Cables | ||

| 00MN499 | ASR5 | Lenovo 0.5m LC-LC OM3 MMF Cable |

| 00MN502 | ASR6 | Lenovo 1m LC-LC OM3 MMF Cable |

| 00MN505 | ASR7 | Lenovo 3m LC-LC OM3 MMF Cable |

| 00MN508 | ASR8 | Lenovo 5m LC-LC OM3 MMF Cable |

| 00MN511 | ASR9 | Lenovo 10m LC-LC OM3 MMF Cable |

| 00MN514 | ASRA | Lenovo 15m LC-LC OM3 MMF Cable |

| 00MN517 | ASRB | Lenovo 25m LC-LC OM3 MMF Cable |

| 00MN520 | ASRC | Lenovo 30m LC-LC OM3 MMF Cable |

The FDR adapters (00D9550, 00FP650, and 7ZT7A00501) support the direct-attach copper (DAC) twin-ax cables, transceivers, and optical cables that are listed in the following table.

| Part number | Feature code | Description |

|---|---|---|

| Direct attach copper (DAC) cables - InfiniBand | ||

| 44T1364 | ARZA | 0.5m Mellanox QSFP Passive DAC Cable |

| 00KF002 | ARZB | 0.75m Mellanox QSFP Passive DAC Cable |

| 00KF003 | ARZC | 1m Mellanox QSFP Passive DAC Cable |

| 00KF004 | ARZD | 1.25m Mellanox QSFP Passive DAC Cable |

| 00KF005 | ARZE | 1.5m Mellanox QSFP Passive DAC Cable |

| 00KF006 | ARZF | 3m Mellanox QSFP Passive DAC Cable |

| Optical cables - InfiniBand | ||

| 00KF007 | ARYC | 3m Mellanox Active IB FDR Optical Fiber Cable |

| 00KF008 | ARYD | 5m Mellanox Active IB FDR Optical Fiber Cable |

| 00KF009 | ARYE | 10m Mellanox Active IB FDR Optical Fiber Cable |

| 00KF010 | ARYF | 15m Mellanox Active IB FDR Optical Fiber Cable |

| 00KF011 | ARYG | 20m Mellanox Active IB FDR Optical Fiber Cable |

| 00KF012 | ARYH | 30m Mellanox Active IB FDR Optical Fiber Cable |

| 40Gb Ethernet (QSFP) to 10Gb Ethernet (SFP+) Conversion | ||

| 00KF013 | ARZG | 3m Mellanox QSFP Passive DAC Hybrid Cable |

| 00D9676 | ARZH | Mellanox QSFP to SFP+ adapter |

| 40Gb Ethernet (QSFP) - 40GbE copper uses the QSFP+ to QSFP+ cables directly | ||

| 49Y7890 | A1DP | 1 m QSFP+ to QSFP+ Cable |

| 49Y7891 | A1DQ | 3 m QSFP+ to QSFP+ Cable |

| 00D5810 | A2X8 | 5m QSFP-to-QSFP cable |

| 00D5813 | A2X9 | 7m QSFP-to-QSFP cable |

| 40Gb Ethernet (QSFP) - 40GbE optical uses QSFP+ transceiver with MTP optical cables | ||

| 49Y7884 | A1DR | QSFP+ 40GBASE-SR4 Transceiver |

| 00VX003 | AT2U | Lenovo 10m QSFP+ MTP-MTP OM3 MMF Cable |

| 00VX005 | AT2V | Lenovo 30m QSFP+ MTP-MTP OM3 MMF Cable |

The following figure shows the Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter.

Figure 3. Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter

Features

The Mellanox ConnectX-3 10GbE Adapter has the following features:

- Two 10 Gigabit Ethernet ports

- Low-profile form factor adapter with 2U bracket (3U bracket available for CTO orders)

- PCI Express 3.0 x8 host-interface (PCIe 2.0 and 1.1 compatible)

- SR-IOV support: up to 16 virtual functions with KVM and Hyper-V (OS dependent); the adapter itself supports a maximum of 127 virtual functions

- Enables low-latency RDMA over Ethernet (supported with both non-virtualized and SR-IOV enabled virtualized servers), with latency as low as 1 µs

- TCP/UDP/IP stateless offload in hardware

- Traffic steering across multiple cores

- Intelligent interrupt coalescence

- Industry-leading throughput and latency performance

- Software compatible with standard TCP/UDP/IP stacks

- Microsoft VMQ / VMware NetQueue support

- Legacy and UEFI PXE network boot support

- Supports iSCSI through a software iSCSI initiator in NIC mode with the NIC driver
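The SR-IOV limits in the list above combine simply: the number of virtual functions (VFs) you can enable is capped by whichever is lower, the hypervisor's limit or the adapter's 127-VF maximum. The following Python sketch captures that arithmetic. `plan_vfs` and its parameters are hypothetical names, and the 16-VF default reflects the KVM and Hyper-V figure quoted in this guide, which is OS dependent.

```python
# VF limits quoted in this guide; the hypervisor limit is OS dependent.
ADAPTER_MAX_VFS = 127
HYPERVISOR_VF_LIMIT = 16  # KVM and Hyper-V, per this guide


def plan_vfs(requested: int, hypervisor_limit: int = HYPERVISOR_VF_LIMIT) -> int:
    """Return how many VFs can be enabled (hypothetical planning helper)."""
    if requested < 0:
        raise ValueError("requested VF count must be non-negative")
    # The effective ceiling is the lower of the hypervisor and adapter limits.
    ceiling = min(hypervisor_limit, ADAPTER_MAX_VFS)
    return min(requested, ceiling)


print(plan_vfs(8))    # 8
print(plan_vfs(32))   # 16: capped by the hypervisor limit
print(plan_vfs(200, hypervisor_limit=256))  # 127: capped by the adapter
```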

The Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter has the following features:

- Two QSFP ports supporting FDR-14 InfiniBand or 40 Gb Ethernet

- Low-profile form factor adapter with 2U bracket (3U bracket available for CTO orders)

- PCI Express 3.0 x8 host-interface (PCIe 2.0 and 1.1 compatible)

- Support for InfiniBand FDR speeds of up to 56 Gbps (auto-negotiates FDR-10, QDR, DDR, and SDR)

- Support for Virtual Protocol Interconnect (VPI), which lets one adapter serve both InfiniBand and 10/40 Gb Ethernet. Three port configurations are supported:

  - 2 ports InfiniBand

  - 2 ports Ethernet

  - 1 port InfiniBand and 1 port Ethernet

- SR-IOV support: up to 16 virtual functions with KVM and Hyper-V (OS dependent); the adapter itself supports a maximum of 127 virtual functions

- Enables low-latency RDMA over 40Gb Ethernet (supported with both non-virtualized and SR-IOV enabled virtualized servers), with latency as low as 1 µs

- Microsoft VMQ / VMware NetQueue support

- Sub-1 µs InfiniBand MPI ping latency

- 10 GbE support through QSFP-to-SFP+ conversion

- Traffic steering across multiple cores

- Legacy and UEFI PXE network boot support (Ethernet mode only)
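The three VPI port configurations can be checked before a driver is configured. The sketch below assumes a Linux host using the mlx4_core driver, whose `port_type_array` module parameter takes 1 for InfiniBand and 2 for Ethernet; neither the driver nor the parameter is described in this guide, so verify both against your driver documentation. It also assumes that in the mixed configuration port 1 is InfiniBand and port 2 is Ethernet. `vpi_modprobe_options` is a hypothetical helper.

```python
from enum import IntEnum


class PortType(IntEnum):
    # Assumed mlx4_core "port_type_array" encoding: 1 = InfiniBand, 2 = Ethernet.
    IB = 1
    ETH = 2


# The three VPI configurations from the list above, as (port 1, port 2).
# Assumption: in the mixed configuration, port 1 is InfiniBand.
SUPPORTED_CONFIGS = {
    (PortType.IB, PortType.IB),
    (PortType.ETH, PortType.ETH),
    (PortType.IB, PortType.ETH),
}


def vpi_modprobe_options(port1: PortType, port2: PortType) -> str:
    """Validate a VPI port pair and render a hypothetical modprobe.d line."""
    if (port1, port2) not in SUPPORTED_CONFIGS:
        raise ValueError(
            f"unsupported VPI configuration: {port1.name}/{port2.name}"
        )
    return f"options mlx4_core port_type_array={int(port1)},{int(port2)}"


print(vpi_modprobe_options(PortType.IB, PortType.ETH))
# options mlx4_core port_type_array=1,2
```

Rejecting unsupported pairs up front avoids writing a configuration that the driver would refuse at load time.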

The Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter and ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter have the same features as the ConnectX-3 40GbE / FDR IB VPI Adapter with these additions:

- Mezzanine LOM Generation 2 (ML2) form factor

- Offers NVGRE hardware offloads

- Offers VXLAN hardware offloads

Performance

Based on Mellanox's ConnectX-3 technology, these adapters deliver high throughput in all network environments by removing the I/O bottlenecks in mainstream servers that limit application performance. With the FDR VPI IB/E Adapter, servers can achieve up to 56 Gbps transmit and receive bandwidth. Hardware-based InfiniBand transport and IP over InfiniBand (IPoIB) stateless offload engines handle the segmentation, reassembly, and checksum calculations that otherwise burden the host processor.
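The 56 Gbps FDR figure is the aggregate signaling rate of a 4x link (four lanes at 14.0625 Gbaud). FDR and FDR-10 use 64b/66b encoding, while SDR, DDR, and QDR use 8b/10b, so the usable data rate is somewhat lower than the signaling rate. The per-lane rates and encodings in this sketch come from the InfiniBand Architecture Specification rather than from this guide, and `link_rates` is a hypothetical helper.

```python
from fractions import Fraction

# Per-lane signaling rate (Gbaud) and line-code efficiency, per the IBTA
# specification (not taken from this guide).
IB_SPEEDS = {
    "SDR": (Fraction("2.5"), Fraction(8, 10)),
    "DDR": (Fraction("5"), Fraction(8, 10)),
    "QDR": (Fraction("10"), Fraction(8, 10)),
    "FDR-10": (Fraction("10.3125"), Fraction(64, 66)),
    "FDR": (Fraction("14.0625"), Fraction(64, 66)),
}


def link_rates(speed: str, lanes: int = 4) -> tuple[float, float]:
    """Return (signaling rate, data rate) in Gbps for an InfiniBand link."""
    baud, efficiency = IB_SPEEDS[speed]
    signaling = baud * lanes        # exact rational arithmetic
    data = signaling * efficiency   # remove line-coding overhead
    return float(signaling), float(data)


for name in ("FDR", "FDR-10"):
    sig, data = link_rates(name)
    print(f"{name}: {sig:.2f} Gbps signaling, {data:.2f} Gbps data")
# FDR: 56.25 Gbps signaling, 54.55 Gbps data
# FDR-10: 41.25 Gbps signaling, 40.00 Gbps data
```

Using `Fraction` keeps the intermediate values exact, so FDR-10 comes out at exactly 40 Gbps of data bandwidth.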

RDMA over InfiniBand and RDMA over Ethernet further accelerate application run time while reducing CPU utilization. RDMA benefits very high-volume, transaction-intensive applications typical of HPC and financial market firms, as well as other industries where speed of data delivery is paramount. With ConnectX-3-based adapters, highly compute-intensive tasks running on hundreds or thousands of multiprocessor nodes, such as climate research, molecular modeling, and physical simulations, can share data and synchronize faster, resulting in shorter run times.

In data mining or web crawl applications, RDMA provides the performance boost needed for faster search by removing the network latency bottleneck associated with I/O cards and the corresponding transport technology in the cloud. Other applications that benefit from RDMA with ConnectX-3 include Web 2.0 (content delivery networks), business intelligence, database transactions, and various cloud computing applications. The low power consumption of Mellanox ConnectX-3 provides clients with high bandwidth and low latency at the lowest cost of ownership.

TCP/UDP/IP acceleration

Applications using TCP/UDP/IP transport can achieve industry-leading data throughput. The hardware-based stateless offload engines in ConnectX-3 reduce the CPU overhead of IP packet transport, leaving more processor cycles for the application.

NVGRE and VXLAN hardware offloads

The Mellanox ConnectX-3 Pro adapters offer NVGRE and VXLAN hardware offload engines that provide additional performance benefits, especially for public or private cloud implementations and virtualized environments. These offloads enable overlay networks to deliver the mobility, scalability, and serviceability that current and future data centers require. They significantly lower CPU consumption, which reduces cloud application cost, helps achieve the highest available throughput, and lowers power consumption.

Software support

All Mellanox adapter cards are supported by a full suite of drivers for Microsoft Windows, Linux distributions, and VMware. ConnectX-3 adapters support OpenFabrics-based RDMA protocols and software. Stateless offload is fully interoperable with standard TCP/UDP/IP stacks. ConnectX-3 adapters are compatible with configuration and management tools from OEMs and operating system vendors.

Specifications

InfiniBand specifications (all three FDR adapters):

- Supports InfiniBand FDR-14, FDR-10, QDR, DDR, and SDR

- IBTA Specification 1.2.1 compliant

- RDMA, Send/Receive semantics

- Hardware-based congestion control

- 16 million I/O channels

- MTU from 256 bytes to 4 KB; messages up to 1 GB

- Nine virtual lanes: Eight data and one management

- NVGRE hardware offloads (ConnectX-3 Pro only)

- VXLAN hardware offloads (ConnectX-3 Pro only)
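Because the InfiniBand transport is implemented in hardware, the adapter, not the host CPU, splits each message into MTU-sized packets. This sketch shows how many packets a maximum-size 1 GB message produces at each MTU. It assumes binary units (1 GB = 2^30 bytes) and the five MTU values defined by the InfiniBand specification between 256 bytes and 4 KB; `packets_per_message` is a hypothetical helper, it ignores header overhead, and it only handles non-empty messages.

```python
# InfiniBand MTU values (bytes) from 256 bytes to 4 KB, and the 1 GB maximum
# message size quoted in this guide (binary units assumed).
IB_MTUS = (256, 512, 1024, 2048, 4096)
MAX_MESSAGE_BYTES = 2**30


def packets_per_message(message_bytes: int, mtu: int) -> int:
    """Number of MTU-sized payload packets needed to carry one message."""
    if mtu not in IB_MTUS:
        raise ValueError(f"unsupported InfiniBand MTU: {mtu}")
    if not 0 < message_bytes <= MAX_MESSAGE_BYTES:
        raise ValueError("message size must be between 1 byte and 1 GB")
    # Integer ceiling division: a partial final packet still counts as one.
    return -(-message_bytes // mtu)


for mtu in IB_MTUS:
    print(mtu, packets_per_message(MAX_MESSAGE_BYTES, mtu))
# The largest MTU (4096) needs 262144 packets; the smallest (256) needs 4194304.
```

Larger MTUs cut the per-packet work by a factor of 16 across this range, which is one reason hardware segmentation matters most for small-MTU fabrics.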

Enhanced InfiniBand specifications:

- Hardware-based reliable transport

- Hardware-based reliable multicast

- Extended Reliable Connected transport

- Enhanced Atomic operations

- Fine-grained end-to-end quality of service (QoS)

Ethernet specifications:

- IEEE 802.3ae 10 GbE

- IEEE 802.3ba 40 GbE (all three FDR adapters)

- IEEE 802.3ad Link Aggregation

- IEEE 802.3az Energy Efficient Ethernet

- IEEE 802.1Q and 802.1p VLAN tags and priority

- IEEE 802.1Qbg

- IEEE P802.1Qaz D0.2 Enhanced Transmission Selection (ETS)

- IEEE P802.1Qbb D1.0 Priority-based Flow Control

- IEEE 1588v2 Precision Clock Synchronization

- Multicast

- Jumbo frame support (9600B)

- 128 MAC/VLAN addresses per port
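Enhanced Transmission Selection (IEEE 802.1Qaz) assigns each traffic class a percentage of link bandwidth. The percentages sum to 100, and each share acts as a minimum guarantee under congestion, with unused bandwidth available to other classes. The following sketch computes those guarantees for a 40 GbE port; the traffic-class names and percentages are hypothetical examples, not defaults of these adapters.

```python
def ets_guarantees(link_gbps: float, shares: dict[str, int]) -> dict[str, float]:
    """Minimum bandwidth (Gbps) per traffic class under ETS."""
    if any(pct < 0 for pct in shares.values()):
        raise ValueError("percentages must be non-negative")
    if sum(shares.values()) != 100:
        raise ValueError("ETS bandwidth percentages must sum to 100")
    return {tc: link_gbps * pct / 100 for tc, pct in shares.items()}


# Hypothetical allocation: storage, LAN, and management traffic classes.
print(ets_guarantees(40, {"storage": 50, "lan": 30, "mgmt": 20}))
# {'storage': 20.0, 'lan': 12.0, 'mgmt': 8.0}
```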

Hardware-based I/O virtualization:

- Address translation and protection

- Multiple queues per virtual machine

- VMware NetQueue support

- SR-IOV with 16 virtual functions supported with Linux KVM

- VXLAN and NVGRE (ConnectX-3 Pro adapters)

SR-IOV features:

- Address translation and protection

- Dedicated adapter resources

- Multiple queues per virtual machine

- Enhanced QoS for vNICs

- VMware NetQueue support

Additional CPU offloads:

- TCP/UDP/IP stateless offload

- Intelligent interrupt coalescence

- Compliant with Microsoft RSS and NetDMA

Management and tools:

InfiniBand:

- Interoperable with OpenSM and other third-party subnet managers

- Firmware and debug tools (MFT and IBDIAG)

Ethernet:

- MIB, MIB-II, MIB-II Extensions, RMON, and RMON 2

- Configuration and diagnostic tools

- NC-SI (ML2 adapters only)

Protocol support:

- Open MPI, OSU MVAPICH, Intel MPI, MS MPI, and Platform MPI

- TCP/UDP, EoIB and IPoIB

- uDAPL

Physical specifications

The adapters have the following dimensions:

- Height: 168 mm (6.60 in)

- Width: 69 mm (2.71 in)

- Depth: 17 mm (0.69 in)

- Weight: 208 g (0.46 lb)

Approximate shipping dimensions:

- Height: 189 mm (7.51 in)

- Width: 90 mm (3.54 in)

- Depth: 38 mm (1.50 in)

- Weight: 450 g (0.99 lb)

Server support - ThinkSystem

The following tables show whether each ThinkSystem server supports the adapter (Y = supported, N = not supported).

| Server family | Server (machine types) | 7ZT7A00501 ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter |
|---|---|---|
| AMD V3 | SR635 V3 (7D9H / 7D9G) | N |
| AMD V3 | SR655 V3 (7D9F / 7D9E) | N |
| AMD V3 | SR645 V3 (7D9D / 7D9C) | N |
| AMD V3 | SR665 V3 (7D9B / 7D9A) | N |
| 2S Intel V3/V4 | ST650 V3 (7D7B / 7D7A) | N |
| 2S Intel V3/V4 | SR630 V3 (7D72 / 7D73) | N |
| 2S Intel V3/V4 | SR650 V3 (7D75 / 7D76) | N |
| 2S Intel V3/V4 | SR630 V4 (7DG8 / 7DG9) | N |
| 2S Intel V3/V4 | SR650 V4 (7DGC / 7DGD) | N |
| 2S Intel V3/V4 | SR650a V4 (7DGC / 7DGD) | N |
| Multi Node V3 | SD535 V3 (7DD8 / 7DD1) | N |
| Multi Node V3 | SD530 V3 (7DDA / 7DD3) | N |
| Multi Node V3 | SD550 V3 (7DD9 / 7DD2) | N |
| 1S V3 | ST45 V3 (7DH4 / 7DH5) | N |
| 1S V3 | ST50 V3 (7DF4 / 7DF3) | N |
| 1S V3 | ST250 V3 (7DCF / 7DCE) | N |
| 1S V3 | SR250 V3 (7DCM / 7DCL) | N |

| Server family | Server (machine types) | 7ZT7A00501 ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter |
|---|---|---|
| 4S 8S Intel V3/V4 | SR850 V3 (7D97 / 7D96) | N |
| 4S 8S Intel V3/V4 | SR860 V3 (7D94 / 7D93) | N |
| 4S 8S Intel V3/V4 | SR950 V3 (7DC5 / 7DC4) | N |
| 4S 8S Intel V3/V4 | SR850 V4 (7DJT / 7DJS) | N |
| 4S 8S Intel V3/V4 | SR860 V4 (7DJQ / 7DJN) | N |
| GPU Rich | SR670 V2 (7Z22 / 7Z23) | N |
| GPU Rich | SR675 V3 (7D9Q / 7D9R) | N |
| GPU Rich | SR680a V3 (7DHE) | N |
| GPU Rich | SR680a V3 B200 (7DM9) | N |
| GPU Rich | SR685a V3 (7DHC) | N |
| GPU Rich | SR780a V3 (7DJ5) | N |
| GPU Rich | SR680a V4 (7DMK) | N |
| Edge | SE100 (7DGR) | N |
| Edge | SE350 (7Z46 / 7D1X) | N |
| Edge | SE350 V2 (7DA9) | N |
| Edge | SE360 V2 (7DAM) | N |
| Edge | SE450 (7D8T) | N |
| Edge | SE455 V3 (7DBY) | N |

| Server family | Server (machine types) | 7ZT7A00501 ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter |
|---|---|---|
| Super Computing | SC750 V4 (7DDJ) | N |
| Super Computing | SC777 V4 (7DKA) | N |
| Super Computing | SD665 V3 (7D9P) | N |
| Super Computing | SD665-N V3 (7DAZ) | N |
| Super Computing | SD650 V3 (7D7M) | N |
| Super Computing | SD650-I V3 (7D7L) | N |
| Super Computing | SD650-N V3 (7D7N) | N |
| 1S Intel V2 | ST50 V2 (7D8K / 7D8J) | N |
| 1S Intel V2 | ST250 V2 (7D8G / 7D8F) | N |
| 1S Intel V2 | SR250 V2 (7D7R / 7D7Q) | N |
| 2S Intel V2 | ST650 V2 (7Z75 / 7Z74) | N |
| 2S Intel V2 | SR630 V2 (7Z70 / 7Z71) | N |
| 2S Intel V2 | SR650 V2 (7Z72 / 7Z73) | N |
| AMD V1 | SR635 (7Y98 / 7Y99) | N |
| AMD V1 | SR655 (7Y00 / 7Z01) | N |
| AMD V1 | SR645 (7D2Y / 7D2X) | N |
| AMD V1 | SR665 (7D2W / 7D2V) | N |

| Server family | Server (machine types) | 7ZT7A00501 ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter |
|---|---|---|
| Dense V2 | SD630 V2 (7D1K) | N |
| Dense V2 | SD650 V2 (7D1M) | N |
| Dense V2 | SD650-N V2 (7D1N) | N |
| Dense V2 | SN550 V2 (7Z69) | N |
| 4S V2 | SR850 V2 (7D31 / 7D32) | N |
| 4S V2 | SR860 V2 (7Z59 / 7Z60) | N |
| 8S | SR950 (7X11 / 7X12) | Y |
| 4S V1 | SR850 (7X18 / 7X19) | Y |
| 4S V1 | SR850P (7D2F / 7D2G) | Y |
| 4S V1 | SR860 (7X69 / 7X70) | Y |
| 1S Intel V1 | ST50 (7Y48 / 7Y50) | N |
| 1S Intel V1 | ST250 (7Y45 / 7Y46) | N |
| 1S Intel V1 | SR150 (7Y54) | N |
| 1S Intel V1 | SR250 (7Y52 / 7Y51) | N |

| Server family | Server (machine types) | 7ZT7A00501 ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter |
|---|---|---|
| 2S Intel V1 | ST550 (7X09 / 7X10) | N |
| 2S Intel V1 | SR530 (7X07 / 7X08) | N |
| 2S Intel V1 | SR550 (7X03 / 7X04) | N |
| 2S Intel V1 | SR570 (7Y02 / 7Y03) | N |
| 2S Intel V1 | SR590 (7X98 / 7X99) | N |
| 2S Intel V1 | SR630 (7X01 / 7X02) | Y |
| 2S Intel V1 | SR650 (7X05 / 7X06) | Y |
| 2S Intel V1 | SR670 (7Y36 / 7Y37) | N |
| Dense V1 | SD530 (7X21) | N |
| Dense V1 | SD650 (7X58) | N |
| Dense V1 | SN550 (7X16) | N |
| Dense V1 | SN850 (7X15) | N |

Server support - System x

The adapters are supported in the System x servers that are listed in the following tables.

Memory requirements: Ensure that your server has sufficient memory available for the adapters. The first adapter requires 8 GB of RAM in addition to the memory allocated to the operating system, applications, and virtual machines. Each additional adapter requires another 4 GB of RAM.
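The memory rule above grows linearly with the number of adapters: 8 GB for the first and 4 GB for each one after it. The following Python sketch turns that rule into a sizing check. `adapter_memory_gb` and `total_memory_gb` are hypothetical helpers, and the 64 GB workload figure in the usage line is an arbitrary example.

```python
def adapter_memory_gb(adapter_count: int) -> int:
    """Extra RAM (GB) needed for the adapters, per the rule in this guide."""
    if adapter_count < 0:
        raise ValueError("adapter count must be non-negative")
    if adapter_count == 0:
        return 0
    # 8 GB for the first adapter, 4 GB for each additional adapter.
    return 8 + 4 * (adapter_count - 1)


def total_memory_gb(workload_gb: int, adapter_count: int) -> int:
    """Workload memory (OS, applications, VMs) plus adapter overhead."""
    return workload_gb + adapter_memory_gb(adapter_count)


print(total_memory_gb(64, 2))  # 76
```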

Support for System x and dense servers with Xeon E5/E7 v4 and E3 v5 processors

| Part number | Description | x3250 M6 (3943) | x3250 M6 (3633) | x3550 M5 (8869) | x3650 M5 (8871) | x3850 X6/x3950 X6 (6241, E7 v4) | nx360 M5 (5465, E5-2600 v4) | sd350 (5493) |

|---|---|---|---|---|---|---|---|---|

| 00D9690 | Mellanox ConnectX-3 10 GbE Adapter | N | N | Y | Y | Y | Y | N |

| 00D9550 | Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter | N | N | Y | Y | Y | Y | Y |

| 00FP650 | Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter | N | N | Y | Y | Y | Y | N |

Support for servers with Intel Xeon v3 processors

| Part number | Description | x3100 M5 (5457) | x3250 M5 (5458) | x3500 M5 (5464) | x3550 M5 (5463) | x3650 M5 (5462) | x3850 X6/x3950 X6 (6241, E7 v3) | nx360 M5 (5465) |

|---|---|---|---|---|---|---|---|---|

| 00D9690 | Mellanox ConnectX-3 10 GbE Adapter | Y | Y | Y | Y | Y | Y | Y |

| 00D9550 | Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter | N | N | Y | Y | Y | Y | Y |

| 00FP650 | Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter | N | N | N | Y | Y | Y | Y |

Support for servers with Intel Xeon v2 processors

| Part number | Description | x3500 M4 (7383, E5-2600 v2) | x3530 M4 (7160, E5-2400 v2) | x3550 M4 (7914, E5-2600 v2) | x3630 M4 (7158, E5-2400 v2) | x3650 M4 (7915, E5-2600 v2) | x3650 M4 BD (5466) | x3650 M4 HD (5460) | x3750 M4 (8752) | x3750 M4 (8753) | x3850 X6/x3950 X6 (3837) | x3850 X6/x3950 X6 (6241, E7 v2) | dx360 M4 (E5-2600 v2) | nx360 M4 (5455) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 00D9690 | Mellanox ConnectX-3 10 GbE Adapter | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| 00D9550 | Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| 00FP650 | Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter | N | N | N | N | N | N | N | Y | Y | Y | Y | N | N |

Operating system support

Operating system support is listed for the following adapters:

- Mellanox ConnectX-3 10 GbE Adapter, 00D9690

- Mellanox ConnectX-3 40GbE / FDR IB VPI Adapter, 00D9550

- Mellanox ConnectX-3 Pro ML2 2x40GbE/FDR VPI Adapter, 00FP650

- ThinkSystem Mellanox ConnectX-3 Pro ML2 FDR 2-Port QSFP VPI Adapter, 7ZT7A00501

Tip: This table is automatically generated based on data from Lenovo ServerProven. Note that older servers are not listed. Visit ServerProven to view OS support for those servers.

* InfiniBand mode not supported with VMware: With VMware, these adapters are supported only in Ethernet mode. InfiniBand is not supported.

Notes:

- VXLAN is initially supported only with Red Hat Enterprise Linux 7

- NVGRE is initially supported only with Windows Server 2012 R2

| Operating systems | x3530 M4 (7160, E5 v1) | x3850/3950 X6 (3837) | x3850/3950 X6 (6241, E7 v2) | x3850 X5/x3950 X5 (7143) | x3755 M3 (7164, 6200) | x3750 M4 (8722, E5 v1) | x3750 M4 (8752, E5 v2) | x3750 M4 (8753, E5 v2) | x3690 X5 (7147) | x3650 M4 HD (5460, E5 v2) | x3650 M4 BD (5466, E5 v2) | x3650 M4 (7915, E5 v1) | x3650 M4 (7915, E5 v2) | x3650 M3 (7945) | x3630 M4 (7158, E5 v1) | x3630 M4 (7158, E5 v2) | x3550 M4 (7914, E5 v1) | x3550 M4 (7914, E5 v2) | nx360 M4 (5455, E5 v1) | x3530 M4 (7160, E5 v2) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Microsoft Windows Server 2008 R2 | N | N | Y | N | N | N | N | Y | N | N | Y | N | N | N | N | N | N | N | N | N |

| Microsoft Windows Server 2012 R2 | N | Y | Y | N | N | N | Y | Y | N | Y | Y | Y | Y | N | N | Y | N | N | Y | Y |

| Microsoft Windows Server 2016 | N | N | Y 1 | N | N | N | N | Y | N | N | N | Y | Y | N | N | N | Y | Y | Y | N |

| Microsoft Windows Server 2019 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N |

| Microsoft Windows Server version 1709 | N | N | N | N | N | N | N | Y | N | N | N | N | N | N | N | N | N | N | Y | N |

| Microsoft Windows Server version 1803 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N |

| Red Hat Enterprise Linux 5 Server x64 Edition | Y | N | N | Y | N | Y | N | N | Y | N | Y | Y | Y | Y | Y | Y | Y | N | N | Y |

| Red Hat Enterprise Linux 6 Server x64 Edition | Y | N | Y | Y | Y | Y | N | Y | Y | N | Y | Y | N | Y | Y | Y | Y | N | Y | Y |

| Red Hat Enterprise Linux 7 | N | N | Y | N | N | N | N | N | N | N | N | N | Y | N | N | N | Y | Y | Y | N |

| Red Hat Enterprise Linux 8.0 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N |

| SUSE Linux Enterprise Server 10 for AMD64/EM64T | Y | N | N | Y | N | Y | N | N | Y | N | N | Y | N | Y | Y | N | Y | N | N | N |

| SUSE Linux Enterprise Server 11 for AMD64/EM64T | Y | Y | Y | Y | N | Y | Y | Y | Y | N | N | Y | N | Y | Y | Y | Y | N | Y | Y |

| SUSE Linux Enterprise Server 12 | N | N | Y | N | N | N | N | N | N | N | N | N | Y | N | N | N | Y | Y | Y | N |

| SUSE Linux Enterprise Server 15 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | Y | N |

| VMware vSphere 5.0 (ESXi) | N | N | N | Y | N | Y | Y | Y | Y 2 | N | N | N | Y | N | N | Y | N | N | N | N |

| VMware vSphere 5.1 (ESXi) | Y | Y | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 5.5 | N | N | Y | N | N | N | N | Y | N | Y | Y | N | Y | N | N | N | N | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.0 | N | Y | Y | Y | N | Y | Y | Y | Y | N | N | Y | Y | N | N | Y | Y | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.5 | N | Y | N | N | N | N | N | Y | N | N | N | Y | Y | N | N | N | Y | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.7 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | Y | N |

1 In-box driver support only

2 Port 2 cannot be assigned in vSphere (ESXi 5.0) - IBM Dual-Port 10 Gigabit Ethernet Adapter for BladeCenter

| Operating systems | x3500 M4 (7383, E5 v1) | x3500 M4 (7383, E5 v2) | x3300 M4 (7382, E5 v1) | x3250 M5 (5458) | x3100 M5 (5457) | nx360 M4 (5455, E5 v2) | dx360 M4 (7912, E5 v1) | dx360 M4 (7912, E5 v2) | x3850/3950 X6 (6241, E7 v4) | x3850/3950 X6 (6241, E7 v3) | x3650 M5 (5462) | x3650 M5 (8871) | x3550 M5 (5463) | x3550 M5 (8869) | x3500 M5 (5464) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Microsoft Windows Server 2008 R2 | Y | Y | N | N | Y | N | N | N | N | Y | Y | N | Y | Y | Y |

| Microsoft Windows Server 2012 R2 | N | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Microsoft Windows Server 2016 | N | Y | N | Y | Y | N | Y | Y | Y 1 | Y 1 | Y | Y | Y | Y | Y |

| Microsoft Windows Server 2019 | N | N | N | N | N | N | N | N | Y | N | N | Y | N | Y | N |

| Microsoft Windows Server version 1709 | N | N | N | N | N | N | N | N | Y | Y | Y | Y | Y | Y | Y |

| Microsoft Windows Server version 1803 | N | N | N | N | N | N | N | N | N | N | N | Y | N | Y | N |

| Red Hat Enterprise Linux 5 Server x64 Edition | Y | Y | Y | Y | Y | Y | N | Y | N | N | N | N | N | N | N |

| Red Hat Enterprise Linux 6 Server x64 Edition | Y | Y | Y | Y | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7 | N | Y | N | Y | Y | N | N | N | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.0 | N | N | N | N | N | N | N | N | Y | N | N | N | N | N | N |

| SUSE Linux Enterprise Server 10 for AMD64/EM64T | Y | N | Y | N | N | N | N | Y | N | N | N | N | N | N | N |

| SUSE Linux Enterprise Server 11 for AMD64/EM64T | Y | Y | Y | Y | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 12 | N | Y | N | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 15 | N | N | N | N | N | N | N | N | Y | Y | N | Y | N | Y | N |

| VMware vSphere 5.0 (ESXi) | Y | Y | N | N | N | Y | N | Y | N | N | N | N | N | N | N |

| VMware vSphere 5.1 (ESXi) | Y | Y | N | Y | Y | Y | N | Y | N | N | Y | N | Y | N | Y |

| VMware vSphere Hypervisor (ESXi) 5.5 | N | N | N | N | Y | N | N | N | N | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.0 | N | Y | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.5 | N | Y | N | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.7 | N | N | N | N | N | N | N | N | Y | N | N | Y | N | Y | Y |

1 In-box driver support only

| Operating systems | x3550 M4 (7914, E5 v2) | sd350 (5493) | x3850/3950 X6 (3837) | x3850/3950 X6 (6241, E7 v2) | x3850 X5/x3950 X5 (7143) | x3755 M3 (7164, 6200) | x3750 M4 (8722, E5 v1) | x3750 M4 (8752, E5 v2) | x3750 M4 (8753, E5 v2) | x3690 X5 (7147) | x3650 M4 HD (5460, E5 v2) | x3650 M4 BD (5466, E5 v2) | x3650 M4 (7915, E5 v1) | x3650 M4 (7915, E5 v2) | x3650 M3 (7945) | x3630 M4 (7158, E5 v1) | x3630 M4 (7158, E5 v2) | x3550 M4 (7914, E5 v1) | nx360 M4 (5455, E5 v1) | x3530 M4 (7160, E5 v1) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Microsoft Windows Server 2008 R2 | N | N | N | Y | Y | N | N | N | Y | N | N | Y | N | N | N | N | N | N | N | N |

| Microsoft Windows Server 2012 R2 | N | Y | Y | Y | Y | N | N | N | Y | N | Y | Y | N | N | N | N | Y | N | Y | N |

| Microsoft Windows Server 2016 | Y | Y | N | Y 3 | N | N | N | N | Y | N | N | N | Y | Y | N | N | N | Y | Y | N |

| Microsoft Windows Server 2019 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N |

| Microsoft Windows Server version 1709 | N | Y | N | N | N | N | N | N | Y | N | N | N | N | N | N | N | N | N | Y | N |

| Microsoft Windows Server version 1803 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N |

| Red Hat Enterprise Linux 5 Server x64 Edition | N | N | N | N | Y | N | Y | N | N | Y | N | N | Y | Y | Y | Y | Y | Y | N | Y |

| Red Hat Enterprise Linux 6 Server x64 Edition | N | Y | N | Y | Y | Y | Y | N | Y | Y | N | N | Y | N | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7 | Y | Y | N | Y | N | N | N | N | N | N | N | N | N | Y | N | N | N | Y | Y | N |

| Red Hat Enterprise Linux 8.0 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N |

| SUSE Linux Enterprise Server 10 for AMD64/EM64T | N | N | N | N | Y | N | Y | N | N | Y | N | N | Y | N | Y | Y | N | Y | N | Y |

| SUSE Linux Enterprise Server 11 for AMD64/EM64T | N | Y | Y | Y | Y | N | Y | Y | Y | Y | N | N | Y | N | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 12 | Y | Y | N | Y | N | N | N | N | N | N | N | N | N | Y | N | N | N | Y | Y | N |

| SUSE Linux Enterprise Server 15 | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | Y | N |

| VMware vSphere 5.0 (ESXi) | N | N | N | N | Y | N | Y | N | Y | Y | N | N | Y | Y | Y | Y | Y | Y | N | Y |

| VMware vSphere 5.1 (ESXi) | Y | N | Y 2 | Y | Y | N | Y | N | Y | Y | Y | N | Y | Y | N | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 5.5 | Y 1 | Y | N | Y | N | N | N | N | Y | N | Y 4 | N | N | Y 5 | N | N | N | N | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.0 | N | Y | N | Y | N | N | N | N | N | N | N | N | Y | N | N | N | N | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.5 | Y | Y | N | N | N | N | N | N | Y | N | N | N | Y | Y | N | N | N | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.7 | N | Y | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | N | Y | N |

1 Supported with 40 Gb Ethernet only; InfiniBand is not supported.

2 Ethernet support only

3 In-box driver support only

4 No InfiniBand support

5 Supported with 40 Gb Ethernet only; InfiniBand is not supported.

| Operating systems | x3530 M4 (7160, E5 v2) | x3500 M4 (7383, E5 v1) | x3500 M4 (7383, E5 v2) | x3300 M4 (7382, E5 v1) | nx360 M4 (5455, E5 v2) | dx360 M4 (7912, E5 v1) | dx360 M4 (7912, E5 v2) | x3850/3950 X6 (6241, E7 v4) | x3850/3950 X6 (6241, E7 v3) | x3650 M5 (5462) | x3650 M5 (8871) | x3550 M5 (5463) | x3550 M5 (8869) | x3500 M5 (5464) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Microsoft Windows Server 2008 R2 | N | Y | Y | N | N | N | N | N | Y | Y | N | Y | Y | Y |

| Microsoft Windows Server 2012 R2 | Y | N | Y | Y | Y | N | N | Y | Y | Y | Y | Y | Y | Y |

| Microsoft Windows Server 2016 | N | N | Y | N | N | Y | Y | Y 1 | Y 1 | Y | Y | Y | Y | Y |

| Microsoft Windows Server 2019 | N | N | N | N | N | N | N | Y | N | N | Y | N | Y | N |

| Microsoft Windows Server version 1709 | N | N | N | N | N | N | N | Y | Y | Y | Y | Y | Y | Y |

| Microsoft Windows Server version 1803 | N | N | N | N | N | N | N | N | N | N | Y | N | Y | N |

| Red Hat Enterprise Linux 5 Server x64 Edition | Y | Y | Y | Y | Y | Y | Y | N | N | N | N | N | N | N |

| Red Hat Enterprise Linux 6 Server x64 Edition | Y | Y | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7 | N | N | Y | N | N | N | N | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.0 | N | N | N | N | N | N | N | Y | N | N | N | N | N | N |

| SUSE Linux Enterprise Server 10 for AMD64/EM64T | N | Y | N | Y | N | Y | Y | N | N | N | N | N | N | N |

| SUSE Linux Enterprise Server 11 for AMD64/EM64T | Y | Y | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 12 | N | N | Y | N | N | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 15 | N | N | N | N | N | N | N | Y | Y | N | Y | N | Y | N |

| VMware vSphere 5.0 (ESXi) | N | Y | Y | Y | Y | Y | Y | N | N | N | N | N | N | N |

| VMware vSphere 5.1 (ESXi) | N | Y | Y | N | Y | Y | Y | N | N | Y | N | Y | N | Y |

| VMware vSphere Hypervisor (ESXi) 5.5 | N | N | N | N | N | N | N | N | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.0 | N | N | N | N | N | Y | N | Y | Y | Y | Y | N | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.5 | N | N | Y | N | N | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.7 | N | N | N | N | N | N | N | Y | N | N | Y | N | Y | Y |

1 In-box driver support only.
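When many server and operating system combinations have to be checked, the matrix can also be queried from a script. The following is a minimal Python sketch that transcribes the column headings and two rows of the table above; the names `SERVERS`, `ROWS`, and `lookup()` are hypothetical, and the remaining rows would need to be copied from the table by hand. Cells shown as "Y 1" are stored as `Y1`.

```python
# Minimal sketch: query a hand-transcribed subset of the OS support table.
# SERVERS, ROWS, and lookup() are hypothetical names used for illustration.

# Column headings, in the same order as the table.
SERVERS = [
    "x3530 M4 (7160, E5 v2)",
    "x3500 M4 (7383, E5 v1)",
    "x3500 M4 (7383, E5 v2)",
    "x3300 M4 (7382, E5 v1)",
    "nx360 M4 (5455, E5 v2)",
    "dx360 M4 (7912, E5 v1)",
    "dx360 M4 (7912, E5 v2)",
    "x3850/3950 X6 (6241, E7 v4)",
    "x3850/3950 X6 (6241, E7 v3)",
    "x3650 M5 (5462)",
    "x3650 M5 (8871)",
    "x3550 M5 (5463)",
    "x3550 M5 (8869)",
    "x3500 M5 (5464)",
]

# Two rows copied from the table; "Y1" means "Y" with footnote 1 (in-box driver only).
ROWS = {
    "Microsoft Windows Server 2016": "N N Y N N Y Y Y1 Y1 Y Y Y Y Y",
    "VMware vSphere Hypervisor (ESXi) 6.7": "N N N N N N N Y N N Y N Y Y",
}


def lookup(os_name: str, server: str) -> str:
    """Return 'supported', 'in-box driver only', or 'not supported'."""
    cells = ROWS[os_name].split()
    # Guard against transcription mistakes: one cell per server column.
    if len(cells) != len(SERVERS):
        raise ValueError(f"row for {os_name!r} has {len(cells)} cells")
    cell = cells[SERVERS.index(server)]
    if cell == "Y":
        return "supported"
    if cell == "Y1":
        return "in-box driver only"
    return "not supported"


if __name__ == "__main__":
    print(lookup("Microsoft Windows Server 2016", "x3850/3950 X6 (6241, E7 v4)"))
```

Storing each row as a single space-separated string keeps the transcription visually close to the table, which makes copying errors easier to spot.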

| Operating systems | nx360 M4 (5455, E5 v1) | x3550 M5 (5463) | x3550 M5 (8869) | x3650 M5 (5462) | x3650 M5 (8871) | x3850/3950 X6 (6241, E7 v3) | x3850/3950 X6 (6241, E7 v4) | x3750 M4 (8752, E5 v2) | x3750 M4 (8753, E5 v2) | x3850/3950 X6 (3837) | x3850/3950 X6 (6241, E7 v2) |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Microsoft Windows Server 2012 R2 | Y | Y | Y | Y | Y | Y | Y | Y | N | Y | Y |

| Microsoft Windows Server 2016 | Y | Y | Y | Y | Y | Y 1 | Y 1 | N | Y | N | Y 1 |

| Microsoft Windows Server 2019 | N | N | Y | N | Y | N | Y | N | N | N | N |

| Microsoft Windows Server version 1709 | Y | Y | Y | Y | Y | Y | Y | N | Y | N | N |

| Microsoft Windows Server version 1803 | N | N | Y | N | Y | N | N | N | N | N | N |

| Red Hat Enterprise Linux 6 Server x64 Edition | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7 | Y | Y | Y | Y | Y | Y | Y | N | N | N | Y |

| Red Hat Enterprise Linux 8.0 | N | N | N | N | N | N | Y | N | N | N | N |

| SUSE Linux Enterprise Server 11 for AMD64/EM64T | Y | Y | Y | Y | N | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 12 | Y | Y | Y | Y | Y | Y | Y | N | N | N | Y |

| SUSE Linux Enterprise Server 15 | Y | N | Y | N | Y | Y | Y | N | N | N | N |

| VMware vSphere 5.1 (ESXi) | Y | Y | N | Y | N | N | N | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 5.5 | Y | Y | Y | Y | N | Y | N | Y | N | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.0 | Y | Y | Y | Y | Y | Y | N | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.5 | Y | Y | Y | Y | Y | Y | Y | N | Y | N | N |

| VMware vSphere Hypervisor (ESXi) 6.7 | Y | N | Y | N | Y | N | Y | N | N | N | N |

1 In-box driver support only.

| Operating systems | SR630 (Xeon Gen 2) | SR650 (Xeon Gen 2) | SR860 (Xeon Gen 2) | SR850 (Xeon Gen 2) | SR630 (Xeon Gen 1) | SR650 (Xeon Gen 1) | SR850 (Xeon Gen 1) | SR850P (Xeon Gen 2) | SR860 (Xeon Gen 1) | SR950 (Xeon Gen 1) | SR950 (Xeon Gen 2) |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Microsoft Windows Server 2012 R2 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Microsoft Windows Server 2016 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Microsoft Windows Server 2019 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Microsoft Windows Server version 1709 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Microsoft Windows Server version 1803 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Red Hat Enterprise Linux 6.9 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Red Hat Enterprise Linux 6.10 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Red Hat Enterprise Linux 7.3 | N | N | N | N | Y | Y | Y | N | N | Y | N |

| Red Hat Enterprise Linux 7.4 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Red Hat Enterprise Linux 7.5 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| Red Hat Enterprise Linux 7.6 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7.7 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7.8 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 7.9 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.0 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.1 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.2 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.3 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.4 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.5 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| Red Hat Enterprise Linux 8.6 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 11 SP4 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| SUSE Linux Enterprise Server 12 SP2 | N | N | N | N | Y | Y | Y | N | N | Y | N |

| SUSE Linux Enterprise Server 12 SP3 | N | N | N | N | Y | Y | Y | Y | Y | Y | N |

| SUSE Linux Enterprise Server 12 SP4 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 12 SP5 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 15 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 15 SP1 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| SUSE Linux Enterprise Server 15 SP2 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 | Y 1 |

| SUSE Linux Enterprise Server 15 SP3 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.0 U3 | N | N | N | N | Y | Y | N | N | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.5 | N | N | N | N | N | N | N | N | N | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.5 U1 | N | N | N | N | N | N | N | N | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.5 U2 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.5 U3 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.7 | N | N | N | N | Y | Y | Y | N | Y | Y | N |

| VMware vSphere Hypervisor (ESXi) 6.7 U1 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.7 U2 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 6.7 U3 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 7.0 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 7.0 U1 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 7.0 U2 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

| VMware vSphere Hypervisor (ESXi) 7.0 U3 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |

1 An out-of-box driver is required to support the InfiniBand feature.
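On Linux, ConnectX-3 and ConnectX-3 Pro adapters are handled by the mlx4 driver family: `mlx4_core` for the device, `mlx4_en` for Ethernet, and `mlx4_ib` for InfiniBand. Where an out-of-box driver is needed for InfiniBand, one quick post-installation check is whether `mlx4_ib` appears in `/proc/modules`. The following is a hedged Python sketch, assuming a Linux host and the standard mlx4 module names; the helper names are hypothetical, and the parser takes the file contents as a string so it can be exercised without the hardware. Note that a loaded module shows only that InfiniBand support is active, not which driver package supplied it.

```python
# Hedged sketch: report which mlx4 driver modules are loaded on a Linux host.
# Assumes the module names mlx4_core, mlx4_en, and mlx4_ib; the helper names
# loaded_modules() and mlx4_status() are hypothetical.
from pathlib import Path

MLX4_MODULES = ("mlx4_core", "mlx4_en", "mlx4_ib")


def loaded_modules(proc_modules_text: str) -> set:
    """Parse /proc/modules content; the module name is the first field on each line."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}


def mlx4_status(proc_modules_text: str) -> dict:
    """Map each mlx4 module name to True if it is listed as loaded."""
    loaded = loaded_modules(proc_modules_text)
    return {name: name in loaded for name in MLX4_MODULES}


if __name__ == "__main__":
    proc = Path("/proc/modules")
    if proc.exists():
        for name, present in mlx4_status(proc.read_text()).items():
            print(f"{name}: {'loaded' if present else 'not loaded'}")
    else:
        print("/proc/modules not found (not a Linux host?)")
```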

Regulatory approvals

The adapters have the following regulatory approvals:

- EN 55022

- EN 55024

- EN 60950-1

- EN 61000-3-2

- EN 61000-3-3

- IEC 60950-1

- FCC Part 15 Class A

- UL 60950-1

- CSA C22.2 60950-1-07

- VCCI

- NZ AS3548 / C-tick

- RRL for MIC (KCC)

- BSMI (EMC)

- ICES-003:2004 Issue 4

Operating environment

The adapters are supported in the following environment:

- Operating temperature:

  - 0 - 55° C (32 - 131° F) at 0 - 914 m (0 - 3,000 ft)

  - 10 - 32° C (50 - 90° F) at 914 - 2,133 m (3,000 - 7,000 ft)

- Relative humidity: 20% - 80% (noncondensing)

- Maximum altitude: 2,133 m (7,000 ft)

- Airflow: 200 LFM (linear feet per minute) at 55° C

- Power consumption:

  - Typical: 8.8 W (both ports active)

  - Maximum: 9.4 W with passive cables only; 13.4 W with active optical modules
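The environmental limits above lend themselves to a quick sanity check when planning a deployment. The following minimal Python sketch converts the metric figures, tests a planned inlet temperature and altitude against the two operating bands, and totals the worst-case adapter power. The function names are hypothetical, and counting exactly 914 m as part of the lower band is an assumption, because the two listed ranges share that boundary. The conversion also shows that 32° C is 89.6° F, so the Fahrenheit values in the list are rounded.

```python
# Minimal sketch: check a planned operating point against the listed
# operating environment. Function names are hypothetical; treating exactly
# 914 m as part of the lower altitude band is an assumption.


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def m_to_ft(metres: float) -> float:
    """Convert metres to feet (1 ft = 0.3048 m exactly)."""
    return metres / 0.3048


def within_envelope(temp_c: float, altitude_m: float) -> bool:
    """True if the temperature/altitude pair falls inside the listed ranges."""
    if 0 <= altitude_m <= 914:
        return 0 <= temp_c <= 55
    if 914 < altitude_m <= 2133:
        return 10 <= temp_c <= 32
    # Outside the listed altitude ranges (above the 2,133 m maximum).
    return False


def max_power_w(adapters: int, active_optics: bool) -> float:
    """Worst-case power for a number of adapters, using the listed maximums."""
    per_adapter = 13.4 if active_optics else 9.4
    return adapters * per_adapter


if __name__ == "__main__":
    print(c_to_f(32))                # 32° C expressed in ° F
    print(within_envelope(40, 1500)) # 40° C is too warm above 914 m
    print(max_power_w(2, True))      # two adapters with active optical modules
```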

Top-of-rack Ethernet switches

The following 10 Gb Ethernet top-of-rack switches are supported.

| Part number | Description |

|---|---|

| Switches mounted at the rear of the rack (rear-to-front airflow) | |

| 7159A1X | Lenovo ThinkSystem NE1032 RackSwitch (Rear to Front) |

| 7159B1X | Lenovo ThinkSystem NE1032T RackSwitch (Rear to Front) |

| 7Z330O11WW | Lenovo ThinkSystem NE1064TO RackSwitch (Rear to Front, ONIE) |

| 7159C1X | Lenovo ThinkSystem NE1072T RackSwitch (Rear to Front) |

| 7159BR6 | Lenovo RackSwitch G8124E (Rear to Front) |

| 7159G64 | Lenovo RackSwitch G8264 (Rear to Front) |

| 7159DRX | Lenovo RackSwitch G8264CS (Rear to Front) |

| 7159CRW | Lenovo RackSwitch G8272 (Rear to Front) |

| 7159GR6 | Lenovo RackSwitch G8296 (Rear to Front) |

| Switches mounted at the front of the rack (front-to-rear airflow) | |

| 7159BF7 | Lenovo RackSwitch G8124E (Front to Rear) |

| 715964F | Lenovo RackSwitch G8264 (Front to Rear) |

| 7159DFX | Lenovo RackSwitch G8264CS (Front to Rear) |

| 7159CFV | Lenovo RackSwitch G8272 (Front to Rear) |

| 7159GR5 | Lenovo RackSwitch G8296 (Front to Rear) |

For more information, see the Lenovo Press Product Guides in the 10Gb top-of-rack switch category:

https://lenovopress.com/networking/tor/10gb
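The switch variant to order depends on where the switch is mounted: a switch at the rear of the rack needs rear-to-front airflow, and one at the front needs front-to-rear airflow. The following minimal Python sketch encodes the part numbers from the table above by model and mounting position; `SWITCHES` and `part_number()` are hypothetical names, and a result of `None` means the table lists no variant for that combination.

```python
# Minimal sketch: pick a 10 Gb top-of-rack switch part number by mounting
# position, using the part numbers listed in the table above.
# SWITCHES and part_number() are hypothetical names.
from typing import Optional

REAR = "rear"    # mounted at the rear of the rack: rear-to-front airflow
FRONT = "front"  # mounted at the front of the rack: front-to-rear airflow

SWITCHES = {
    "NE1032": {REAR: "7159A1X"},
    "NE1032T": {REAR: "7159B1X"},
    "NE1064TO": {REAR: "7Z330O11WW"},
    "NE1072T": {REAR: "7159C1X"},
    "G8124E": {REAR: "7159BR6", FRONT: "7159BF7"},
    "G8264": {REAR: "7159G64", FRONT: "715964F"},
    "G8264CS": {REAR: "7159DRX", FRONT: "7159DFX"},
    "G8272": {REAR: "7159CRW", FRONT: "7159CFV"},
    "G8296": {REAR: "7159GR6", FRONT: "7159GR5"},
}


def part_number(model: str, mounted_at: str) -> Optional[str]:
    """Return the part number, or None if the table lists no such variant."""
    return SWITCHES.get(model, {}).get(mounted_at)


if __name__ == "__main__":
    print(part_number("G8272", FRONT))
```

In this table, the ThinkSystem NE-series switches are listed only in rear-to-front versions, so they return `None` for front mounting.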

Warranty

One-year limited warranty. When installed in a supported server, these cards assume the server’s base warranty and any warranty upgrades.


Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

BladeCenter®

ServerProven®

System x®

ThinkSystem®

The following terms are trademarks of other companies:

AMD is a trademark of Advanced Micro Devices, Inc.

Intel® and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Hyper-V®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

IBM® is a trademark of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.