Abstract

The Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) for IBM® BladeCenter® delivers the industry's lowest-latency 10 Gigabit Ethernet connectivity with stateless offloads for converged fabrics in enterprise data centers, high-performance computing, and embedded environments. Financial services, cluster databases, web infrastructure, and cloud computing are just a few examples of applications that can achieve significant throughput and latency improvements, resulting in faster access, real-time response, and an increased number of users per server. The adapter is based on Mellanox ConnectX-2 EN technology, which improves network performance by increasing available bandwidth to the CPU, especially in virtualized server environments.

Note: This product has been withdrawn from marketing and is no longer available for ordering from IBM.

Introduction

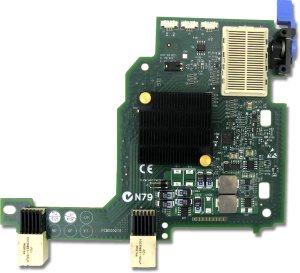

Figure 1 shows the adapter.

Figure 1. Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) for IBM BladeCenter

Part number information

Table 1. Ordering part number and feature code

| Description | Part number | Feature code |
| --- | --- | --- |
| Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) for IBM BladeCenter | 90Y3570 | A1NW |

The part number includes the following items:

- One Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh)

- Documentation CD that contains the Installation and User's Guide

- Important Notices document

Features and benefits

Performance

Based on ConnectX-2 EN technology, the Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) provides a high level of throughput performance for all network environments by removing the I/O bottlenecks in mainstream servers that limit application performance. Blade servers in the BladeCenter H and HT chassis can fully utilize both 10 Gbps ports and achieve up to 40 Gbps of aggregate full-duplex bandwidth (two ports, each carrying 10 Gbps in each direction). Hardware-based stateless offload engines handle the TCP/UDP/IP segmentation, reassembly, and checksum calculations that would otherwise burden the host processor. These offload technologies are fully compatible with Microsoft RSS and NetDMA.
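The 40 Gbps figure follows from simple arithmetic over the two full-duplex ports. As a rough check, the sketch below also compares the per-direction Ethernet load with the raw capacity of the PCI Express x8 Gen 2 host interface listed under Specifications. The PCIe values (5 GT/s per lane, 8b/10b encoding) are general Gen 2 characteristics rather than figures from this guide, and the calculation ignores packet and protocol overhead.

```python
# Back-of-the-envelope bandwidth check for a 2-port 10 Gb adapter.
PORTS = 2
PORT_RATE_GBPS = 10           # per port, per direction
DIRECTIONS = 2                # full duplex: transmit and receive

# Assumed PCIe Gen 2 characteristics (not taken from this guide).
PCIE_LANES = 8
PCIE_GEN2_GT_PER_S = 5.0      # raw transfer rate per lane, per direction
PCIE_GEN2_ENCODING = 8 / 10   # 8b/10b line-code efficiency


def ethernet_aggregate_gbps() -> int:
    """Total Ethernet bandwidth counting both ports and both directions."""
    return PORTS * PORT_RATE_GBPS * DIRECTIONS


def ethernet_per_direction_gbps() -> int:
    """Ethernet bandwidth in one direction across both ports."""
    return PORTS * PORT_RATE_GBPS


def pcie_per_direction_gbps() -> float:
    """Raw PCIe data rate in one direction, before packet overhead."""
    return PCIE_LANES * PCIE_GEN2_GT_PER_S * PCIE_GEN2_ENCODING


if __name__ == "__main__":
    print("Ethernet aggregate:", ethernet_aggregate_gbps(), "Gbps")
    print("Ethernet per direction:", ethernet_per_direction_gbps(), "Gbps")
    print("PCIe x8 Gen 2 per direction:", pcie_per_direction_gbps(), "Gbps")
```

Under these assumptions the host interface has headroom over the 20 Gbps that the two ports can carry in each direction.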

RDMA over Converged Ethernet (RoCE) further accelerates application run time. The RoCE specification provides efficient data transfer with very low latencies on lossless Ethernet networks. RoCE enables the lowest-latency memory transactions, with latency of less than 2 µs at full bandwidth for small message sizes. Very high-volume, transaction-intensive applications, typical of financial market firms and other industries where speed of data delivery is paramount, can take advantage of this capability. With the Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh), high-frequency transaction applications can access trading information in less time, so that trading servers can respond first to new market data and market inefficiencies, while the higher throughput enables higher-volume trading, maximizing liquidity and profitability.

In data mining or web crawl applications, RoCE provides the needed boost in performance to search faster by solving the network latency bottleneck associated with I/O cards and the corresponding transport technology in the cloud. Other applications that benefit from RoCE with ConnectX-2 EN include Web 2.0 (Content Delivery Network), business intelligence, database transactions, and various cloud computing applications. The low power consumption of Mellanox ConnectX-2 EN (less than 3.5 W per port) provides customers with high bandwidth and low latency at the lowest cost of ownership.

I/O virtualization

ConnectX-2 EN supports hardware-based I/O virtualization, providing dedicated adapter resources and guaranteed isolation and protection for virtual machines (VMs) within the server. ConnectX-2 EN gives data center managers better server utilization and LAN and SAN unification while reducing costs, power, and complexity.

Quality of service

Resource allocation per application or per VM is provided by the advanced QoS supported by ConnectX-2 EN. Service levels for multiple traffic types can be based on IETF DiffServ or IEEE 802.1p/Q, along with the Data Center Bridging enhancements, allowing system administrators to prioritize traffic by application, virtual machine, or protocol. This powerful combination of QoS and prioritization provides the ultimate fine-grained control of traffic, ensuring that applications run smoothly in today’s complex environment.
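To make the DiffServ side of this concrete, the following minimal sketch shows how a host application might mark its own traffic so that the network can classify it. It is an illustration only: this guide does not describe how the adapter or the switch modules classify traffic, the helper names are hypothetical, and the DSCP-to-802.1p mapping shown (reusing the top three DSCP bits) is a common convention that is assumed here, not a documented behavior of the card.

```python
import socket

# Standard DiffServ code points (6-bit values).
DSCP_BEST_EFFORT = 0
DSCP_AF41 = 34   # Assured Forwarding class 4, low drop precedence
DSCP_EF = 46     # Expedited Forwarding


def dscp_to_tos(dscp: int) -> int:
    """Place the 6-bit DSCP in the upper six bits of the 8-bit TOS/DS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2


def assumed_priority_for_dscp(dscp: int) -> int:
    """Hypothetical DSCP -> 802.1p mapping: keep the top three DSCP bits."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp >> 3


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Ask the OS to set the DS field on IPv4 packets sent from this socket."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        mark_socket(s, DSCP_EF)
        print("TOS byte:", dscp_to_tos(DSCP_EF))
        print("Assumed 802.1p priority:", assumed_priority_for_dscp(DSCP_EF))
```

Whether a marking is honored end to end depends on the operating system and on the policy configured in the switches.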

Specifications

- CFFh form-factor

- Two full-duplex 10 Gb Ethernet ports

- PCI Express x8 Gen 2 host interface

- Mellanox ConnectX-2 EN ASIC

- Connectivity to high-speed I/O module bays in BladeCenter H and BladeCenter HT chassis

- Features: Stateless Offload, Priority Flow Control, FCoE Ready

- Power consumption: 6.4 W (typical)

- Stateless iSCSI offload

- BladeCenter Open Fabric Manager support (available early 4Q/2011)

- PXE v2.1 boot support

- Wake on LAN support (available early 4Q/2011)

Ethernet support and standards:

- IEEE Std 802.3ae 10 Gigabit Ethernet

- IEEE Std 802.3ad Link Aggregation and Failover

- IEEE Std 802.3x Pause

- IEEE 802.1Q, .1p VLAN tags and priority

- Multicast

- Jumbo frame support (10 KB)

- 128 MAC/VLAN addresses per port
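The VLAN tag and priority support listed above refers to the 4-byte IEEE 802.1Q tag, which carries a 3-bit priority (the 802.1p value), a 1-bit drop-eligible indicator, and a 12-bit VLAN ID. The sketch below packs and unpacks that tag in software to show the layout; the function names are illustrative, and on this adapter the tagging would normally be handled by the driver and hardware rather than by application code.

```python
import struct
from typing import Tuple

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def build_vlan_tag(priority: int, vlan_id: int, drop_eligible: bool = False) -> bytes:
    """Return the 4-byte 802.1Q tag: TPID followed by the tag control field."""
    if not 0 <= priority <= 7:
        raise ValueError("priority must be in the range 0..7")
    if not 0 <= vlan_id <= 4095:
        raise ValueError("VLAN ID must be in the range 0..4095")
    tci = (priority << 13) | (int(drop_eligible) << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> Tuple[int, bool, int]:
    """Split a 4-byte 802.1Q tag into (priority, drop_eligible, vlan_id)."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci >> 13, bool((tci >> 12) & 1), tci & 0x0FFF


if __name__ == "__main__":
    tag = build_vlan_tag(priority=5, vlan_id=100)
    print(tag.hex())
    print(parse_vlan_tag(tag))
```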

Data Center Bridging (DCB) support:

- Enhanced Transmission Selection (ETS) (IEEE 802.1Qaz)

- Quantized Congestion Notification (QCN) (IEEE 802.1Qau)

- Priority-based Flow Control (PFC) (IEEE 802.1Qbb)

- Data Center Bridging Capability eXchange (DCBX) protocol

TCP/UDP/IP stateless offload:

- TCP/UDP/IP checksum offload

- TCP Large Send Offload (< 64 KB) or Giant Send Offload (64 KB - 16 MB) for segmentation

- Receive Side Scaling (RSS) up to 32 queues

- Line rate packet filtering
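With Large Send Offload, the host hands the adapter one large buffer and the adapter splits it into segments that fit the path's maximum segment size (MSS). The sketch below models only that split, in software, to show how much per-packet work is moved off the CPU. The default MSS of 1460 bytes is an assumption (a 1500-byte MTU minus 20-byte IPv4 and 20-byte TCP headers without options), and header replication and checksum updates are left out.

```python
from typing import List


def segment_payload(payload: bytes, mss: int = 1460) -> List[bytes]:
    """Split one large send into MSS-sized pieces, as a segmentation engine would."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


if __name__ == "__main__":
    big_send = bytes(64000)              # one large send handed down by the stack
    segments = segment_payload(big_send)
    print("segments:", len(segments))
    print("last segment size:", len(segments[-1]))
```

Without the offload, the host TCP/IP stack would build and checksum each of these segments itself.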

Additional CPU offloads:

- RDMA over Converged Ethernet support

- FC checksum offload

- VMDirectPath support

- Traffic steering across multiple cores

- Intelligent interrupt coalescence

- Compliant with Microsoft RSS and NetDMA
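Receive Side Scaling and traffic steering spread incoming flows across cores by hashing packet header fields, so that every packet of a flow lands on the same queue. Microsoft's RSS specification uses a Toeplitz hash for this purpose. The sketch below is a simplified software model: the 40-byte key is an arbitrary illustrative value (not a real driver default), the queue is chosen with a plain modulo instead of the indirection table that real RSS implementations use, and the queue count of 32 is taken from the RSS entry in the stateless offload list.

```python
import ipaddress
import struct

NUM_QUEUES = 32                          # "up to 32 queues" per the RSS entry
ILLUSTRATIVE_KEY = bytes(range(1, 41))   # arbitrary 40-byte key for demonstration


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: XOR a sliding 32-bit key window for each set input bit."""
    key_bits = len(key) * 8
    if len(data) * 8 + 32 > key_bits:
        raise ValueError("key is too short for this input")
    key_int = int.from_bytes(key, "big")
    result = 0
    for bit_index in range(len(data) * 8):
        byte = data[bit_index // 8]
        if byte & (0x80 >> (bit_index % 8)):
            # Window of 32 key bits that starts at position bit_index.
            result ^= (key_int >> (key_bits - 32 - bit_index)) & 0xFFFFFFFF
    return result


def flow_tuple_bytes(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Concatenate the IPv4/TCP 4-tuple in network byte order."""
    return (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + struct.pack("!HH", src_port, dst_port)
    )


def select_queue(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                 key: bytes = ILLUSTRATIVE_KEY, num_queues: int = NUM_QUEUES) -> int:
    """Map a flow to a receive queue (simplified: hash modulo queue count)."""
    h = toeplitz_hash(key, flow_tuple_bytes(src_ip, dst_ip, src_port, dst_port))
    return h % num_queues


if __name__ == "__main__":
    print(select_queue("192.0.2.10", "192.0.2.20", 40000, 443))
    print(select_queue("192.0.2.11", "192.0.2.20", 40001, 443))
```

Because the hash depends only on the flow's addresses and ports, packets of one connection stay in order on one queue while different connections spread across queues.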

Hardware-based I/O virtualization:

- Address translation and protection

- Dedicated adapter resources and guaranteed isolation

- Multiple queues per virtual machine

- Hardware switching between guest OSs

- Enhanced QoS for vNICs

- VMware NetQueue support

Storage support:

- Compliant with T11.3 FC-BB-5 FCoE frame format

Operating environment

- Temperature: 0° C to 55° C (32° F to 131° F)

- Operating power: Approximately 6.4 watts

- Dimensions: Approximately 15.9 cm x 12.5 cm (6.26 in. x 4.92 in.)

Supported servers

Table 2. Supported servers

| Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) | 90Y3570 | N | N | N | Y | Y | Y | N | N | N | N | N | N |

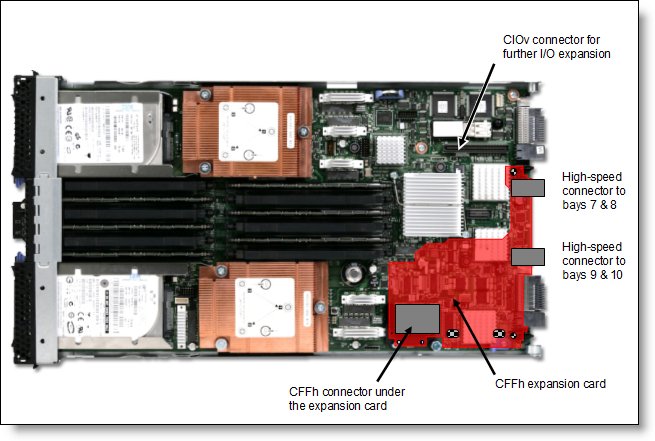

Figure 2 shows where the CFFh card is installed in a BladeCenter server.

Figure 2. Location on the BladeCenter server planar where the CFFh card is installed

Supported BladeCenter chassis and I/O modules

Table 3 lists the chassis and I/O module combinations that the card supports.

Table 3. I/O modules supported with the Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh)

| BNT Virtual Fabric 10 Gb Switch Module | 46C7191 | N | N | Y | N | Y | N | N |
| BNT 6-port 10 Gb High Speed Switch Module | 39Y9267 | N | N | N | N | N | N | N |
| 10 Gb Ethernet Pass-Thru Module | 46M6181 | N | N | N | N | N | N | N |
| Cisco Nexus 4001I Switch Module | 46M6071 | N | N | Y | N | Y | N | N |

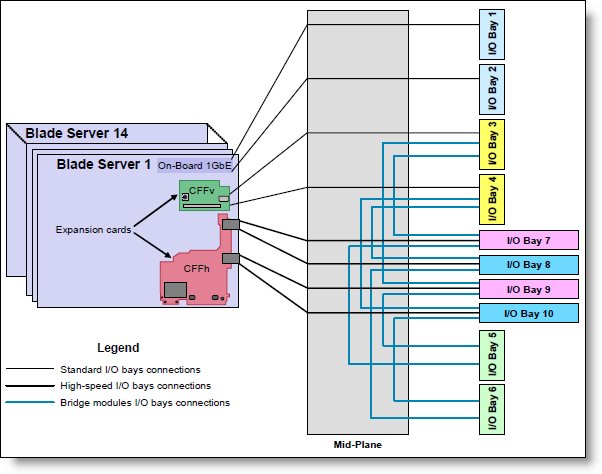

In BladeCenter H, the ports of CFFh cards are routed through the midplane to I/O bays 7, 8, 9, and 10 (Figure 3). The BladeCenter HT works in a similar way because the CFFh cards are also routed through the midplane to I/O bays 7, 8, 9, and 10.

Figure 3. IBM BladeCenter H I/O topology showing the I/O paths from CFFh expansion cards

The Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) requires that two I/O modules be installed in bays 7 and 9 of the BladeCenter H or HT chassis (Table 4).

Table 4. Locations of I/O modules required to connect to the expansion card

| Expansion card | I/O bay 7 | I/O bay 8 | I/O bay 9 | I/O bay 10 |
| --- | --- | --- | --- | --- |
| Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) | Supported I/O module | Not used | Supported I/O module | Not used |

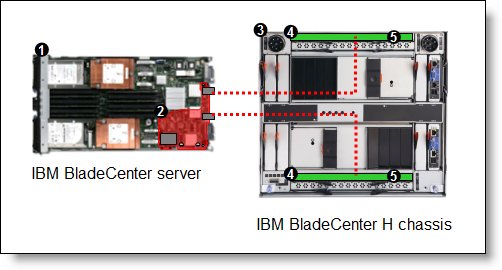

Popular configurations

Figure 4. 20 Gb solution using Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh)

Table 5 lists the components used in this configuration.

Table 5. Components used when connecting Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) to two BNT Virtual Fabric 10 Gb Switch Modules

| Diagram reference | Part number/machine type | Description | Quantity |
| --- | --- | --- | --- |
| | Varies | IBM BladeCenter HS22 or other supported server | 1 to 14 |
| | 90Y3570 | Mellanox 2-port 10Gb Ethernet Expansion Card (CFFh) | 1 per server |
| | 8852 or 8740/8750 | BladeCenter H or BladeCenter HT | 1 |
| | 46C7191 | BNT Virtual Fabric 10 Gb Switch Module | 2 |
| | 44W4408 | IBM 10GBase-SR SFP+ Transceiver | Up to 20* |

Operating system support

- Microsoft Windows Server 2008 R2

- Microsoft Windows Server 2008, Datacenter x64 Edition

- Microsoft Windows Server 2008, Enterprise x64 Edition

- Microsoft Windows Server 2008, Standard x64 Edition

- Microsoft Windows Server 2008, Web x64 Edition

- Red Hat Enterprise Linux 5 Server with Xen x64 Edition

- Red Hat Enterprise Linux 5 Server x64 Edition

- Red Hat Enterprise Linux 6 Server x64 Edition

- SUSE LINUX Enterprise Server 10 for AMD64/EM64T

- SUSE LINUX Enterprise Server 10 with Xen for AMD64/EM64T

- SUSE LINUX Enterprise Server 11 for AMD64/EM64T

- SUSE LINUX Enterprise Server 11 with Xen for AMD64/EM64T

- VMware ESX 4.0

- VMware ESX 4.1

- VMware ESXi 4.0

- VMware ESXi 4.1

Support for operating systems is based on the combination of the expansion card and the blade server in which it is installed. For the latest information about the specific versions and service packs supported, see IBM ServerProven at the following address:

http://ibm.com/servers/eserver/serverproven/compat/us/

Select the blade server and then select the expansion card to see the supported operating systems.

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

BladeCenter®

ServerProven®

The following terms are trademarks of other companies:

Intel® and the Intel logo are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

IBM®, ibm.com®, Redbooks®, and ServicePac® are trademarks of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.