Abstract

The Emulex Virtual Fabric Adapter 5 (VFA5) Network Adapter Family for System x builds on the foundation of previous generations of Emulex VFAs by delivering performance enhancements and new features that reduce complexity, reduce cost, and improve performance.

This product guide provides essential pre-sales information to understand the VFA5 adapter offerings, including their key features and specifications. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about VFA5 adapters and consider their use in IT solutions.

Withdrawn: All adapters and FoD upgrades listed in this product guide are now withdrawn. The follow-on adapter is the VFA5.2 family of adapters, as described in https://lenovopress.com/lp0052-emulex-vfa52-adapters

Change History

Changes in the January 15 update:

- Withdrawn adapters:

- Emulex VFA5 ML2 Dual Port 10GbE SFP+ Adapter, 00D1996

- Emulex VFA5 2x10 GbE SFP+ PCIe Adapter, 00JY820

- Emulex VFA5 2x10 GbE SFP+ Integrated Adapter, AS3M

- Withdrawn FoD upgrades:

- Emulex VFA5 ML2 FCoE/iSCSI License for System x (FoD), 00D8544

- Emulex VFA5 FCoE/iSCSI SW for PCIe Adapter for System x (FoD), 00JY824

Adapter Emulex VFA5 2x10 GbE SFP+ Adapter and FCoE/iSCSI SW, 00JY830, is still available.

Introduction

The Emulex Virtual Fabric Adapter 5 (VFA5) Network Adapter Family for System x builds on the foundation of previous generations of Emulex VFAs by delivering performance enhancements and new features that reduce complexity, reduce cost, and improve performance. The Emulex VFA5 family delivers a new set of powerful features and capabilities that are designed for the virtualized enterprise environment, multi-tenant and single-tenant cloud environments, I/O intensive environments, and converged infrastructure environments. The ML2 form factor adapter is shown in the following figure.

Figure 1. Emulex VFA5 ML2 Dual Port 10GbE SFP+ Adapter

Did you know?

The Emulex VFA5 adapters support three methods to virtualize I/O out of the box: Virtual Fabric mode (vNIC1), Switch Independent mode (vNIC2), and now Universal Fabric Port (UFP) mode. With virtual fabric, up to eight virtual network ports (vNICs) can be created on a single two-port 10 GbE network adapter. The converged protocols iSCSI and FCoE are also supported through the Features on Demand upgrade. By using a common infrastructure for Ethernet and SAN, and by virtualizing your network adapter, you can reduce your infrastructure capital expense.

Part number information

The part numbers to order the adapters are listed in the following table. The FCoE/iSCSI upgrade licenses are a Features on Demand field upgrade that enables the converged networking capabilities of the adapter. Adapter 00JY830 already includes the FCoE/iSCSI license.

Withdrawn: All adapters and FoD upgrades listed in this product guide are now withdrawn from marketing. The follow-on adapter is the VFA5.2 family of adapters, as described in https://lenovopress.com/lp0052-emulex-vfa52-adapters

Table 1. Ordering part number and feature code

| Part number | Feature code | Description |
|---|---|---|
| Adapters | | |
| 00D1996 | A40Q | Emulex VFA5 ML2 Dual Port 10GbE SFP+ Adapter for System x |
| 00JY820 | A5UT | Emulex VFA5 2x10 GbE SFP+ PCIe Adapter for System x |
| 00JY830 | A5UU | Emulex VFA5 2x10 GbE SFP+ Adapter and FCoE/iSCSI SW (includes the FCoE/iSCSI license pre-installed) |
| None** | AS3M* | Emulex VFA5 2x10 GbE SFP+ Integrated Adapter for System x |
| Features on Demand license upgrades | | |
| 00D8544 | A4NZ | Emulex VFA5 ML2 FCoE/iSCSI License for System x (FoD): Features on Demand license for FCoE/iSCSI for 00D1996 |
| 00JY824 | A5UV | Emulex VFA5 FCoE/iSCSI SW for PCIe Adapter for System x (FoD): Features on Demand license for FCoE/iSCSI for 00JY820 and feature code AS3M |

** The Integrated Adapter is available via configure-to-order (CTO) only.

The adapter, when shipped as a stand-alone option, includes the following items:

- One Emulex adapter

- Full-height (3U) bracket attached to the adapter, with a low-profile (2U) bracket included in the box

- Quick Install Guide

- Warranty information and Important Notices flyer

- Documentation CD

The FCoE/iSCSI license is delivered in the form of an authorization code that you enter on the Features on Demand website to generate an activation key.

Supported transceivers and direct-attach cables

Each adapter in the Emulex VFA5 family has two empty SFP+ cages that support SFP+ SR transceivers and twin-ax direct-attach copper cables, as listed in Table 2 and Table 3, respectively.

Table 2. Supported transceivers

| Description | Part number | Feature code |
|---|---|---|
| Brocade 10Gb SFP+ SR Optical Transceiver | 49Y4216 | 0069 |
| QLogic 10Gb SFP+ SR Optical Transceiver | 49Y4218 | 0064 |
| 10Gb SFP+ SR Optical Transceiver | 46C3447 | 5053 |

Table 3. Supported direct-attach cables

| Description | Part number | Feature code |
|---|---|---|
| Passive direct-attach cables | | |
| 0.5 m Passive DAC SFP+ Cable | 00D6288 | A3RG |
| 1 m Passive DAC-SFP+ Cable | 90Y9427 | A1PH |
| 1.5 m Passive DAC SFP+ Cable | 00AY764 | A51N |
| 2 m Passive DAC SFP+ Cable | 00AY765 | A51P |
| 3 m Passive DAC-SFP+ Cable | 90Y9430 | A1PJ |
| 5 m Passive DAC-SFP+ Cable | 90Y9433 | A1PK |
| 7 m Passive DAC SFP+ Cable | 00D6151 | A3RH |
| Active direct-attach cables | | |
| 1 m Active DAC SFP+ Cable | 95Y0323 | A25A |
| 3 m Active DAC SFP+ Cable | 95Y0326 | A25B |
| 5 m Active DAC SFP+ Cable | 95Y0329 | A25C |

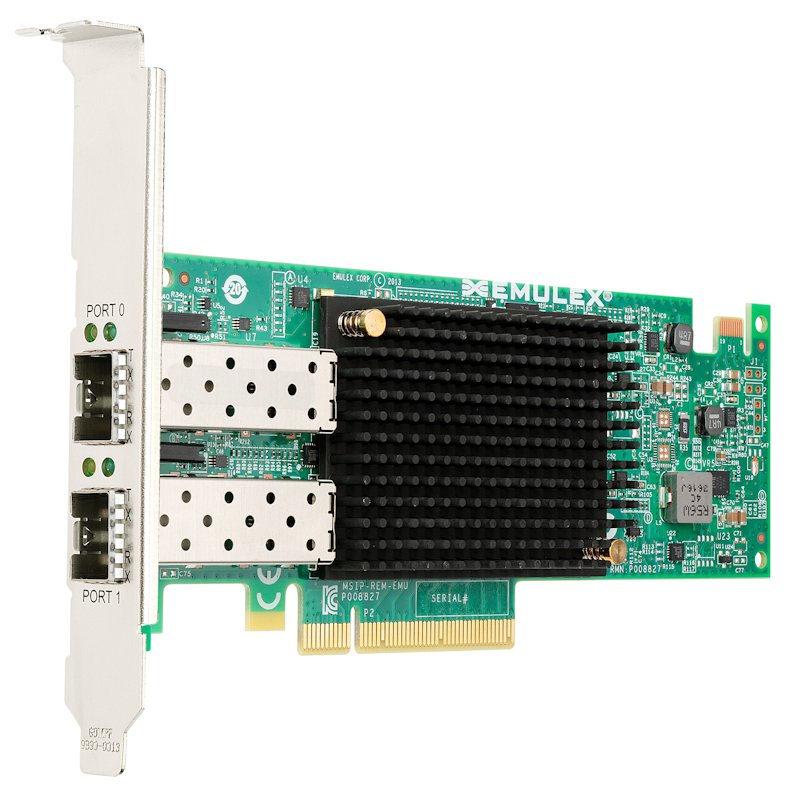

The following figure shows the PCIe adapter.

Figure 2. Emulex VFA5 2x10 GbE SFP+ PCIe Adapter for System x

Features

The VFA5 adapters offer virtualized networking, support a converged infrastructure, and improve performance with powerful offload engines. The adapter has the following features and benefits.

Reduce complexity

- Virtual NIC emulation

The Emulex VFA5 supports three NIC virtualization modes, right out of the box: Virtual Fabric mode (vNIC1), switch independent mode (vNIC2), and Universal Fabric Port (UFP). With NIC virtualization, each of the two physical ports on the adapter can be logically configured to emulate up to four virtual NIC (vNIC) functions with user-definable bandwidth settings. Additionally, each physical port can simultaneously support a storage protocol (FCoE or iSCSI).

- Common drivers and tools

You can deploy faster and manage less when you implement both Virtual Fabric Adapters (VFAs) and Host Bus Adapters (HBAs) that are developed by Emulex. VFAs and HBAs that are developed by Emulex use the same installation and configuration process, streamlining the effort to get your server running, and saving you valuable time. They also use the same Fibre Channel drivers, reducing time to qualify and manage storage connectivity. With Emulex's OneCommand Manager, you can manage VFAs and HBAs that are developed by Emulex throughout the data center from a single console.
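The vNIC arithmetic behind the Virtual NIC emulation item can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `vnic_layout` and its parameters are hypothetical, and it assumes (as the Modes of operation section states for vNIC2 mode) that enabling a storage protocol takes one of the four functions on each physical port.

```python
def vnic_layout(ports=2, vnics_per_port=4, storage_enabled=False):
    """Count the Ethernet and storage functions presented in a vNIC mode.

    Hypothetical helper for illustration; not part of any Emulex or Lenovo tool.
    """
    # Assumption: with FCoE or iSCSI enabled, one function per port carries storage.
    storage_per_port = 1 if storage_enabled else 0
    ethernet_per_port = vnics_per_port - storage_per_port
    return {
        "ethernet": ports * ethernet_per_port,
        "storage": ports * storage_per_port,
    }


if __name__ == "__main__":
    print(vnic_layout())                      # NIC-only configuration
    print(vnic_layout(storage_enabled=True))  # converged configuration
```

With the defaults, the sketch yields eight Ethernet vNICs per adapter; with storage enabled, it yields six Ethernet vNICs and two storage functions.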

Reduce cost

- Multi-protocol support for 10 GbE

The Emulex VFA5 offers two 10 Gb Ethernet ports and is cost- and performance-optimized for integrated and converged infrastructures. The VFA5 offers a “triple play” of converged data, storage, and low-latency RDMA networking on a common Ethernet fabric. The Emulex VFA5 provides customers with a flexible storage protocol option for running heterogeneous workloads on their increasingly converged infrastructures.

- Power savings

Compared with previous-generation Emulex VFA3 adapters, the Emulex VFA5 can save up to 50 watts per server, reducing energy and cooling OPEX through improved storage offloads.

- Delay purchasing features until you need them with Features on Demand (FoD)

The VFA5 adapters use Features on Demand (FoD) software activation technology. FoD enables the adapter to be initially deployed as a low-cost Ethernet NIC, and then later upgraded in the field to support FCoE or iSCSI hardware offload.

Improve performance

- VXLAN/NVGRE offload technology

Emulex supports Microsoft’s network virtualization using generic routing encapsulation (NVGRE) and VMware’s virtual extensible LAN (VXLAN). These technologies create more logical LANs (for traffic isolation) that are needed by cloud architectures. Because these protocols increase the processing burden on the server’s CPU, the VFA5 has an offload engine specifically designed for processing these tags. The resulting benefit is that cloud providers can leverage the benefits of VXLAN/NVGRE while not being penalized with a reduction in the server’s performance.

- Full hardware storage offloads

The Emulex VFA5 supports a hardware offload engine (optionally enabled via a Features on Demand upgrade, standard on 00JY830) that accelerates storage protocol processing, which enables the server’s processing resources to focus on applications, improving the server’s performance.

- Advanced RDMA capabilities

RDMA over Converged Ethernet (RoCE) delivers application and storage acceleration through faster I/O operations with support for Windows Server SMB Direct and Linux NFS protocols. With RoCE, the VFA5 adapter helps accelerate workloads in the following ways:

- Capability to deliver a 4x boost in small packet network performance vs. previous generation adapters, which is critical for transaction-heavy workloads

- Desktop-like experiences for VDI with up to 1.5 million FCoE or iSCSI I/O operations per second (IOPS)

- Ability to scale Microsoft SQL Server, Exchange, and SharePoint using SMB Direct optimized with RoCE

- More VDI desktops/server due to up to 18% higher CPU effectiveness (the percentage of server CPU utilization for every 1 Mbps I/O throughput)

- Superior application performance for VMware hybrid cloud workloads due to up to 129% higher I/O throughput compared to adapters without offload

Specifications

Adapter specifications:

- Dual-channel 10 Gbps Ethernet controller

- Based on the Emulex XE102 ASIC

- PCI Express 3.0 x8 host interface

- Line-rate 10 GbE performance

- Upgradeable to support converged networking (FCoE and iSCSI) through Features on Demand

- 2 SFP+ empty cages to support either SFP+ SR or twin-ax copper connections

- SFP+ SR link is with the SFP+ SR optical module with LC connectors.

- SFP+ twin-ax copper link is with the SFP+ direct attached copper module/cable.

- Power dissipation: 14.4 W typical

- Form factor:

- Mezzanine LOM (ML2) form factor (adapter 00D1996)

- PCIe low-profile form factor (adapters 00JY820, 00JY830, and feature code AS3M)

Virtualization features

- Virtual Fabric support:

- Virtual Fabric mode (vNIC1)

- Switch Independent mode (vNIC2)

- Universal Fabric Port (UFP) support

- Four NIC partitions per adapter port

- Complies with PCI-SIG specification for SR-IOV

- VXLAN/NVGRE encapsulation and offload

- PCI-SIG Address Translation Service (ATS) v1.0

- Virtual Switch Port Mirroring for Diagnostic purposes

- Virtual Ethernet Bridge (VEB)

- 62 Virtual functions (VF)

- QoS for controlling and monitoring bandwidth that is assigned to and used by virtual entities

- Traffic shaping and QoS across each virtual function (VF) and physical function (PF)

- Message Signaled Interrupts (MSI-X) support

- VMware NetQueue / VMQ support

- Microsoft VMQ & Dynamic VMQ support in Hyper-V

Ethernet and NIC features

- NDIS 5.2, 6.0, and 6.2 compliant Ethernet functionality

- IPv4/IPv6 TCP and UDP checksum offload

- IPv4/IPv6 TCP, UDP Receive Side Scaling (RSS)

- IPv4/IPv6 Large Send Offload (LSO)

- Programmable MAC addresses

- 128 MAC/vLAN addresses per port

- Support for HASH-based broadcast frame filters per port

- vLAN insertion and extraction

- 9216 byte Jumbo frame support

- Receive Side Scaling (RSS)

- Filters: MAC and vLAN

Remote Direct Memory Access (RDMA) (Windows & Linux):

- Direct data placement in application buffers without processor intervention

- Supports RDMA over converged Ethernet (RoCE) specifications

- Linux OpenFabrics Enterprise Distribution (OFED) support

- Low latency queues for small packet sends and receives

- Local interprocess communication option by internal VEB switch

- TCP/IP Stack By-Pass

Data Center Bridging / Converged Enhanced Ethernet (DCB/CEE):

- Hardware Offloads of Ethernet TCP/IP

- 802.1Qbb Priority Flow Control (PFC)

- 802.1Qaz Enhanced Transmission Selection (ETS)

- 802.1Qaz Data Center Bridging Exchange (DCBX)

Fibre Channel over Ethernet (FCoE) offload (included with 00JY830; optional via a Features on Demand upgrade for all other adapters):

- Hardware offloads of Ethernet TCP/IP

- ANSI T11 FC-BB-5 Support

- Programmable Worldwide Name (WWN)

- Support for FIP and FCoE Ether Types

- Supports up to 255 NPIV Interfaces per port

- FCoE Initiator

- Common driver for Emulex Universal CNA and Fibre Channel HBAs

- 255 N_Port ID Virtualization (NPIV) interfaces per port

- Fabric Provided MAC Addressing (FPMA) support

- Up to 4096 concurrent port logins (RPIs) per port

- Up to 2048 active exchanges (XRIs) per port

iSCSI offload (included with 00JY830; optional via a Features on Demand upgrade for all other adapters):

- Full iSCSI Protocol Offload

- Header, Data Digest (CRC), and PDU

- Direct data placement of SCSI data

- 2048 Offloaded iSCSI connections

- iSCSI initiator and concurrent initiator/target modes

- Multipath I/O

- OS-neutral INT13-based iSCSI boot and iSCSI crash memory dump support

- RFC 4171 Internet Storage Name Service (iSNS)

Standards

The following IEEE standards are supported:

- 802.3-2008 10GBASE Ethernet port

- 802.1Q VLAN

- 802.3x Flow Control with pause frames

- 802.1Qbg Edge Virtual Bridging

- 802.1Qaz Enhanced Transmission Selection (ETS) and Data Center Bridging Exchange (DCBX)

- 802.1Qbb Priority Flow Control

- 802.3ad Link Aggregation/LACP

- 802.1AB Link Layer Discovery Protocol

- 802.3ae (SR Optics)

- 802.1p (Priority of Service)

- IPv4 (RFC 791)

- IPv6 (RFC 2460)

Server support

The Emulex VFA5 adapter family is supported in the System x servers that are listed in the following tables.

Support for System x and dense servers with Xeon E5/E7 v4 and E3 v5 processors

| Part number | Description | x3250 M6 (3943) | x3250 M6 (3633) | x3550 M5 (8869) | x3650 M5 (8871) | x3850 X6/x3950 X6 (6241, E7 v4) | nx360 M5 (5465, E5-2600 v4) | sd350 (5493) |
|---|---|---|---|---|---|---|---|---|
| 00D1996 | Emulex VFA5 ML2 Dual Port 10GbE SFP+ Adapter | N | N | Y | Y | Y | Y | N |
| 00JY820 | Emulex VFA5 2x10 GbE SFP+ PCIe Adapter | N | N | Y | Y | Y | Y | Y |
| 00JY830 | Emulex VFA5 2x10 GbE SFP+ Adapter and FCoE/iSCSI SW | N | N | Y | Y | Y | Y | N |
| fc AS3M | Emulex VFA5 2x10 GbE SFP+ Integrated Adapter | N | N | N | N | N | N | N |
| 00D8544 | Emulex VFA5 ML2 FCoE/iSCSI License (FoD) | N | N | Y | Y | Y | Y | N |
| 00JY824 | Emulex VFA5 FCoE/iSCSI SW for PCIe Adapter (FoD) | N | N | Y | Y | Y | Y | N |

Support for servers with Intel Xeon v3 processors

| Part number | Description | x3100 M5 (5457) | x3250 M5 (5458) | x3500 M5 (5464) | x3550 M5 (5463) | x3650 M5 (5462) | x3850 X6/x3950 X6 (6241, E7 v3) | nx360 M5 (5465) |
|---|---|---|---|---|---|---|---|---|
| 00D1996 | Emulex VFA5 ML2 Dual Port 10GbE SFP+ Adapter | N | N | N | Y | Y | Y | Y |
| 00JY820 | Emulex VFA5 2x10 GbE SFP+ PCIe Adapter | N | Y | Y | Y | Y | Y | Y |
| 00JY830 | Emulex VFA5 2x10 GbE SFP+ Adapter and FCoE/iSCSI SW | N | Y | Y | Y | Y | Y | Y |
| fc AS3M* | Emulex VFA5 2x10 GbE SFP+ Integrated Adapter | N | Y | Y | N | N | N | N |
| 00D8544 | Emulex VFA5 ML2 FCoE/iSCSI License (FoD) | N | N | N | Y | Y | Y | Y |
| 00JY824 | Emulex VFA5 FCoE/iSCSI SW for PCIe Adapter (FoD) | N | Y | Y | Y | Y | Y | Y |

* The Integrated Adapter is available via configure-to-order (CTO) only.

Support for servers with Intel Xeon v2 processors

| Part number | Description | x3500 M4 (7383, E5-2600 v2) | x3530 M4 (7160, E5-2400 v2) | x3550 M4 (7914, E5-2600 v2) | x3630 M4 (7158, E5-2400 v2) | x3650 M4 (7915, E5-2600 v2) | x3650 M4 BD (5466) | x3650 M4 HD (5460) | x3750 M4 (8752) | x3750 M4 (8753) | x3850 X6/x3950 X6 (3837) | x3850 X6/x3950 X6 (6241, E7 v2) | dx360 M4 (E5-2600 v2) | nx360 M4 (5455) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 00D1996 | Emulex VFA5 ML2 Dual Port 10GbE SFP+ Adapter | N | N | N | N | N | N | N | Y | Y | Y | Y | N | N |
| 00JY820 | Emulex VFA5 2x10 GbE SFP+ PCIe Adapter | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |
| 00JY830 | Emulex VFA5 2x10 GbE SFP+ Adapter and FCoE/iSCSI SW | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |
| fc AS3M* | Emulex VFA5 2x10 GbE SFP+ Integrated Adapter | N | Y | Y | Y | Y | Y | Y | N | N | N | N | Y | Y |
| 00D8544 | Emulex VFA5 ML2 FCoE/iSCSI License (FoD) | N | N | N | N | N | N | N | Y | Y | Y | Y | N | N |
| 00JY824 | Emulex VFA5 FCoE/iSCSI SW for PCIe Adapter (FoD) | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | N |

* The Integrated Adapter is available via configure-to-order (CTO) only.

For the latest information about the System x servers that support this adapter, including support for older servers, see ServerProven® at the following website: http://www.lenovo.com/us/en/serverproven/xseries/lan/matrix.shtml

Modes of operation

The Emulex VFA5 adapter family supports three types of virtual NIC (vNIC) operating modes, and a physical NIC (pNIC) operating mode. This support is built into the adapter:

- Virtual Fabric Mode (also known as vNIC1 mode) works only with RackSwitch™ G8124E and G8264 switches. In this mode, the Emulex adapter communicates with the switch to obtain vNIC parameters (using DCBX). A special tag is added within each data packet and is later removed by the NIC or switch for each vNIC group to maintain separation of the virtual data paths.

In vNIC mode, each physical port is divided into four virtual ports for a maximum of eight virtual NICs per adapter. The default bandwidth for each vNIC is 2.5 Gbps. Bandwidth for each vNIC can be configured through the RackSwitch from 100 Mbps to 10 Gbps, up to a total of 10 Gb per physical port. The vNICs can also be configured to have 0 bandwidth if you must allocate the available bandwidth to fewer than four vNICs per physical port. In Virtual Fabric Mode, you can change the bandwidth allocations through the switch user interfaces without requiring a reboot of the server.

vNIC bandwidth allocation and metering are performed by both the switch and the VFA. In such a case, a bidirectional virtual channel of an assigned bandwidth is established between them for every defined vNIC.

- In Switch Independent Mode (also known as vNIC2 mode), the adapter works with any 10 Gb Ethernet switch. Switch Independent Mode offers the same capabilities as Virtual Fabric Mode in terms of the number of vNICs and the bandwidth each can be configured to have. Switch Independent Mode extends the existing customer VLANs to the virtual NIC interfaces. The IEEE 802.1Q VLAN tag is essential to the separation of the vNIC groups by the NIC adapter or driver and the switch. The VLAN tags are added to the packet by the applications or drivers at each endpoint rather than by the switch.

vNIC bandwidth allocation and metering are performed only by the VFA itself. In such a case, a unidirectional virtual channel is established where bandwidth management is performed only for the outgoing traffic on the VFA side (server-to-switch). The incoming traffic (switch-to-server) uses all available physical port bandwidth, because no metering is performed on either the VFA or the switch side.

In vNIC2 mode, when storage protocols are enabled on the Emulex 10GbE Virtual Fabric Adapters, six vNICs (three per physical port) are Ethernet, and two vNICs (one per physical port) are either iSCSI or FCoE.

- Universal Fabric Port (UFP) provides a more feature-rich solution than the original vNIC Virtual Fabric mode. Like Virtual Fabric mode vNIC, UFP allows carving up a single 10 Gb port into four virtual NICs (called vPorts in UFP). UFP also has a number of modes associated with it, including:

- Tunnel mode: Provides Q-in-Q mode, where the vPort is customer VLAN-independent (very similar to vNIC Virtual Fabric Dedicated Uplink Mode)

- Trunk mode: Provides a traditional 802.1Q trunk mode (multi-VLAN trunk link) to the virtual NIC (vPort) interface; that is, it permits host-side tagging

- Access mode: Provides a traditional access mode (single untagged VLAN) to the virtual NIC (vPort) interface which is similar to a physical port in access mode

- FCoE mode: Provides FCoE functionality to the vPort

- Auto-VLAN mode: Auto VLAN creation for Qbg and VMready environments

Only one vPort (vPort 2) per physical port can be bound to FCoE. If FCoE is not desired, vPort 2 can be configured for one of the other modes.

- In pNIC mode, the adapter operates as a standard dual-port 10 Gbps Ethernet adapter, and it functions with any 10 GbE switch. In pNIC mode, with the Emulex FCoE/iSCSI License, the card operates in a traditional Converged Network Adapter (CNA) mode with two Ethernet ports and two storage ports (iSCSI or FCoE) available to the operating system.
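The Virtual Fabric Mode bandwidth rules described above (at most four vNICs per physical port, each set to 0 or to a value from 100 Mbps to 10 Gbps, with a combined total of no more than 10 Gb per port) can be expressed as a small planning check. The following Python sketch is illustrative only: `validate_port_allocation` and the constant names are hypothetical, and reading "up to a total of 10 Gb per physical port" as a cap on the sum of the vNIC allocations is an assumption. The actual configuration is done through the RackSwitch interfaces.

```python
PORT_CAPACITY_MBPS = 10_000  # one physical 10 GbE port
MIN_VNIC_MBPS = 100          # smallest non-zero vNIC allocation
MAX_VNICS_PER_PORT = 4       # four vNICs per physical port
DEFAULT_VNIC_MBPS = 2_500    # default allocation per vNIC


def validate_port_allocation(vnic_mbps):
    """Return a list of problems found in a per-port plan (empty if the plan is valid).

    Hypothetical helper for illustration; it does not talk to a switch or adapter.
    """
    problems = []
    if len(vnic_mbps) > MAX_VNICS_PER_PORT:
        problems.append(
            f"{len(vnic_mbps)} vNICs requested; a port supports at most {MAX_VNICS_PER_PORT}"
        )
    for index, mbps in enumerate(vnic_mbps, start=1):
        # 0 is allowed so that bandwidth can be given to fewer than four vNICs.
        if mbps != 0 and not (MIN_VNIC_MBPS <= mbps <= PORT_CAPACITY_MBPS):
            problems.append(f"vNIC {index}: {mbps} Mbps is outside 100-10000 Mbps")
    total = sum(vnic_mbps)
    if total > PORT_CAPACITY_MBPS:  # assumed reading of the per-port total
        problems.append(f"total {total} Mbps exceeds the {PORT_CAPACITY_MBPS} Mbps port")
    return problems


if __name__ == "__main__":
    print(validate_port_allocation([DEFAULT_VNIC_MBPS] * 4))
    print(validate_port_allocation([5_000, 5_000, 100, 0]))
```

In this sketch, the default split of four 2.5 Gbps vNICs passes with no problems reported, while a plan of 5000, 5000, 100, and 0 Mbps is flagged because its total is 10,100 Mbps.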

The following table compares the three virtual fabric modes.

Table 5. Comparison of virtual fabric modes

| Function | Virtual Fabric Mode (vNIC1) | Switch Independent Mode (vNIC2) | UFP Mode |
|---|---|---|---|
| Description | Intelligence in the Networking OS working with select Emulex adapters. VLAN based. | Intelligence in the adapter, independent of the upstream networking device. | Intelligence in the adapter, independent of the upstream networking device. |
| Supported switches | G8124, G8264, G8264T, G8264CS | All 10 GbE switches | G8264 (NOS 7.9 or later) |
| Number of vNICs per physical 10 Gb port | 4 (3 if storage functions are used to provide a vHBA) | 4 (3 if storage) | 4 (3 if storage) |
| Minimum vNIC bandwidth | 100 Mb | 100 Mb | 100 Mb |
| Server to switch bandwidth limit per vNIC | Yes | No | Yes, maximum burst allowed and minimum guarantee |
| Switch to server bandwidth limit per vNIC | Yes | No | Yes, maximum burst allowed and minimum guarantee |
| IEEE 802.1Q VLAN tagging | Optional | Required (untagged traffic is tagged with the LPVID, which is configured in UEFI on a per-vNIC basis) | Optional for Trunk or Tunnel mode; not supported for Access mode |
| Isolated NIC teaming failover per vNIC | Yes | No | Yes (NOS 7.9 or later) |
| Switch modes | Tunnel mode | Access or Trunk mode (two vNICs that are part of the same physical port cannot carry the same VLAN) | Access, Trunk, Tunnel, and FCoE modes |
| Uplink connectivity | Dedicated | Shared | Dedicated for Tunnel mode; Shared for other modes |
| iSCSI/FCoE support | Yes | Yes | Yes |
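As a planning aid, the "Supported switches" row of Table 5 can be captured as data and queried by switch model. The Python sketch below is a hedged illustration: the dictionary, the `ANY` marker, and the function `modes_for_switch` are hypothetical names, the model strings are copied from the table as written, and the NOS 7.9 requirement for UFP is noted in a comment but not checked.

```python
ANY = "*"  # stands for "All 10 GbE switches" in Table 5

# Supported switches per virtual fabric mode, transcribed from Table 5.
SUPPORTED_SWITCHES = {
    "vNIC1": {"G8124", "G8264", "G8264T", "G8264CS"},
    "vNIC2": {ANY},
    "UFP": {"G8264"},  # Table 5 also requires NOS 7.9 or later (not modeled here)
}


def modes_for_switch(switch_model):
    """Return the virtual fabric modes that Table 5 lists for a switch model.

    Hypothetical helper for illustration; consult ServerProven and the switch
    documentation for authoritative support statements.
    """
    return sorted(
        mode
        for mode, switches in SUPPORTED_SWITCHES.items()
        if ANY in switches or switch_model in switches
    )


if __name__ == "__main__":
    for model in ("G8264", "G8124", "G8272"):
        print(model, modes_for_switch(model))
```

A switch that appears only under "All 10 GbE switches" resolves to Switch Independent Mode alone, which matches the table: vNIC2 is the mode that does not depend on the upstream switch. pNIC mode is not a virtual fabric mode and is therefore left out of the lookup.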

Physical specifications

ML2 adapter (approximate):

- Height: 69 mm (2.7 in.)

- Length: 168 mm (6.6 in.)

- Width: 17 mm (0.7 in.)

PCIe adapters:

- Height: 167 mm (6.6 in.)

- Width: 69 mm (2.7 in.)

- Depth: 17 mm (0.7 in.)

Shipping dimensions and weight (approximate):

- Height: 189 mm (7.51 in.)

- Width: 90 mm (3.54 in.)

- Depth: 38 mm (1.50 in.)

- Weight: 450 g (0.99 lb)

Operating environment

This adapter is supported in the following environment:

- Temperature:

- Operating: 0 °C to 55 °C (32 °F to 131 °F)

- Non-operating: -40 °C to 70 °C (-40 °F to 158 °F)

- Humidity: 5 - 95%, non-condensing

Warranty

One-year limited warranty. When installed in a System x server, these cards assume your system’s base warranty and any warranty upgrade.

Operating systems supported

The ML2 adapter supports the following operating systems:

- Microsoft Windows Server 2008 R2

- Microsoft Windows Server 2012

- Microsoft Windows Server 2012 R2

- Red Hat Enterprise Linux 6 Server x64 Edition

- Red Hat Enterprise Linux 7

- SUSE LINUX Enterprise Server 11 for AMD64/EM64T

- SUSE LINUX Enterprise Server 11 with Xen for AMD64/EM64T

- SUSE LINUX Enterprise Server 12

- VMware vSphere 5.0 (ESXi)

- VMware vSphere 5.1 (ESXi)

- VMware vSphere 5.5 (ESXi)

The PCIe adapters support the following operating systems:

- Microsoft Windows Server 2008 R2

- Microsoft Windows Server 2012

- Microsoft Windows Server 2012 R2

- Red Hat Enterprise Linux 6 Server Edition

- Red Hat Enterprise Linux 6 Server x64 Edition

- Red Hat Enterprise Linux 7

- SUSE LINUX Enterprise Server 11 for AMD64/EM64T

- SUSE LINUX Enterprise Server 11 for x86

- SUSE LINUX Enterprise Server 11 with Xen for AMD64/EM64T

- VMware vSphere 5.1 (ESXi)

- VMware vSphere 5.5 (ESXi)

- VMware vSphere 6.0 (ESXi)

Note: RDMA is not supported with VMware ESXi.

For the latest information about the specific versions and service packs that are supported, see ServerProven at http://www.lenovo.com/us/en/serverproven/xseries/lan/matrix.shtml.

Top-of-rack Ethernet switches

The following 10 Gb Ethernet top-of-rack switches are supported.

| Part number | Description |

|---|---|

| Switches mounted at the rear of the rack (rear-to-front airflow) | |

| 7159A1X | Lenovo ThinkSystem NE1032 RackSwitch (Rear to Front) |

| 7159B1X | Lenovo ThinkSystem NE1032T RackSwitch (Rear to Front) |

| 7Z330O11WW | Lenovo ThinkSystem NE1064TO RackSwitch (Rear to Front, ONIE) |

| 7159C1X | Lenovo ThinkSystem NE1072T RackSwitch (Rear to Front) |

| 7159BR6 | Lenovo RackSwitch G8124E (Rear to Front) |

| 7159G64 | Lenovo RackSwitch G8264 (Rear to Front) |

| 7159DRX | Lenovo RackSwitch G8264CS (Rear to Front) |

| 7159CRW | Lenovo RackSwitch G8272 (Rear to Front) |

| 7159GR6 | Lenovo RackSwitch G8296 (Rear to Front) |

| Switches mounted at the front of the rack (front-to-rear airflow) | |

| 7159BF7 | Lenovo RackSwitch G8124E (Front to Rear) |

| 715964F | Lenovo RackSwitch G8264 (Front to Rear) |

| 7159DFX | Lenovo RackSwitch G8264CS (Front to Rear) |

| 7159CFV | Lenovo RackSwitch G8272 (Front to Rear) |

| 7159GR5 | Lenovo RackSwitch G8296 (Front to Rear) |

For more information, see the Lenovo Press Product Guides in the 10Gb top-of-rack switch category:

https://lenovopress.com/networking/tor/10gb

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ServerProven®

System x®

ThinkSystem®

The following terms are trademarks of other companies:

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Hyper-V®, SQL Server®, SharePoint®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

ibm.com® is a trademark of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.