Author

Updated: 1 Aug 2024

Form Number: LP1170

PDF size: 31 pages, 2.9 MB

Abstract

The ThinkSystem Mellanox ConnectX-6 HDR100/100GbE VPI Adapters offer 100 Gb/s Ethernet and InfiniBand connectivity for high-performance HPC, cloud, storage, and machine learning applications.

This product guide provides essential presales information to understand the adapter and its key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the ConnectX-6 HDR100 adapters and consider their use in IT solutions.

Change History

Changes in the August 1, 2024 update:

- Added information about factory preload support for operating systems - Operating system support section

Introduction

The ThinkSystem Mellanox ConnectX-6 HDR100 Adapters offer 100 Gb/s Ethernet and InfiniBand connectivity for high-performance HPC, cloud, storage, and machine learning applications.

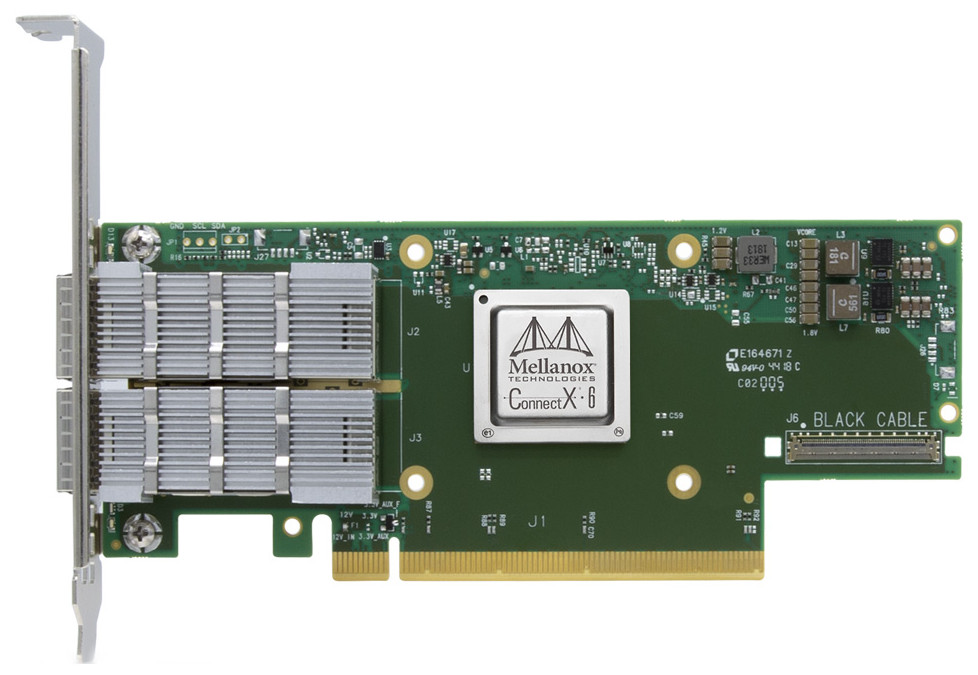

The following figure shows the 2-port ConnectX-6 HDR100/100GbE VPI Adapter (the standard heat sink has been removed in this photo).

Figure 1. ThinkSystem Mellanox ConnectX-6 HDR100/100GbE QSFP56 2-port PCIe VPI Adapter

Did you know?

Mellanox ConnectX-6 brings new acceleration engines for maximizing High Performance, Machine Learning, Storage, Web 2.0, Cloud, Data Analytics and Telecommunications platforms. ConnectX-6 HDR100 adapters support up to 100G total bandwidth at sub-600 nanosecond latency, and NVMe over Fabric offloads, providing the highest performance and most flexible solution for the most demanding applications and markets. ThinkSystem servers with Mellanox adapters and switches deliver the most intelligent fabrics for High Performance Computing clusters.

Part number information

The following table shows the part numbers for the adapters.

The part numbers include the following:

- One Mellanox adapter

- Low-profile (2U) and full-height (3U) adapter brackets

- Documentation

Note: These adapters were previously named as follows:

- ThinkSystem Mellanox ConnectX-6 HDR100 QSFP56 1-port PCIe InfiniBand Adapter

- ThinkSystem Mellanox ConnectX-6 HDR100 QSFP56 2-port PCIe InfiniBand Adapter

Supported transceivers and cables

The adapter has one or two empty QSFP56 cages for connectivity.

The following table lists the supported transceivers.

Configuration notes:

- Transceiver AV1D also supports 40Gb when installed in a Mellanox adapter.

- For the transceiver and cable support for the Mellanox QSA 100G to 25G Cable Adapter (4G17A10853), see the 25G Cable Adapter transceiver and cable support section.

The following table lists the supported optical cables.

The following table lists the supported direct-attach copper (DAC) cables.

25G Cable Adapter transceiver and cable support

The Mellanox QSA 100G to 25G Cable Adapter (4G17A10853) supports the transceivers listed in the following table.

The Mellanox QSA 100G to 25G Cable Adapter (4G17A10853) supports the fiber optic cables and Active Optical Cables listed in the following table.

The Mellanox QSA 100G to 25G Cable Adapter (4G17A10853) supports the direct-attach copper (DAC) cables listed in the following table.

Features

Machine learning and big data environments

Data analytics has become an essential function within many enterprise data centers, clouds and hyperscale platforms. Machine learning relies on especially high throughput and low latency to train deep neural networks and to improve recognition and classification accuracy. ConnectX-6 offers an excellent solution to provide machine learning applications with the levels of performance and scalability that they require.

ConnectX-6 uses RDMA technology to deliver low latency and high performance. ConnectX-6 enhances RDMA network capabilities even further by delivering end-to-end packet-level flow control.

Security

ConnectX-6 block-level encryption offers a critical innovation to network security. As data in transit is stored or retrieved, it undergoes encryption and decryption. The ConnectX-6 hardware offloads the IEEE AES-XTS encryption/decryption from the CPU, saving latency and CPU utilization. It also guarantees protection for users sharing the same resources through the use of dedicated encryption keys.

By performing block-storage encryption in the adapter, ConnectX-6 eliminates the need for self-encrypting disks. This gives customers the freedom to choose their preferred storage device, including those that traditionally do not provide encryption. ConnectX-6 can support Federal Information Processing Standards (FIPS) compliance.

ConnectX-6 also includes a hardware Root-of-Trust (RoT), which uses HMAC relying on a device-unique key. This provides both secure boot and cloning protection. ConnectX-6 also provides secured debugging capabilities for device and firmware protection, without the need for physical access.
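
To illustrate the general idea of HMAC authentication with a device-unique key, here is a minimal Python sketch. It is not the ConnectX-6 implementation: the keys, the firmware bytes, the choice of SHA-256, and the function names `sign_image` and `verify_image` are all assumptions made for illustration.

```python
import hashlib
import hmac


def sign_image(device_key: bytes, image: bytes) -> bytes:
    """Return an HMAC-SHA256 tag that binds an image to one device key."""
    return hmac.new(device_key, image, hashlib.sha256).digest()


def verify_image(device_key: bytes, image: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_image(device_key, image)
    return hmac.compare_digest(expected, tag)


# Hypothetical values, for illustration only.
key_a = b"device-A-unique-key"
key_b = b"device-B-unique-key"
firmware = b"example firmware image bytes"

# A tag created for device A verifies only with device A's key.
tag = sign_image(key_a, firmware)
```

Because the tag depends on the key, an image and tag copied to a device with a different key fail verification, which is the intuition behind cloning protection.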

Storage environments

NVMe storage devices offer very fast access to storage media. The evolving NVMe over Fabric (NVMe-oF) protocol leverages RDMA connectivity to remotely access NVMe storage devices efficiently, while keeping the end-to-end NVMe model at the lowest latency. With its NVMe-oF target and initiator offloads, ConnectX-6 brings further optimization to NVMe-oF, improving CPU utilization and scalability.

Cloud and Web 2.0 environments

Telco, Cloud and Web 2.0 customers developing their platforms on software-defined network (SDN) environments are leveraging the Virtual Switching capabilities of server operating systems to enable maximum flexibility in the management and routing protocols of their networks.

Open vSwitch (OVS) is an example of a virtual switch that allows virtual machines to communicate among themselves and with the outside world. Software-based virtual switches, traditionally residing in the hypervisor, are CPU intensive, affecting system performance and preventing full utilization of available CPU for compute functions.

To address such performance issues, ConnectX-6 offers Mellanox Accelerated Switching and Packet Processing (ASAP²) Direct technology. ASAP² offloads the vSwitch/vRouter by handling the data plane in the NIC hardware while leaving the control plane unmodified. As a result, significantly higher vSwitch/vRouter performance is achieved without the associated CPU load.

The vSwitch/vRouter offload functions supported by ConnectX-5 and ConnectX-6 include encapsulation and decapsulation of overlay network headers, as well as stateless offloads of inner packets, packet header rewrite (enabling NAT functionality), hairpin, and more.

In addition, ConnectX-6 offers intelligent flexible pipeline capabilities, including programmable flexible parser and flexible match-action tables, which enable hardware offloads for future protocols.
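
The split between a hardware data plane and an unmodified control plane can be pictured with a toy match-action table. The sketch below is a conceptual model only, not Mellanox code: the `FlowTable` class, its three-field flow key, and the `slow_path` policy are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical flow key: (src_ip, dst_ip, dst_port). Real tables match many more fields.
FlowKey = Tuple[str, str, int]


@dataclass
class FlowTable:
    """Toy model: installed rules are the 'hardware' fast path, misses go to software."""
    rules: Dict[FlowKey, str] = field(default_factory=dict)
    hw_hits: int = 0
    sw_misses: int = 0

    def slow_path(self, key: FlowKey) -> str:
        # Stand-in for the control plane (for example, the vSwitch) choosing an action.
        return "drop" if key[2] == 23 else "forward"

    def process(self, key: FlowKey) -> str:
        action = self.rules.get(key)
        if action is not None:
            self.hw_hits += 1       # matched an installed rule: no software involvement
            return action
        self.sw_misses += 1         # first packet of a flow: ask the control plane
        action = self.slow_path(key)
        self.rules[key] = action    # install the rule so later packets are offloaded
        return action


table = FlowTable()
flow = ("10.0.0.1", "10.0.0.2", 443)
results = [table.process(flow) for _ in range(3)]
```

In this model only the first packet of a flow reaches software; the remaining packets are handled by the installed rule, which is where the CPU savings would come from.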

Technical specifications

The adapters have the following technical specifications.

PCI Express Interface

- PCIe 4.0 x16 host interface (also supports a PCIe 3.0 host interface)

- Support for PCIe x1, x2, x4, x8, and x16 configurations

- PCIe Atomic

- TLP (Transaction Layer Packet) Processing Hints (TPH)

- PCIe switch Downstream Port Containment (DPC) enablement for PCIe hot-plug

- Advanced Error Reporting (AER)

- Access Control Services (ACS) for peer-to-peer secure communication

- Process Address Space ID (PASID) Address Translation Services (ATS)

- IBM CAPIv2 (Coherent Accelerator Processor Interface)

- Support for MSI/MSI-X mechanisms

Connectivity

- One or two QSFP56 ports

- Supports passive copper cables with ESD protection

- Powered connectors for optical and active cable support

InfiniBand

- Supports interoperability with InfiniBand switches (up to HDR100)

- When used in a PCIe 3.0 slot, total connectivity is up to 100 Gb/s:

  - One port adapter supports a single 100 Gb/s link

  - Two-port adapter supports two connections of 50 Gb/s each or one 100 Gb/s active link and the other a standby link

- When used in a PCIe 4.0 slot, total connectivity is up to 200 Gb/s:

  - One port adapter supports a single 100 Gb/s link

  - Two-port adapter supports two connections of 100 Gb/s each

- HDR100 / EDR / FDR / QDR / DDR / SDR

- IBTA Specification 1.3 compliant

- RDMA, Send/Receive semantics

- Hardware-based congestion control

- Atomic operations

- 16 million I/O channels

- 256 to 4Kbyte MTU, 2Gbyte messages

- 8 virtual lanes + VL15
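
A rough calculation is consistent with the PCIe 3.0 and PCIe 4.0 connectivity limits listed above. The sketch below uses the standard per-lane signaling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and 128b/130b line encoding; it ignores packet and protocol overhead, so usable throughput is somewhat lower. The function name `pcie_link_gbps` is an assumption for illustration.

```python
def pcie_link_gbps(gt_per_s: float, lanes: int = 16) -> float:
    """Raw one-direction link bandwidth in Gb/s after 128b/130b encoding."""
    return gt_per_s * lanes * 128 / 130


gen3_x16 = pcie_link_gbps(8.0)    # PCIe 3.0 x16
gen4_x16 = pcie_link_gbps(16.0)   # PCIe 4.0 x16

print(f"PCIe 3.0 x16: {gen3_x16:.1f} Gb/s")
print(f"PCIe 4.0 x16: {gen4_x16:.1f} Gb/s")
```

A PCIe 3.0 x16 link (about 126 Gb/s raw) has room for 100 Gb/s of network traffic but not 200 Gb/s, whereas a PCIe 4.0 x16 link (about 252 Gb/s raw) can carry two 100 Gb/s ports.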

Ethernet (requires firmware 20.28.1002 or later)

- Supports interoperability with Ethernet switches (up to 100GbE, as 2 lanes of 50Gb/s data rate)

- When used in the PCIe 3.0 slot, total connectivity is 100 Gb/s:

  - One port adapter supports a single 100 Gb/s link

  - Two-port adapter supports two connections of 50 Gb/s each or one 100 Gb/s active link and the other a standby link

- When used in the PCIe 4.0 slot, total connectivity is 200 Gb/s:

  - One port adapter supports a single 100 Gb/s link

  - Two-port adapter supports two connections of 100 Gb/s each

- Supports 100GbE / 50GbE / 40GbE / 25GbE / 10GbE / 1GbE

- Ethernet speed must be set; auto-negotiation is currently not supported (planned for a later firmware update)

- IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet

- IEEE 802.3by, Ethernet Consortium 25, 50 Gigabit Ethernet, supporting all FEC modes

- IEEE 802.3ba 40 Gigabit Ethernet

- IEEE 802.3ae 10 Gigabit Ethernet

- IEEE 802.3az Energy Efficient Ethernet

- IEEE 802.3ap based auto-negotiation and KR startup (planned for a later firmware update)

- IEEE 802.3ad, 802.1AX Link Aggregation

- IEEE 802.1Q, 802.1P VLAN tags and priority

- IEEE 802.1Qau (QCN) – Congestion Notification

- IEEE 802.1Qaz (ETS)

- IEEE 802.1Qbb (PFC)

- IEEE 802.1Qbg

- IEEE 1588v2

- Jumbo frame support (9.6KB)

- IPv4 (RFC 791)

- IPv6 (RFC 2460)
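
As background for the IEEE 802.1Q/802.1p entry in the list above, the following Python sketch builds and parses the 4-byte VLAN tag that carries the VLAN ID and priority. The field layout follows the 802.1Q standard; the helper names `build_vlan_tag` and `parse_vlan_tag` are assumptions for illustration and are not part of any adapter API.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def build_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Pack TPID plus TCI (3-bit priority, 1-bit DEI, 12-bit VLAN ID)."""
    if not 0 <= vid <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= pcp <= 7:
        raise ValueError("priority (PCP) must fit in 3 bits")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> tuple:
    """Return (pcp, dei, vid) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci >> 13, (tci >> 12) & 1, tci & 0xFFF


tag = build_vlan_tag(vid=100, pcp=5)
print(tag.hex())  # 8100a064
```

VLAN tag insertion and stripping offloads mean the adapter adds or removes these four bytes so the host CPU does not have to.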

Enhanced Features

- Hardware-based reliable transport

- Collective operations offloads

- Vector collective operations offloads

- PeerDirect RDMA (GPUDirect) communication acceleration

- 64b/66b encoding

- Enhanced Atomic operations

- Advanced memory mapping support, allowing user mode registration and remapping of memory (UMR)

- Extended Reliable Connected transport (XRC)

- Dynamically Connected transport (DCT)

- On demand paging (ODP)

- MPI Tag Matching

- Rendezvous protocol offload

- Out-of-order RDMA supporting Adaptive Routing

- Burst buffer offload

- In-Network Memory registration-free RDMA memory access

CPU Offloads

- RDMA over Converged Ethernet (RoCE)

- TCP/UDP/IP stateless offload

- LSO, LRO, checksum offload

- RSS (also on encapsulated packet), TSS, HDS, VLAN and MPLS tag insertion/stripping, Receive flow steering

- Data Plane Development Kit (DPDK) for kernel bypass applications

- Open vSwitch (OVS) offload using ASAP²

- Flexible match-action flow tables

- Tunneling encapsulation / de-capsulation

- Intelligent interrupt coalescence

- Header rewrite supporting hardware offload of NAT router

Storage Offloads

- Block-level encryption: XTS-AES 256/512 bit key

- NVMe over Fabric offloads for target machine

- Erasure Coding offload - offloading Reed-Solomon calculations

- T10 DIF - signature handover operation at wire speed, for ingress and egress traffic

- Storage Protocols: SRP, iSER, NFS RDMA, SMB Direct, NVMe-oF
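
For readers unfamiliar with T10 DIF, the sketch below shows in Python what the 8 bytes of protection information attached to each data block contain: a 2-byte guard tag (a CRC-16 over the block using polynomial 0x8BB7), a 2-byte application tag, and a 4-byte reference tag. It is a software illustration of the format only, not the adapter's offload interface; the function names and the 512-byte block size are assumptions.

```python
import struct


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16/T10-DIF: polynomial 0x8BB7, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8BB7) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def build_protection_info(block: bytes, app_tag: int, ref_tag: int) -> bytes:
    """Pack guard tag, application tag, and reference tag (big-endian, 8 bytes)."""
    return struct.pack(">HHI", crc16_t10dif(block), app_tag, ref_tag)


# Hypothetical 512-byte block; the reference tag here stands in for a block address.
block = bytes(512)
pi = build_protection_info(block, app_tag=0, ref_tag=7)
```

Computing this CRC for every block in software costs CPU cycles on both the write and read paths, which is the work the offload is intended to remove.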

Overlay Networks

- RoCE over overlay networks

- Stateless offloads for overlay network tunneling protocols

- Hardware offload of encapsulation and decapsulation of VXLAN, NVGRE, and GENEVE overlay networks
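
As an example of what encapsulation adds to each packet, the following Python sketch builds and parses the 8-byte VXLAN header defined in RFC 7348. It illustrates the header format only and does not use any adapter API; the helper names `build_vxlan_header` and `parse_vni` are assumptions.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Pack flags (8 bits), reserved (24), VNI (24), reserved (8)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the 24-bit VXLAN Network Identifier from a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


# Outer Ethernet (14) + outer IPv4 (20) + UDP (8) + VXLAN (8) bytes per packet.
ENCAP_OVERHEAD_IPV4 = 14 + 20 + 8 + 8

header = build_vxlan_header(5000)
print(header.hex())  # 0800000000138800
```

With an IPv4 outer header and no outer VLAN tag, encapsulation adds 50 bytes to every frame, all of which must be written on transmit and removed on receive.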

Hardware-Based I/O Virtualization

- Single Root IOV

- Address translation and protection

- VMware NetQueue support

- SR-IOV: Up to 512 Virtual Functions

- SR-IOV: Up to 16 Physical Functions per host

- Virtualization hierarchies (network partitioning, NPAR)

- Virtualizing Physical Functions on a physical port

- SR-IOV on every Physical Function

- Configurable and user-programmable QoS

- Guaranteed QoS for VMs

HPC Software Libraries

- HPC-X, OpenMPI, MVAPICH, MPICH, OpenSHMEM, PGAS and varied commercial packages

Management and Control

- NC-SI, MCTP over SMBus and MCTP over PCIe - BMC interface

- PLDM for Monitor and Control DSP0248

- PLDM for Firmware Update DSP0267

- SDN management interface for managing the eSwitch

- I2C interface for device control and configuration

- General Purpose I/O pins

- SPI interface to Flash

- JTAG IEEE 1149.1 and IEEE 1149.6

Remote Boot

- Remote boot over InfiniBand

- Remote boot over Ethernet

- Remote boot over iSCSI

- Unified Extensible Firmware Interface (UEFI)

- Preboot Execution Environment (PXE)

NVIDIA Unified Fabric Manager

NVIDIA Unified Fabric Manager (UFM) is InfiniBand networking management software that combines enhanced, real-time network telemetry with fabric visibility and control to support scale-out InfiniBand data centers.

The two offerings available from Lenovo are as follows:

- UFM Telemetry for Real-Time Monitoring

The UFM Telemetry platform provides network validation tools to monitor network performance and conditions, capturing and streaming rich real-time network telemetry information, application workload usage, and system configuration to an on-premises or cloud-based database for further analysis.

- UFM Enterprise for Fabric Visibility and Control

The UFM Enterprise platform combines the benefits of UFM Telemetry with enhanced network monitoring and management. It performs automated network discovery and provisioning, traffic monitoring, and congestion discovery. It also enables job schedule provisioning and integrates with industry-leading job schedulers and cloud and cluster managers, including Slurm and Platform Load Sharing Facility (LSF).

The following table lists the subscription licenses available from Lenovo.

For more information, see the following web page:

https://www.nvidia.com/en-us/networking/infiniband/ufm/

Server support

The following servers offer a PCIe 4.0 host interface. All other supported servers have a PCIe 3.0 host interface.

- ThinkSystem SR635

- ThinkSystem SR655

- ThinkSystem SR645

- ThinkSystem SR665

The following tables list the ThinkSystem servers that are compatible.

Operating system support

The following table indicates which operating systems can be preloaded in the Lenovo factory for CTO server orders where this adapter is included in the server configuration.

Tip: If an OS is listed as "No support" above, but it is listed in one of the support tables below, that means the OS is supported by the adapter, just not available to be preloaded in the Lenovo factory in CTO orders.

The adapters support the operating systems listed in the following tables:

- ThinkSystem Mellanox ConnectX-6 HDR100/100GbE QSFP56 1-port PCIe VPI Adapter, 4C57A14177

- ThinkSystem Mellanox ConnectX-6 HDR100/100GbE QSFP56 2-port PCIe VPI Adapter, 4C57A14178

Tip: These tables are automatically generated based on data from Lenovo ServerProven.

1 The iboot hard drive can't be identified

2 An out-of-box driver is needed to support InfiniBand features

3 The iboot hard drive can't be identified

4 For limitations, refer to Support Tip 104278

5 IONG-11838 tips #TT1781

6 Ubuntu doesn't support software iSCSI

1 An out-of-box driver is needed to support InfiniBand features

2 The OS is not supported with EPYC 7003 processors.

3 The iboot hard drive can't be identified

4 ISG does not sell or preload this OS; support is for compatibility and certification only.

1 The iboot hard drive can't be identified

2 An out-of-box driver is needed to support InfiniBand features

3 The iboot hard drive can't be identified

4 For limitations, refer to Support Tip 104278

5 IONG-11838 tips #TT1781

6 The OS is not supported with EPYC 7003 processors.

1 An out-of-box driver is needed to support InfiniBand features

2 The OS is not supported with EPYC 7003 processors.

3 The iboot hard drive can't be identified

4 ISG does not sell or preload this OS; support is for compatibility and certification only.

5 The iboot hard drive can't be identified

Regulatory approvals

The adapters have the following regulatory approvals:

- Safety: CB / cTUVus / CE

- EMC: CE / FCC / VCCI / ICES / RCM / KC

- RoHS: RoHS Compliant

Operating environment

The adapters have the following operating characteristics:

- Typical power consumption (passive cables): 15.6W

- Maximum power available through QSFP56 port: 5W

- Temperature

  - Operational: 0°C to 55°C

  - Non-operational: -40°C to 70°C

- Humidity: 90% relative humidity

Warranty

One year limited warranty. When installed in a Lenovo server, the adapter assumes the server’s base warranty and any warranty upgrades.

Related publications

For more information, refer to these documents:

- Networking Options for ThinkSystem Servers:

  https://lenovopress.com/lp0765-networking-options-for-thinksystem-servers

- ServerProven compatibility:

  http://www.lenovo.com/us/en/serverproven

- Mellanox InfiniBand product page:

  https://www.nvidia.com/en-us/networking/infiniband-adapters/

- ConnectX-6 VPI user manual:

  https://docs.nvidia.com/networking/display/ConnectX6VPI

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ServerProven®

ThinkSystem®

The following terms are trademarks of other companies:

AMD is a trademark of Advanced Micro Devices, Inc.

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

IBM® and LSF® are trademarks of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.

Full Change History

Changes in the August 1, 2024 update:

- Added information about factory preload support for operating systems - Operating system support section

Changes in the September 16, 2023 update:

- Withdrawn cables are now hidden; click Show Withdrawn Products to view them - Supported transceivers and cables section

Changes in the July 4, 2023 update:

- Added the following transceiver - Supported transceivers and cables section:

  - Lenovo 100GBase-SR4 QSFP28 Transceiver, 4TC7A86257

- Added tables listing the transceiver and cable support for the Mellanox QSA 100G to 25G Cable Adapter (4G17A10853) - 25G Cable Adapter transceiver and cable support section

Changes in the January 16, 2022 update:

- 100Gb transceiver 7G17A03539 also supports 40Gb when installed in a Mellanox adapter. - Supported transceivers and cables section

Changes in the November 2, 2021 update:

- Added additional feature codes for the adapters - Part number information section

Changes in the August 24, 2021 update:

- New low-latency optical cables supported - Supported transceivers and cables section:

  - 3m Mellanox HDR IB Optical QSFP56 Low Latency Cable, 4Z57A72553

  - 5m Mellanox HDR IB Optical QSFP56 Low Latency Cable, 4Z57A72554

  - 10m Mellanox HDR IB Optical QSFP56 Low Latency Cable, 4Z57A72555

  - 15m Mellanox HDR IB Optical QSFP56 Low Latency Cable, 4Z57A72556

  - 20m Mellanox HDR IB Optical QSFP56 Low Latency Cable, 4Z57A72557

  - 30m Mellanox HDR IB Optical QSFP56 Low Latency Cable, 4Z57A72558

  - 3m Mellanox HDR IB to 2x HDR100 Splitter Optical QSFP56 Low Latency Cable, 4Z57A72561

  - 5m Mellanox HDR IB to 2x HDR100 Splitter Optical QSFP56 Low Latency Cable, 4Z57A72562

  - 10m Mellanox HDR IB to 2x HDR100 Splitter Optical QSFP56 Low Latency Cable, 4Z57A72563

  - 15m Mellanox HDR IB to 2x HDR100 Splitter Optical QSFP56 Low Latency Cable, 4Z57A72564

  - 20m Mellanox HDR IB to 2x HDR100 Splitter Optical QSFP56 Low Latency Cable, 4Z57A72565

  - 30m Mellanox HDR IB to 2x HDR100 Splitter Optical QSFP56 Low Latency Cable, 4Z57A72566

Changes in the June 8, 2021 update:

- New cables supported - Supported transceivers and cables section:

  - 3m Mellanox HDR IB Active Copper QSFP56 Cable, 4X97A12610

  - 4m Mellanox HDR IB Active Copper QSFP56 Cable, 4X97A12611

Changes in the February 15, 2021 update:

- The server support tables are now automatically updated - Server support section

Changes in the January 28, 2021 update:

- Added 40Gb QSFP Active Optical Cables - Supported cables section

Changes in the January 22, 2021 update:

- The adapters have been renamed to VPI Adapters - Part number information section

- Ethernet functions require firmware version 20.28.1002 or later

Changes in the December 17, 2020 update:

- Support for QSFP56 200Gb Passive DAC Cables - Supported cables section:

  - Lenovo 1m 200G QSFP56 DAC Cable, 4X97A11113

  - Lenovo 3m 200G QSFP56 DAC Cable, 4X97A12613

Changes in the October 13, 2020 update:

- Added the SR850 V2 and SR860 V2 servers - Server support section

Changes in the May 12, 2020 update:

- Updated server support table, plus a footnote about CTO orders of the 1-port adapter - Server support section

Changes in the May 5, 2020 update:

- Added SR645 and SR665 to the server support table - Server support section

Changes in the January 7, 2020 update:

- Added SR850P to the server support table - Server support section

Changes in the December 19, 2019 update:

- The adapters are supported in the SR655 and SR635 - Server support section

Changes in the November 8, 2019 update:

- Clarified that the adapters have a PCIe 4.0 host interface - Technical specifications section

- The adapters now support Ethernet connectivity - Technical specifications section

- Updated the server support table - SR655 and SR635 support planned for December

Changes in the October 14, 2019 update:

- The SR670 supports the HDR100 adapters - Server support table

First published: 16 July 2019
