Author

Published

6 Dec 2023

Form Number

LP1862

PDF size

14 pages, 738 KB

Abstract

The ThinkSystem AMD Instinct MI210 Accelerator is a compute workhorse optimized for accelerating single-precision and double-precision workloads in HPC-class systems. The accelerator can also be deployed for training large-scale machine intelligence workloads.

This product guide provides essential presales information to understand the MI210 Accelerator and its key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the MI210 Accelerator and consider its use in IT solutions.

Introduction

The ThinkSystem AMD Instinct MI210 Accelerator is a compute workhorse optimized for accelerating single-precision and double-precision workloads in HPC-class systems. The accelerator can also be deployed for training large-scale machine intelligence workloads.

The accelerator's powerful compute engine, new matrix math FP64 cores, and advanced memory architecture, combined with AMD’s ROCm open software platform and ecosystem, provide a flexible heterogeneous compute solution that is designed to help datacenter designers meet the challenges of a new era of compute.

Did you know?

The ThinkSystem SR670 V2 supports eight MI210 Accelerators, and these GPUs can be connected using two Infinity Fabric Link Bridge Cards, each connecting one set of four GPUs. Each accelerator has three Infinity Fabric links with up to 300 GB/s of Peer-to-Peer (P2P) bandwidth performance. The AMD Infinity Architecture enables platform designs with direct-connect GPU hives with high-speed P2P connectivity and delivers up to 1.2 TB/s of total theoretical GPU bandwidth.
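The figures in this paragraph can be related with a little arithmetic. The sketch below is illustrative only: it assumes that the 1.2 TB/s total is the sum of the 300 GB/s per-GPU P2P figure across one four-GPU hive, and that the 300 GB/s is split evenly across the three links. Neither assumption is stated in this guide, and the function names are hypothetical.

```python
# Constants taken from the guide text.
GPUS_PER_BRIDGE_CARD = 4      # each bridge card connects four MI210s
LINKS_PER_GPU = 3             # Infinity Fabric links per accelerator
P2P_GB_PER_S_PER_GPU = 300    # peak theoretical P2P bandwidth per GPU


def bridge_cards_needed(gpu_count: int) -> int:
    """Bridge cards required so that every GPU sits in a four-GPU hive."""
    if gpu_count <= 0 or gpu_count % GPUS_PER_BRIDGE_CARD != 0:
        raise ValueError("GPU count must be a positive multiple of four")
    return gpu_count // GPUS_PER_BRIDGE_CARD


def per_link_gb_per_s() -> float:
    """Assumption: the per-GPU figure is shared equally by the three links."""
    return P2P_GB_PER_S_PER_GPU / LINKS_PER_GPU


def hive_total_tb_per_s() -> float:
    """Assumption: the hive total is the per-GPU figure summed over four GPUs."""
    return GPUS_PER_BRIDGE_CARD * P2P_GB_PER_S_PER_GPU / 1000


if __name__ == "__main__":
    print("Bridge cards for 8 GPUs:", bridge_cards_needed(8))
    print("Per-link bandwidth (GB/s):", per_link_gb_per_s())
    print("Hive total (TB/s):", hive_total_tb_per_s())
```

Under these assumptions, an eight-GPU SR670 V2 configuration needs two bridge cards, and each hive of four GPUs accounts for the quoted 1.2 TB/s.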

Part number information

The following table shows the part numbers for the MI210 Accelerator.

The PCIe option part number includes the following:

- One MI210 Accelerator with full-height (3U) adapter bracket attached

- Documentation

Each Infinity Fabric Link Bridge Card connects four MI210 Accelerators.

Features

The AMD Instinct MI210 accelerator offers the following features:

- Exascale-Class Technologies for the Data Center

The AMD Instinct MI210 accelerator extends AMD industry performance leadership in accelerated compute for double precision (FP64) on PCIe form factors for mainstream HPC and AI workloads in the data center. Built on AMD Exascale-class technologies with the 2nd Gen AMD CDNA architecture, the MI210 enables scientists and researchers to tackle our most pressing challenges from climate change to vaccine research. MI210 accelerators, combined with the AMD ROCm 5 software ecosystem, allow innovators to tap the power of HPC and AI data center PCIe GPUs to accelerate their time to science and discovery.

- Purpose-built Accelerators for HPC & AI Workloads

Powered by the 2nd Gen AMD CDNA architecture, AMD Instinct MI210 accelerator delivers HPC performance leadership in FP64 for a broad set of HPC & AI applications. The MI210 accelerator is built to accelerate deep learning training, providing an expanded range of mixed-precision capabilities based on the AMD Matrix Core Technology, and delivers an outstanding 181 teraflops peak theoretical FP16 and BF16 performance to bring users a powerful platform to fuel the convergence of HPC and AI.

- Innovations Delivering Performance Leadership

AMD innovations in architecture, packaging and integration are pushing the boundaries of computing by unifying the most important processors in the data center: the CPU and the GPU accelerator. With our innovative double-precision Matrix Core capabilities along with the 3rd Gen AMD Infinity Architecture, AMD is delivering performance, efficiency and overall system throughput for HPC and AI using AMD EPYC CPUs and AMD Instinct MI210 accelerators.

- Ecosystem without Borders

AMD ROCm is an open software platform allowing researchers to tap the power of AMD Instinct accelerators to drive scientific discoveries. The ROCm platform is built on the foundation of open portability, supporting environments across multiple accelerator vendors and architectures. With ROCm 5, AMD extends its platform powering top HPC and AI applications with AMD Instinct MI200 series accelerators, increasing accessibility of ROCm for developers and delivering outstanding performance across key workloads.

- 2nd Generation AMD CDNA Architecture

The AMD Instinct MI210 accelerator brings commercial HPC & AI customers the compute engine selected for the first U.S. Exascale supercomputer. Powered by the 2nd Gen AMD CDNA architecture, the MI210 accelerator delivers outstanding performance for HPC and AI. The MI210 PCIe GPU delivers superior double and single precision performance for HPC workloads with up to 22.6 TFLOPS peak FP64|FP32 performance, enabling scientists and researchers around the globe to process HPC parallel codes more efficiently across several industries.

AMD’s Matrix Core technology delivers a broad range of mixed precision operations bringing you the ability to work with large models and enhance memory-bound operation performance for whatever combination of AI and machine learning workloads you need to deploy. The MI210 offers optimized BF16, INT4, INT8, FP16, FP32, and FP32 Matrix capabilities bringing you supercharged compute performance to meet all your AI system requirements. The AMD Instinct MI210 accelerator handles large data efficiently for training and delivers 181 teraflops of peak FP16 and bfloat16 floating-point performance for deep learning training.

- AMD Infinity Fabric Link Technology

AMD Instinct MI210 GPUs provide advanced I/O capabilities in standard off-the-shelf servers with our AMD Infinity Fabric technology and PCIe Gen4 support. The MI210 GPU delivers 64 GB/s CPU to GPU bandwidth without the need for PCIe switches, and up to 300 GB/s of Peer-to-Peer (P2P) bandwidth performance through three Infinity Fabric links. The AMD Infinity Architecture enables platform designs with dual and quad, direct-connect, GPU hives with high-speed P2P connectivity and delivers up to 1.2 TB/s of total theoretical GPU bandwidth within a server design. Infinity Fabric helps unlock the promise of accelerated computing, enabling a quick and simple onramp for CPU codes to accelerated platforms.

- Ultra-Fast HBM2e Memory

AMD Instinct MI210 accelerators provide up to 64 GB of high-bandwidth HBM2e memory with ECC support at a clock rate of 1.6 GHz and deliver an ultra-high 1.6 TB/s of memory bandwidth to help support your largest data sets and eliminate bottlenecks moving data in and out of memory. Combine this performance with the MI210’s advanced Infinity Fabric I/O capabilities and you can push workloads closer to their full potential.
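The CPU-to-GPU and memory bandwidth figures quoted in the feature list can be roughly reproduced from interface parameters. The following is a minimal sketch, not a vendor formula. It assumes a PCIe Gen4 x16 link (16 GT/s per lane, 128b/130b encoding), a 4096-bit HBM2e memory interface, and two transfers per memory clock. None of these parameters is stated in this guide, so treat them as assumptions.

```python
# PCIe parameters: assumptions, not stated in this guide.
PCIE_GEN4_GT_PER_S = 16        # giga-transfers per second, per lane
PCIE_LANES = 16                # assumed x16 link
PCIE_ENCODING = 128 / 130      # 128b/130b line encoding efficiency

# HBM2e parameters: the clock is from the guide, the rest are assumptions.
HBM_CLOCK_GHZ = 1.6            # memory clock quoted in the guide
HBM_TRANSFERS_PER_CLOCK = 2    # assumed double data rate
HBM_BUS_WIDTH_BITS = 4096      # assumed memory interface width


def pcie_bidirectional_gb_per_s(include_encoding: bool = False) -> float:
    """Peak PCIe bandwidth in GB/s, summed over both directions."""
    per_direction = PCIE_GEN4_GT_PER_S * PCIE_LANES / 8  # bits to bytes
    if include_encoding:
        per_direction *= PCIE_ENCODING
    return 2 * per_direction


def hbm_tb_per_s() -> float:
    """Peak memory bandwidth in TB/s: clock x transfers x bus width."""
    gb_per_s = HBM_CLOCK_GHZ * HBM_TRANSFERS_PER_CLOCK * HBM_BUS_WIDTH_BITS / 8
    return gb_per_s / 1000


if __name__ == "__main__":
    print("PCIe raw (GB/s):", pcie_bidirectional_gb_per_s())
    print("PCIe after encoding (GB/s):", pcie_bidirectional_gb_per_s(True))
    print("HBM2e (TB/s):", hbm_tb_per_s())
```

With these inputs, the raw PCIe figure matches the quoted 64 GB/s when both directions are counted (encoding overhead brings it to roughly 63 GB/s), and the memory calculation gives about 1.64 TB/s, in line with the quoted 1.6 TB/s.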

Technical specifications

The following table lists the MI210 Accelerator specifications.

Server support

The following tables list the ThinkSystem servers that are compatible.

- Supported only with EPYC 7003 "Milan" processors. Not supported with EPYC 7002 "Rome" processors

Operating system support

The following table lists the supported operating systems:

Tip: These tables are automatically generated based on data from Lenovo ServerProven.

1 HW is not supported with EPYC 7002 processors.

2 Ubuntu 22.04.3 LTS/Ubuntu 22.04.4 LTS

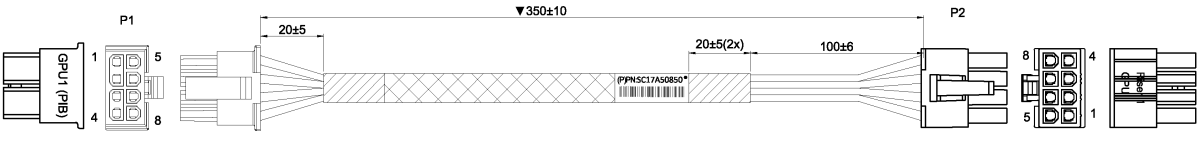

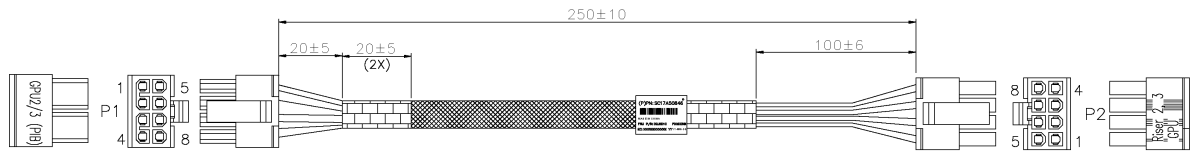

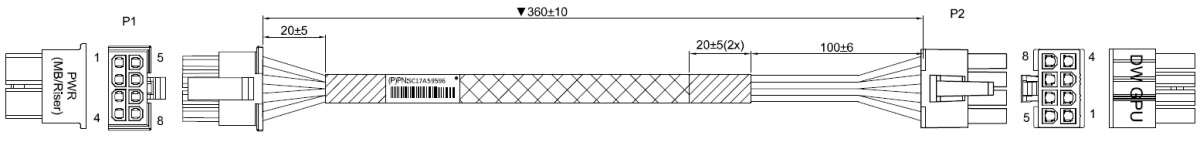

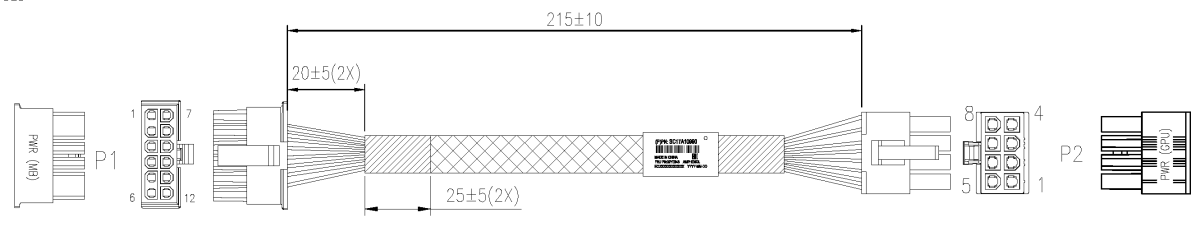

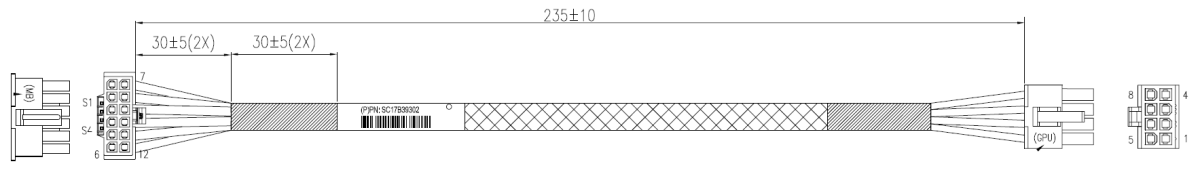

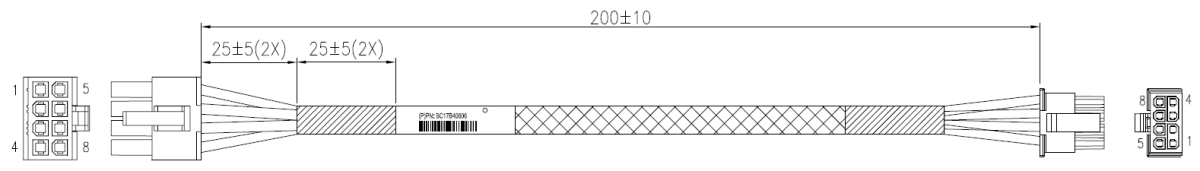

Auxiliary power cables

The MI210 Accelerator option part number does not ship with auxiliary power cables.

Regulatory approvals

The MI210 Accelerator has the following regulatory approvals:

- Electromagnetic Compliance

- Australia and New Zealand: CISPR 32: 2015 +COR1: 2016, Class A

- Canada ICES-003, Issue 7, Class A

- European Countries: EN 55032: 2015 + A11: 2020 Class B, EN 55024: 2010, EN 55035: 2017

- Japan VCCI-CISPR32:2016, VCCI 32-1: 2016 Class A

- Korea KN32, Class A, KN35, RRA Public Notification 2019-32

- Taiwan CNS 13438: 2016, C6357, Class A

- USA FCC 47 CFR Part 15, Subpart B, Class A

- Product Safety Compliance

- UL 62368-1, 2nd Edition, 2014-12

- CSA-C22.2 No. 62368-1, 2nd Edition, 2014-12

- EN 62368-1, 2nd Edition, 2014 + A1: 2017

- IEC 62368-1, 2nd Edition, 2014

- RoHS Compliance: EU RoHS Directive (EU) 2015/863 Amendment to EU RoHS 2 (Directive 2011/65/EU)

- REACH Compliance

- Halogen Free: IEC 61249-2-21:2003 standard

Operating environment

The MI210 Accelerator has the following operating characteristics:

- Ambient temperature

- Operational: 5°C to 45°C

- Storage: -40°C to 70°C

- Relative humidity:

- Operational: 8-90%

- Storage: 0-95%

Warranty

One year limited warranty. When installed in a Lenovo server, the MI210 Accelerator assumes the server’s base warranty and any warranty upgrades.

Related publications

For more information, refer to these documents:

- ThinkSystem and ThinkAgile GPU Summary:

https://lenovopress.lenovo.com/lp0768-thinksystem-thinkagile-gpu-summary

- ServerProven compatibility:

https://serverproven.lenovo.com/

- AMD MI210 product page:

https://www.amd.com/en/products/accelerators/instinct/mi200/mi210.html

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ServerProven®

ThinkAgile®

ThinkSystem®

The following terms are trademarks of other companies:

AMD, AMD CDNA™, AMD EPYC™, AMD Instinct™, AMD ROCm™, and Infinity Fabric™ are trademarks of Advanced Micro Devices, Inc.

Intel®, the Intel logo and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Other company, product, or service names may be trademarks or service marks of others.
