Published: 10 Jul 2025

Form Number: LP2255

PDF size: 5 pages, 162 KB

Abstract

As organizations push AI from experimentation into business-critical production, infrastructure limitations are becoming a major roadblock. This blog introduces NVIDIA Run:ai, a workload and GPU orchestration platform now validated on Lenovo ThinkSystem AI servers. We’ll explore how this joint solution addresses key infrastructure challenges—such as resource fragmentation, low GPU utilization, and orchestration inefficiencies—helping IT teams and AI practitioners streamline operations, maximize performance, and accelerate value realization from AI.

Introduction

AI is reshaping industries—improving decision-making in finance, accelerating research in healthcare, and enhancing customer experience in retail. However, as enterprises move from small-scale pilots to real-world AI deployments, they often face critical bottlenecks in infrastructure management:

- AI practitioners experience inconsistent access to compute resources.

- IT teams struggle to allocate GPU workloads efficiently across diverse projects.

- Leadership lacks visibility into resource usage and cost implications.

Traditional infrastructure—built for static workloads—wasn’t designed to handle the dynamic, GPU-intensive demands of modern AI pipelines.

The result? Slow AI adoption, inflated costs, and missed business opportunities.

Overview: Introducing NVIDIA Run:ai on Lenovo Infrastructure

NVIDIA Run:ai is a Kubernetes-native platform that abstracts, virtualizes, and orchestrates GPU resources across AI workloads. It allows teams to run AI workloads as if they had an elastic GPU cloud, all while maintaining control, compliance, and visibility.
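To make the "elastic GPU cloud" idea concrete, here is a minimal sketch of one common sharing policy. It assumes each project has a guaranteed GPU quota and may borrow GPUs that other projects leave idle. The `Project` fields and the `allocate` function are hypothetical. This is an illustration of the concept, not Run:ai's actual scheduler or API.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    quota: int   # guaranteed GPUs for this project (hypothetical field)
    demand: int  # GPUs the project's pending workloads are asking for


def allocate(projects: list[Project], total_gpus: int) -> dict[str, int]:
    """Give each project its guaranteed share, then lend idle GPUs round-robin."""
    if sum(p.quota for p in projects) > total_gpus:
        raise ValueError("guaranteed quotas exceed cluster capacity")

    # Pass 1: every project receives up to its guaranteed quota.
    alloc = {p.name: min(p.demand, p.quota) for p in projects}
    free = total_gpus - sum(alloc.values())

    # Pass 2: hand out remaining idle GPUs one at a time to projects that
    # still have unmet demand, so no single project grabs all spare capacity.
    hungry = [p for p in projects if p.demand > alloc[p.name]]
    while free > 0 and hungry:
        for p in list(hungry):
            if free == 0:
                break
            alloc[p.name] += 1
            free -= 1
            if alloc[p.name] == p.demand:
                hungry.remove(p)
    return alloc


if __name__ == "__main__":
    projects = [
        Project("team-a", quota=4, demand=6),
        Project("team-b", quota=4, demand=1),
    ]
    # team-b leaves 3 of its guaranteed GPUs idle; team-a borrows 2 of them.
    print(allocate(projects, total_gpus=8))  # {'team-a': 6, 'team-b': 1}
```

The sketch deliberately leaves out reclaiming borrowed GPUs. A production scheduler would also need to return lent capacity when the owning project's demand rises, typically by preempting the borrower's workloads.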

Lenovo Hybrid AI 285 Platform

NVIDIA Run:ai is a great companion for the Lenovo Hybrid AI 285, a validated design from Lenovo and NVIDIA built to deliver best-in-class performance for AI applications.

NVIDIA Run:ai is also compatible with:

- Lenovo ThinkSystem SR780a V3, SR680a V3, SR685a V3 servers

- 200 Gbps Ethernet and InfiniBand networking

- NVIDIA HGX H200 GPU platforms

This enables organizations to deploy Run:ai with confidence on scalable, high-performance Lenovo hardware—ensuring both operational continuity and enterprise-grade support.

For more information, see the Lenovo Hybrid AI 285 Platform Guide.

Role-Based Benefits

The benefits of Run:ai include the following:

- For IT Managers: Centralized control, capacity planning, policy enforcement, audit-ready compliance

- For AI Practitioners: Reliable scheduling, elastic GPU access, lifecycle automation

- For Platform Admins: Efficient GPU allocation, user access control, performance monitoring
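Efficient GPU allocation often comes down to avoiding fragmentation: small jobs that each hold a whole GPU leave most of it idle. The sketch below shows the effect with first-fit-decreasing bin packing of fractional GPU requests. It is a generic packing heuristic chosen for illustration, not Run:ai's placement algorithm, and the `pack_fractions` function and job fractions are hypothetical.

```python
def pack_fractions(requests: list[float]) -> list[list[float]]:
    """First-fit decreasing: put each request on the first GPU with enough room left."""
    gpus: list[list[float]] = []  # each inner list = fractions sharing one physical GPU
    for r in sorted(requests, reverse=True):
        if not 0 < r <= 1:
            raise ValueError(f"fraction must be in (0, 1], got {r}")
        for gpu in gpus:
            # Small tolerance so rounding in float sums doesn't reject an exact fit.
            if sum(gpu) + r <= 1.0 + 1e-9:
                gpu.append(r)
                break
        else:
            gpus.append([r])  # no existing GPU has room: open a new one
    return gpus


jobs = [0.5, 0.25, 0.25, 0.5, 0.75]  # hypothetical per-job GPU fractions
packed = pack_fractions(jobs)
print(f"{len(jobs)} jobs on {len(packed)} GPUs: {packed}")
```

Running it prints `5 jobs on 3 GPUs: [[0.75, 0.25], [0.5, 0.5], [0.25]]`, so the same five jobs need three GPUs instead of five when each is given a whole device. Sorting largest-first is what keeps the big requests from being stranded after the small ones have scattered across devices.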

Technical Architecture

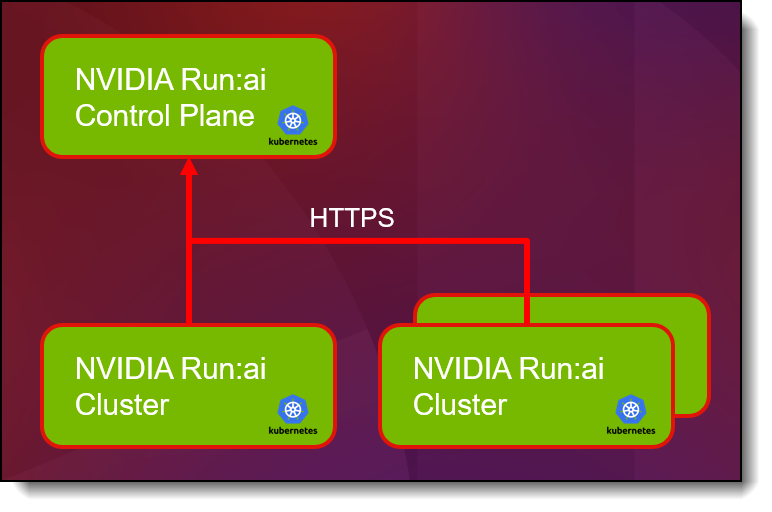

NVIDIA Run:ai consists of:

- A Control Plane for resource governance, monitoring, and workload submission

- Multiple Run:ai Clusters for distributed scheduling and orchestration

Both components are deployed on Kubernetes and communicate over secure HTTPS, enabling end-to-end visibility and control across AI operations.
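As a rough illustration of the monitoring role of the Control Plane, the sketch below rolls per-cluster GPU counts up into one fleet-wide view. The `ClusterStatus` record, its fields, and the cluster names are hypothetical stand-ins for whatever each cluster reports. They are not Run:ai's data model or API.

```python
from dataclasses import dataclass


@dataclass
class ClusterStatus:
    name: str
    total_gpus: int
    allocated_gpus: float  # may be fractional when GPUs are shared


def fleet_summary(clusters: list[ClusterStatus]) -> dict[str, float]:
    """Roll per-cluster GPU counts up into one fleet-wide view."""
    total = sum(c.total_gpus for c in clusters)
    allocated = sum(c.allocated_gpus for c in clusters)
    return {
        "total_gpus": total,
        "allocated_gpus": allocated,
        "idle_gpus": total - allocated,
        # Guard against division by zero for an empty fleet.
        "allocation_pct": 100.0 * allocated / total if total else 0.0,
    }


clusters = [
    ClusterStatus("cluster-a", total_gpus=8, allocated_gpus=6),  # hypothetical
    ClusterStatus("cluster-b", total_gpus=8, allocated_gpus=2),  # hypothetical
]
print(fleet_summary(clusters))
```

This prints `{'total_gpus': 16, 'allocated_gpus': 8, 'idle_gpus': 8, 'allocation_pct': 50.0}`. A single aggregated number like this is what lets leadership see that half the fleet is idle even when one cluster looks busy.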

Conclusion

AI success depends not just on algorithms, but on infrastructure. Without modern orchestration tools, organizations face underutilized GPUs, project delays, and rising costs.

NVIDIA Run:ai on Lenovo AI Infrastructure solves these challenges by:

- Delivering dynamic, efficient GPU orchestration

- Supporting end-to-end AI lifecycle management

- Unifying infrastructure into a single, scalable platform

For IT Managers and AI teams ready to move AI from pilot to production, this solution represents a critical leap forward in operationalizing AI at scale.

For more information, see the following resources:

Authors

Carlos Huescas is the Worldwide Product Manager for NVIDIA software at Lenovo. He specializes in High Performance Computing and AI solutions. He has more than 15 years of experience as an IT architect and in product management positions across several high-tech companies.

Sandeep Brahmarouthu is the Head of Partnerships at NVIDIA for the Run:ai product. With over 15 years of experience in sales and business development, he leads the strategy and execution of identifying and sourcing new business opportunities, acquiring new customers and partners, and negotiating complex enterprise deals in the AI and Data space.

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ThinkSystem®

Other company, product, or service names may be trademarks or service marks of others.
