Updated: 11 Nov 2025

Form Number: LP2163

PDF size: 15 pages, 502 KB

Abstract

The ThinkSystem NVIDIA ConnectX-8 8180 800Gbs OSFP PCIe Gen6 x16 Adapter is a high-performance adapter that supports one port of 800Gb XDR InfiniBand or two ports of 400 Gb Ethernet, via a single-cage OSFP interface, providing best-in-class connectivity for AI computing.

This product guide provides essential presales information to understand the ConnectX-8 8180 adapter and its key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the ConnectX-8 8180 adapter and consider its use in IT solutions.

Change History

Changes in the November 11, 2025 update:

- Added an additional transceiver and cables for the water-cooled ConnectX-8 8180 adapter - Supported transceivers and cables section

Introduction

The ThinkSystem NVIDIA ConnectX-8 8180 800Gbs OSFP PCIe Gen6 x16 Adapter is a high-performance SuperNIC network adapter with a single-cage OSFP interface. It supports one port of 800Gb XDR InfiniBand or two ports of 400 Gb Ethernet, providing best-in-class connectivity for AI computing.

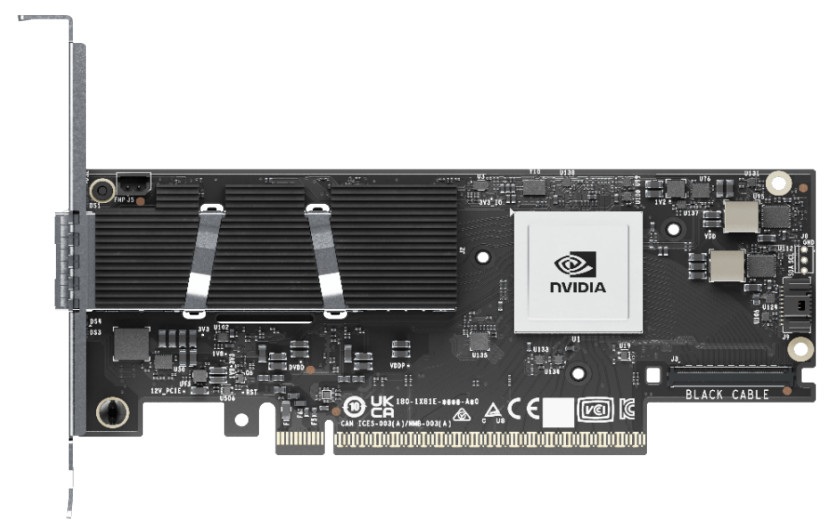

The following figure shows the ThinkSystem NVIDIA ConnectX-8 8180 800Gbs OSFP PCIe Gen6 x16 Adapter (shown with the full-adapter gold case and internal heatsink removed)

Figure 1. ThinkSystem NVIDIA ConnectX-8 8180 800Gbs OSFP PCIe Gen6 x16 Adapter (full-adapter gold case and heatsink removed)

Did you know?

The ConnectX-8 8180 adapter includes support for NVIDIA In-Network Computing acceleration engines to deliver the performance and robust feature set needed to power trillion-parameter-scale AI factories and scientific computing workloads.

Part number information

The following table shows the ordering information.

The option part number includes the following:

- One NVIDIA adapter with full-height (3U) adapter bracket attached

- Low-profile (2U) adapter bracket

- Documentation

In PCIe Gen5 systems, the ConnectX-8 8180 adapter requires an Auxiliary cable that plugs into a second PCIe x16 connection. The adapter's x16 host interface plus the x16 connection of the Auxiliary cable form a PCIe 5.0 x32 connection, which is needed for 800 Gb network connectivity. Ordering information for the Auxiliary cable is listed in the above table.
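The need for the Auxiliary cable follows from simple bandwidth arithmetic. The Python sketch below estimates per-direction PCIe bandwidth as transfer rate per lane x lane count x line-encoding efficiency. The per-generation figures (32 GT/s with 128b/130b encoding for Gen5; 64 GT/s for Gen6, with FLIT-mode overhead ignored) are standard PCIe values supplied here as assumptions rather than taken from this guide, and packet-level protocol overhead is ignored, so real throughput will be somewhat lower.

```python
# Approximate per-direction PCIe bandwidth, to show why a Gen5 x16 link
# alone cannot carry 800 Gb/s of network traffic but a Gen5 x32 link can.
# Assumptions: Gen5 = 32 GT/s per lane with 128b/130b encoding;
# Gen6 = 64 GT/s per lane with FLIT-mode overhead ignored (upper bound).
# TLP/DLLP protocol overhead is ignored for both generations.

GEN_PARAMS = {
    # generation: (transfer rate in GT/s per lane, encoding efficiency)
    5: (32.0, 128 / 130),
    6: (64.0, 1.0),
}

NETWORK_GBPS = 800.0  # one 800Gb XDR port, or 2 x 400GbE


def pcie_gbps(gen: int, lanes: int) -> float:
    """Return approximate usable Gb/s in one direction for a PCIe link."""
    rate, efficiency = GEN_PARAMS[gen]
    return rate * lanes * efficiency


if __name__ == "__main__":
    for gen, lanes in [(5, 16), (5, 32), (6, 16)]:
        bw = pcie_gbps(gen, lanes)
        verdict = "enough" if bw >= NETWORK_GBPS else "not enough"
        print(f"PCIe Gen{gen} x{lanes}: ~{bw:.0f} Gb/s -> {verdict} for 800 Gb/s")
```

Under these assumptions, a Gen5 x16 link tops out at roughly 504 Gb/s per direction, well short of 800 Gb/s, while Gen5 x32 (about 1008 Gb/s) and Gen6 x16 (1024 Gb/s raw) both leave headroom.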

Supported transceivers and cables

The ConnectX-8 8180 adapter has an empty OSFP cage for connectivity.

Support for air-cooled adapter

The ThinkSystem NVIDIA ConnectX-8 8180 800Gbs XDR IB / 2x400GbE OSFP 1-port PCIe Gen6 x16 (Generic FW) supports specific cables.

No transceiver support: The air-cooled adapter does not currently support any transceivers. Use a DAC cable instead.

The following table lists the supported direct-attach copper (DAC) cables.

Support for water-cooled adapter

The ThinkSystem NVIDIA ConnectX-8 8180 800Gbs XDR IB / 2x400GbE OSFP 1-Port PCIe Gen6 x16 DWC (Generic FW) supports specific transceivers and cables.

The following table lists the supported transceivers.

The following table lists the supported optical cables.

The following table lists the supported direct-attach copper (DAC) cables.

Technical specifications

The adapter has the following technical specifications:

Implementation tip: By default, the ConnectX-8 8180 adapter attempts to establish a link using InfiniBand. If an Ethernet connection is required, the protocol must be changed on the adapter.
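On NVIDIA adapters, the link protocol is commonly changed with the `mlxconfig` utility from the NVIDIA Firmware Tools (MFT) package. The Python sketch below only assembles that command line and does not run it. It assumes that the ConnectX-8 8180 exposes the `LINK_TYPE_P1` parameter (1 = InfiniBand, 2 = Ethernet) as earlier ConnectX VPI adapters do, and the device path is a hypothetical placeholder; confirm both against the ConnectX-8 SuperNIC user manual before use.

```python
# Hypothetical helper that assembles (but does not run) an mlxconfig command
# to select the link protocol on port 1 of an NVIDIA adapter.
# Assumptions: MFT is installed, the adapter supports LINK_TYPE_P1
# (1 = InfiniBand, 2 = Ethernet), and DEVICE is a placeholder to replace
# with the device name reported by your MFT installation.
import shlex

LINK_TYPES = {"infiniband": 1, "ethernet": 2}
DEVICE = "/dev/mst/<device>"  # hypothetical placeholder


def link_type_command(device: str, protocol: str) -> list[str]:
    """Build the argument list that sets port 1 to the given protocol."""
    value = LINK_TYPES[protocol.lower()]
    # -y answers "yes" to mlxconfig's confirmation prompt
    return ["mlxconfig", "-d", device, "-y", "set", f"LINK_TYPE_P1={value}"]


if __name__ == "__main__":
    print(shlex.join(link_type_command(DEVICE, "Ethernet")))
```

A configuration change made this way typically takes effect only after the server is rebooted or the adapter firmware is reset.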

- Single OSFP cage

- InfiniBand Network Interface
  - Supports 200/100/50G PAM4
  - Speeds: 800Gb, 400Gb, 200Gb, 100Gb
  - Supports InfiniBand XDR/NDR/HDR/HDR100/EDR
  - IBTA v1.7-compliant
  - 16 million I/O channels
  - 256 to 4,000 byte MTU, 2GB messages

- Ethernet Network Interface
  - Supports 100/50G PAM4 and 25/10G NRZ
  - Speeds: 400Gb, 200Gb, 100Gb, 50Gb, 25Gb
  - Max bandwidth: 800Gb/s (2x 400Gb using a twin-transceiver or splitter cable)
  - Supports up to 8 split ports

- Protocol support
  - InfiniBand: IBTA v1.7; Auto-Negotiation: XDR (4 lanes x 200Gb/s per lane) port, NDR (4 lanes x 100Gb/s per lane) port, NDR200 (2 lanes x 100Gb/s per lane) port, HDR (50Gb/s per lane) port, HDR100 (2 lanes x 50Gb/s per lane) port, EDR (25Gb/s per lane) port, FDR (14.0625Gb/s per lane), 1X/2X/4X SDR (2.5Gb/s per lane).
  - Ethernet: 400GAUI-4 C2M, 400GBASE-CR4, 200GAUI-2 C2M, 200GAUI-4 C2M, 200GBASE-CR4, 100GAUI-2 C2M, 100GAUI-1 C2M, 100GBASE-CR4, 100GBASE-CR2, 100GBASE-CR1, 50GAUI-2 C2M, 50GAUI-1 C2M, 50GBASE-CR, 50GBASE-R2, 40GBASE-CR4, 40GBASE-R2, 25GBASE-R, 10GBASE-R, 10GBASE-CX4, 1000BASE-CX, CAUI-4 C2M, 25GAUI C2M, XLAUI C2M, XLPPI, SFI

- Host Interface
  - PCIe Gen6 x16 host interface via edge connector
  - Additional x16 interface via an MCIO connector on the adapter (requires Auxiliary cable)
  - MSI/MSI-X

- Optimized Cloud Networking
  - Stateless TCP offloads: IP / TCP / UDP checksum, LSO, LRO, GRO, TSS, RSS
  - SR-IOV
  - Ethernet Accelerated Switching & Packet Processing (ASAP2) for SDN and VNF
  - OVS acceleration
  - Overlay network accelerations: VXLAN, GENEVE, NVGRE
  - Connection tracking (L4 firewall) and NAT
  - Hierarchical QoS, Header rewrite, Flow mirroring, Flow-based statistics, Flow aging

- Advanced AI / HPC Networking
  - RDMA and RoCEv2 accelerations
  - Advanced, programmable congestion control
  - NVIDIA GPUDirect RDMA
  - GPUDirect Storage
  - In-network computing
  - High-speed packet reordering
  - MPI Accelerations
  - Burst-buffer offloads
  - Collective operations offloads
  - Rendezvous protocol offloads
  - Enhanced atomic operations

- AI / HPC Software
  - NCCL
  - HPC-X
  - DOCA UCC / UCX
  - OpenMPI
  - MVAPICH-2

- Platform security
  - Secure boot with hardware root of trust (RoT)
  - Secure firmware update
  - Flash encryption
  - Device attestation (SPDM 1.2)

- Cryptography
  - Inline crypto accelerations: IPsec, TLS, MACsec, PSP

- Management and Control
  - Network Control Sideband Interface (NC-SI)
  - MCTP over SMBus and PCIe PLDM for:
    - Monitor and Control DSP0248
    - Firmware Update DSP0267
    - Redfish Device Enablement DSP0218
    - Field-Replaceable Unit (FRU) DSP0257
  - Security Protocols and Data Models (SPDM) DSP0274
  - Serial Peripheral Interface (SPI) to flash
  - Joint Test Action Group (JTAG) IEEE 1149.1 and IEEE 1149.6

- Network Boot
  - InfiniBand or Ethernet
  - PXE boot
  - iSCSI boot
  - UEFI
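The lane figures in the Protocol support list translate into the port speeds listed for the InfiniBand interface by simple multiplication (lanes x per-lane rate). The Python sketch below makes that arithmetic explicit using nominal rates. The 4-lane counts for HDR and EDR are an assumption (a standard 4X port), because the list gives only their per-lane rates, and the even division of the 800Gb/s Ethernet bandwidth across 8 split ports is a hypothetical illustration, not a stated specification.

```python
# Aggregate InfiniBand port speed = lanes x per-lane rate (nominal Gb/s).
# Lane counts and rates come from the Protocol support list; the 4-lane
# counts for HDR and EDR are an assumption (standard 4X port).
IB_MODES = {
    # mode: (lanes, nominal Gb/s per lane)
    "XDR": (4, 200),
    "NDR": (4, 100),
    "NDR200": (2, 100),
    "HDR": (4, 50),
    "HDR100": (2, 50),
    "EDR": (4, 25),
}


def port_gbps(mode: str) -> int:
    """Return the nominal aggregate speed of one port in the given IB mode."""
    lanes, per_lane = IB_MODES[mode]
    return lanes * per_lane


def split_port_gbps(total_gbps: float, splits: int) -> float:
    """Hypothetical even division of Ethernet bandwidth across split ports."""
    return total_gbps / splits


if __name__ == "__main__":
    for mode in IB_MODES:
        print(f"{mode}: {port_gbps(mode)} Gb/s")
    # 800 Gb/s maximum Ethernet bandwidth shared by up to 8 split ports
    print(f"Per split port: {split_port_gbps(800, 8):.0f} Gb/s")
```

The resulting 800, 400, 200, and 100 Gb/s values line up with the InfiniBand speeds listed above.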

NVIDIA Unified Fabric Manager

NVIDIA Unified Fabric Manager (UFM) is InfiniBand networking management software that combines enhanced, real-time network telemetry with fabric visibility and control to support scale-out InfiniBand data centers.

The two offerings available from Lenovo are as follows:

- UFM Telemetry for Real-Time Monitoring

The UFM Telemetry platform provides network validation tools to monitor network performance and conditions, capturing and streaming rich real-time network telemetry information, application workload usage, and system configuration to an on-premises or cloud-based database for further analysis.

- UFM Enterprise for Fabric Visibility and Control

The UFM Enterprise platform combines the benefits of UFM Telemetry with enhanced network monitoring and management. It performs automated network discovery and provisioning, traffic monitoring, and congestion discovery. It also enables job schedule provisioning and integrates with industry-leading job schedulers and cloud and cluster managers, including Slurm and Platform Load Sharing Facility (LSF).

The following table lists the subscription licenses available from Lenovo.

For more information, see the following web page:

https://www.nvidia.com/en-us/networking/infiniband/ufm/

Server support

The following tables list the ThinkSystem servers that are compatible.

Support of adapters with generic firmware

One or more of the adapters described in this product guide use standard vendor firmware (look for "Generic FW" or "Generic" in the adapter names). These adapters are supported in Lenovo servers; however, there are currently limitations on the use of Lenovo management tools.

Support in Lenovo XClarity management tools for adapters with generic firmware is per the following table.

Tip: Always use firmware obtained from Lenovo sources to ensure that it is fully tested and supported by Lenovo. Do not use firmware obtained from the vendor website unless directed to do so by Lenovo support.

Operating system support

The following table lists the OS support for the ThinkSystem NVIDIA ConnectX-8 8180 800Gbs XDR IB / 2x400GbE OSFP 1-port PCIe Gen6 x16 (Generic FW).

Tip: This table is automatically generated based on data from Lenovo ServerProven.

Regulatory approvals

The adapter has the following regulatory approvals:

- Safety: CB / cTUVus / CE

- EMC: CE / FCC / VCCI / ICES / RCM / KC

- RoHS: RoHS Compliant

Operating environment

The adapter has the following operating characteristics:

- Maximum power available through OSFP port: 17W

- Temperature
  - Operational: 0°C to 55°C
  - Non-operational: -40°C to 70°C

- Humidity: 90% relative humidity

Warranty

One year limited warranty. When installed in a Lenovo server, the adapter assumes the server’s base warranty and any warranty upgrades.

Related publications

For more information, refer to these documents:

- ThinkSystem Ethernet and InfiniBand Adapter Reference
  https://lenovopress.lenovo.com/lp1594-thinksystem-ethernet-infiniband-adapter-reference

- Lenovo ServerProven compatibility information
  http://serverproven.lenovo.com

- NVIDIA InfiniBand product page
  https://www.nvidia.com/en-us/networking/infiniband-adapters/

- ConnectX-8 SuperNIC user manual
  https://docs.nvidia.com/networking/display/connectx8supernic

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

ServerProven®

ThinkSystem®

XClarity®

The following terms are trademarks of other companies:

AMD is a trademark of Advanced Micro Devices, Inc.

Intel®, the Intel logo is a trademark of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

LSF® is a trademark of IBM in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.


Full Change History

Changes in the November 11, 2025 update:

- Added an additional transceiver and cables for the water-cooled ConnectX-8 8180 adapter - Supported transceivers and cables section

Changes in the August 1, 2025 update:

- Added a note regarding the need for RoCE when Ethernet mode is enabled - Technical specifications section

Changes in the May 27, 2025 update:

- The following transceiver is no longer supported:

- Lenovo NVIDIA XDR OSFP Single Mode Solo-Transceiver (Generic), 4TC7B05746

Changes in the May 21, 2025 update:

- Added OS support information - Operating system support section

Changes in the May 19, 2025 update:

- The following 800Gb transceiver has been reactivated - Transceivers for water-cooled adapter:

- Lenovo NVIDIA XDR OSFP Single Mode Solo-Transceiver (Generic), 4TC7B05746

Changes in the April 22, 2025 update:

- Added the following adapter for water-cooled servers:

- ThinkSystem NVIDIA ConnectX-8 8180 800Gbs XDR IB / 2x400GbE OSFP 1-Port PCIe Gen6 x16 DWC (Generic FW), CAGC

- Added the supported transceiver and cables for the water-cooled adapter - Supported transceivers and cables section

First published: February 25, 2025
