Updated: 16 Dec 2024

Form Number: LP1732

PDF size: 24 pages, 2.3 MB

Abstract

The ThinkSystem NVIDIA H100 GPU delivers unprecedented performance, scalability, and security for every workload. The GPUs use breakthrough innovations in the NVIDIA Hopper™ architecture to deliver industry-leading conversational AI, speeding up large language models by 30X over the previous generation.

This product guide provides essential presales information to understand the NVIDIA H100 GPUs and their key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the GPUs and consider their use in IT solutions.

Change History

Changes in the December 16, 2024 update:

- Removed the vGPU and Omniverse software part numbers because they are not supported with the H100 GPUs - NVIDIA GPU software section

Introduction

The ThinkSystem NVIDIA H100 GPU delivers unprecedented performance, scalability, and security for every workload. The GPUs use breakthrough innovations in the NVIDIA Hopper™ architecture to deliver industry-leading conversational AI, speeding up large language models by 30X over the previous generation.

The NVIDIA H100 GPU features fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, further extending NVIDIA’s AI leadership with up to 9X faster training and an incredible 30X inference speedup on large language models. For high-performance computing (HPC) applications, the GPUs triple the floating-point operations per second (FLOPS) of FP64 and add dynamic programming (DPX) instructions to deliver up to 7X higher performance.
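To make the FP8 claim more concrete, the following Python sketch emulates what scaling a tensor into FP8 involves. It assumes the E4M3 variant of FP8 (3 mantissa bits, largest finite value 448) and a simple per-tensor scale taken from the tensor's largest absolute value. This is an illustration of the number format only, not the Transformer Engine's actual scaling recipe or kernels, and the function names are hypothetical.

```python
import math

# Assumed FP8 E4M3 parameters: 3 mantissa bits, smallest normal 2**-6,
# largest finite magnitude 448 (values beyond it saturate in this sketch).
E4M3_MAX = 448.0
E4M3_MANTISSA_BITS = 3
E4M3_MIN_NORMAL_EXP = -6


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    a = abs(x)
    _, e = math.frexp(a)                       # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_NORMAL_EXP)      # subnormals share the 2**-6 spacing
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)   # gap between neighbouring values
    q = round(a / step) * step                 # Python round() is half-to-even
    return math.copysign(min(q, E4M3_MAX), x)


def fp8_quantize(values):
    """Per-tensor scaling: stretch the largest |value| to E4M3_MAX, then round."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def fp8_dequantize(codes, scale):
    """Undo the per-tensor scale to recover approximate original values."""
    return [c / scale for c in codes]


codes, scale = fp8_quantize([0.1, -2.5, 3.0, 0.0])
print(codes, fp8_dequantize(codes, scale))
```

The per-tensor scale is the key design point: with only 3 mantissa bits, values must be stretched to fill the narrow FP8 range, and the scale is kept alongside the data so results can be converted back to higher precision.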

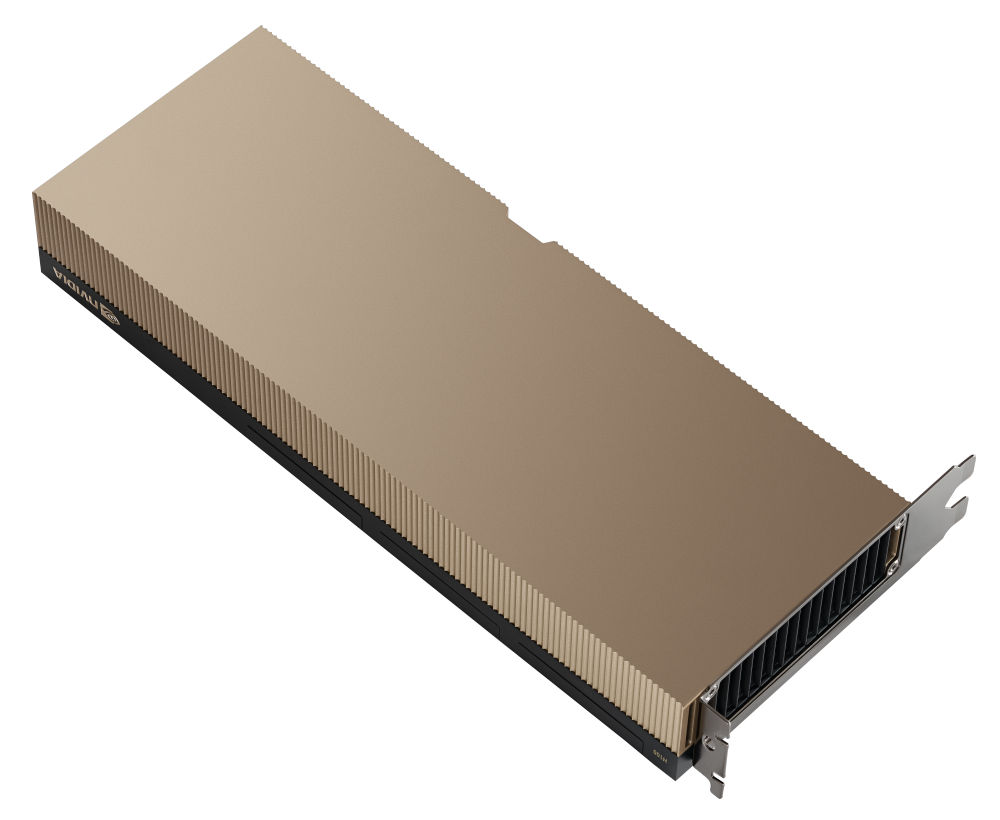

The following figure shows the ThinkSystem NVIDIA H100 GPU in the double-width PCIe adapter form factor.

Figure 1. ThinkSystem NVIDIA H100 NVL 94GB PCIe Gen5 Passive GPU

Did you know?

The NVIDIA H100 family is available in both a double-wide PCIe adapter form factor and an SXM form factor. The latter is used in Lenovo's Neptune direct-water-cooled ThinkSystem SD665-N V3 server for the ultimate in GPU performance and heat management.

The NVIDIA H100 NVL Tensor Core GPU is optimized for large language model (LLM) inference, with its high compute density, high memory bandwidth, high energy efficiency, and unique NVLink architecture.

Part number information

The following table shows the part numbers for the ThinkSystem NVIDIA H100 GPU.

Not available in China, Hong Kong and Macau: The H100 GPUs are not available in China, Hong Kong and Macau. For these markets, the H800 is available. See the NVIDIA H800 product guide for details, https://lenovopress.lenovo.com/LP1814.

The PCIe option part numbers include the following:

- One GPU with full-height (3U) adapter bracket attached

- Documentation

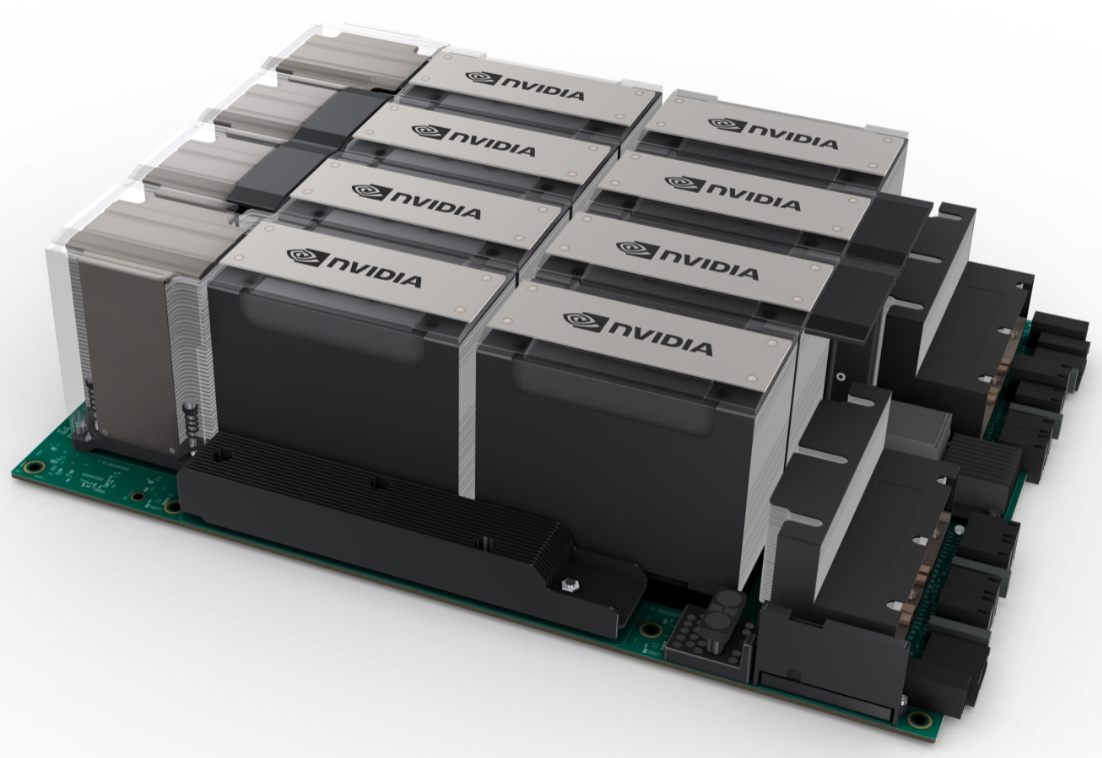

The following figure shows the NVIDIA H100 SXM5 8-GPU Board with heatsinks installed in the ThinkSystem SR680a V3 and ThinkSystem SR685a V3 servers.

Figure 2. NVIDIA H100 SXM5 8-GPU Board in the ThinkSystem SR680a V3 and SR685a V3 servers

Features

The ThinkSystem NVIDIA H100 GPU delivers high performance, scalability, and security for every workload. The GPU uses breakthrough innovations in the NVIDIA Hopper™ architecture to deliver industry-leading conversational AI, speeding up large language models (LLMs) by 30X over the previous generation.

The PCIe versions of the NVIDIA H100 GPUs include a five-year software subscription, with enterprise support, to the NVIDIA AI Enterprise software suite, simplifying AI adoption with the highest performance. This ensures organizations have access to the AI frameworks and tools they need to build accelerated AI workflows such as AI chatbots, recommendation engines, vision AI, and more.

The NVIDIA H100 GPU features fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, further extending NVIDIA’s AI leadership with up to 9X faster training and an incredible 30X inference speedup on large language models. For high-performance computing (HPC) applications, the GPU triples the floating-point operations per second (FLOPS) of FP64 and adds dynamic programming (DPX) instructions to deliver up to 7X higher performance. With second-generation Multi-Instance GPU (MIG), built-in NVIDIA confidential computing, and the NVIDIA NVLink Switch System, the NVIDIA H100 GPU securely accelerates all workloads for every data center from enterprise to exascale.
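Sizing MIG partitions is largely slice arithmetic. The Python sketch below assumes that an H100 80GB exposes 7 compute slices and 8 memory slices of roughly 10 GB each, and that the listed profile names and slice counts are those of that GPU; the H100 NVL 94GB uses different memory profiles. It models only homogeneous partitions (every instance uses the same profile) and ignores the placement rules for mixed layouts, so treat the output as an estimate and confirm on a real system with `nvidia-smi mig -lgip`. The class and function names are hypothetical.

```python
from dataclasses import dataclass

# Assumed slice counts for an H100 80GB; verify with `nvidia-smi mig -lgip`.
COMPUTE_SLICES = 7
MEMORY_SLICES = 8


@dataclass(frozen=True)
class MigProfile:
    name: str
    compute_slices: int
    memory_slices: int


# Assumed profile table (name, compute slices, memory slices).
PROFILES = [
    MigProfile("1g.10gb", 1, 1),
    MigProfile("1g.20gb", 1, 2),
    MigProfile("2g.20gb", 2, 2),
    MigProfile("3g.40gb", 3, 4),
    MigProfile("4g.40gb", 4, 4),
    MigProfile("7g.80gb", 7, 8),
]


def max_homogeneous_instances(p: MigProfile) -> int:
    """Instances of one profile that fit, limited by whichever slice type runs out first."""
    return min(COMPUTE_SLICES // p.compute_slices, MEMORY_SLICES // p.memory_slices)


for p in PROFILES:
    print(f"{p.name}: up to {max_homogeneous_instances(p)} instance(s)")
```

Taking the minimum of the two divisions reflects the fact that an instance needs both compute and memory slices, so the scarcer resource caps the count; for example, 1g.20gb instances run out of memory slices after four.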

Key features of the NVIDIA H100 GPU:

- NVIDIA H100 Tensor Core GPU

Built with 80 billion transistors using a cutting-edge TSMC 4N process custom-tailored for NVIDIA’s accelerated compute needs, H100 is the world’s most advanced chip ever built. It features major advances to accelerate AI, HPC, memory bandwidth, interconnect, and communication at data center scale.

- Transformer Engine

The Transformer Engine uses software and Hopper Tensor Core technology designed to accelerate training for models built from the world’s most important AI model building block, the transformer. Hopper Tensor Cores can apply mixed FP8 and FP16 precisions to dramatically accelerate AI calculations for transformers.

- NVLink Switch System

The NVLink Switch System enables the scaling of multi-GPU input/output (IO) across multiple servers. The system delivers up to 9X higher bandwidth than InfiniBand HDR on the NVIDIA Ampere architecture.

- NVIDIA Confidential Computing

NVIDIA Confidential Computing is a built-in security feature of Hopper that makes NVIDIA H100 the world’s first accelerator with confidential computing capabilities. Users can protect the confidentiality and integrity of their data and applications in use while accessing the unsurpassed acceleration of H100 GPUs.

- Second-Generation Multi-Instance GPU (MIG)

The Hopper architecture’s second-generation MIG supports multi-tenant, multi-user configurations in virtualized environments, securely partitioning the GPU into isolated, right-sized instances to maximize quality of service (QoS) for 7X more secured tenants.

- DPX Instructions

Hopper’s DPX instructions accelerate dynamic programming algorithms by 40X compared to CPUs and 7X compared to NVIDIA Ampere architecture GPUs. This leads to dramatically faster times in disease diagnosis, real-time routing optimizations, and graph analytics.
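Dynamic programming algorithms of the kind DPX targets repeat a small fused step (add two values, then take a minimum or maximum) a very large number of times. As an illustration only, the following plain-Python Floyd-Warshall all-pairs shortest-path routine, a classic route-optimization algorithm, marks that inner step. It runs on the CPU and does not use DPX instructions; the function name is hypothetical.

```python
import math


def floyd_warshall(dist):
    """All-pairs shortest paths. dist[i][j] is the edge weight, or math.inf if no edge."""
    n = len(dist)
    d = [row[:] for row in dist]  # work on a copy
    for k in range(n):            # allow paths through intermediate node k
        for i in range(n):
            dik = d[i][k]
            if dik == math.inf:
                continue
            for j in range(n):
                # Fused add-then-min: the recurrence pattern DPX instructions accelerate
                cand = dik + d[k][j]
                if cand < d[i][j]:
                    d[i][j] = cand
    return d


INF = math.inf
graph = [
    [0, 3, INF, 7],
    [8, 0, 2, INF],
    [5, INF, 0, 1],
    [2, INF, INF, 0],
]
print(floyd_warshall(graph))
```

The triple loop performs n³ add-then-min steps, which is why hardware support for that single fused operation pays off on large graphs.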

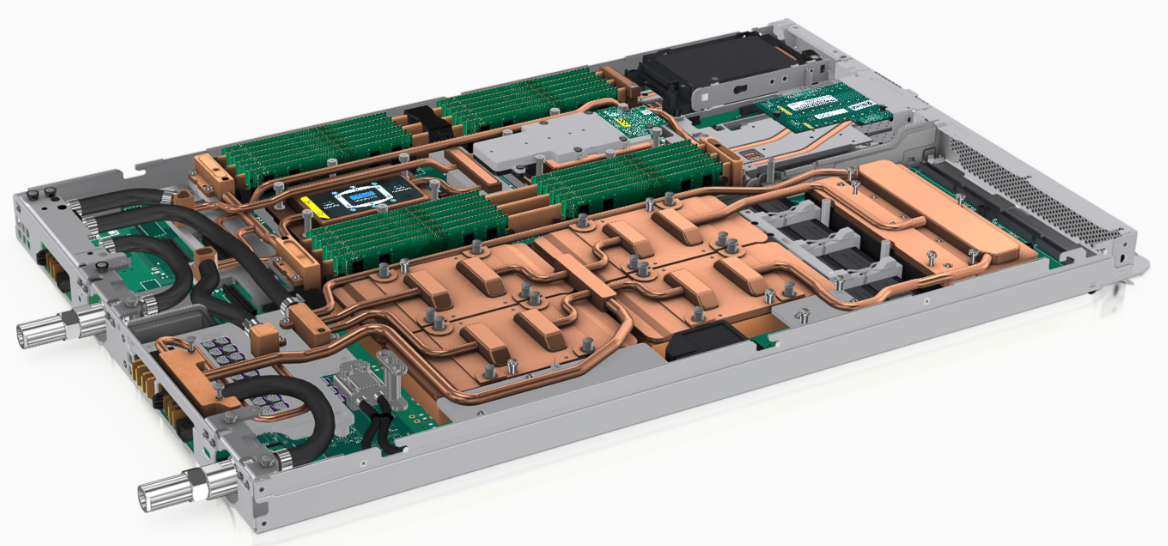

The following figure shows the NVIDIA H100 SXM5 4-GPU Board installed in the ThinkSystem SD665-N V3 server.

Figure 3. NVIDIA H100 SXM5 4-GPU Board in the ThinkSystem SD665-N V3 server

Technical specifications

The following table lists the GPU processing specifications and performance of the NVIDIA H100 GPU.

* With structural sparsity enabled
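The sparsity footnote refers to NVIDIA's fine-grained 2:4 structured sparsity, in which at most two values in every group of four weights are nonzero so the Tensor Cores can skip the zeros. A minimal sketch of the magnitude-based pruning step follows, assuming a flat list of weights whose length is a multiple of four. It is not NVIDIA's tooling, and real workflows typically fine-tune the pruned model to recover accuracy, which is not shown; the function name is hypothetical.

```python
def prune_2_4(weights):
    """Zero the two smallest-magnitude values in every group of four (2:4 pattern)."""
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4")
    out = list(weights)
    for g in range(0, len(out), 4):
        group = range(g, g + 4)
        # Keep the indices of the two largest-magnitude entries in this group
        keep = sorted(group, key=lambda i: abs(out[i]), reverse=True)[:2]
        for i in group:
            if i not in keep:
                out[i] = 0.0
    return out


print(prune_2_4([0.1, -0.9, 0.5, 0.05, 1.0, 2.0, 3.0, 4.0]))
```

Because the pattern is fixed per group of four, the hardware can store only the kept values plus small position indices, which is what makes the doubled sparse throughput possible.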

Server support

The following tables list the ThinkSystem servers that are compatible with the H100 GPUs.

NVLink server support: The NVLink Ampere bridge is supported with additional NVIDIA A-series and H-series GPUs. As a result, there are additional servers listed as supporting the bridge that don't support the H100 GPU.

- Contains 8 separate GPUs connected via high-speed interconnects

- Contains 4 separate GPUs connected via high-speed interconnects

- Contains 4 separate GPUs connected via high-speed interconnects

Operating system support

The following table lists the supported operating systems.

Tip: These tables are automatically generated based on data from Lenovo ServerProven.

1 The hardware is not supported with AMD EPYC 7002 processors.

2 For limitations, refer to Support Tip TT1064.

3 For limitations, refer to Support Tip TT1591.

4 Ubuntu 22.04.3 LTS/Ubuntu 22.04.4 LTS

NVIDIA GPU software

This section lists the NVIDIA software that is available from Lenovo.

The PCIe adapter H100 GPUs include a five-year software subscription, including enterprise support, to the NVIDIA AI Enterprise software suite:

- ThinkSystem NVIDIA H100 NVL 94GB PCIe Gen5 Passive GPU, 4X67A89325

- ThinkSystem NVIDIA H100 80GB PCIe Gen5 Passive GPU, 4X67A82257

This license is equivalent to part number 7S02001HWW listed in the NVIDIA AI Enterprise Software section below.

To activate the NVIDIA AI Enterprise license, see the following page:

https://www.nvidia.com/en-us/data-center/activate-license/

SXM GPUs: The NVIDIA AI Enterprise software suite is not included with the SXM H100 GPUs and must be ordered separately if needed.

NVIDIA AI Enterprise Software

Lenovo offers the NVIDIA AI Enterprise (NVAIE) cloud-native enterprise software. NVIDIA AI Enterprise is an end-to-end, cloud-native suite of AI and data analytics software, optimized, certified, and supported by NVIDIA to run on VMware vSphere and bare-metal with NVIDIA-Certified Systems™. It includes key enabling technologies from NVIDIA for rapid deployment, management, and scaling of AI workloads in the modern hybrid cloud.

NVIDIA AI Enterprise is licensed on a per-GPU basis. NVIDIA AI Enterprise products can be purchased as either a perpetual license with support services, or as an annual or multi-year subscription.

- The perpetual license provides the right to use the NVIDIA AI Enterprise software indefinitely, with no expiration. NVIDIA AI Enterprise with perpetual licenses must be purchased in conjunction with one-year, three-year, or five-year support services. A one-year support service is also available for renewals.

- The subscription offerings are an affordable option to allow IT departments to better manage the flexibility of license volumes. NVIDIA AI Enterprise software products with subscription include support services for the duration of the software’s subscription license.

The features of NVIDIA AI Enterprise Software are listed in the following table.

Note: Maximum 10 concurrent VMs per product license

The following table lists the ordering part numbers and feature codes.

Find more information in the NVIDIA AI Enterprise Sizing Guide.

NVIDIA HPC Compiler Software

Auxiliary power cables

The power cables needed for the H100 SXM GPUs are included with the supported servers.

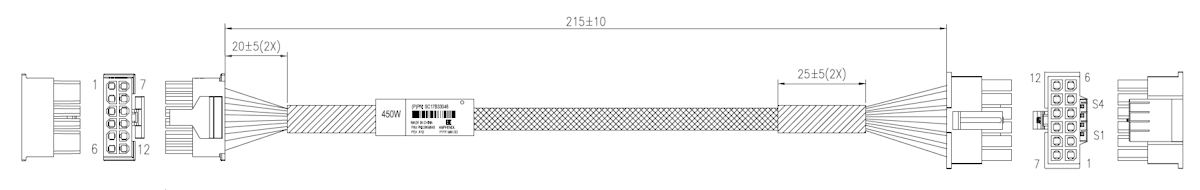

The H100 PCIe GPU option part number does not ship with auxiliary power cables. Cables are server-specific due to length requirements. For CTO orders, auxiliary power cables are derived by the configurator. For field upgrades, cables will need to be ordered separately as listed in the table below.

| Servers | Cable | Option part number | Description | Feature code | SBB | Base | FRU |
|---|---|---|---|---|---|---|---|
| SR650 V3, SR655 V3, SR665 V3, SR665, SR650 V2 | | * | | | | | |
| SR675 V3 | 235mm 16-pin (2x6+4) | 4X97A84510 | ThinkSystem SR675 V3 Supplemental Power Cable for H100 GPU | BSD2 | SBB7A65299 | SC17B39301 | 03LE554 |
| SR850 V3, SR860 V3 | 200mm 16-pin (2x6+4) | 4X97A88016 | ThinkSystem SR850 V3/SR860 V3 H100 GPU Power Cable Option Kit | BW28 | SBB7A72759 | SC17B40604 | 03LF915 |
| SR670 V2 | 215mm 16-pin (2x6+4) | 4X97A85027 | ThinkSystem SR670 V2 H100/L40 GPU Option Power Cable | BRWL | SBB7A66339 | SC17B33046 | 03KM845 |

* The option part numbers for the SR650 V3, SR655 V3, SR665 V3, SR665, and SR650 V2 are for thermal kits and include other components needed to install the GPU. See the SR650 V3 product guide, SR655 V3 product guide, or SR665 V3 product guide for details.
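For field upgrades, the cable selection described above can be captured in a small table-driven helper. This is a hypothetical sketch, not a Lenovo tool: the option part numbers are transcribed from the auxiliary power cable table, and the helper returns None for the SR650 V3, SR655 V3, SR665 V3, SR665, and SR650 V2, whose entries are thermal kits documented in the server product guides.

```python
from typing import Optional

# Transcribed from the auxiliary power cable table: server -> (option PN, cable)
AUX_POWER_CABLES = {
    "SR675 V3": ("4X97A84510", "235mm 16-pin (2x6+4)"),
    "SR850 V3": ("4X97A88016", "200mm 16-pin (2x6+4)"),
    "SR860 V3": ("4X97A88016", "200mm 16-pin (2x6+4)"),
    "SR670 V2": ("4X97A85027", "215mm 16-pin (2x6+4)"),
}

# Servers whose option part numbers are thermal kits (see server product guides)
THERMAL_KIT_SERVERS = {"SR650 V3", "SR655 V3", "SR665 V3", "SR665", "SR650 V2"}


def field_upgrade_part(server: str) -> Optional[str]:
    """Return the H100 power cable option PN for a field upgrade.

    Returns None when the server uses a thermal kit instead; raises KeyError
    for servers not listed in the table.
    """
    if server in AUX_POWER_CABLES:
        return AUX_POWER_CABLES[server][0]
    if server in THERMAL_KIT_SERVERS:
        return None  # order the thermal kit listed in the server product guide
    raise KeyError(f"{server}: no H100 auxiliary power cable listed")


print(field_upgrade_part("SR675 V3"))
```

CTO orders do not need this step, because the configurator derives the cables automatically.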

Regulatory approvals

The NVIDIA H100 GPU has the following regulatory approvals:

- RCM

- BSMI

- CE

- FCC

- ICES

- KCC

- cUL, UL

- VCCI

Operating environment

The NVIDIA H100 GPU has the following operating characteristics:

- Ambient temperature

- Operational: 0°C to 50°C (-5°C to 55°C for short term*)

- Storage: -40°C to 75°C

- Relative humidity:

- Operational: 5-85% (5-93% short term*)

- Storage: 5-95%

* A period of not more than 96 consecutive hours, not to exceed 15 days per year.
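The short-term limits combine a temperature band with a time budget. The sketch below checks both, assuming ambient readings in degrees Celsius and reading "15 days per year" as a cumulative 15 x 24 hours of excursions within a single year; a count of calendar days touched would be a stricter alternative reading. The function names are hypothetical.

```python
from datetime import datetime, timedelta

# Operating limits from this guide (ambient temperature, degrees C)
NORMAL_RANGE_C = (0.0, 50.0)
SHORT_TERM_RANGE_C = (-5.0, 55.0)
MAX_EXCURSION = timedelta(hours=96)
# Assumption: "15 days per year" read as a cumulative 15 x 24 hours
MAX_EXCURSION_PER_YEAR = timedelta(days=15)


def classify_ambient(temp_c: float) -> str:
    """Classify a reading as 'normal', 'short-term', or 'out-of-spec'."""
    lo, hi = NORMAL_RANGE_C
    if lo <= temp_c <= hi:
        return "normal"
    lo, hi = SHORT_TERM_RANGE_C
    if lo <= temp_c <= hi:
        return "short-term"
    return "out-of-spec"


def excursions_ok(excursions: list[tuple[datetime, datetime]]) -> bool:
    """Check one year's short-term excursions (start, end) against both limits."""
    total = timedelta()
    for start, end in excursions:
        length = end - start
        if length > MAX_EXCURSION:   # a single excursion longer than 96 hours
            return False
        total += length
    return total <= MAX_EXCURSION_PER_YEAR


t0 = datetime(2024, 1, 1)
print(classify_ambient(53.0), excursions_ok([(t0, t0 + timedelta(hours=48))]))
```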

Warranty

One-year limited warranty. When installed in a Lenovo server, the GPU assumes the server’s base warranty and any warranty upgrades.

Seller training courses

The following sales training courses are offered for employees and partners (login required). Courses are listed in date order.

Lenovo VTT Cloud Architecture: Empowering AI Innovation with NVIDIA RTX Pro 6000 and Lenovo Hybrid AI Services

2025-09-18 | 68 minutes | Employees Only

Join Dinesh Tripathi, Lenovo Technical Team Lead for GenAI and Jose Carlos Huescas, Lenovo HPC & AI Product Manager for an in-depth, interactive technical webinar. This session will explore how to effectively position the NVIDIA RTX PRO 6000 Blackwell Server Edition in AI and visualization workflows, with a focus on real-world applications and customer value.

Published: 2025-09-18

We’ll cover:

- NVIDIA RTX PRO 6000 Blackwell Overview: Key specs, performance benchmarks, and use cases in AI, rendering, and simulation.

- Positioning Strategy: How to align NVIDIA RTX PRO 6000 with customer needs across industries like healthcare, manufacturing, and media.

- Lenovo Hybrid AI 285 Services: Dive into Lenovo’s Hybrid AI 285 architecture and learn how it supports scalable AI deployments from edge to cloud.

Whether you're enabling AI solutions or guiding customers through infrastructure decisions, this session will equip you with the insights and tools to drive impactful conversations.

Tags: Industry solutions, SMB, Services, Technical Sales, Technology solutions

Length: 68 minutes

Course code: DVCLD227

Start the training:

Employee link: Grow@Lenovo

Think AI Weekly: ISG & SSG Better Together: Uniting AI Solutions & Services for Smarter Outcomes

2025-08-01 | 55 minutes | Employees Only

View this session to hear from our speakers Allen Holmes, AI Technologist, ISG and Balaji Subramaniam, AI Regional Leader-Americas, SSG.

Published: 2025-08-01

Topics include:

• An overview of ISG & SSG AI CoE Offerings with Customer Case Studies

• The Enterprise AI Deal Engagement Flow with ISG and SSG

• How sellers can leverage this partnership to differentiate with Enterprise clients.

• NEW COURSE: From Inception to Execution: Evolution of an AI Deal

Tags: Artificial Intelligence (AI), Sales, Services, Technology Solutions, TruScale Infrastructure as a Service

Length: 55 minutes

Course code: DTAIW145

Start the training:

Employee link: Grow@Lenovo

Think AI Weekly: Third-Party Due Diligence Requirements for GPU Opportunities

2025-07-24 | 46 minutes | Employees Only

View this session to hear from Tanya Roychowdhury, Legal Counsel Director, and Andrea Fazio, Third-party Due Diligence Project Manager, as they explain:

Published: 2025-07-24

- What are the requirements?

- Why are they important?

- What this means to sales

Tags: Artificial Intelligence (AI), DataCenter Products, NVIDIA, Sales, Technical Sales

Length: 46 minutes

Course code: DTAIW143

Start the training:

Employee link: Grow@Lenovo

ThinkSystem Supercomputing Servers Powered by NVIDIA

2025-06-27 | 30 minutes | Employees and Partners

This course offers you information about the Lenovo SC777 V4 Neptune server, the first Lenovo server to use an Arm processor from NVIDIA. By the end of this course, you’ll be able to list three features of the ThinkSystem SC777 V4 Neptune server, list three features of the ThinkSystem N1380 Neptune enclosure, describe two customer benefits of the ThinkSystem SC777 V4 Neptune server, and list four workload environments to which the SC777 V4 server is well suited.

Published: 2025-06-27

Tags: DataCenter Products, NVIDIA, ThinkSystem

Length: 30 minutes

Course code: SXXW2545

Start the training:

Employee link: Grow@Lenovo

Partner link: Lenovo 360 Learning Center

VTT AI: NVIDIA and Lenovo: Data Center Platform Overview

2025-06-10 | 77 minutes | Employees Only

Please join this session to hear Steve Stein, Senior Product Marketing Manager, NVIDIA and Naman Malhotra, Senior Product Manager, Lenovo as they present these topics:

Published: 2025-06-10

• NVIDIA Accelerated Computing Portfolio

• Use Cases and Positioning

• Lenovo Platforms and Solutions

Tags: Artificial Intelligence (AI), Nvidia, Server

Length: 77 minutes

Course code: DVAI216

Start the training:

Employee link: Grow@Lenovo

VTT AI: Introducing the Lenovo Hybrid AI 285 Platform April 2025

2025-04-30 | 60 minutes | Employees Only

The Lenovo Hybrid AI 285 Platform enables enterprises of all sizes to quickly deploy AI infrastructures supporting use cases as either new greenfield environments or as an extension to current infrastructures. The 285 Platform enables the use of the NVIDIA AI Enterprise software stack. The Hybrid AI 285 platform is the perfect foundation supporting Lenovo Validated Designs (LVDs).

Published: 2025-04-30

• Technical overview of the Hybrid AI 285 platform

• Hybrid AI platforms as infrastructure frameworks for LVDs addressing data center-based AI solutions.

• Accelerate AI adoption and reduce deployment risks

Tags: Artificial Intelligence (AI), Nvidia, Technical Sales, Lenovo Hybrid AI 285

Length: 60 minutes

Course code: DVAI215

Start the training:

Employee link: Grow@Lenovo

Lenovo Cloud Architecture VTT: Supercharge Your Enterprise AI with NVIDIA AI Enterprise on Lenovo Hybrid AI Platform

2025-04-17 | 75 minutes | Employees and Partners

Join us for an in-depth webinar with Justin King, Principal Product Marketing Manager for Enterprise AI, exploring the power of NVIDIA AI Enterprise, delivering Generative and Agentic AI outcomes deployed with Lenovo Hybrid AI platform environments.

Published: 2025-04-17

In today’s data-driven landscape, AI is evolving at high speed, with new techniques delivering more accurate responses. Enterprises are seeking not just an understanding of AI but also ways to achieve AI-driven business outcomes.

With this, the demand for secure, scalable, and high-performing AI operations, and for the skills to deliver them, is top of mind for many. Learn how NVIDIA AI Enterprise, a comprehensive software suite optimized for NVIDIA GPUs, provides the tools and frameworks, including NVIDIA NIM, NeMo, and Blueprints, to accelerate AI development and deployment while reducing risk, all within the control and security of your Lenovo customer’s hybrid AI environment.

Tags: Artificial Intelligence (AI), Cloud, Data Management, Nvidia, Technical Sales

Length: 75 minutes

Course code: DVCLD221

Start the training:

Employee link: Grow@Lenovo

Partner link: Lenovo 360 Learning Center

AI VTT: GTC Update and The Lenovo LLM Sizing Guide

2025-03-12 | 86 minutes | Employees Only

This session has two parts. In part one, Robert Daigle, Director, Global AI Solutions, and Hande Sahin-Bahceci, AI Solutions Marketing Leader, explain the upcoming announcements for NVIDIA GTC. In part two, Sachin Wani, AI Data Scientist, explains the Lenovo LLM Sizing Guide, covering these topics:

Published: 2025-03-12

• Minimum GPU requirements for fine-tuning/training and inference

• Gathering requirements for the customer's use case

• LLMs from a technical perspective

Tags: Artificial Intelligence (AI), Technical Sales

Length: 86 minutes

Course code: DVAI214

Start the training:

Employee link: Grow@Lenovo

Partner Technical Webinar - NVIDIA Portfolio

2024-11-06 | 60 minutes | Employees and Partners

In this 60-minute replay, Jason Knudsen of NVIDIA presented the NVIDIA Computing Platform. Jason talked about the full portfolio from GPUs to Networking to AI Enterprise and NIMs.

Published: 2024-11-06

Tags: Artificial Intelligence (AI), Nvidia

Length: 60 minutes

Course code: 110124

Start the training:

Employee link: Grow@Lenovo

Partner link: Lenovo 360 Learning Center

Q2 Solutions Launch TruScale GPU Next Generation Management in the AI Era Quick Hit

2024-09-10 | 6 minutes | Employees and Partners

This Quick Hit focuses on Lenovo announcing additional ways to help you build, scale, and evolve your customer’s private AI faster for improved ROI with TruScale GPU as a Service, AI-driven systems management, and infrastructure transformation services.

Published: 2024-09-10

Tags: Artificial Intelligence (AI), Services, TruScale

Length: 6 minutes

Course code: SXXW2543a

Start the training:

Employee link: Grow@Lenovo

Partner link: Lenovo 360 Learning Center

VTT AI: The NetApp AIPod with Lenovo for NVIDIA OVX

2024-08-13 | 38 minutes | Employees and Partners

For some organizations, AI is out of reach due to cost, integration complexity, and time to deployment. Previously, organizations relied on frequently retraining their LLMs with the latest data, a costly and time-consuming process. The NetApp AIPod with Lenovo for NVIDIA OVX combines NVIDIA-Certified OVX Lenovo ThinkSystem SR675 V3 servers with validated NetApp storage to create a converged infrastructure specifically designed for AI workloads. Using this solution, customers will be able to conduct AI RAG and inferencing operations for use cases like chatbots, knowledge management, and object recognition.

Published: 2024-08-13

Topics covered in this VTT session include:

• Where Lenovo fits in the solution

• NetApp AIPod with Lenovo for NVIDIA OVX Solution Overview

• Challenges/pain points that this solution solves for enterprises deploying AI

• Solution value/benefits of the combined NetApp, Lenovo, and NVIDIA OVX-Certified Solution

Tags: Artificial Intelligence (AI), Nvidia, Sales, Technical Sales, ThinkSystem

Length: 38 minutes

Course code: DVAI206

Start the training:

Employee link: Grow@Lenovo

Partner link: Lenovo 360 Learning Center

Guidance for Selling NVIDIA Products at Lenovo for ISG

2024-07-01 | 25 minutes | Employees and Partners

This course gives key talking points about the Lenovo and NVIDIA partnership in the Data Center. Details are included on where to find the products that are included in the partnership and what to do if NVIDIA products are needed that are not included in the partnership. Contact information is included if help is needed in choosing which product is best for your customer. At the end of this session sellers should be able to explain the Lenovo and NVIDIA partnership, describe the products Lenovo can sell through the partnership with NVIDIA, help a customer purchase other NVIDIA products, and get assistance with choosing NVIDIA products to fit customer needs.

Published: 2024-07-01

Tags: Artificial Intelligence (AI), Nvidia

Length: 25 minutes

Course code: DNVIS102

Start the training:

Employee link: Grow@Lenovo

Partner link: Lenovo 360 Learning Center

Think AI Weekly: Lenovo AI PCs & AI Workstations

2024-05-23 | 60 minutes | Employees Only

Join Mike Leach, Sr. Manager, Workstations Solutions, and Pooja Sathe, Director Commercial AI PCs, as they discuss why Lenovo AI Developer Workstations and AI PCs are the most powerful, where they fit into the device-to-cloud ecosystem, and this week’s Microsoft announcement, Copilot+ PC.

Published: 2024-05-23

Tags: Artificial Intelligence (AI), ThinkStation

Length: 60 minutes

Course code: DTAIW105

Start the training:

Employee link: Grow@Lenovo

Related publications

For more information, refer to these documents:

- ThinkSystem and ThinkAgile GPU Summary: https://lenovopress.lenovo.com/lp0768-thinksystem-thinkagile-gpu-summary

- ServerProven compatibility: https://serverproven.lenovo.com/

- NVIDIA H100 product page: https://www.nvidia.com/en-us/data-center/h100/

- NVIDIA Hopper Architecture page: https://www.nvidia.com/en-us/data-center/technologies/hopper-architecture/

- ThinkSystem SD665-N V3 product guide: https://lenovopress.lenovo.com/lp1613-thinksystem-sd665-n-v3-server

- ThinkSystem SR680a V3 product guide: https://lenovopress.lenovo.com/lp1909-thinksystem-sr680a-v3-server

- ThinkSystem SR685a V3 product guide: https://lenovopress.lenovo.com/lp1910-thinksystem-sr685a-v3-server

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

Neptune®

ServerProven®

ThinkAgile®

ThinkSystem®

The following terms are trademarks of other companies:

AMD is a trademark of Advanced Micro Devices, Inc.

Intel® and Xeon® are trademarks of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.


Full Change History

Changes in the December 16, 2024 update:

- Removed the vGPU and Omniverse software part numbers because they are not supported with the H100 GPUs - NVIDIA GPU software section

Changes in the August 9, 2024 update:

- The following GPU is withdrawn from marketing:

- ThinkSystem NVIDIA H100 80GB PCIe Gen5 Passive GPU, 4X67A82257

Changes in the May 2, 2024 update:

- Corrections to the number of CUDA cores and Tensor cores - Technical specifications section

Changes in the April 23, 2024 update:

- Added the following 8-GPU offering:

- ThinkSystem NVIDIA HGX H100 80GB 700W 8-GPU Board, C1HL

Changes in the November 8, 2023 update:

- Clarified that the H100 GPUs in the PCIe form factor include a 5-year subscription to the NVIDIA AI Enterprise software suite - NVIDIA GPU software section

Changes in the September 12, 2023 update:

- The NVIDIA H800 GPUs are now covered in a separate product guide, https://lenovopress.lenovo.com/lp1814

Changes in the August 29, 2023 update:

- The following servers now support the H100 PCIe adapter - Server support section

- SR665, SR650 V2, SR670 V2

- Added the power cables for the new servers - Auxiliary power cables section

Changes in the August 1, 2023 update:

- New GPU for customers in China, Hong Kong and Macau:

- ThinkSystem NVIDIA H800 80GB PCIe Gen5 Passive GPU, 4X67A86451

First published: May 5, 2023
