Updated: 12 Feb 2026
Form Number: LP1601
PDF size: 198 pages, 22.7 MB

- Introduction

- Did you know?

- Key features

- Comparing the SR650 V3 to the SR650 V2

- Components and connectors

- System architecture

- Standard specifications

- Top Choice

- Models

- Processors

- Memory options

- Persistent memory

- Internal storage

- Controllers for internal storage

- Internal drive options

- Internal backup units

- Optical drives

- I/O expansion

- Network adapters

- GPU adapters

- Fibre Channel host bus adapters

- SAS adapters for external storage

- Cooling

- Power supplies

- Systems management

- Security

- Rack installation

- Operating system support

- Physical and electrical specifications

- Operating environment

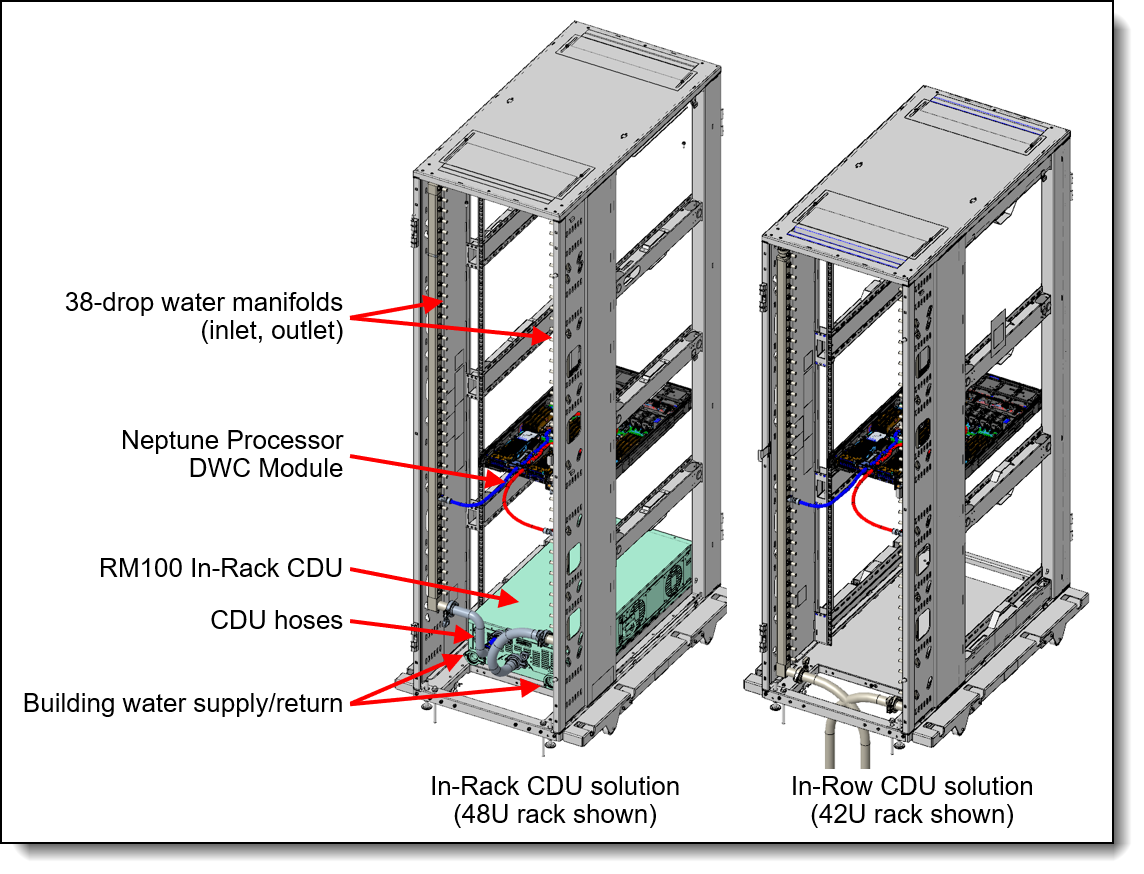

- Water infrastructure for the Lenovo Neptune Processor DWC Module

- RM100 In-Rack Coolant Distribution Unit

- Warranty upgrades and post-warranty support

- Services

- Lenovo TruScale

- Regulatory compliance

- External drive enclosures

- External storage systems

- External backup units

- Fibre Channel SAN switches

- Uninterruptible power supply units

- Power distribution units

- Rack cabinets

- KVM console options

- Lenovo Financial Services

- Seller training courses

- Related publications and links

- Related product families

- Trademarks

Abstract

The Lenovo ThinkSystem SR650 V3 is an ideal 2-socket 2U rack server for small businesses up to large enterprises that need industry-leading reliability, management, and security, as well as maximum performance and flexibility for future growth. The SR650 V3 is designed to handle a wide range of workloads, such as databases, virtualization and cloud computing, virtual desktop infrastructure (VDI), infrastructure security, systems management, enterprise applications, collaboration/email, streaming media, web, and HPC.

This product guide provides essential pre-sales information to understand the ThinkSystem SR650 V3 server, its key features and specifications, components and options, and configuration guidelines. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the SR650 V3 and consider its use in IT solutions.

Change History

Changes in the February 12, 2026 update:

- Added a new Top Choice section introducing Top Choice Express and Top Choice Stock

- Added a Top Choice Express column to all component tables in the product guide

Introduction

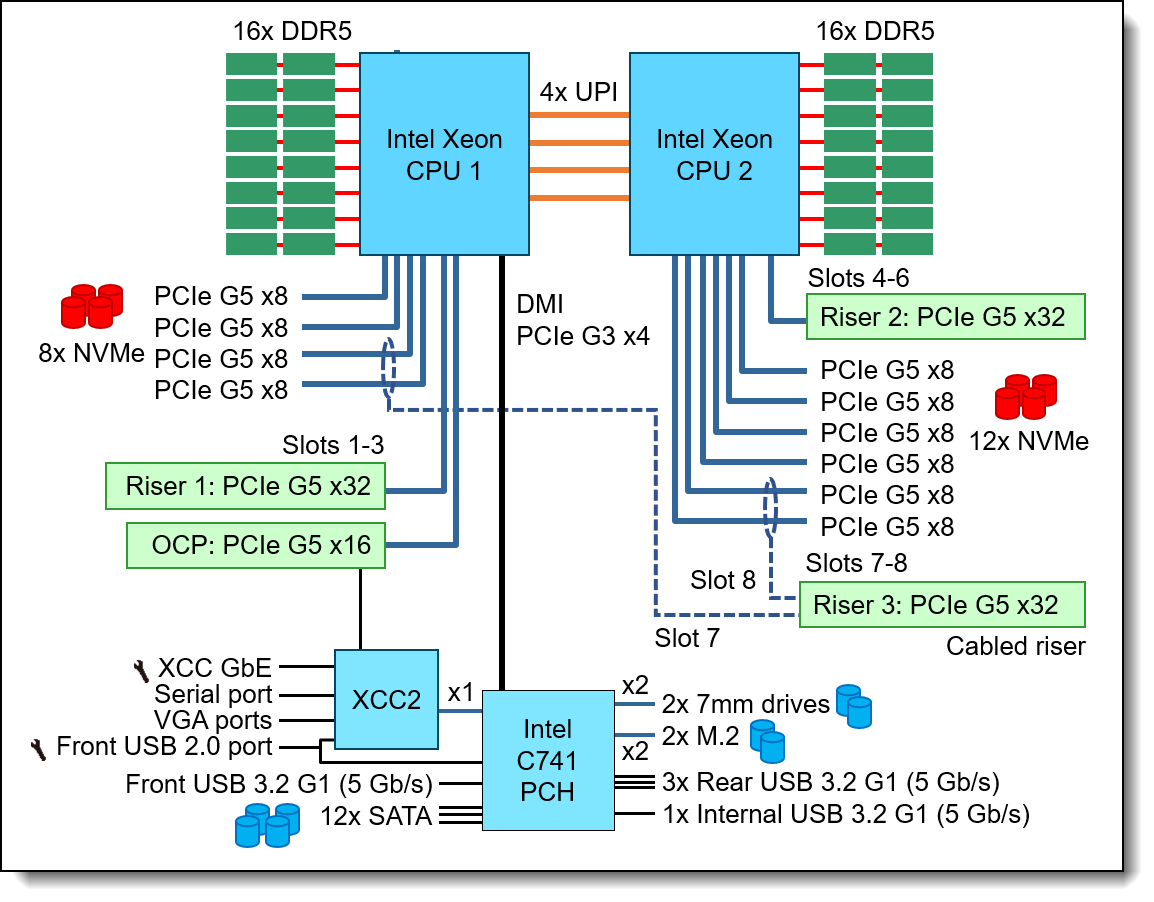

The Lenovo ThinkSystem SR650 V3 is an ideal 2-socket 2U rack server for small businesses up to large enterprises that need industry-leading reliability, management, and security, as well as maximum performance and flexibility for future growth. The SR650 V3 is based on the 5th generation Intel Xeon Scalable processor family (formerly codenamed "Emerald Rapids") and also supports 4th generation processors (formerly codenamed "Sapphire Rapids").

The SR650 V3 is designed to handle a wide range of workloads, such as databases, virtualization and cloud computing, virtual desktop infrastructure (VDI), infrastructure security, systems management, enterprise applications, collaboration/email, streaming media, web, and HPC.

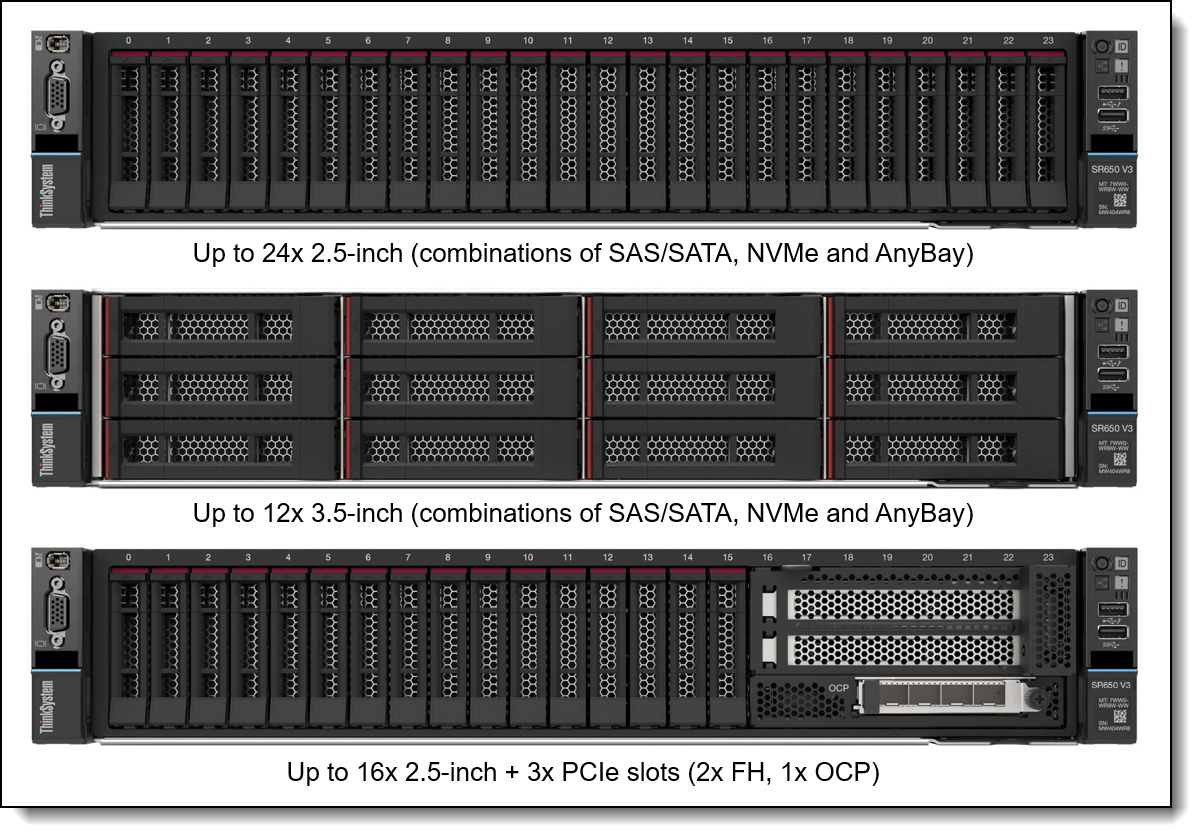

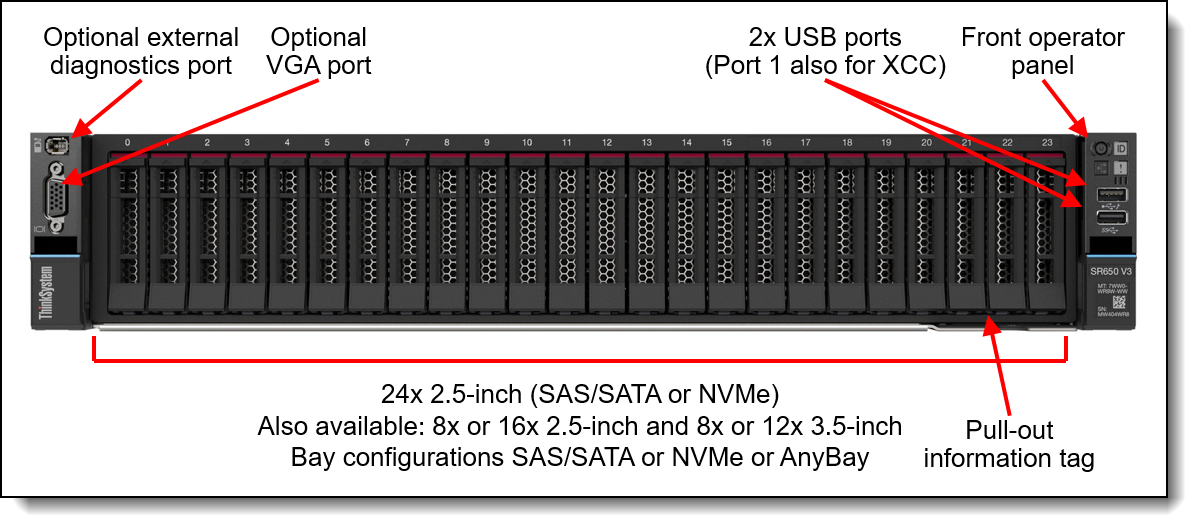

Figure 1. Lenovo ThinkSystem SR650 V3 with 2.5-inch front drive bays (3.5-inch drive configurations also available)

Did you know?

The SR650 V3 server has been designed to take advantage of the features of the 5th generation Intel Xeon Scalable processors, such as the full performance of 385 W 64-core processors, support for 5600 MHz memory, and PCIe Gen 5.0 support. The SR650 V3 is a very configuration-rich offering, supporting more than 30 different drive bay configurations in the front, middle and rear of the server and 5 different slot configurations at the rear of the server. This level of flexibility ensures that you can configure the server to meet the needs of your workload.

Key features

Combining performance and flexibility, the SR650 V3 server is a great choice for enterprises of all sizes. The server offers a broad selection of drive and slot configurations and offers numerous high performance features. Outstanding reliability, availability, and serviceability (RAS) and high-efficiency design can improve your business environment and can help save operational costs.

Scalability and performance

The SR650 V3 offers numerous features to boost performance, improve scalability and reduce costs:

- Supports one or two 5th Gen Intel Xeon Scalable processors:
  - Up to 64 cores and 128 threads
  - Core speeds of up to 3.9 GHz
  - TDP ratings of up to 385 W

- Supports one or two 4th Gen Intel Xeon Scalable processors:
  - Up to 60 cores and 120 threads
  - Core speeds of up to 3.7 GHz
  - TDP ratings of up to 350 W

- Support for DDR5 memory DIMMs to maximize the performance of the memory subsystem:
  - Up to 32 DDR5 memory DIMMs, 16 DIMMs per processor
  - 8 memory channels per processor (2 DIMMs per channel)
  - Supports 1 DIMM per channel operating at 5600 MHz (5th Gen processors) or 4800 MHz (4th Gen processors)
  - Supports 2 DIMMs per channel operating at 4800 MHz (5th Gen processors) or 4400 MHz (4th Gen processors)
  - Using 256GB 3DS RDIMMs, the server supports up to 8TB of system memory

- Supports the new Intel Optane Persistent Memory 300 Series for advanced in-memory database applications and dense virtualization; up to 16 PMem modules can be installed in conjunction with regular system memory.

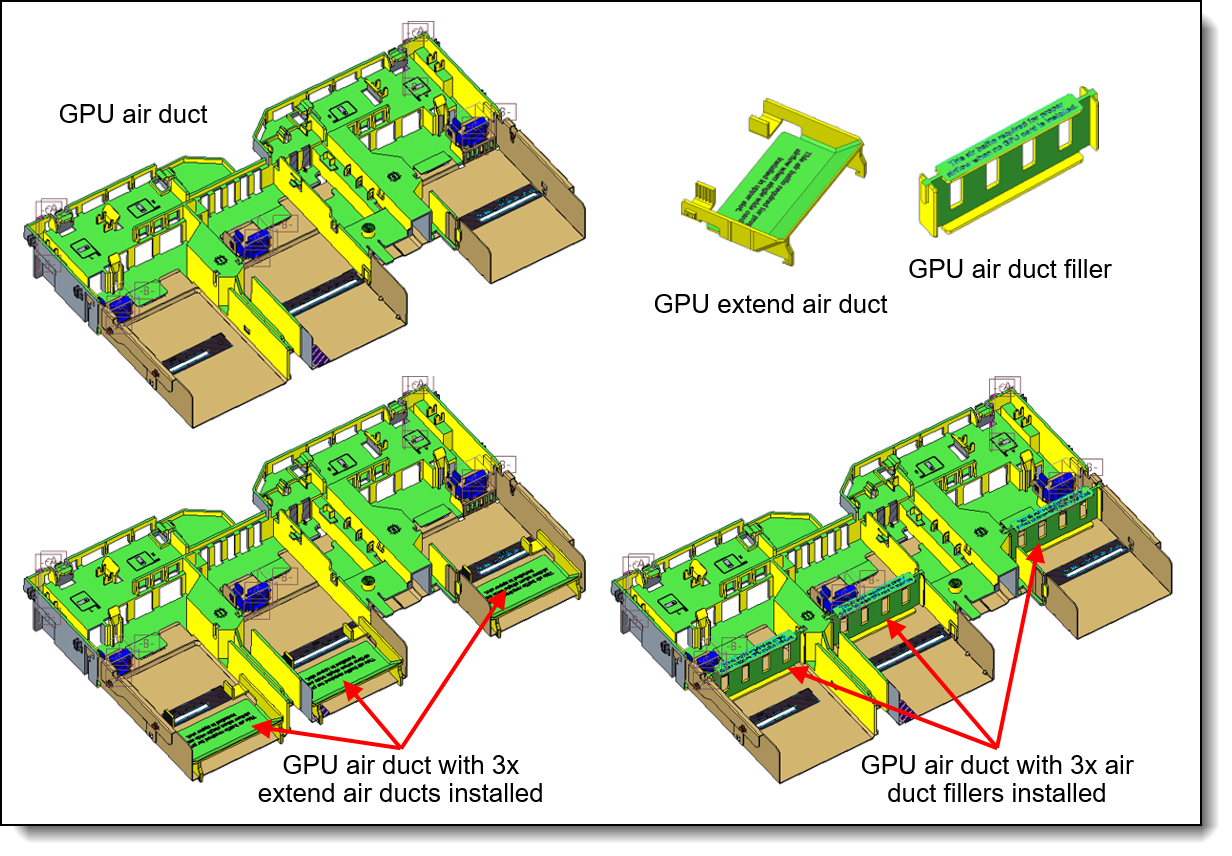

- Supports up to eight single-wide GPUs or three double-wide GPUs, for substantial processing power in a 2U system.

- The server is Compute Express Link (CXL) v1.1 Ready. With CXL 1.1 for next-generation workloads, you can reduce compute latency in the data center and lower TCO. CXL is a protocol that runs across the standard PCIe physical layer and can support both standard PCIe devices and CXL devices on the same link.

- Supports up to 40x 2.5-inch drive bays, by using combinations of front-accessible (up to 24 bays), mid bays (8 bays) and rear-accessible (8 bays).

- Supports 20x 3.5-inch drive bays for lower-cost high-capacity HDD storage. 2.5-inch and 3.5-inch drive bays can be mixed if desired.

- Supports 36x NVMe drives without oversubscription of PCIe lanes (1:1 connectivity). The use of NVMe drives maximizes drive I/O performance, in terms of throughput and latency.

- Supports 14x SATA drives using the onboard SATA controller (no additional adapter needed), enabling a lower-cost, high-capacity storage solution for cold or archival storage workloads.

- Supports high-speed RAID controllers from Broadcom providing 12 Gb SAS connectivity to the drive backplanes. A variety of PCIe 3.0 and PCIe 4.0 RAID adapters are available.

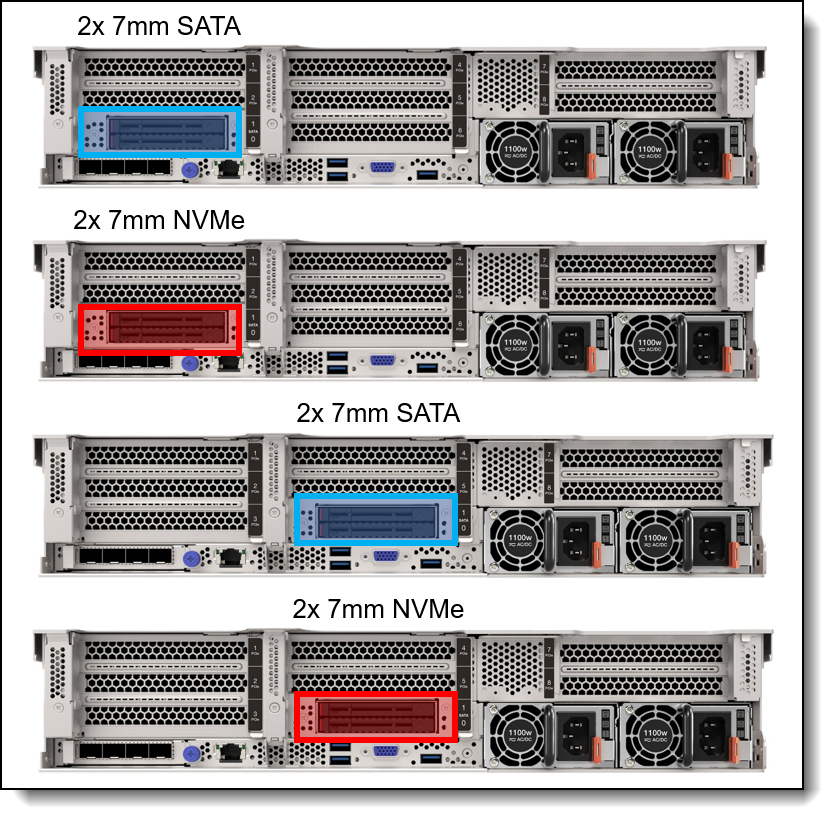

- Supports up to two externally accessible 7mm hot-swap drives for operating system boot functions. Optional RAID-0 or RAID-1.

- Supports M.2 drives for convenient operating system boot functions. Available M.2 adapters support either one M.2 drive or two M.2 drives. Optional RAID-0 or RAID-1.

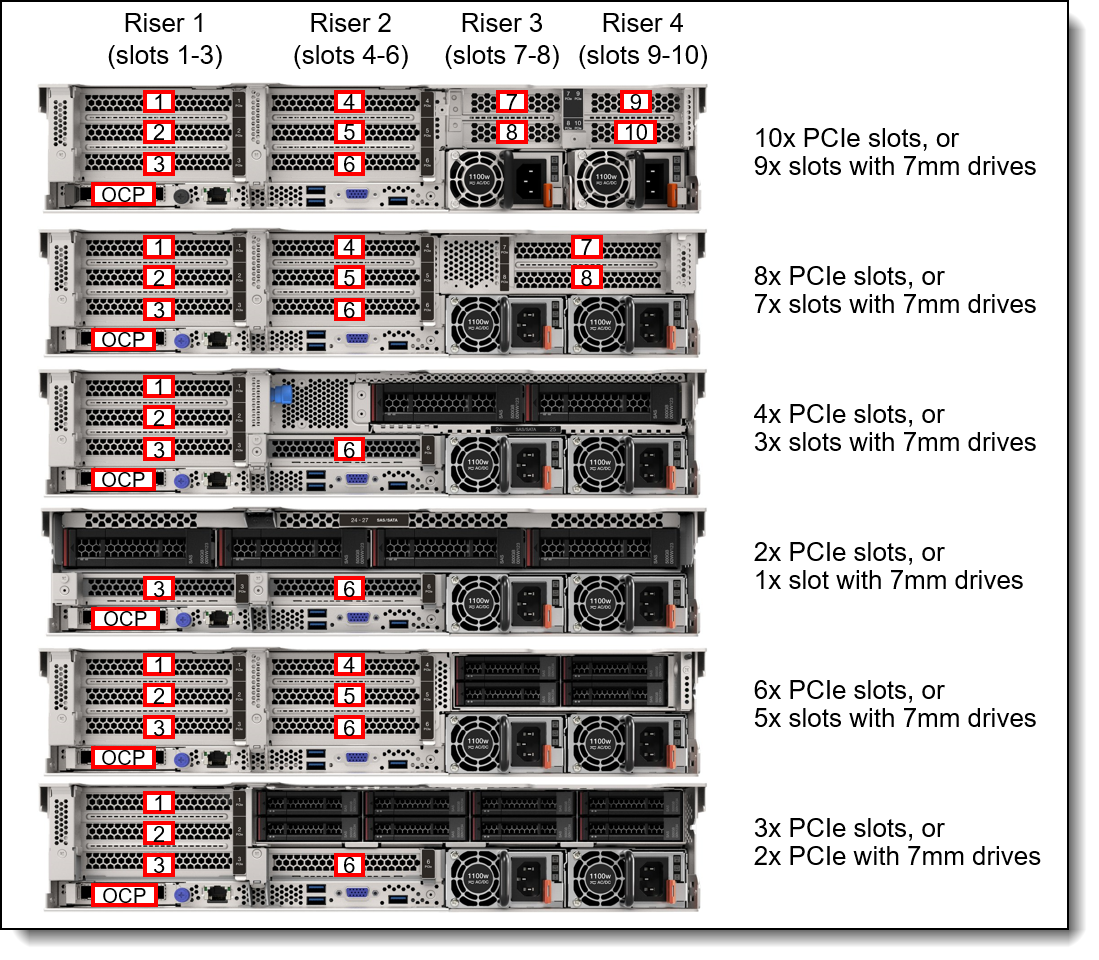

- Up to 12x PCIe slots (10x rear, 2x front), plus a slot dedicated to an OCP 3.0 adapter. 2.5-inch drive configurations also support an additional internal bay for a cabled RAID adapter or HBA.

- The server has a dedicated industry-standard OCP 3.0 small form factor (SFF) slot, supporting a variety of Ethernet network adapters. A simple-swap mechanism with a thumbscrew and pull-tab enables tool-less installation and removal of the adapter. The adapter supports shared BMC network sideband connectivity to enable out-of-band systems management.

- The server offers PCI Express 5.0 I/O expansion capabilities that double the theoretical maximum bandwidth of PCIe 4.0 (32 GT/s per lane in each direction for PCIe Gen 5, compared to 16 GT/s with PCIe Gen 4 and 8 GT/s with PCIe Gen 3). A PCIe 5.0 x16 slot provides 128 GB/s of total bidirectional bandwidth (64 GB/s in each direction, before encoding overhead), enough to support a dual-port 200GbE network connection.
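The bandwidth figures in the last bullet follow from the per-lane transfer rate. As a rough sketch (not a Lenovo or PCI-SIG tool), the Python below estimates the per-direction bandwidth of an x16 slot. It assumes the 128b/130b line encoding used by PCIe 3.0 through 5.0 and ignores packet-level protocol overhead. The function names are hypothetical.

```python
# Sketch: approximate PCIe slot bandwidth. Assumes 128b/130b line encoding
# (PCIe 3.0-5.0) and ignores TLP/DLLP protocol overhead, so real throughput
# is somewhat lower than these figures.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}  # per lane, per direction
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth in GB/s in ONE direction."""
    gbit_s = TRANSFER_RATE_GT_S[gen] * lanes * ENCODING_EFFICIENCY
    return gbit_s / 8  # 8 bits per byte


def fits_network_load(gen: int, lanes: int, ports: int, gbe_per_port: int) -> bool:
    """True if one direction of the slot covers all ports at full line rate."""
    needed_gb_s = ports * gbe_per_port / 8
    return pcie_bandwidth_gb_s(gen, lanes) >= needed_gb_s


if __name__ == "__main__":
    for gen in (3, 4, 5):
        one_way = pcie_bandwidth_gb_s(gen, 16)
        print(f"PCIe {gen}.0 x16: ~{one_way:.1f} GB/s per direction, "
              f"~{2 * one_way:.1f} GB/s both directions")
    print("Dual-port 200GbE on PCIe 5.0 x16:", fits_network_load(5, 16, 2, 200))
    print("Dual-port 200GbE on PCIe 4.0 x16:", fits_network_load(4, 16, 2, 200))
```

Under these assumptions, a PCIe 5.0 x16 slot comes out at roughly 63 GB/s per direction. That is comfortably above the 50 GB/s that two 200GbE ports need at line rate. A PCIe 4.0 x16 slot, at about 31.5 GB/s, falls short.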

Availability and serviceability

The SR650 V3 provides many features to simplify serviceability and increase system uptime:

- Designed to run 24 hours a day, 7 days a week

- The server offers Single Device Data Correction (SDDC, also known as Chipkill), Adaptive Double-Device Data Correction (ADDDC, also known as Redundant Bit Steering or RBS), and memory mirroring for redundancy in the event of a non-correctable memory failure. Note: ADDDC is not supported with 9x4 RDIMMs.

- The server offers hot-swap drives, supporting RAID redundancy for data protection and greater system uptime.

- Available M.2 adapters support RAID-1 (using Intel VROC) which can enable two SATA or two NVMe M.2 drives to be configured as a redundant pair.

- The server has up to two hot-swap redundant power supplies and up to six hot-swap redundant fans to provide availability for business-critical applications.

- Optional front-accessible slots and drives so that most major components and cables (except power) are located at the front of the server

- The light path diagnostics feature uses LEDs to lead the technician to failed (or failing) components, which simplifies servicing, speeds up problem resolution, and helps improve system availability.

- Solid-state drives (SSDs) offer more reliability and performance than traditional mechanical HDDs for greater uptime.

- Proactive Platform Alerts (including PFA and SMART alerts): Processors, voltage regulators, memory, internal storage (SAS/SATA HDDs and SSDs, NVMe SSDs, M.2 storage), fans, power supplies, RAID controllers, server ambient and subcomponent temperatures. Alerts can be surfaced through the XClarity Controller to managers such as Lenovo XClarity Administrator, VMware vCenter, and Microsoft System Center. These proactive alerts let you take appropriate actions in advance of possible failure, thereby increasing server uptime and application availability.

- The built-in XClarity Controller continuously monitors system parameters, triggers alerts, and performs recovery actions in case of failures to minimize downtime.

- Built-in diagnostics in UEFI, using Lenovo XClarity Provisioning Manager, speed up troubleshooting tasks to reduce service time.

- Lenovo XClarity Provisioning Manager supports diagnostics and can save service data to a USB key drive or remote CIFS share folder for troubleshooting, reducing service time.

- Auto restart in the event of a momentary loss of AC power (based on power policy setting in the XClarity Controller service processor)

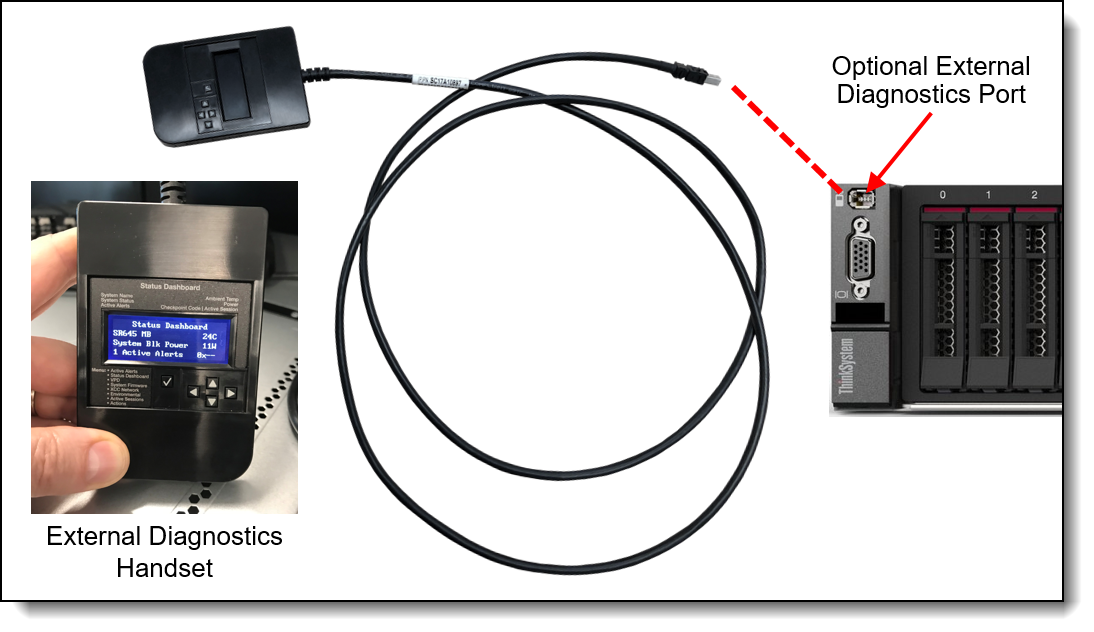

- Offers a diagnostics port on the front of the server to allow you to attach an external diagnostics handset for enhanced systems management capabilities.

- The XClarity Administrator Mobile app, running on a supported smartphone or tablet connected to the server through the service-enabled USB port, enables additional local systems management functions.

- Three-year or one-year customer-replaceable unit and onsite limited warranty (varies by geography), 9 x 5 next business day. Optional service upgrades are available.

Manageability and security

Systems management features simplify local and remote management of the SR650 V3:

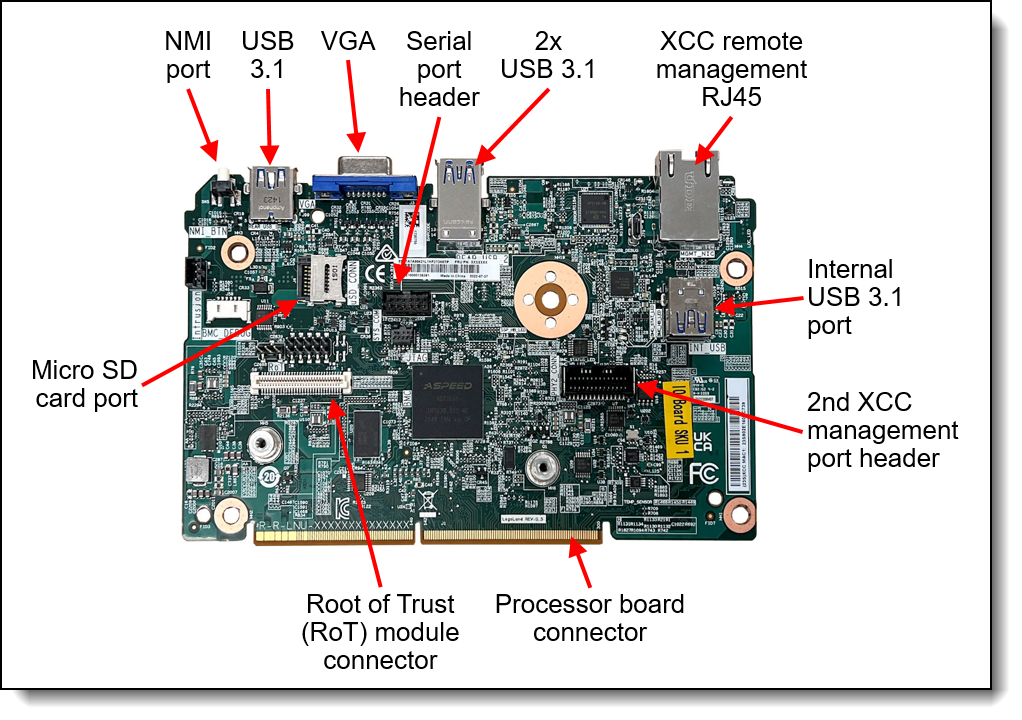

- The server includes XClarity Controller 2 (XCC2) to monitor server availability. Optional upgrade to XCC Platinum to provide remote control (keyboard video mouse) functions, support for the mounting of remote media files (ISO and IMG image files), boot capture, power capping and new XCC2 Platinum features. New XCC2 Platinum features include System Guard, new security modes including a CNSA-compliant mode, a FIPS 140-3-compliant mode and enhanced NIST 800-193 support, and a new Neighbor Group feature.

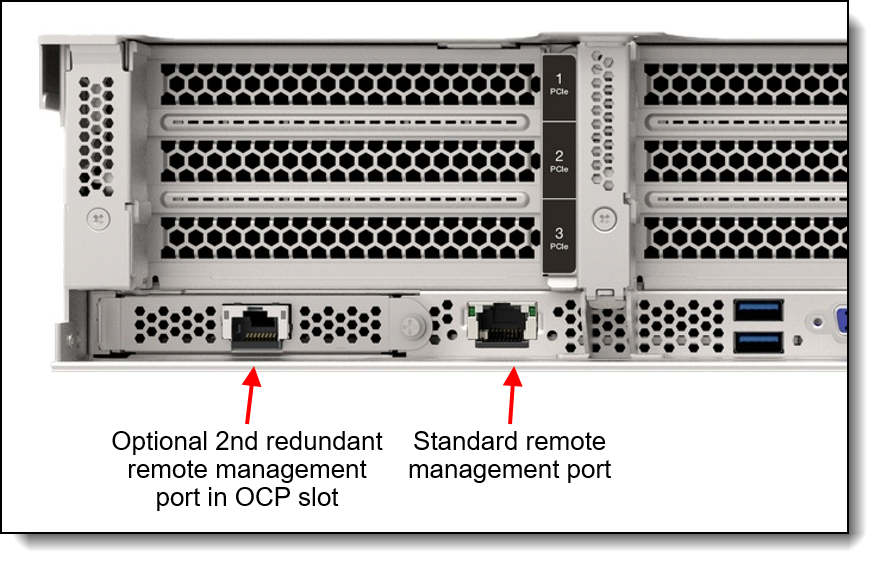

- Dedicated Ethernet port at the rear of the server for remote management (BMC management).

- Lenovo XClarity Administrator offers comprehensive hardware management tools that help to increase uptime, reduce costs and improve productivity through advanced server management capabilities.

- UEFI-based Lenovo XClarity Provisioning Manager, accessible from F1 during boot, provides system inventory information, graphical UEFI Setup, platform update function, RAID Setup wizard, operating system installation function, and diagnostic functions.

- Support for Lenovo XClarity Energy Manager, which captures real-time power and temperature data from the server and provides automated controls to lower energy costs.

- An integrated industry-standard Unified Extensible Firmware Interface (UEFI) enables improved setup, configuration, and updates, and simplifies error handling.

- Support for industry-standard management protocols: IPMI 2.0, SNMP 3.0, Redfish REST API, and serial console via IPMI

- An integrated hardware Trusted Platform Module (TPM) supporting TPM 2.0 enables advanced cryptographic functionality, such as digital signatures and remote attestation.

- Administrator and power-on passwords help protect from unauthorized access to the server.

- Supports Secure Boot to ensure only a digitally signed operating system can be used. Supported with HDDs and SSDs, as well as 7mm and M.2 drives.

- Industry-standard Advanced Encryption Standard (AES) NI support for faster, stronger encryption.

- Intel Execute Disable Bit functionality can prevent certain classes of malicious buffer overflow attacks when combined with a supported operating system.

- Intel Trusted Execution Technology provides enhanced security through hardware-based resistance to malicious software attacks, allowing an application to run in its own isolated space, protected from all other software running on a system.

- Additional physical security features include an optional chassis intrusion switch and an optional lockable front bezel.
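Because the XCC2 supports the DMTF Redfish REST API, you can read inventory and health data with any HTTPS client. The following Python sketch uses only the standard library. The `/redfish/v1/Systems` path and the `PowerState`, `Status`, `ProcessorSummary`, and `MemorySummary` properties come from the DMTF Redfish schema. The helper names, the use of basic authentication, and the option to skip certificate checks are assumptions to adapt to your environment. Which properties a given firmware level populates may also vary.

```python
import base64
import json
import ssl
import urllib.request

# Standard DMTF Redfish collection of computer systems.
SYSTEMS_PATH = "/redfish/v1/Systems"


def redfish_get(host: str, path: str, user: str, password: str,
                verify_tls: bool = True) -> dict:
    """GET a Redfish resource over HTTPS using HTTP basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    ctx = ssl.create_default_context()
    if not verify_tls:  # assumption: lab BMC with a self-signed certificate
        ctx.check_hostname = False  # must be disabled before CERT_NONE
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=30) as resp:
        return json.load(resp)


def member_paths(collection: dict) -> list[str]:
    """Extract member URIs from a Redfish collection resource."""
    return [m["@odata.id"] for m in collection.get("Members", [])]


def summarize_system(system: dict) -> dict:
    """Pick a few standard ComputerSystem properties; missing ones become None."""
    return {
        "model": system.get("Model"),
        "power_state": system.get("PowerState"),
        "health": system.get("Status", {}).get("Health"),
        "cpus": system.get("ProcessorSummary", {}).get("Count"),
        "memory_gib": system.get("MemorySummary", {}).get("TotalSystemMemoryGiB"),
    }
```

For example, `member_paths(redfish_get(host, SYSTEMS_PATH, user, password))` returns the system URIs. Passing each URI back to `redfish_get` and then to `summarize_system` yields a compact status record. Disabling certificate verification is intended only for lab use with self-signed certificates.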

Energy efficiency

The SR650 V3 offers the following energy-efficiency features to save energy, reduce operational costs, and increase energy availability:

- The server supports an advanced direct-water cooling (DWC) capability with the Lenovo Neptune Processor DWC Module, where heat from the processors is removed from the rack and the data center using an open loop and coolant distribution units, resulting in lower energy costs

- Energy-efficient system board components help lower operational costs.

- High-efficiency power supplies with 80 PLUS Platinum and Titanium certifications

- Solid-state drives (SSDs) consume as much as 80% less power than traditional spinning 2.5-inch HDDs.

- The server uses hexagonal ventilation holes, which can be grouped more densely than round holes, providing more efficient airflow through the system and thus keeping your system cooler.

- Optional Lenovo XClarity Energy Manager provides advanced data center power notification, analysis, and policy-based management to help achieve lower heat output and reduced cooling needs.

Comparing the SR650 V3 to the SR650 V2

The ThinkSystem SR650 V3 improves on the previous generation SR650 V2, as summarized in the following table.

Components and connectors

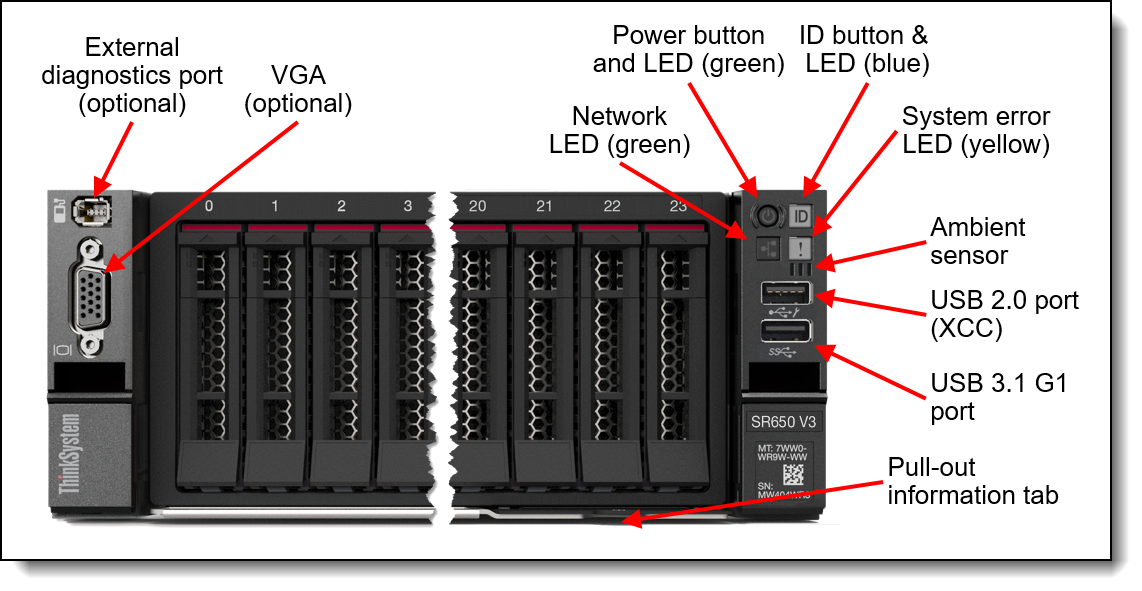

The following figure shows the front of the SR650 V3.

Figure 2. Front view of the ThinkSystem SR650 V3 with 2.5-inch drive bays

For details on the front ports, including the optional front VGA port and optional external diagnostic port, see the Local management section.

The following figure shows the front configurations of the SR650 V3. The server supports either 2.5-inch hot-swap drives (8, 16 or 24 drive bays) or 3.5-inch hot-swap drives (8 or 12 bays) at the front. In configurations with up to 16x 2.5-inch drive bays, the server also supports three front slots (2 full-height PCIe slots and 1 OCP slot).

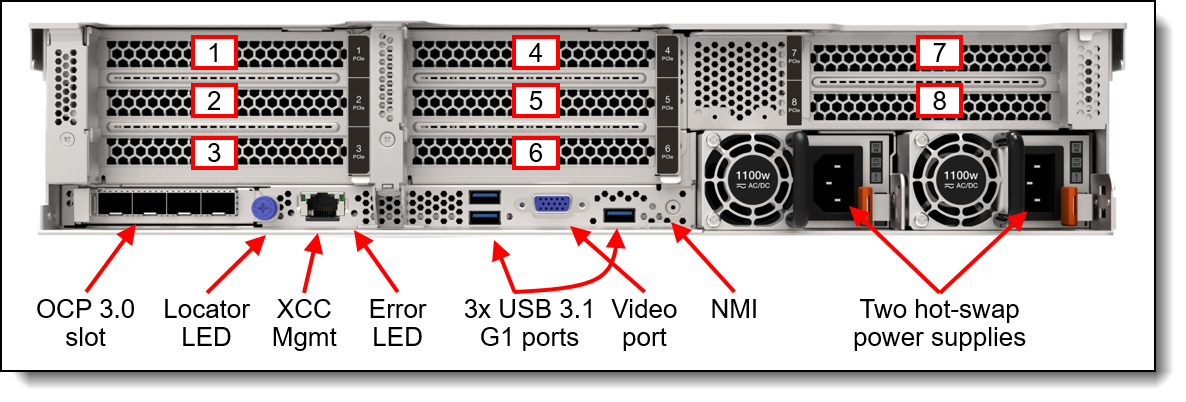

The following figure shows the components visible from the rear of the server. The figure shows one configuration, with eight PCIe slots. Additional rear configurations include 3.5-inch drive bays, 2.5-inch drive bays, or 7mm drive bays.

Figure 4. Rear view of the ThinkSystem SR650 V3 (configuration with eight PCIe slots)

Standard specifications

The following table lists the standard specifications.

| Components | Specification |

|---|---|

| Machine types | 7D75 - 1 year warranty 7D76 - 3 year warranty |

| Form factor | 2U rack |

| Processor | One or two 5th Gen Intel Xeon Scalable processors (formerly codenamed "Emerald Rapids"), or one or two 4th Gen Intel Xeon Scalable processors (formerly codenamed "Sapphire Rapids"). Supports processors up to 64 cores, core speeds of up to 3.9 GHz, and TDP ratings of up to 385 W. |

| Chipset | Intel C741 "Emmitsburg" chipset, part of the platform codenamed "Eagle Stream" |

| Memory | 32 DIMM slots with two processors (16 DIMM slots per processor). Each processor has 8 memory channels, with 2 DIMMs per channel (DPC). Lenovo TruDDR5 RDIMMs, 9x4 RDIMMs, and 3DS RDIMMs are supported. DIMMs operate at up to 5600 MHz at 1 DPC and up to 4800 MHz at 2 DPC. |

| Persistent memory | No support. Supports up to 16x Intel Optane Persistent Memory 300 Series modules (8 per processor) installed in the DIMM slots. Persistent memory (Pmem) is installed in combination with system memory DIMMs. |

| Memory maximum | With RDIMMs: Up to 8TB by using 32x 256GB 3DS RDIMMs With Persistent Memory: Up to 12TB by using 16x 256GB 3DS RDIMMs and 16x 512GB Pmem modules |

| Memory protection | ECC, SDDC (for x4-based memory DIMMs), ADDDC (for x4-based memory DIMMs excluding 9x4 RDIMMs, requires Platinum or Gold processors), and memory mirroring. |

| Disk drive bays |

Up to 20x 3.5-inch or 40x 2.5-inch hot-swap drive bays:

The server also supports these drives for OS boot or drive storage:

See Supported drive bay combinations for details. |

| Maximum internal storage |

|

| Storage controller |

|

| Optical drive bays | No internal optical drive |

| Tape drive bays | No internal backup drive |

| Network interfaces | Dedicated OCP 3.0 SFF slot with PCIe 5.0 x16 host interface. Supports a variety of 2-port and 4-port adapters with 1, 10, 25 or 100 GbE network connectivity. One port can optionally be shared with the XClarity Controller 2 (XCC2) management processor for Wake-on-LAN and NC-SI support. |

| PCIe slots |

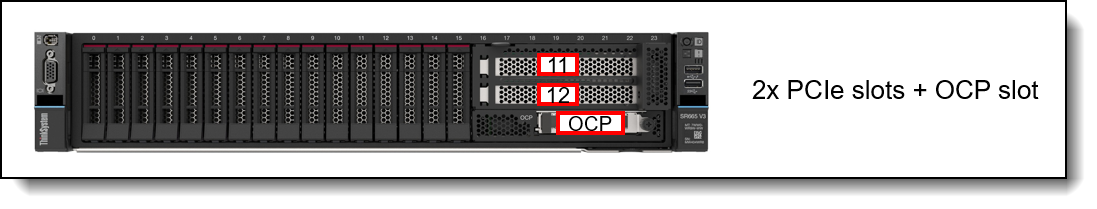

Up to 12x PCIe slots (10x rear, 2x front), plus a slot dedicated to an OCP 3.0 adapter. 2.5-inch drive configurations also support an additional internal bay for a cabled RAID adapter or HBA. Rear: Up to 10x PCIe slots, plus a slot dedicated to the OCP adapter. Slot are either PCIe 5.0 or 4.0 depending on riser selection and rear drive bay selection. The use of some slots requires two processors. Slots are configured using three riser cards. Riser 1 (slots 1-3) and Riser 2 (slots 4-6) are installed in slots in the system board, Riser 3 (slots 7-8) and Riser 4 (9-10) are cabled to ports on the system board. A variety of riser cards are available. See the I/O expansion for details. Front: The server also supports slots at the front of the server (configurations with up to 16 drive bays): 2x PCIe x16 full-height half-length slots, plus 1x OCP 3.0 slot Internal: For 2.5-inch front drive configurations, the server supports the installation of a RAID adapter or HBA in a dedicated area that does not consume any of the PCIe slots. |

| GPU support | Supports up to 8x single-wide GPUs or up to 3x double-wide GPUs |

| Ports |

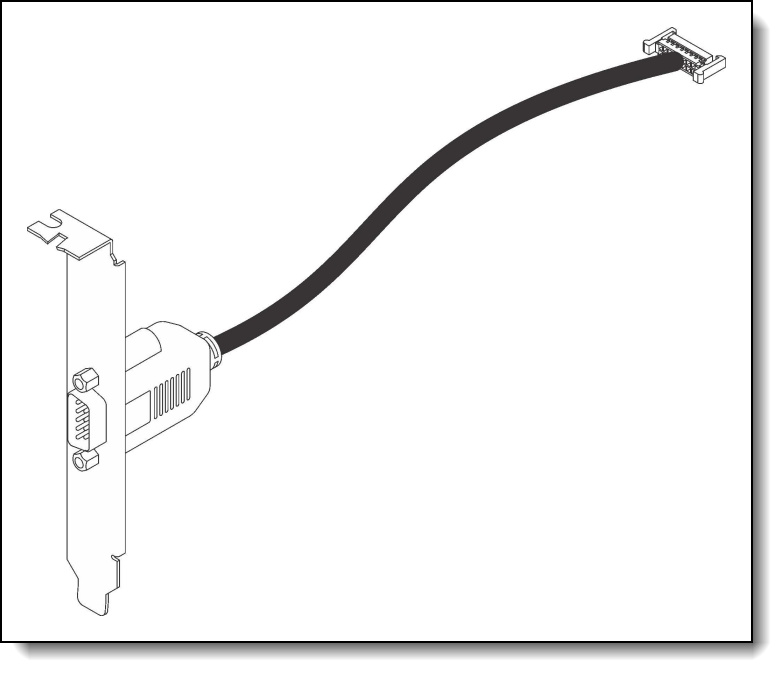

Front: 1x USB 3.2 G1 (5 Gb/s) port, 1x USB 2.0 port (also for XCC local management), External diagnostics port, optional VGA port. Rear: 3x USB 3.2 G1 (5 Gb/s) ports, 1x VGA video port, 1x RJ-45 1GbE systems management port for XCC remote management. Optional DB-9 COM serial port (installs in a PCIe slot). Internal: 1x USB 3.2 G1 (5 Gb/s) connector for operating system or license key purposes |

| Cooling | 6x (with two processors installed) or 5x (with one processor installed) single-rotor or dual-rotor hot swap 60 mm fans, configuration dependent. Fans are N+1 redundant, tolerating a single-rotor failure. One fan integrated in each power supply. For customers with water infrastructure in their data center, the SR650 V3 also supports open-loop water cooling for efficient heat removal. |

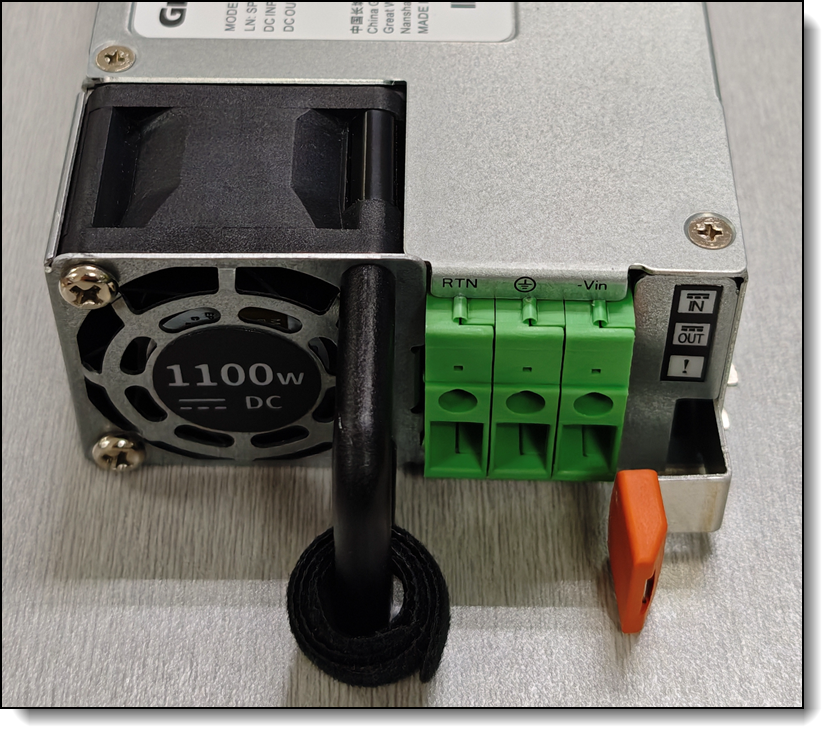

| Power supply | Up to two hot-swap redundant AC power supplies, 80 PLUS Platinum or 80 PLUS Titanium certification. 750 W, 1100 W, 1800 W, 2400 W and 2600W AC options, supporting 220 V AC. 750 W and 1100 W options also support 110V input supply. In China only, all power supply options support 240 V DC. Also available is a 1100W power supply with a -48V DC input. |

| Video | Embedded graphics with 16 MB memory with 2D hardware accelerator, integrated into the XClarity Controller 2 management controller. Maximum resolution is 1920x1200 32bpp at 60Hz. |

| Hot-swap parts | Drives, power supplies, and fans. |

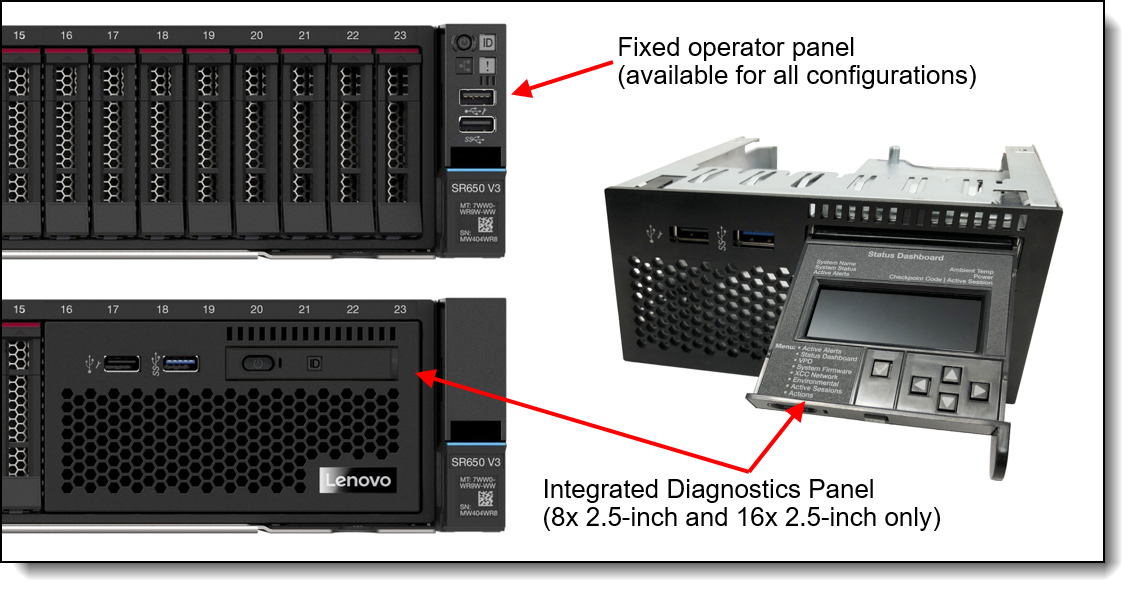

| Systems management | Operator panel with status LEDs. Optional External Diagnostics Handset with LCD display. Models with 8x or 16x 2.5-inch front drive bays can optionally support an Integrated Diagnostics Panel. XClarity Controller 2 (XCC2) embedded management controller, XClarity Administrator centralized infrastructure delivery, XClarity Integrator plugins, and XClarity Energy Manager centralized server power management. Optional XClarity Controller Platinum to enable remote control and other functions. |

| Security features | Chassis intrusion switch, Power-on password, administrator's password, Root of Trust module supporting TPM 2.0 and Platform Firmware Resiliency (PFR). Optional lockable front security bezel. |

| Operating systems supported | Microsoft Windows Server, Microsoft Windows 10 & 11, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, VMware ESXi, Ubuntu Server. See the Operating system support section for specifics. |

| Limited warranty | Three-year or one-year (model dependent) customer-replaceable unit and onsite limited warranty with 9x5 next business day (NBD). |

| Service and support | Optional service upgrades are available through Lenovo Services: 4-hour or 2-hour response time, 6-hour fix time, 1-year or 2-year warranty extension, software support for Lenovo hardware and some third-party applications. |

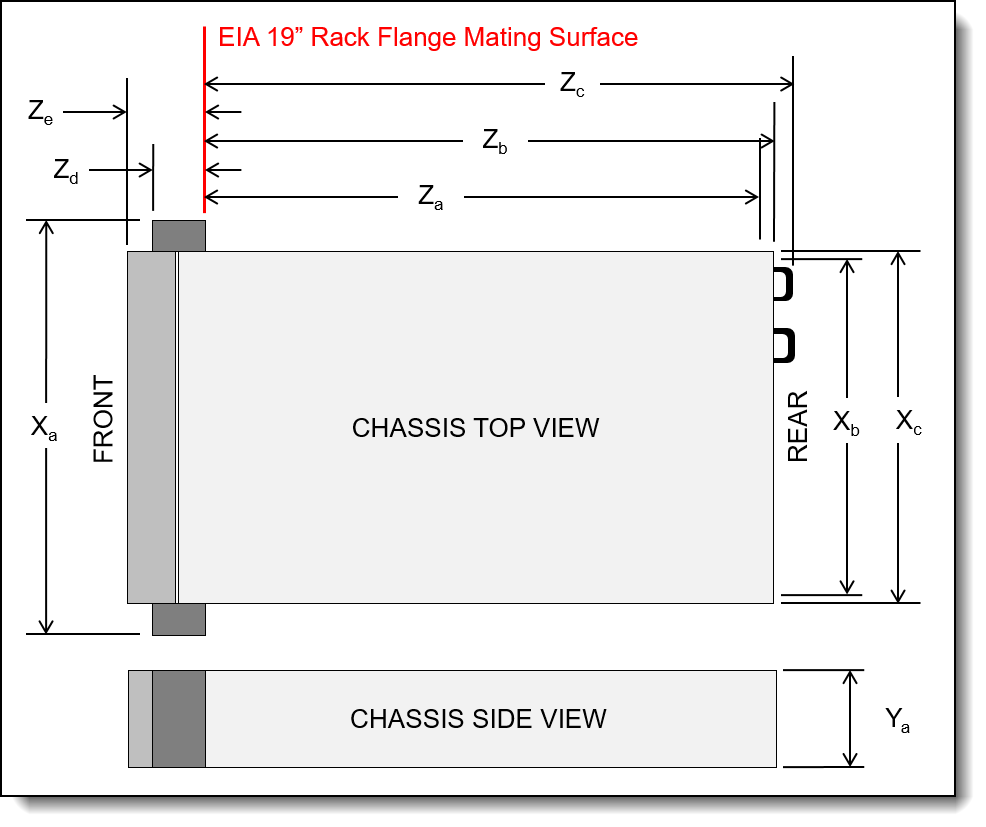

| Dimensions | Width: 445 mm (17.5 in.), height: 87 mm (3.4 in.), depth: 766 mm (30.1 in.). See Physical and electrical specifications for details. |

| Weight | Maximum: 38.8 kg (85.5 lb) |
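The memory maximums in the table follow from the slot count and module sizes. This Python sketch reproduces that arithmetic. It assumes 1 TB = 1024 GB, which is how the table's 8TB and 12TB totals work out. The helper names are hypothetical.

```python
# Sketch: DIMM slot count and maximum capacity for the memory layout in the
# table (8 channels per processor, 2 DIMMs per channel).
CHANNELS_PER_CPU = 8
DIMMS_PER_CHANNEL = 2
GB_PER_TB = 1024  # binary-style units, matching 32 x 256GB = 8TB


def dimm_slots(processors: int) -> int:
    """Total DIMM slots available with the given number of processors."""
    return processors * CHANNELS_PER_CPU * DIMMS_PER_CHANNEL


def capacity_tb(groups: list[tuple[int, int]]) -> float:
    """Sum (module_count, module_size_gb) groups and convert to TB."""
    return sum(count * size_gb for count, size_gb in groups) / GB_PER_TB


def fits_in_slots(processors: int, groups: list[tuple[int, int]]) -> bool:
    """True if all modules (DIMMs plus any PMem) fit in the available slots."""
    return sum(count for count, _ in groups) <= dimm_slots(processors)


if __name__ == "__main__":
    rdimm_only = [(32, 256)]            # 32x 256GB 3DS RDIMMs
    with_pmem = [(16, 256), (16, 512)]  # 3DS RDIMMs plus PMem modules
    for name, cfg in (("RDIMM only", rdimm_only), ("RDIMM + PMem", with_pmem)):
        print(name, fits_in_slots(2, cfg), capacity_tb(cfg), "TB")
```

Both configurations use all 32 slots of a two-processor system. A single-processor system has only 16 slots, which halves the achievable maximum.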

Top Choice

Lenovo offers two “quick-ship” programs to make it easy for our customers to get quick delivery of our offerings:

- Top Choice Express (TCE): A Configure-to-Order (CTO) model that uses a "best of" subset of components. It allows for customization while maintaining significantly faster lead times than standard CTO.

Top Choice Express (TCE) is the way to get custom configurations as quickly as possible. It allows DCSC configurator users to configure systems in CTO Mode, selecting the required hardware components while still benefiting from faster turnaround than traditional CTO. These configurations are built and fully integrated by Lenovo Manufacturing, with simple configuration steps, instant pricing, and quicker delivery.

To see which components are TCE, check the TCE column in the various component tables in this product guide. Green cells mean that the component is enabled for ordering with Top Choice Express in all regions worldwide. Orange cells with the symbol mean that the component is enabled for Top Choice Express in only some regions. Mouse over or tap on the cell to see which regions have it enabled for TCE.

Note: Top Choice Express is for configure-to-order builds only, not for standalone option part numbers.

- Top Choice Stock (TCS): Pre-built, fixed-configuration units held in distribution. They are the "Ready-to-Ship" inventory options for customers who need a server quickly.

Top Choice Stock (TCS) is used in Preconfigured Mode, starting from a standard pre‑built configuration and adding option components as needed. Systems are assembled and fulfilled by Lenovo partners, enabling quick order processing and fast shipment through distribution. This path supports rapid delivery while maintaining flexibility through add‑on options. For details of TCS models, see the Preconfigured Models section.

Note: Ship dates are estimates only; actual ship times may vary based on platform and component availability, order processing, logistics, and external factors beyond Lenovo’s control. Lenovo is not liable for delays.

Models

ThinkSystem SR650 V3 models can be configured by using the Lenovo Data Center Solution Configurator (DCSC).

Topics in this section:

- CTO models

- CTO models for Windows 10 and Windows 11

- CTO models for NVIDIA Bluefield-2 SmartNIC DPUs

- Base feature codes

- Preconfigured models

CTO models

Configure-to-order (CTO) models are used to create models with factory-integrated server customizations. For CTO models, two types of base CTO models are available for the SR650 V3 as listed in the columns in the following table:

- General purpose base CTO models are for general business (non-HPC) workloads and are selectable by choosing General Purpose mode in DCSC.

- AI and HPC base models are intended for Artificial Intelligence (AI) and High Performance Computing (HPC) configurations and solutions, and are enabled by using the AI & HPC Hardware - ThinkSystem Hardware mode in DCSC. These configurations, along with Lenovo EveryScale Solutions, can also be built using System x and Cluster Solutions Configurator (x-config). Tip: Some HPC and AI models are not listed in DCSC and can only be configured in x-config.

"For AI" models: The base CTO models listed in the table with "for AI" in the names are the only base models that support high-performance GPUs and accelerators (Controlled GPUs). These models are classified under US Government ECCN regulations and have limited market and customer availability. All other base models do not support high-performance GPUs.

Preconfigured server models may also be available for the SR650 V3; however, these are region-specific: each region may define its own server models, and not all server models are available in every region.

The following table lists the base CTO models of the ThinkSystem SR650 V3 server.

CTO models for Windows 10 and Windows 11

The SR650 V3 can run Windows 10 and Windows 11; however, only a subset of adapters and drives can be installed. For ease of configuration, the following base CTO models have been announced to help build a configuration that can be used with these client operating systems. For more information, see the Windows 10 and Windows 11 section.

CTO models for NVIDIA Bluefield-2 SmartNIC DPUs

The SR650 V3 supports the new VMware vSphere Distributed Services Engine (DSE) using the NVIDIA BlueField-2 SmartNIC Data Processing Unit (DPU). This solution improves the performance of VMware vSphere by offloading tasks from server CPUs to the DPU, thereby making data center applications more efficient.

When configuring VMware vSphere Distributed Services Engine using NVIDIA BlueField-2 DPUs, you will need to build the server in the DCSC configurator starting from the DPU-specific CTO models listed in the following table.

BlueField-3 support: These CTO models are not required for BlueField-3 DPUs.

For more information, see the DPU adapter section.

Base feature codes

Models of the SR650 V3 are defined based on whether the server has 2.5-inch drive bays at the front (called the 2.5-inch chassis) or 3.5-inch drive bays at the front (called the 3.5-inch chassis). The feature codes for these chassis bases are listed in the following table.

Preconfigured models

The following tables list the available preconfigured models, grouped by region.

- Models for Asia Pacific region

- Models for Australia and New Zealand

- Models for Brazil

- Models for China

- Models for EMEA region

- Models for Hong Kong, Taiwan, Korea (HTK)

- Models for India

- Models for Japan

- Models for Latin American countries (except Brazil)

- Models for USA and Canada

Refer to the Standard specifications section for information about standard features of the server.

Common to all models:

- Power supplies are Platinum unless otherwise stated

- All models include a Toolless Slide Rail Kit

Models for Asia Pacific region

The following table lists the models for the Asia Pacific region: Australia, Bangladesh, Brunei, Hong Kong, India, Japan, Korea, Sri Lanka, Malaysia, New Zealand, Philippines, Singapore, Thailand, Taiwan, Vietnam

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for Australia and New Zealand

AP models: Customers in Australia and New Zealand also have access to the Asia Pacific region models.

Common to all Australia and New Zealand models:

- All models include a Toolless Slide Rail Kit and Cable Management Arm

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for Brazil

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for China

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for EMEA region

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for Hong Kong, Taiwan, Korea (HTK)

AP models: Customers in Hong Kong, Taiwan, and Korea also have access to the Asia Pacific region models.

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for India

AP models: Customers in India also have access to the Asia Pacific region models.

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for Japan

AP models: Customers in Japan also have access to the Asia Pacific region models.

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for Latin American countries (except Brazil)

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Models for USA and Canada

† Processor description: Processor model, number of cores, thermal design power (TDP), core frequency

Processors

The SR650 V3 supports processors in either the 5th Gen Intel Xeon Scalable Processor family ("Emerald Rapids") or the 4th Gen Intel Xeon Scalable Processor family ("Sapphire Rapids"). The server supports one or two processors.

Topics in this section:

Processor options

All supported processors have the following characteristics:

- 8 DDR5 memory channels at 2 DIMMs per channel

- Up to 4 UPI links between processors at up to 20 GT/s

- Up to 80 PCIe 5.0 I/O lanes
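The channel count above, combined with the DIMM speeds listed under Key features, gives a theoretical peak memory bandwidth per processor. The sketch below assumes 8 bytes of data per transfer per DDR5 channel (ECC bits excluded). It also treats the quoted MHz figures as MT/s, as is conventional for DDR memory. Sustained bandwidth in practice is noticeably lower, and the names used here are hypothetical.

```python
# Sketch: theoretical peak DDR5 bandwidth. Assumes 8 data bytes per transfer
# per channel (two 32-bit subchannels, ECC excluded); real sustained
# bandwidth is lower.
BYTES_PER_TRANSFER = 8
CHANNELS_PER_CPU = 8

# Speeds (MT/s) by processor generation and DIMMs per channel, from this guide.
SPEED_MT_S = {
    ("5th Gen", 1): 5600,
    ("5th Gen", 2): 4800,
    ("4th Gen", 1): 4800,
    ("4th Gen", 2): 4400,
}


def peak_bandwidth_gb_s(generation: str, dpc: int, sockets: int = 1) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    mt_s = SPEED_MT_S[(generation, dpc)]
    return mt_s * 1e6 * BYTES_PER_TRANSFER * CHANNELS_PER_CPU * sockets / 1e9


if __name__ == "__main__":
    for gen, dpc in SPEED_MT_S:
        print(f"{gen}, {dpc} DPC: {peak_bandwidth_gb_s(gen, dpc):.1f} GB/s per processor")
```

For example, a 5th Gen processor with one DIMM per channel peaks at 358.4 GB/s. Two DIMMs per channel (4800 MT/s) lower that to 307.2 GB/s, so populating the second DIMM per channel trades some bandwidth for capacity.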

The following table lists the 5th Gen processors that are currently supported by the SR650 V3. The table includes a Top Choice Express column; for CTO orders, select a TCE component for faster delivery. See the Top Choice section for more information.

* These processors are single-socket capable processors and are only available in configure-to-order builds or in preconfigured models. Not available as option part numbers.

The following table lists the 4th Gen processors that are currently supported by the SR650 V3.

* These processors are single-socket capable processors and are only available in configure-to-order builds or in preconfigured models. Not available as option part numbers.

Configuration notes:

- Processor options include a heatsink but do not include a system fan

- Single-processor configurations are supported; see the One-processor configurations section for the resulting capabilities and restrictions

Processor features

Processors supported by the SR650 V3 introduce new embedded accelerators to add even more processing capability:

- QuickAssist Technology (Intel QAT)

Help reduce system resource consumption by providing accelerated cryptography, key protection, and data compression with Intel QuickAssist Technology (Intel QAT). By offloading encryption and decryption, this built-in accelerator helps free up processor cores and helps systems serve a larger number of clients.

- Intel Dynamic Load Balancer (Intel DLB)

Improve system performance when handling network data on multi-core Intel Xeon Scalable processors. Intel Dynamic Load Balancer (Intel DLB) distributes network processing efficiently across multiple CPU cores and threads, dynamically rebalancing the work as the system load varies. Intel DLB also restores the order of network data packets that are processed simultaneously on different CPU cores.

- Intel Data Streaming Accelerator (Intel DSA)

Drive high performance for storage, networking, and data-intensive workloads by improving streaming data movement and transformation operations. Intel Data Streaming Accelerator (Intel DSA) is designed to offload the most common data movement tasks that cause overhead in data center-scale deployments. Intel DSA helps speed up data movement across the CPU, memory, and caches, as well as all attached memory, storage, and network devices.

- Intel In-Memory Analytics Accelerator (Intel IAA)

Run database and analytics workloads faster, with potentially greater power efficiency. Intel In-Memory Analytics Accelerator (Intel IAA) increases query throughput and decreases the memory footprint for in-memory database and big data analytics workloads. Intel IAA is ideal for in-memory databases, open source databases and data stores like RocksDB, Redis, Cassandra, and MySQL.

- Intel Advanced Matrix Extensions (Intel AMX)

Intel Advanced Matrix Extensions (Intel AMX) is a built-in accelerator in all Silver, Gold, and Platinum processors that significantly improves deep learning training and inference. With Intel AMX, you can fine-tune deep learning models or train small to medium models in just minutes. Intel AMX offers discrete accelerator performance without added hardware and complexity.

The processors also support a separate and encrypted memory space, known as the SGX Enclave, for use by Intel Software Guard Extensions (SGX). The size of the SGX Enclave supported varies by processor model. Intel SGX offers hardware-based memory encryption that isolates specific application code and data in memory. It allows user-level code to allocate private regions of memory (enclaves) which are designed to be protected from processes running at higher privilege levels.

The following table summarizes the key features of all supported 5th Gen processors in the SR650 V3.

† The maximum single-core frequency at which the processor is capable of operating

* L3 cache is at least 1.875 MB per core. Processors with more than 1.875 MB of L3 cache per core are marked with an *

** Bronze 3508U processor does not support Hyper-Threading Technology

‡ SKUs with a U suffix as well as some other SKUs have no UPI links and are single-socket only

The following table summarizes the key features of all supported 4th Gen processors in the SR650 V3.

† The maximum single-core frequency at which the processor is capable of operating

* L3 cache is at least 1.875 MB per core. Processors with more than 1.875 MB of L3 cache per core are marked with an *

** Bronze 3408U processor does not support Hyper-Threading Technology

‡ SKUs with a U suffix as well as some other SKUs have no UPI links and are single-socket only

Intel On Demand feature licensing

Intel On Demand is a licensing offering from Lenovo for certain 4th Gen and 5th Gen Intel Xeon Scalable processors that implements software-defined silicon (SDSi) features. The licenses allow customers to activate the embedded accelerators and to increase the SGX Enclave size in specific processor models as their workload and business needs change.

The available upgrades are the following:

- Up to 4x QuickAssist Technology (Intel QAT) accelerators

- Up to 4x Intel Dynamic Load Balancer (Intel DLB) accelerators

- Up to 4x Intel Data Streaming Accelerator (Intel DSA) accelerators

- Up to 4x Intel In-Memory Analytics Accelerator (Intel IAA) accelerators

- 512GB SGX Enclave, an encrypted memory space for use by Intel Software Guard Extensions (SGX)

See the Processor features section for a brief description of each accelerator and the SGX Enclave.

The following table lists the ordering information for the licenses. Accelerator licenses are bundled according to the workloads that would benefit from the additional accelerators.

Licenses can be activated in the factory (CTO orders) using feature codes, or as field upgrades using the option part numbers. Field upgrades allow customers to activate the accelerators or increase the SGX Enclave size only when their applications can take best advantage of them.

Intel On Demand is licensed on individual processors. For servers with two processors, a license is needed for each processor, and the licenses of the two processors must match. If a second processor is added as a field upgrade, you must ensure that its Intel On Demand licenses match those of the first processor.

Each license enables a certain quantity of embedded accelerators; the total number of accelerators available after activation is listed in the table. For example, Intel On Demand Communications & Storage Suite 4 (4L47A89451), once applied to the server, results in a total of 4x QAT, 4x DLB and 4x DSA accelerators being enabled on the processor. The number of IAA accelerators is unchanged in this example.

The following table lists the 5th Gen processors that support Intel On Demand. The table shows the default accelerators and default SGX Enclave size, and it shows (with green highlight) what the total new accelerators and SGX Enclave would be once the Intel On Demand features have been activated.

The following table lists the 4th Gen processors that support Intel On Demand. The table shows the default accelerators and default SGX Enclave size, and it shows (with green highlight) what the total new accelerators and SGX Enclave would be once the Intel On Demand features have been activated.

Configuration rules:

- Not all processors support Intel On Demand upgrades - see the table for those that do not support Intel On Demand

- Upgrades can be performed in the factory (feature codes) or in the field (part numbers) but not both, and only one time

- Upgrades cannot be removed once activated

- SGX Enclave upgrades are independent of the accelerator upgrades; install either or both as desired

- For processors that support more than one upgrade, all upgrades must be performed at the same time

- Only one of each type of upgrade can be applied to a processor (eg 2x BX9A is not supported; 4x BX9B is not supported)

- The following processors support two accelerator upgrades, Intel On Demand Analytics Suite 4 (4L47A89452) and Intel On Demand Communications & Storage Suite 4 (4L47A89451); the tables above show the accelerators based on both upgrades being applied.

- Intel Xeon Platinum 8460Y+

- Intel Xeon Platinum 8480+

- Intel Xeon Platinum 8568Y+

- Intel Xeon Platinum 8592+

- The number of accelerators listed for each upgrade is the number of accelerators that will be active once the upgrade is complete (ie the total number, not the number to be added)

- If a server has two processors, then two feature codes must be selected, one for each processor. The upgrades on the two processors must be identical.

- If a one-processor server with Intel On Demand features activated on it has a 2nd processor added as a field upgrade, the 2nd processor must also have the same features activated by purchasing the appropriate part numbers.
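The matching and one-of-each rules above lend themselves to a mechanical check. The following is a minimal Python sketch, not a Lenovo tool: `validate_on_demand` is a hypothetical helper, and treating each feature code or part number as its own upgrade type is an assumption made for illustration. It does not check whether a given processor model supports Intel On Demand at all.

```python
from collections import Counter


def validate_on_demand(processors: list[list[str]]) -> list[str]:
    """Check Intel On Demand upgrade selections against the configuration rules.

    `processors` holds one list of upgrade identifiers (feature codes or option
    part numbers) per installed processor. Each identifier is treated as one
    upgrade type (an assumption for this sketch).
    """
    if len(processors) not in (1, 2):
        raise ValueError("the SR650 V3 has one or two processors")
    errors = []
    for index, upgrades in enumerate(processors, start=1):
        # Only one of each type of upgrade can be applied to a processor.
        repeated = sorted(code for code, count in Counter(upgrades).items() if count > 1)
        if repeated:
            errors.append(f"processor {index}: repeated upgrade(s) {repeated}")
    # With two processors, the upgrades on both processors must be identical.
    if len(processors) == 2 and set(processors[0]) != set(processors[1]):
        errors.append("processor 1 and processor 2 upgrades must match")
    return errors


print(validate_on_demand([["4L47A89451"], ["4L47A89451"]]))  # []
print(validate_on_demand([["BX9A", "BX9A"]]))  # ["processor 1: repeated upgrade(s) ['BX9A']"]
```

The same check covers the field-upgrade case: a second processor added later without matching licenses fails the final comparison.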

One-processor configurations

The SR650 V3 can be used with only one processor installed. Most core functions of the server (including the XClarity Controller) are connected to processor 1 as shown in the System architecture section.

With only one processor, the server has the following capabilities:

- 16 memory DIMMs for a 4TB maximum

- Rear slots: Slots 1-3 (Riser 1) and Slots 7-8 (Riser 3) are available; Slots 4-6 (Riser 2) are not available

- Front slots: Slot 12 (Slot 11 is not available)

Drive support is as follows (see the Storage configurations section for specifics):

- Front SAS/SATA drives are supported - 8, 16, 24 drives (2.5-inch) or 8, 12 drives (3.5-inch)

- Front NVMe drives are supported - up to 8 drives (2.5-inch) or 4 drives (3.5-inch)

- Rear SAS/SATA drives are supported - 4x 3.5-inch drives or up to 4x 2.5-inch drives

- M.2 drives are supported

- 7mm rear drives are supported

Controller support is as follows:

- 12x onboard SATA

- 12x NVMe (8x onboard + 1x 4-port retimer adapter)

- RAID adapters/HBAs installed in slots 1-3

- Internal RAID/HBA controller (CFF form factor) with SAS/SATA-only configurations

Riser 3 is supported under the following conditions:

- No onboard NVMe connections are available

- With the x8/x8 PCIe Riser3 (BPKH or BPKF) selected, Slots 7 and 8 are both connected, each with x8 lanes

- With the x16/x16 PCIe Riser3 (BLL9 or BPKG) selected, Slot 7 is connected with x16 lanes; Slot 8 is not connected

- The processor selected is not one of the following vRAN processors (insufficient PCIe lanes): 5423N, 5433N, 6403N, 6423N, 6433N, 6443N

The following components are not supported:

- Middle NVMe drive bays are not supported (SAS/SATA is supported)

Thermal requirements for processors

For thermal requirements for processors, see the Thermal Rules section in the Information Center for the SR650 V3:

https://pubs.lenovo.com/sr650-v3/thermal_rules

Lenovo Processor Neptune Core Module - Open-loop liquid cooling

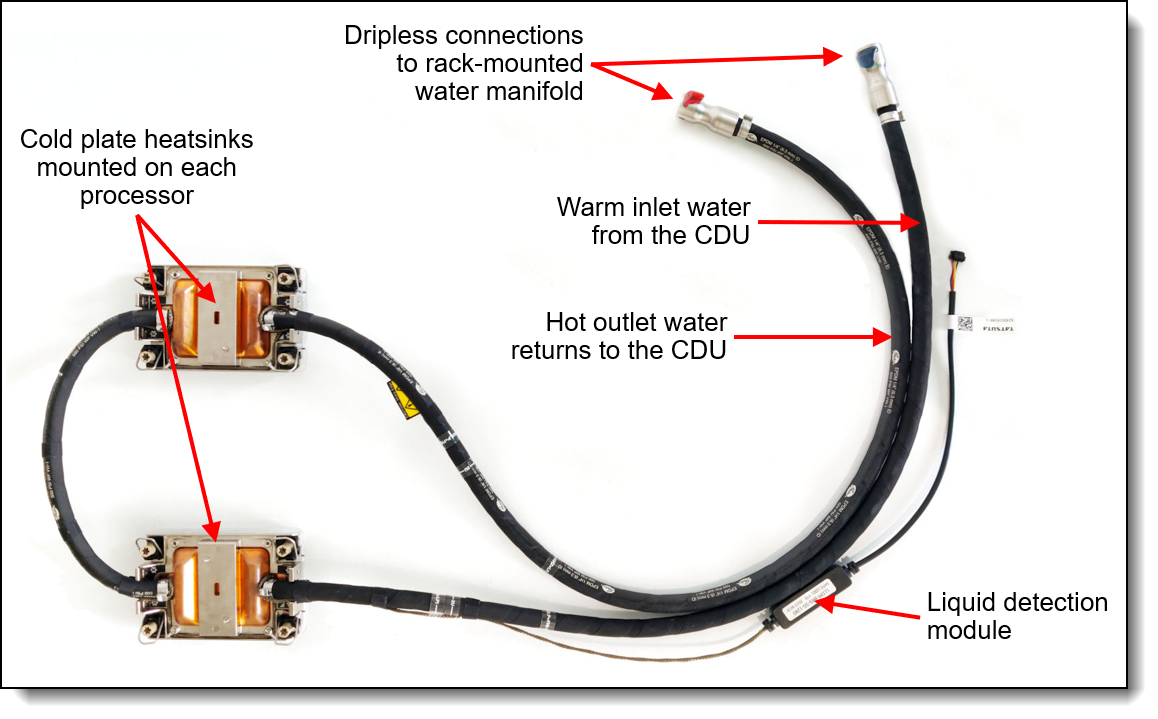

The SR650 V3 also supports advanced direct-water cooling (DWC) capability with the Lenovo Processor Neptune Core Module. This module implements a liquid cooling solution where heat from the processors is removed from the rack and the data center using an open loop and coolant distribution units.

With the Processor Neptune Core Module, all heat generated by the processors is removed from the server using water. This means that the server fans and data center air conditioning units only need to remove the heat generated by the other components. This lowers air conditioning costs and enables the use of slower fans, which reduces overall power consumption.

Typical power savings of 23% (up to 9.9 kW per rack) are possible, based on 18x SR650 V3 servers in a rack (DC level PUE weighted) at 30°C ambient temperature. Power savings are configuration dependent.
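The rack-level figure can be put on a per-server basis by simple division. This sketch only averages the quoted 9.9 kW across the 18 servers; the helper name `per_server_savings_w` is hypothetical, and the calculation does not model the PUE weighting or the configuration differences that determine actual savings.

```python
def per_server_savings_w(rack_savings_kw: float, servers_per_rack: int) -> float:
    """Average power saving per server, in watts, for a given rack-level saving."""
    return rack_savings_kw * 1000 / servers_per_rack


# Figures quoted above: up to 9.9 kW per rack with 18 servers in the rack.
print(f"{per_server_savings_w(9.9, 18):.0f} W per server")  # 550 W per server
```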

The following figure shows the Lenovo Processor Neptune Core Module.

Figure 7. Lenovo Processor Neptune Core Module

The Processor Neptune Core Module also includes a leak detection module which can detect a leakage of more than 0.5ml (about 10 drops) along the length of the tube and then issue an event to the XClarity Controller. XCC will then post an error to the System Event Log and enable further actions. Once the liquid evaporates, a further event is issued to XCC.

The Processor Neptune Core Module is only available in CTO orders, not as a field upgrade. Ordering information is listed in the following table.

| Part number | Feature code | Description | Top Choice Express |
|---|---|---|---|
| CTO only | BXBC* | ThinkSystem V3 1U/2U Neptune Processor Direct Water Cooling Solution | Not TCE |

* In DCSC, this feature code is listed in the Processor tab

Configuration notes:

- The Processor Neptune Core Module requires water infrastructure be available in the rack cabinet and data center, as described in the Water infrastructure section.

- All processor SKUs are supported

- Either one or two CPUs are supported

- All front drive bay configurations are supported

- Slot 6 is not available for adapters - the water loop is routed through the space otherwise occupied by slot 6

- Rear drive bays are supported

- 7mm drive bays are supported only in slot 3

- M.2 adapters are supported based on the configurations in the Storage configurations section

- Standard fans can be configured in most configurations

- The use of a cable management arm (CMA) is not supported

For more information, see the Thermal Rules page:

https://pubs.lenovo.com/sr650-v3/thermal_rules

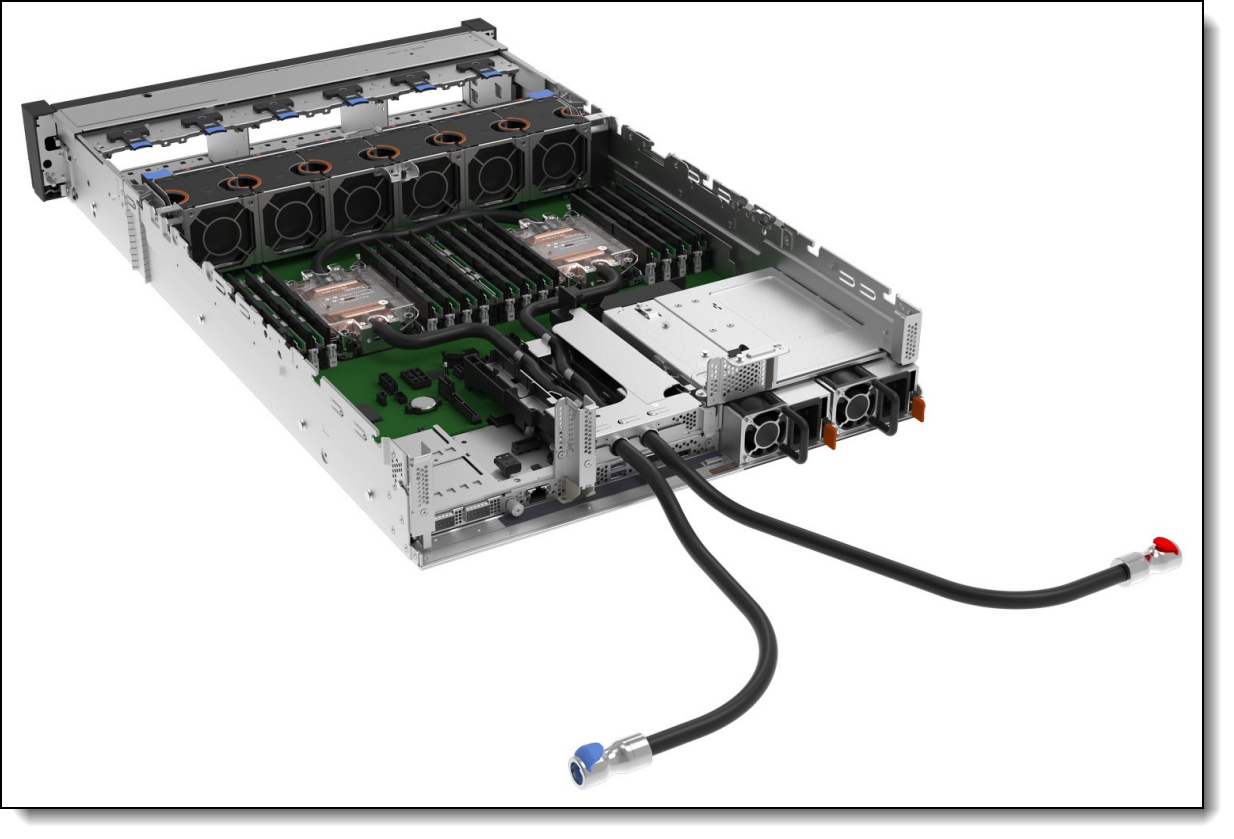

The following figure shows the Lenovo Neptune Processor DWC Module installed in the SR650 V3 (risers removed to show internal components).

Figure 8. Lenovo Neptune Processor DWC Module installed in the SR650 V3

UEFI operating modes

The SR650 V3 offers preset operating modes that affect energy consumption and performance. These modes are a collection of predefined low-level UEFI settings that simplify the task of tuning the server to suit your business and workload requirements.

The following table lists the feature codes that allow you to specify the mode you wish to preset in the factory for CTO orders.

UK and EU customers: For compliance with the ERP Lot9 regulation, you should select feature BFYE. For some systems, you may not be able to make a selection, in which case, it will be automatically derived by the configurator.

The preset modes for the SR650 V3 are as follows:

- Maximum Performance Mode (feature BFYB): Achieves maximum performance but with higher power consumption and lower energy efficiency.

- Minimal Power Mode (feature BFYC): Minimize the absolute power consumption of the system.

- Efficiency Favoring Power Savings Mode (feature BFYD): Maximize the performance/watt efficiency with a bias towards power savings. This is the favored mode for SPECpower benchmark testing, for example.

- Efficiency Favoring Performance Mode (feature BFYE): Maximize the performance/watt efficiency with a bias towards performance. This is the favored mode for Energy Star certification, for example.
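The feature codes above map one-to-one onto the preset modes, so a configuration script could validate a selection with a simple lookup. This is a hypothetical sketch (`describe_mode` and its `uk_or_eu` flag are illustrative names, not part of any Lenovo tool); as noted above, the configurator may derive the mode automatically for some systems.

```python
# Preset UEFI operating modes and their CTO feature codes, as listed above.
UEFI_PRESET_MODES = {
    "BFYB": "Maximum Performance Mode",
    "BFYC": "Minimal Power Mode",
    "BFYD": "Efficiency Favoring Power Savings Mode",
    "BFYE": "Efficiency Favoring Performance Mode",
}

# Feature to select for ERP Lot9 compliance (UK and EU customers).
ERP_LOT9_FEATURE = "BFYE"


def describe_mode(feature_code: str, uk_or_eu: bool = False) -> str:
    """Return the preset mode name, flagging choices that differ from the ERP Lot9 guidance."""
    try:
        mode = UEFI_PRESET_MODES[feature_code]
    except KeyError:
        raise ValueError(f"unknown UEFI mode feature code: {feature_code}") from None
    if uk_or_eu and feature_code != ERP_LOT9_FEATURE:
        return f"{mode} (note: select {ERP_LOT9_FEATURE} for ERP Lot9 compliance)"
    return mode


print(describe_mode("BFYB", uk_or_eu=True))
```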

For details about these preset modes, and all other performance and power efficiency UEFI settings offered in the SR650 V3, see the paper "Tuning UEFI Settings for Performance and Energy Efficiency on Intel Xeon Scalable Processor-Based ThinkSystem Servers", available from https://lenovopress.lenovo.com/lp1477.

Memory options

The SR650 V3 uses Lenovo TruDDR5 memory operating at up to 5600 MHz. The server supports up to 32 DIMMs with 2 processors. The processors have 8 memory channels and support 2 DIMMs per channel (DPC). The server supports up to 8TB of memory using 32x 256GB 3DS RDIMMs and two processors.

With 5th Gen Intel Xeon processors, DIMMs operate at the following speeds, up to the memory bus speed of the processor selected. See the Processor features section for specifics.

- 1 DIMM per channel (DPC) using RDIMMs: Up to 5600 MHz

- 1 DPC using 3DS RDIMMs: Up to 5200 MHz

- 2 DPC using RDIMMs or 3DS RDIMMs: Up to 4400 MHz

- 2 DPC using Performance+ RDIMMs: Up to 4800 MHz

With 4th Gen Intel Xeon processors, DIMMs operate at the following speeds, up to the memory bus speed of the processor selected:

- 1 DPC: Up to 4800 MHz

- 2 DPC: Up to 4400 MHz
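Because the DIMM speed is capped by both the population (DPC and DIMM type) and the processor's memory bus, the effective speed is the lower of the two. The sketch below transcribes the speeds listed above into a lookup table; `effective_dimm_speed` is a hypothetical helper, and combinations the text does not list (for example Performance+ RDIMMs at 1 DPC) raise an error rather than being guessed.

```python
# Maximum DIMM speeds (MHz) from the lists above, keyed by
# (processor generation, DIMMs per channel, DIMM type). For 4th Gen
# processors the text gives speeds by DPC only, so the type is None.
MAX_DIMM_SPEED_MHZ = {
    (5, 1, "RDIMM"): 5600,
    (5, 1, "3DS RDIMM"): 5200,
    (5, 2, "RDIMM"): 4400,
    (5, 2, "3DS RDIMM"): 4400,
    (5, 2, "Performance+ RDIMM"): 4800,
    (4, 1, None): 4800,
    (4, 2, None): 4400,
}


def effective_dimm_speed(gen: int, dpc: int, dimm_type: str, processor_bus_mhz: int) -> int:
    """Effective speed is the lower of the listed DIMM speed and the processor memory bus speed."""
    key = (gen, dpc, dimm_type if gen == 5 else None)
    if key not in MAX_DIMM_SPEED_MHZ:
        raise ValueError(f"no speed listed for {key}")
    return min(MAX_DIMM_SPEED_MHZ[key], processor_bus_mhz)


# A 5th Gen processor with a hypothetical 5200 MHz memory bus, 1 DPC RDIMMs:
print(effective_dimm_speed(5, 1, "RDIMM", 5200))  # 5200
```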

Lenovo TruDDR5 memory uses the highest quality components that are sourced from Tier 1 DRAM suppliers and only memory that meets the strict requirements of Lenovo is selected. It is compatibility tested and tuned to maximize performance and reliability. From a service and support standpoint, Lenovo TruDDR5 memory automatically assumes the system warranty, and Lenovo provides service and support worldwide.

The following table lists the 5600 MHz memory options that are currently supported by the SR650 V3. These DIMMs are only supported with 5th Gen Intel Xeon processors, with the exceptions listed in the memory rules below.

The following table lists the 4800 MHz memory options that are currently supported by the SR650 V3. These DIMMs are only supported with 4th Gen Intel Xeon processors plus four specific 5th Gen processors as listed in the memory rules below. The 128GB 5600MHz RDIMM is also supported with 4th Gen processors.

9x4 RDIMMs (also known as EC4 RDIMMs) are a new lower-cost DDR5 memory option supported in ThinkSystem V3 servers. 9x4 DIMMs offer the same performance as standard RDIMMs (known as 10x4 or EC8 modules), but they offer lower fault tolerance. Standard RDIMMs and 3DS RDIMMs support two 40-bit subchannels (that is, a total of 80 bits), whereas 9x4 RDIMMs support two 36-bit subchannels (a total of 72 bits). The extra bits in the subchannels allow standard RDIMMs and 3DS RDIMMs to support Single Device Data Correction (SDDC); 9x4 RDIMMs do not support SDDC. Note, however, that all DDR5 DIMMs, including 9x4 RDIMMs, support Bounded Fault correction, which enables the server to correct most common types of DRAM failures.

For more information on DDR5 memory, see the Lenovo Press paper, Introduction to DDR5 Memory, available from https://lenovopress.com/lp1618.

The following rules apply when selecting the memory configuration:

- In DCSC, 4800 MHz memory can only be selected with 4th Gen Intel Xeon Scalable processors, and 5600 MHz memory can only be selected with 5th Gen Intel Xeon Scalable processors. The only exceptions are listed below.

- An exception to the above rule is the following 5600 MHz DIMM which is supported with both 4th Gen and 5th Gen processors (operates at up to 4800 MHz with 4th Gen processors):

- ThinkSystem 128GB TruDDR5 5600MHz (2Rx4) RDIMM, 4X77A93887

- Additional exceptions to the above rule are the following 5th Gen processors:

- Intel Xeon Bronze 3508U 8C 125W 2.1GHz Processor

- Intel Xeon Silver 4509Y 8C 125W 2.6GHz Processor

- Intel Xeon Silver 4510 12C 150W 2.4GHz Processor

- Intel Xeon Silver 4510T 12C 115W 2.0GHz Processor

These 4 processors support:

- All 4800 MHz memory DIMMs

- ThinkSystem 16GB TruDDR5 5600MHz (1Rx8) RDIMM, 4X77A88087

- ThinkSystem 32GB TruDDR5 5600MHz (2Rx8) RDIMM, 4X77A88051

- ThinkSystem 64GB TruDDR5 5600MHz (2Rx4) RDIMM, 4X77A88052

- ThinkSystem 128GB TruDDR5 5600MHz (2Rx4) RDIMM, 4X77A93887

- The SR650 V3 only supports quantities of 1, 2, 4, 6, 8, 12, or 16 DIMMs per processor; other quantities are not supported. Additional quantity requirements:

- 5th Gen Intel Xeon processors:

- 24GB and 48GB DIMMs are only supported in quantities 1, 6, 8 per processor (2, 4, 12, 16 not supported)

- 96GB DIMMs are only supported in quantities 1, 6, 8, 12, 16 per processor (2, 4 not supported)

- 4th Gen Intel Xeon processors:

- 24GB and 48GB DIMMs are only supported in quantity 8 per processor

- 96GB DIMMs are only supported in quantities 8, 16 per processor

- 5th Gen Intel Xeon processors:

- The 48GB RDIMM (4X77A87033) and 96GB RDIMM (4X77A87034) are supported with all 4th Gen processors

- The server supports three types of DIMMs: 9x4 RDIMMs, RDIMMs, and 3DS RDIMMs; UDIMMs and LRDIMMs are not supported

- Mixing of DIMM types is not supported (9x4 DIMMs with 10x4 RDIMMs, 9x4 DIMMs with 3DS RDIMMs, 10x4 RDIMMs with 3DS RDIMMs)

- Mixing of DRAM technology (16Gb, 24Gb, 32Gb) is not supported. See the DRAM technology listed for each DIMM in the tables above.

- Mixing of 24GB, 48GB and 96GB DIMMs (24Gb DRAM) is not supported; if using any of these DIMMs then all installed memory must be the same part number

- Mixing x4 and x8 DIMMs is not supported

- Mixing of DIMM rank counts is supported. Follow the required installation order installing the DIMMs with the higher rank counts first.

- Mixing of DIMM capacities is supported, however only two different capacities are supported across all channels of the processor. Follow the required installation order installing the larger DIMMs first.

- Memory mirroring is not supported with 9x4 DIMMs

- The mixing of 128GB 3DS RDIMMs and 256GB 3DS RDIMMs is supported

- The use of the 128GB 3DS RDIMM feature BY8F has the following requirements for thermal reasons:

- If the front drive bays are 12x 3.5-inch, then middle and rear drive bays are not supported

- Additional ambient temperature requirements - see https://pubs.lenovo.com/sr650-v3/thermal_rules for information
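The quantity and capacity-mixing rules above can be expressed as a per-processor check. This is a minimal sketch with a hypothetical helper `check_dimms_per_processor`; it compares capacities only, using equal capacity as a stand-in for "same part number", and it does not model the DIMM type, DRAM technology, x4/x8, rank-order or thermal rules. The "all installed memory" rule for 24GB, 48GB and 96GB DIMMs is system-wide, so a real check would also compare the two processors.

```python
# DIMM quantities per processor supported by the SR650 V3 (rules above).
ALLOWED_QTY = {1, 2, 4, 6, 8, 12, 16}

# Extra quantity limits for 24GB, 48GB and 96GB DIMMs, by processor generation.
RESTRICTED_QTY = {
    5: {24: {1, 6, 8}, 48: {1, 6, 8}, 96: {1, 6, 8, 12, 16}},
    4: {24: {8}, 48: {8}, 96: {8, 16}},
}


def check_dimms_per_processor(gen: int, capacities_gb: list[int]) -> list[str]:
    """Return rule violations for the DIMMs installed on one processor."""
    errors = []
    qty = len(capacities_gb)
    if qty not in ALLOWED_QTY:
        errors.append(f"{qty} DIMMs per processor is not a supported quantity")
    distinct = set(capacities_gb)
    if len(distinct) > 2:
        errors.append("only two different DIMM capacities are supported per processor")
    restricted = distinct & set(RESTRICTED_QTY[gen])
    if restricted and len(distinct) > 1:
        errors.append("24GB, 48GB and 96GB DIMMs cannot be mixed with other DIMMs")
    for cap in sorted(restricted):
        if qty not in RESTRICTED_QTY[gen][cap]:
            errors.append(f"{cap}GB DIMMs are not supported in quantity {qty} with Gen {gen} processors")
    return errors


print(check_dimms_per_processor(5, [64] * 8))  # []
print(check_dimms_per_processor(4, [96] * 12))
```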

For best performance, consider the following:

- Ensure the memory installed is at least the same speed as the memory bus of the selected processor.

- Populate all 8 memory channels.

The following memory protection technologies are supported:

- ECC detection/correction

- Bounded Fault detection/correction

- SDDC (for 10x4-based memory DIMMs; look for "x4" in the DIMM description)

- ADDDC (for 10x4-based memory DIMMs, not supported with 9x4 DIMMs)

- Memory mirroring

See the Lenovo Press article, RAS Features of the Lenovo ThinkSystem Intel Servers for more information about memory RAS features.

If memory channel mirroring is used, then DIMMs must be installed in pairs (minimum of one pair per processor), and both DIMMs in the pair must be identical in type and size. 50% of the installed capacity is available to the operating system.
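A short sketch of the mirroring arithmetic: DIMMs are described as identical pairs, and half of the installed capacity is visible to the operating system. `mirrored_usable_gb` is a hypothetical helper; it compares capacities only, whereas the text also requires both DIMMs in a pair to be the same type (and mirroring is not supported with 9x4 DIMMs).

```python
def mirrored_usable_gb(pairs: list[tuple[int, int]]) -> int:
    """Usable capacity (GB) on one processor with memory mirroring.

    `pairs` lists the capacities of each mirrored DIMM pair. Only capacity
    is compared here; DIMM type is not checked in this sketch.
    """
    if not pairs:
        raise ValueError("mirroring needs at least one DIMM pair per processor")
    for a, b in pairs:
        if a != b:
            raise ValueError(f"mirrored pair {a}GB/{b}GB is not identical")
    installed = sum(a + b for a, b in pairs)
    return installed // 2  # 50% of installed capacity is available to the OS


print(mirrored_usable_gb([(64, 64)] * 4))  # 256
```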

Memory rank sparing is implemented using ADDDC/ADC-SR/ADDDC-MR to provide DRAM-level sparing feature support.

Persistent memory

The SR650 V3 server supports Intel Optane Persistent Memory 300 Series, a new class of memory and storage technology explicitly architected for data center usage. Persistent memory is an innovative technology that delivers a unique combination of affordable large memory capacity and persistence (non-volatility). It offers significantly lower latency than fetching data from SSDs, even NVMe SSDs, and offers higher capacities than system memory.

Persistent memory technology can help boost the performance of data-intensive applications such as in-memory analytics, databases, content delivery networks, and high performance computing (HPC), as well as deliver consistent service levels at scale with higher virtual machine and container density. When data is stored closer to the processor on nonvolatile media, applications can see significant overall improvement in performance.

Key workloads:

- Hybrid cloud, IaaS, and Virtualization: Increased VM density

- In-memory databases and data services: Higher capacity/larger databases, data reliability/quick recovery, memory performance

- AI/Analytics: Faster real-time analytics and Machine Learning Analytics

- Fast storage solutions: Increased storage capacity and performance

The following table lists the ordering information for the supported persistent memory modules.

The following are the requirements when installing persistent memory (PMem) modules:

- Only App Direct Mode is supported; Memory Mode and Mixed Mode are not supported

- All PMem modules operate at a maximum speed of 4400 MHz; any installed RDIMMs will also run at this maximum speed

- PMem is supported only with 10x4 RDIMMs and 3DS RDIMMs; PMem is not supported with 9x4 RDIMMs

- All installed PMem modules must be the same size; Mixing PMem modules of different capacities is not supported

- Maximum 8 PMem modules per processor (install 1 in each memory channel).

- For each memory channel with both a PMem module and a memory DIMM installed, the PMem module is installed in channel slot 1 (DIMM1, closer to the processor) and the DIMM is installed in channel slot 0 (DIMM0)

- To maximize performance, balance all memory channels

- Both interleaved and non-interleaved modes are supported

- Memory mirroring is only supported on the RDIMMs; Memory mirroring is not supported on the PMem modules
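Several of the PMem requirements above are simple to check per processor. The sketch below is illustrative only: `check_pmem` and `memory_speed_with_pmem` are hypothetical helpers, the `dimm_kind` strings are labels chosen for this example, and the PMem sizes used in the usage lines are arbitrary rather than a statement of which modules are orderable. Slot placement (PMem in DIMM1, DIMM in DIMM0) and channel balancing are not modeled.

```python
PMEM_MAX_SPEED_MHZ = 4400
PMEM_MAX_PER_PROCESSOR = 8


def check_pmem(pmem_sizes_gb: list[int], dimm_kind: str, mode: str = "App Direct") -> list[str]:
    """Return rule violations for the PMem modules on one processor."""
    errors = []
    if mode != "App Direct":
        errors.append("only App Direct Mode is supported")
    if dimm_kind == "9x4 RDIMM":
        errors.append("PMem is not supported with 9x4 RDIMMs")
    if len(set(pmem_sizes_gb)) > 1:
        errors.append("all PMem modules must be the same size")
    if len(pmem_sizes_gb) > PMEM_MAX_PER_PROCESSOR:
        errors.append("maximum 8 PMem modules per processor")
    return errors


def memory_speed_with_pmem(dimm_speed_mhz: int) -> int:
    """With PMem installed, RDIMMs run no faster than the PMem maximum speed."""
    return min(dimm_speed_mhz, PMEM_MAX_SPEED_MHZ)


print(check_pmem([256] * 8, "RDIMM"))  # []
print(memory_speed_with_pmem(4800))  # 4400
```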

Internal storage

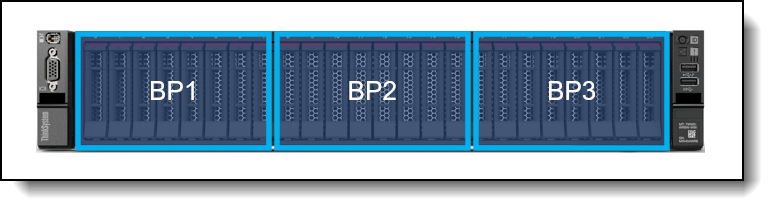

The SR650 V3 has three drive bay zones and supports up to 20x 3.5-inch or 40x 2.5-inch drive bays (hot-swap in the front and rear zones, simple-swap in the middle zone) or a combination of drive bays, depending on the selected chassis and backplane configuration. The server also supports configurations without any drive bays if desired.

The three drive bay zones are as follows:

- Front:

- Up to 12x 3.5-inch hot-swap bays, or

- Up to 24x 2.5-inch hot-swap bays

- Middle:

- 4x 3.5-inch simple-swap bays, or

- 8x 2.5-inch simple-swap bays

- Rear:

- Up to 4x 3.5-inch hot-swap bays, or

- Up to 8x 2.5-inch hot-swap bays

- Also supports 2x 7mm hot-swap drive bays

The server also supports one or two M.2 drives, installed in an M.2 adapter internal to the server.


NVMe drive support

The SR650 V3 supports NVMe drives to maximize storage performance.

- Up to 36 NVMe drives in a 2.5-inch drive configuration, without oversubscription (that is, each drive has a dedicated PCIe x4 connection to a processor, either directly or via a retimer adapter)

- Up to 24 installed in front bays

- Up to 28 installed in the front and rear bays

- Up to 32 installed in front and mid bays

- Up to 36 installed in front, mid and rear bays

- Up to 8 NVMe drives in a 3.5-inch drive configuration, without oversubscription

- All installed in mid bays
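Because each drive gets a dedicated x4 connection, the lane budget for the 2.5-inch configurations above scales linearly with drive count. A minimal sketch (the helper `nvme_lanes_required` is hypothetical); for scale, the processor section lists up to 80 PCIe 5.0 lanes per processor.

```python
LANES_PER_NVME_DRIVE = 4  # each drive gets a dedicated x4 connection


def nvme_lanes_required(drives: int) -> int:
    """PCIe lanes needed to connect NVMe drives without oversubscription."""
    return drives * LANES_PER_NVME_DRIVE


for layout, drives in [("front", 24), ("front + rear", 28),
                       ("front + mid", 32), ("front + mid + rear", 36)]:
    print(f"{layout}: {drives} drives -> {nvme_lanes_required(drives)} lanes")
```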

Riser 3 support with onboard NVMe: When Riser 3 is configured, the use of the onboard NVMe connections is supported up to 12x NVMe drives. Two 8-bay backplanes are required, with 4 bays unused. A quantity of 16x or more NVMe drives requires the use of an adapter if Riser 3 is configured.

The specifics of these configurations are covered in the Storage configurations section. The tables in those sections indicate the number of NVMe drives in each configuration.

In addition, the SR650 V3 supports two 7mm NVMe drives for use as boot drives.

Tri-Mode support - RAID 940 and RAID 9450 adapters

The RAID 940 and RAID 9450 adapters support NVMe through a feature named Tri-Mode support (or Trimode support). This feature enables the use of NVMe U.3 drives at the same time as SAS and SATA drives. Tri-Mode requires an AnyBay backplane. Cabling of the controller to the backplanes is the same as with SAS/SATA drives, and the NVMe drives are connected via a PCIe x1 link to the controller.

NVMe drives connected using Tri-Mode support provide better performance than SAS or SATA drives: a SATA SSD has a data rate of 6Gbps and a SAS SSD has a data rate of 12Gbps, whereas an NVMe U.3 Gen 4 SSD with a PCIe x1 link has a data rate of 16Gbps. NVMe drives typically also have lower latency and higher IOPS than SAS and SATA drives.
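The rates above are raw line rates. Usable bandwidth is lower because of line encoding: SATA 6Gbps and SAS 12Gbps use 8b/10b encoding, while PCIe Gen4 uses 128b/130b, so the gap between the interfaces is somewhat wider than the raw figures suggest. The sketch below is an approximation that ignores protocol and command overhead; `payload_mb_per_s` is a hypothetical helper.

```python
# (line rate in Gbit/s or GT/s per lane, payload bits, encoded bits)
LINKS = {
    "SATA 6Gbps": (6.0, 8, 10),        # 8b/10b encoding
    "SAS 12Gbps": (12.0, 8, 10),       # 8b/10b encoding
    "PCIe Gen4 x1": (16.0, 128, 130),  # 128b/130b encoding
}


def payload_mb_per_s(line_rate: float, payload_bits: int, encoded_bits: int) -> float:
    """Approximate usable bandwidth in MB/s after line encoding (ignores protocol overhead)."""
    return line_rate * 1000 * payload_bits / encoded_bits / 8


for name, params in LINKS.items():
    print(f"{name}: ~{payload_mb_per_s(*params):.0f} MB/s")
```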

Tri-Mode requires U.3 drives: Only NVMe drives with a U.3 interface are supported. U.2 drives are not supported. See the Internal drive options section for the U.3 drives supported by the server.

NVMe x4 support (not Tri-Mode): As an alternative to Tri-Mode support, the RAID 9450-32i adapter supports connectivity to both NVMe U.2 and U.3 drives using a PCIe Gen4 x4 connection. A x4 connection to each drive enables maximum performance from the NVMe drives. Note that a x4 connection requires different cabling than a Tri-Mode connection. Only NVMe drives are supported; SAS and SATA drives are not supported.

Front drive bays

The front drive bay zone supports the following configurations:

- 8x or 12x 3.5-inch drive bays (all hot-swap)

- 8x, 16x or 24x 2.5-inch drive bays (all hot-swap)

- 4x 2.5-inch drive bays (all hot-swap) with support for front PCIe slots

- No backplanes and no drives (supports field upgrades)

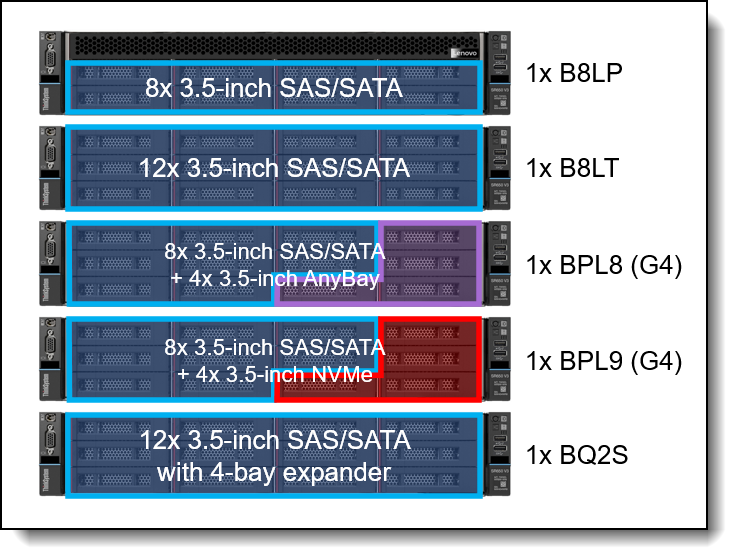

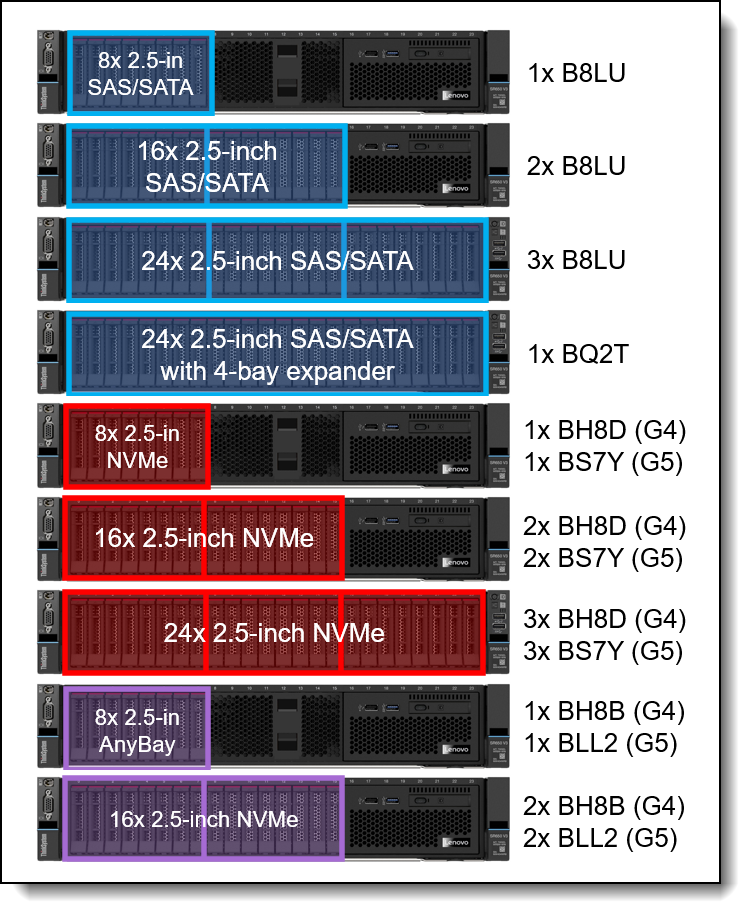

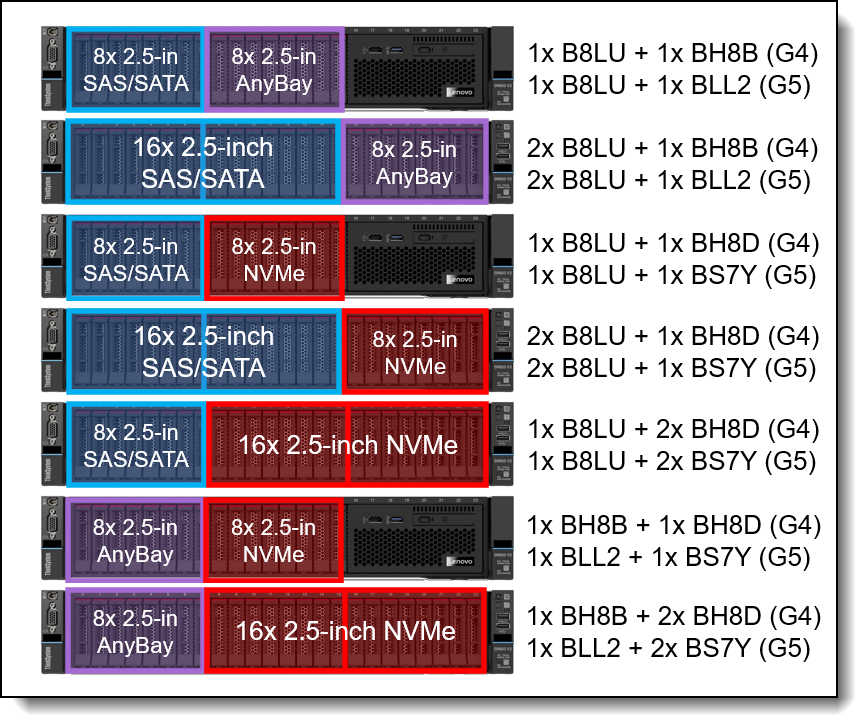

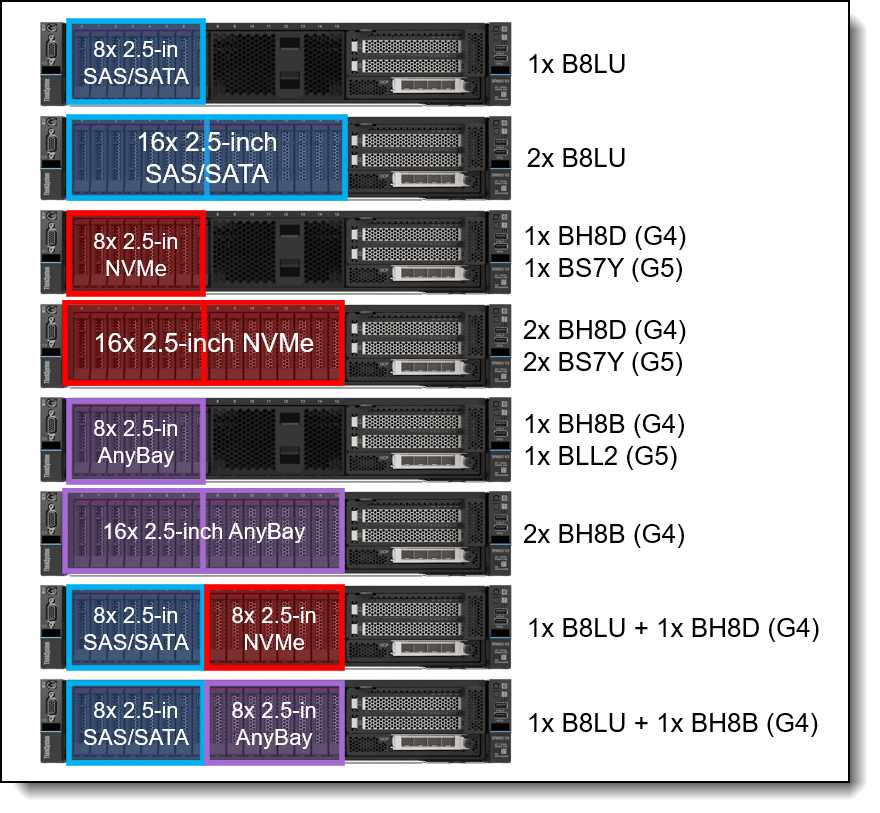

The specific combinations that are supported in the SR650 V3 are shown in the following figures. The feature codes listed are the backplane feature codes when ordering CTO and correspond to the feature codes listed in the table below the figure. Note that NVMe and AnyBay backplanes may be available in either PCIe Gen4 (G4) or PCIe Gen5 (G5) versions, as listed in the figures.

Figure 9. SR650 V3 front drive bay configurations - 3.5-inch drive bays

Figure 10. SR650 V3 front drive bay configurations - 2.5-inch drive bays, all the same drive type

Figure 11. SR650 V3 front drive bay configurations - 2.5-inch drive bays, combinations

Figure 12. SR650 V3 front drive bay configurations - 2.5-inch drive bays with front PCIe slots

The backplanes used to provide these drive bays are listed in the following table. The table includes a Top Choice Express column; for CTO orders, select a TCE component for faster delivery. See the Top Choice section for more information.

Field upgrades: All front backplanes are available as part numbers for field upgrades along with the required cable option kits, as described in the Field upgrades section below.

* Backplane has an onboard SAS expander that provides connectivity to front SAS/SATA drive bays using only an 8-port controller, plus support for 4x rear drives in a separate backplane (order the rear backplane separately). See below for additional information and requirements.

The use of front drive bays has the following configuration rules:

- The SR650 V3 also supports configurations without any drive bays, allowing for drive bay upgrades as described in the field upgrades section.

- Most backplanes require connections to controllers that provide enough ports to connect each drive (eg 8 ports for an 8-bay backplane). The only exceptions are the backplanes with built-in SAS expanders: these backplanes require fewer controller ports to connect to the front drives, and also include a connection to up to 4x rear drive bays. Connections to mid-chassis backplanes or to 8x rear drive bays require additional controller ports. Supported connections are as follows:

- For the ThinkSystem 2U 12x3.5" SAS/SATA with Rear 4-Bay Expander Backplane, feature BQ2S:

- 12x3.5" front drives only: requires a single 8i controller

- 12x3.5” + 4x3.5” (Rear): requires a single 8i controller, 8i to front+rear

- 12x3.5” + 4x3.5” (Mid): requires 16i controller - 8i to front, 8i to middle

- 12x3.5” + 4x3.5” (Mid) + 4x3.5” (Rear): requires 16i controller, 8i to front+rear, 8i to middle

- For the ThinkSystem 2U 24x2.5" SAS/SATA with Rear 4-Bay Expander Backplane, feature BQ2T:

- 24x2.5” front drives only: requires a single 8i controller

- 24x2.5” + 4x2.5” (Rear): requires a single 8i controller, 8i to front+rear

- 24x2.5” + 8x2.5” (Mid): requires 16i controller - 8i to front, 8i to middle

- 24x2.5” + 8x2.5” (Mid) + 8x2.5” (Rear): requires 32i controller - 8i to front, 8i to middle, 8i to rear

For specific configurations, see the Storage configurations section.


- If you are building a server configuration that includes the ThinkSystem 2U 24x2.5" SAS/SATA with Rear 4-Bay Expander Backplane (feature BQ2T) and the order also includes a rack cabinet, then you can configure at most 6 drives to be installed in the factory. The remaining drives must be ordered separately using the option part numbers for the drives. This requirement does not apply if the order does not include a rack cabinet. The requirement is due to the shock/vibration limits of the 24x 2.5-inch backplane.

- If 3.5-inch front drive bays are used, an internal (CFF) RAID adapter or HBA is not supported as the adapter and bays occupy the same physical space

- Any 8x 2.5-inch and 16x 2.5-inch drive configuration (SAS/SATA, AnyBay, or NVMe) can optionally be configured for use with the Integrated Diagnostics Panel as described in the Local management section. 3.5-inch drive configurations do not support the Integrated Diagnostics Panel. With the Integrated Diagnostics Panel, 8-bay configurations can be upgraded to 16 bays; however, 16-bay configurations cannot be upgraded to 24 bays.

- The following backplanes with integrated SAS expanders are not supported with cluster applications. For more information see Support Tip TT3693:

- ThinkSystem 2U 12x3.5" SAS/SATA with Rear 4-Bay Expander Backplane (BQ2S)

- ThinkSystem 2U 24x2.5" SAS/SATA with Rear 4-Bay Expander Backplane (BQ2T)
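The BQ2T controller requirements and the factory-install limit above are lookup-style rules. The following Python sketch encodes them so that a configuration script could check a build. The names `bq2t_controller` and `split_drive_order` are hypothetical and are not part of any Lenovo configurator or API. Only the four BQ2T layouts listed above are included, and the 6-drive factory limit is applied only when the order includes a rack cabinet.

```python
# Hypothetical helpers encoding the BQ2T (24x2.5" SAS/SATA with Rear 4-Bay
# Expander Backplane) rules listed above. Not a Lenovo tool or API.

# (front, mid, rear) bay counts -> required RAID/HBA controller
BQ2T_CONTROLLER = {
    (24, 0, 0): "8i",   # front drives only
    (24, 0, 4): "8i",   # 8i to front+rear
    (24, 8, 0): "16i",  # 8i to front, 8i to middle
    (24, 8, 8): "32i",  # 8i to front, 8i to middle, 8i to rear
}

# Max factory-installed drives when the order also includes a rack cabinet
FACTORY_LIMIT_WITH_RACK = 6


def bq2t_controller(front: int = 24, mid: int = 0, rear: int = 0) -> str:
    """Return the controller required for a BQ2T bay layout."""
    key = (front, mid, rear)
    if key not in BQ2T_CONTROLLER:
        raise ValueError(f"unsupported BQ2T layout: front={front}, mid={mid}, rear={rear}")
    return BQ2T_CONTROLLER[key]


def split_drive_order(total_drives: int, includes_rack: bool) -> tuple[int, int]:
    """Split drives into (factory-installed, ordered separately as option part numbers)."""
    if total_drives < 0:
        raise ValueError("drive count cannot be negative")
    if not includes_rack:
        return total_drives, 0
    factory = min(total_drives, FACTORY_LIMIT_WITH_RACK)
    return factory, total_drives - factory


print(bq2t_controller(24, 8, 0))                     # 16i
print(split_drive_order(24, includes_rack=True))     # (6, 18)
```

A table lookup is used rather than computing lane counts, because the guide specifies the supported layouts explicitly; any layout not in the table is rejected.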

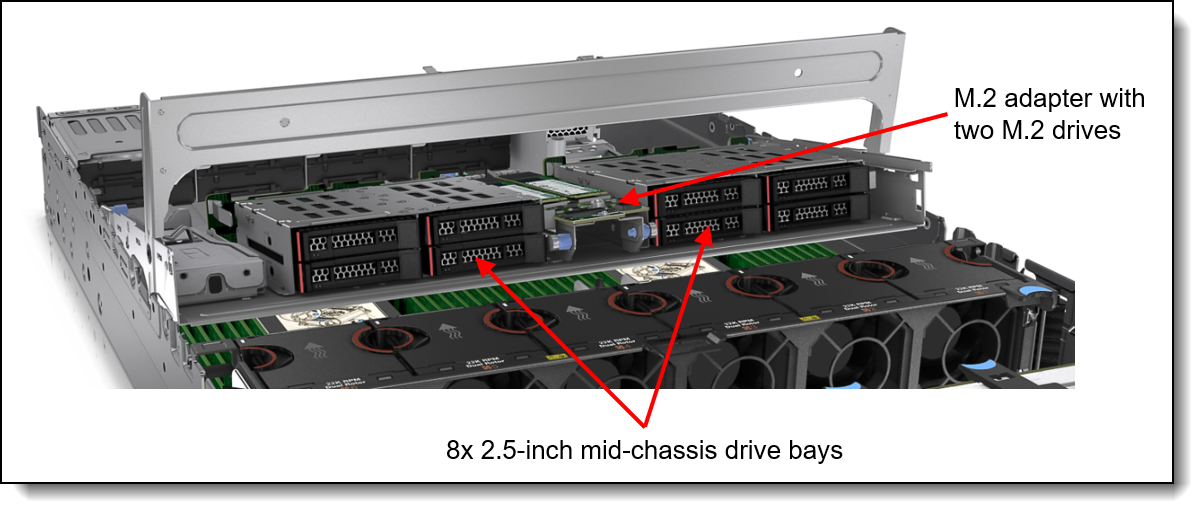

Mid drive bays

The SR650 V3 supports simple-swap drives installed in the middle of the server chassis. The drive bays are accessible by removing the top lid of the server and levering the mid drive chassis up at the front.

The following configurations are supported:

- 4x 3.5-inch simple-swap SAS/SATA drive bays

- 8x 2.5-inch simple-swap SAS/SATA drive bays

- 8x 2.5-inch simple-swap NVMe drive bays

The drive bays in the open position are shown in the following figure.

Simple-swap drive bays: The drives that are installed in the mid-chassis drive bays are simple-swap, not hot-swap, even though the drives use hot-swap drive trays.

M.2 support: When mid drive bays are configured, the M.2 adapter is installed on the mid drive bay assembly, as shown in the figure.

Figure 13. Mid-chassis drive bays

The backplanes used to provide these drive bays are listed in the following table. The table includes a Top Choice Express column; for CTO orders, select a TCE component for faster delivery. See the Top Choice section for more information.

‡ 2.5-inch drive backplanes for the mid-chassis area must be installed in pairs. NVMe and SAS/SATA cannot be mixed.

Field upgrades: Backplanes are available as part numbers for field upgrades, along with the required cable option kits, as described in the Field upgrades section below.

The use of drive bays in the mid-chassis area has the following configuration rules:

- All processors are supported. Higher-TDP processors require the performance heatsinks.

- Full-length adapter cards are not supported.

- GPUs (including low-profile GPUs such as the NVIDIA A2) are not supported.

- The use of mid drive bays requires that Riser 1 be installed, since power for the mid-bay backplanes comes from Riser 1.
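The mid-bay rules above, together with the backplane pairing footnote, can be expressed as a small validation function. This is a hypothetical Python sketch, not a Lenovo tool: the `MidBayBuild` fields and the "nvme"/"sas_sata" labels are assumptions. The heatsink rule is omitted because the TDP threshold for the performance heatsinks is not given here.

```python
from dataclasses import dataclass, field


@dataclass
class MidBayBuild:
    """Hypothetical model of the parts of a build that the mid-bay rules touch."""
    mid_backplanes_2_5in: list[str] = field(default_factory=list)  # e.g. ["nvme", "nvme"]
    mid_bays_3_5in: bool = False      # 4x 3.5-inch SAS/SATA mid bays
    full_length_adapters: int = 0
    gpus: int = 0                     # includes low-profile GPUs such as the NVIDIA A2
    risers: set[int] = field(default_factory=set)


def mid_bay_issues(build: MidBayBuild) -> list[str]:
    """Return the mid-bay rule violations found in the build (empty list = OK)."""
    uses_mid = build.mid_bays_3_5in or bool(build.mid_backplanes_2_5in)
    if not uses_mid:
        return []  # rules only apply when mid bays are configured
    issues = []
    bps = build.mid_backplanes_2_5in
    if bps and len(bps) % 2 != 0:
        issues.append("2.5-inch mid backplanes must be installed in pairs")
    if len(set(bps)) > 1:
        issues.append("NVMe and SAS/SATA mid backplanes cannot be mixed")
    if build.full_length_adapters:
        issues.append("full-length adapter cards are not supported")
    if build.gpus:
        issues.append("GPUs are not supported")
    if 1 not in build.risers:
        issues.append("Riser 1 is required to power the mid-bay backplanes")
    return issues


ok = MidBayBuild(mid_backplanes_2_5in=["nvme", "nvme"], risers={1, 2})
print(mid_bay_issues(ok))  # []
```

Returning a list of messages rather than raising on the first failure lets a configuration check report every violated rule at once.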

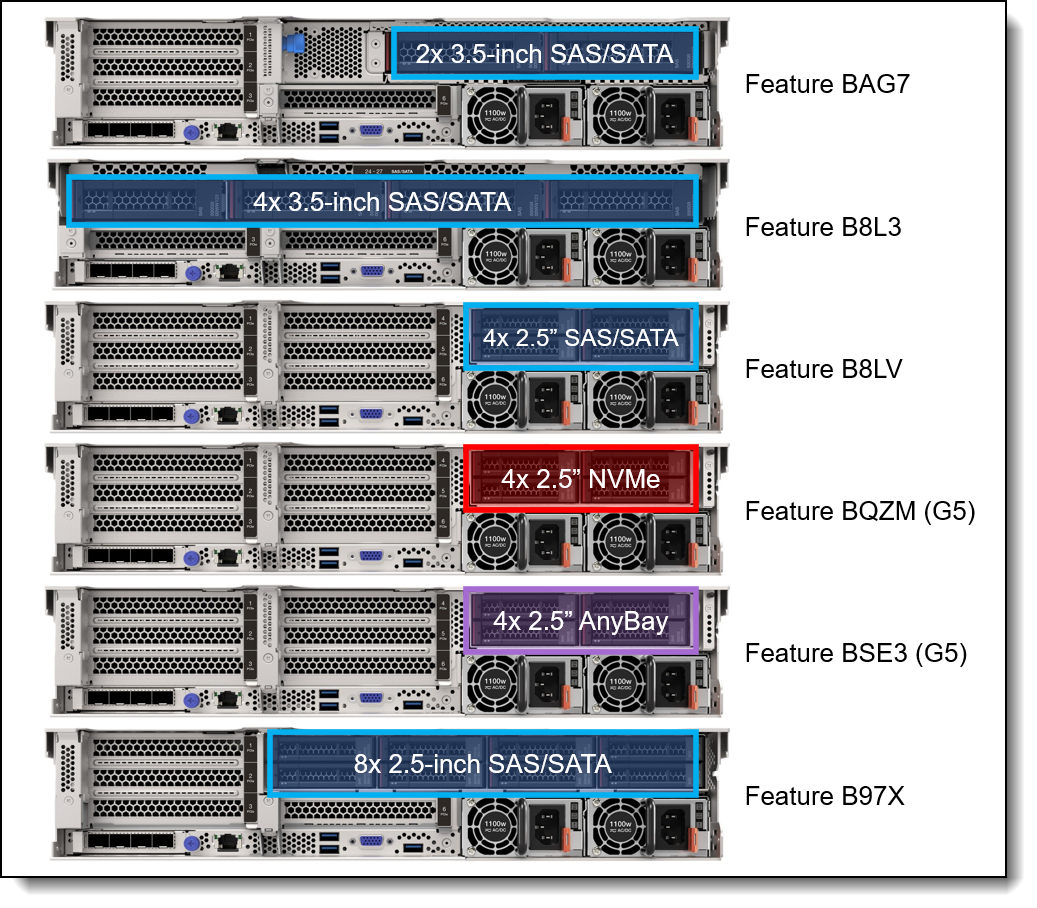

Rear drive bays

The SR650 V3 supports hot-swap drives installed at the rear of the server chassis. Supported configurations are as follows:

- 3.5-inch hot-swap drives:

  - 2x SAS/SATA drive bays

  - 4x SAS/SATA drive bays

- 2.5-inch hot-swap drives:

  - 4x SAS/SATA drive bays

  - 4x NVMe Gen5 drive bays

  - 4x AnyBay Gen5 drive bays

  - 8x SAS/SATA drive bays

The configurations are shown in the following figure.

Figure 14. Rear 2.5-inch and 3.5-inch drive bay configurations

The backplanes used to provide these drive bays are listed in the following table. The table includes a Top Choice Express column; for CTO orders, select a TCE component for faster delivery. See the Top Choice section for more information.

7mm drives: The SR650 V3 supports two 7mm drives. See the 7mm drives section for details.

Field upgrades: Backplanes are available as part numbers for field upgrades, along with the required cable option kits, as described in the Field upgrades section below.

The use of rear drive bays has the following configuration rules:

- The use of rear bays restricts the number of slots and the choice of risers that are supported. See the I/O expansion section for details.

- The use of rear drive bays may require that Riser 1 or Riser 2 be installed, since power for the rear backplane comes from that riser.

- Configurations with 4x 2.5-inch rear drive bays are not supported with 7mm drive bays, because both use the same power connector.
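Two of the rear-bay rules are simple enough to check mechanically: the layout must be one of the supported rear options listed earlier in this section, and 4x 2.5-inch rear bays cannot be combined with 7mm drive bays. The Python sketch below uses hypothetical labels ("sas_sata", "nvme_gen5", "anybay_gen5") for the backplane types. Riser and slot restrictions are left out because they depend on the I/O expansion tables.

```python
# Supported rear drive bay layouts: (drive size, bay count, backplane type).
# The type labels are hypothetical shorthand, not Lenovo feature codes.
SUPPORTED_REAR = {
    ("3.5", 2, "sas_sata"),
    ("3.5", 4, "sas_sata"),
    ("2.5", 4, "sas_sata"),
    ("2.5", 4, "nvme_gen5"),
    ("2.5", 4, "anybay_gen5"),
    ("2.5", 8, "sas_sata"),
}


def rear_bay_issues(size: str, count: int, kind: str, has_7mm: bool) -> list[str]:
    """Return rear-bay rule violations (empty list = no violation found)."""
    issues = []
    if (size, count, kind) not in SUPPORTED_REAR:
        issues.append(f"unsupported rear layout: {count}x {size}-inch {kind}")
    # 4x 2.5-inch rear bays and the 7mm bays use the same power connector
    if has_7mm and size == "2.5" and count == 4:
        issues.append("4x 2.5-inch rear bays and 7mm bays share a power connector")
    return issues


print(rear_bay_issues("2.5", 4, "nvme_gen5", has_7mm=True))
```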

Storage configurations

This section describes the various combinations of front and rear drives that the server supports, as well as M.2 support.

Tip: These tables are based on Config Matrix V5.6 in TRD V3.63

In this section:

- Overview of configurations - 3.5-inch front drive bays

- Overview of configurations - 2.5-inch front drives supporting rear slots (no front PCIe slots)

- Overview of configurations - 2.5-inch front drives supporting 12 PCIe slots (front & rear)

- Details - 3.5-inch front drive bays

- Details - 2.5-inch front drives supporting rear slots (no front PCIe slots)

- Details - 2.5-inch front drives supporting 12 PCIe slots (front & rear)

The following tables summarize the storage configurations for the SR650 V3. For details, including processor requirements, M.2 and 7mm support, and controller selections, see each of the Details tables.

Platinum processor support: Some storage configurations are only supported with certain processors:

- Configurations with "NP" (No Platinum) in the CPU column are supported with Bronze, Silver and Gold processors, but not Platinum processors.

- Configurations with "PO" (Platinum Only) in the CPU column are supported only with Platinum processors.

For example, config 98-1 is only for Platinum processors, while config 98-2 is for all other processors.
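The NP/PO flags amount to a simple predicate on the processor tier. In this hypothetical Python sketch, the tier names are plain strings and config 98-2 is treated as "NP", following the example above where it covers all non-Platinum processors.

```python
def cpu_supported(cpu_flag: str, cpu_tier: str) -> bool:
    """Check a processor tier against a configuration's CPU-column flag.

    cpu_flag: "" (no restriction), "NP" (No Platinum) or "PO" (Platinum Only).
    cpu_tier: "Bronze", "Silver", "Gold" or "Platinum" (hypothetical labels).
    """
    is_platinum = cpu_tier == "Platinum"
    if cpu_flag == "NP":
        return not is_platinum
    if cpu_flag == "PO":
        return is_platinum
    if cpu_flag == "":
        return True
    raise ValueError(f"unknown CPU flag: {cpu_flag!r}")


# Config 98-1 is Platinum only; config 98-2 covers all other processors.
print(cpu_supported("PO", "Platinum"))  # True  (98-1)
print(cpu_supported("NP", "Gold"))      # True  (98-2)
print(cpu_supported("NP", "Platinum"))  # False (use 98-1 instead)
```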

Overview - 3.5-inch front drives

The following table summarizes the configurations that use 3.5-inch front drive bays.

Field upgrades: For part number upgrades, see the Drive bays field upgrades - 3.5-inch chassis section.

Overview - 2.5-inch front drives supporting rear slots (no front PCIe slots)

The following table summarizes the configurations that use 2.5-inch front drives supporting rear slots (no front PCIe slots).

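Field upgrades: For part number upgrades, see the Drive bay field upgrades - 2.5-inch chassis with SAS/SATA and Gen4 front bays and Drive bay field upgrades - 2.5-inch chassis with Gen5 front bays sections.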

Overview - 2.5-inch front drives supporting 12 PCIe slots (front & rear)

The following table summarizes the configurations that use 2.5-inch front drives supporting 12 PCIe slots (front & rear). Such a configuration supports up to 16x 2.5-inch drive bays + PCIe slots at the front of the server.

Field upgrades: For part number upgrades, see the Drive bay field upgrades - 2.5-inch chassis with front slots section.

Details - 3.5-inch front bays

The following table details the configurations that use 3.5-inch front drive bays.

In the table:

- M.2 + VROC (SATA) means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with SATA drives. RAID is optional, provided using VROC.

- M.2 + VROC (NVMe) means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with NVMe drives. RAID is optional, provided using VROC.

- M.2 + RAID adapter means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with either a RAID 5350-8i adapter (supporting SATA drives) or a RAID 540-8i (supporting NVMe drives). The adapter is installed in a rear PCIe slot.

- M.2 Integrated RAID means the M.2 RAID NVMe adapter (B8P9) with a Marvell controller or the M.2 RAID B540i-2i SATA/NVMe Adapter with a Broadcom controller. RAID-0 and RAID-1 are supported.

- 7mm + VROC (SATA) means the 7mm SATA/NVMe kit (BU0N) with SATA drives. RAID is optional, provided using VROC.

- 7mm + VROC (NVMe) means the 7mm SATA/NVMe kit (BU0N) with NVMe drives. RAID is optional, provided using VROC.

- 7mm + RAID adapter means the 7mm SATA/NVMe kit (BU0N) with either a RAID 5350-8i adapter (supporting SATA drives) or a RAID 540-8i (supporting NVMe drives). The adapter is installed in a rear PCIe slot.

- 7mm Integrated RAID means the 7mm NVMe RAID kit (B8P3) with a Marvell controller or the 7mm SATA/NVMe RAID kit (BYFG) with a Broadcom controller. RAID-0 and RAID-1 are supported.

Tip: M.2 and 7mm are mutually exclusive: they are not supported together in the same configuration

* For M.2 or 7mm: Requires 2 processors; 1P not supported
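The tip and the footnote above combine into two checks. In this hypothetical Python sketch, `starred_config` stands for a table row marked with *, since the two-processor requirement applies only to those rows.

```python
def m2_7mm_issues(has_m2: bool, has_7mm: bool, processors: int,
                  starred_config: bool) -> list[str]:
    """Return M.2/7mm rule violations for one storage configuration row."""
    issues = []
    # M.2 and 7mm are mutually exclusive in every configuration
    if has_m2 and has_7mm:
        issues.append("M.2 and 7mm are mutually exclusive")
    # Rows marked * need 2 processors when M.2 or 7mm is used
    if starred_config and (has_m2 or has_7mm) and processors < 2:
        issues.append("this configuration requires 2 processors for M.2 or 7mm")
    return issues


print(m2_7mm_issues(has_m2=True, has_7mm=False, processors=1, starred_config=True))
```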

Details - 2.5-inch front bays supporting rear slots (no front PCIe slots)

The following table details the configurations that use 2.5-inch front bays supporting rear slots (no front PCIe slots).

In the table:

- M.2 + VROC (SATA) means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with SATA drives. RAID is optional, provided using VROC.

- M.2 + VROC (NVMe) means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with NVMe drives. RAID is optional, provided using VROC.

- M.2 + RAID adapter means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with either a RAID 5350-8i adapter (supporting SATA drives) or a RAID 540-8i (supporting NVMe drives)

- M.2 Integrated RAID means the M.2 RAID NVMe adapter (B8P9) with a Marvell controller or the M.2 RAID B540i-2i SATA/NVMe Adapter with a Broadcom controller. RAID-0 and RAID-1 are supported.

- 7mm + VROC (SATA) means the 7mm SATA/NVMe kit (BU0N) with SATA drives. RAID is optional, provided using VROC.

- 7mm + VROC (NVMe) means the 7mm SATA/NVMe kit (BU0N) with NVMe drives. RAID is optional, provided using VROC.

- 7mm + RAID adapter means the 7mm SATA/NVMe kit (BU0N) with either a RAID 5350-8i adapter (supporting SATA drives) or a RAID 540-8i (supporting NVMe drives)

- 7mm Integrated RAID means the 7mm NVMe RAID kit (B8P3) with a Marvell controller or the 7mm SATA/NVMe RAID kit (BYFG) with a Broadcom controller. RAID-0 and RAID-1 are supported.

Tip: M.2 and 7mm are mutually exclusive: they are not supported together in the same configuration

* For M.2 or 7mm: Requires 2 processors; 1P not supported

† NP (No Platinum) means the configuration supports Bronze, Silver and Gold processors but not Platinum processors. PO (Platinum Only) means the configuration supports only Platinum processors.

Details - 2.5-inch front drives supporting 12 PCIe slots (front & rear)

The following table details the configurations that use 2.5-inch front drives supporting 12 PCIe slots (front & rear). Such a configuration supports up to 16x 2.5-inch drive bays + PCIe slots at the front of the server.

In the table:

- M.2 + VROC (SATA) means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with SATA drives. RAID is optional, provided using VROC.

- M.2 + VROC (NVMe) means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with NVMe drives. RAID is optional, provided using VROC.

- M.2 + RAID adapter means the M.2 SATA/x4 NVMe adapter (4Y37A79663) with either a RAID 5350-8i adapter (supporting SATA drives) or a RAID 540-8i (supporting NVMe drives)

- M.2 Integrated RAID means the M.2 RAID NVMe adapter (B8P9) with a Marvell controller or the M.2 RAID B540i-2i SATA/NVMe Adapter with a Broadcom controller. RAID-0 and RAID-1 are supported.

- 7mm + VROC (SATA) means the 7mm SATA/NVMe kit (BU0N) with SATA drives. RAID is optional, provided using VROC.

- 7mm + VROC (NVMe) means the 7mm SATA/NVMe kit (BU0N) with NVMe drives. RAID is optional, provided using VROC.

- 7mm + RAID adapter means the 7mm SATA/NVMe kit (BU0N) with either a RAID 5350-8i adapter (supporting SATA drives) or a RAID 540-8i (supporting NVMe drives)

- 7mm Integrated RAID means the 7mm NVMe RAID kit (B8P3) with a Marvell controller or the 7mm SATA/NVMe RAID kit (BYFG) with a Broadcom controller. RAID-0 and RAID-1 are supported.

Tip: M.2 and 7mm are mutually exclusive: they are not supported together in the same configuration

* For M.2 or 7mm: Requires 2 processors; 1P not supported

Field upgrades

The SR650 V3 is orderable without drive bays, allowing you to add a backplane, cabling and controllers as field upgrades. The server also supports upgrading some configurations by adding additional front drive bays (for example, upgrading from 8 to 16x 2.5-inch drive bays).

Upgrade path: The key criterion for upgrade support is that the target configuration must be one of the supported drive bay configurations listed in the Storage configurations section.

For example, if you are upgrading a 2.5-inch SAS/SATA drive configuration from 8 bays (Config 9) to 16 bays (Config 12), you will need these additional options:

- 4XH7A60930, ThinkSystem V3 2U 8x2.5" SAS/SATA Backplane Option Kit

- 4X97A82935, ThinkSystem SR650 V3 2.5" Chassis Front BP2 SAS/SATA Cable Kit

To add drive bays you will need to order both drive backplanes and cable kits. Backplane kits do not include cables.
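The upgrade rule amounts to a lookup: confirm that the target is a supported configuration, then order both the backplane kit and its cable kit. In the hypothetical Python sketch below, only the Config 9 to Config 12 example from this section is filled in. Other entries would come from the field upgrade tables, and the `supported` set passed in would come from the Storage configurations tables.

```python
# Kit lists per (current config, target config). Only the documented
# Config 9 -> Config 12 example is filled in; the rest is left to the tables.
UPGRADE_KITS: dict[tuple[int, int], list[str]] = {
    (9, 12): [
        '4XH7A60930, ThinkSystem V3 2U 8x2.5" SAS/SATA Backplane Option Kit',
        '4X97A82935, ThinkSystem SR650 V3 2.5" Chassis Front BP2 SAS/SATA Cable Kit',
    ],
}


def kits_for_upgrade(current: int, target: int, supported: set[int]) -> list[str]:
    """Return the option kits for a drive bay upgrade, enforcing the stated rules."""
    if target not in supported:
        raise ValueError(f"Config {target} is not a supported drive bay configuration")
    kits = UPGRADE_KITS.get((current, target))
    if kits is None:
        raise LookupError(f"no kit list recorded for Config {current} -> Config {target}")
    # Backplane kits do not include cables, so a cable kit must also be ordered
    has_backplane = any("Backplane" in k for k in kits)
    has_cable = any("Cable Kit" in k for k in kits)
    if has_backplane and not has_cable:
        raise ValueError("backplane kits do not include cables; add the matching cable kit")
    return kits


for kit in kits_for_upgrade(9, 12, supported={9, 12}):
    print(kit)
```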

In this section:

- Drive bay field upgrades - 3.5-inch chassis

- Drive bay field upgrades - 2.5-inch chassis with SAS/SATA and Gen4 front bays

- Drive bay field upgrades - 2.5-inch chassis with Gen5 front bays

- Drive bay field upgrades - 2.5-inch chassis with front slots

- Upgrading to an internal (CFF) adapter

- 2.5-inch drive bay fillers

Drive bay field upgrades - 3.5-inch chassis

The table below lists the backplane kits and cable kits needed to build one of the supported 3.5-inch chassis configurations.

The Config numbers listed here match the configurations listed in the Storage configurations Overview and Details sections.

For more information about the backplane kits and cable kits, see the Lenovo server options site:

https://serveroption.lenovo.com/cable_kit_options/

Drive bay field upgrades - 2.5-inch chassis with SAS/SATA and Gen4 front bays

The tables below list the backplane kits and cable kits needed to build one of the supported 2.5-inch chassis configurations with either SAS/SATA or Gen4 front backplanes.