Authors

- David Watts

- April Chen

Updated

12 Feb 2026

Form Number

LP2048

PDF size

65 pages, 8.0 MB

- Introduction

- Did you know?

- Key features

- View in Augmented Reality

- Components and connectors

- System architecture

- Standard specifications - SC777 V4 tray

- Standard specifications - N1380 enclosure

- Models

- Configurations

- NVIDIA GB200 NVL4 HPM

- Processors

- Memory

- GPU accelerators

- Internal storage

- Controllers for internal storage

- Internal drive options

- I/O expansion

- Network adapters

- Cooling

- Water connections

- Power conversion stations

- System Management

- Security

- Operating system support

- Physical and electrical specifications

- Operating environment

- Regulatory compliance

- Warranty upgrades and post-warranty support

- Services

- Lenovo TruScale

- Rack cabinets

- Genie Material Lift

- Lenovo Financial Services

- Seller training courses

- Related publications and links

- Related product families

- Trademarks

Abstract

The ThinkSystem SC777 V4 Neptune server is the next-generation high-performance accelerated server based on the sixth generation Lenovo Neptune® direct water cooling platform.

Based on the NVIDIA GB200 NVL4 platform, the ThinkSystem SC777 V4 server combines the latest high-performance NVIDIA GPU and Lenovo's market-leading water-cooling solution, which results in extreme HPC/AI performance in dense packaging.

This product guide provides essential pre-sales information to understand the SC777 V4 server, its key features and specifications, components and options, and configuration guidelines. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the SC777 V4 and consider its use in IT solutions.

Change History

Changes in the February 12, 2026 update:

- Updated Water requirements - Operating environment section

Introduction

The ThinkSystem SC777 V4 Neptune node is the next-generation high-performance accelerated server based on the sixth generation Lenovo Neptune® direct water cooling platform.

Based on the NVIDIA GB200 NVL4 platform, the ThinkSystem SC777 V4 server combines the latest high-performance NVIDIA GPU and Lenovo's market-leading water-cooling solution, which results in extreme HPC/AI performance in dense packaging.

The direct water cooled solution is designed to operate by using warm water, up to 45°C (113°F). Chillers are not needed for most customers, meaning even greater savings and a lower total cost of ownership. Eight servers are housed in the ThinkSystem N1380 enclosure, a 13U rack mount unit that fits in a standard 19-inch rack.

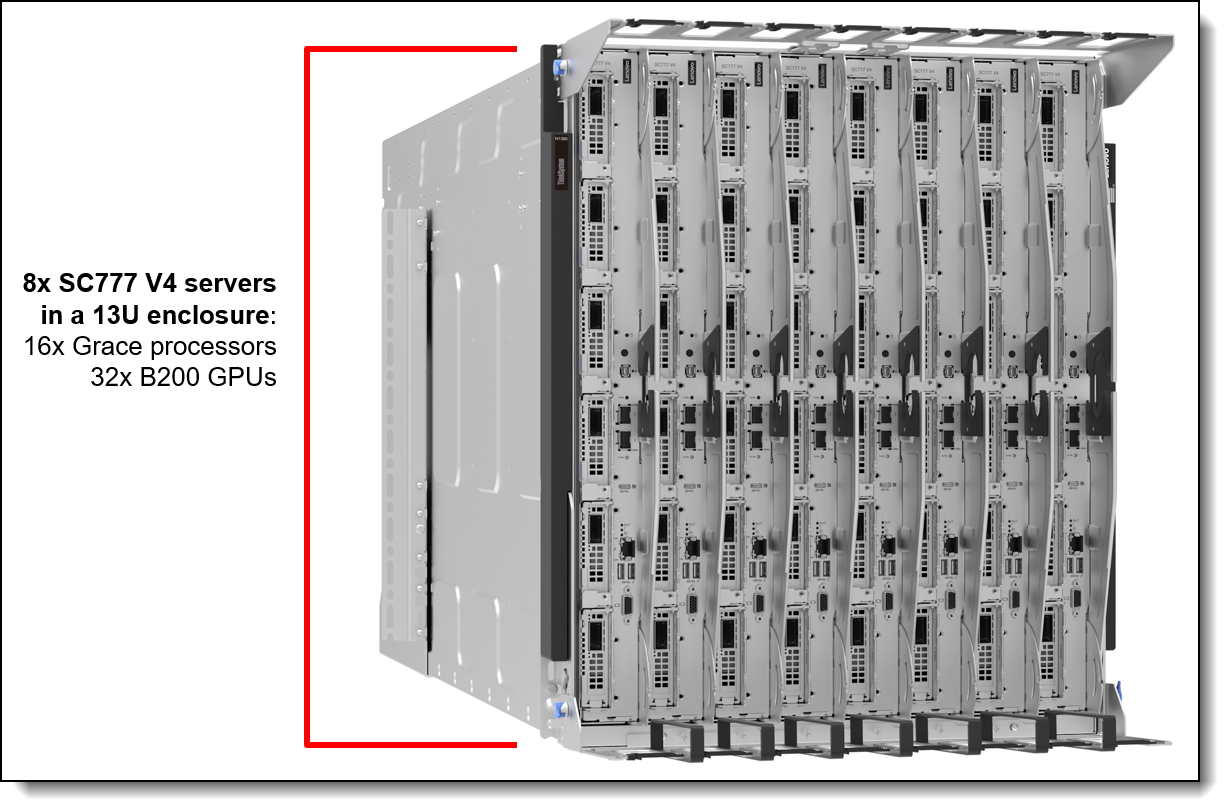

Figure 1. The Lenovo ThinkSystem SC777 V4 Neptune Server (AI Training configuration)

Did you know?

Lenovo Neptune and the ThinkSystem SC777 V4 are designed to operate at water inlet temperatures as low as the dew point allows, up to 45°C. This design eliminates the need for additional chilling and allows for efficient reuse of the generated heat energy in building heating or adsorption cold water generation, for example. It uses treated de-ionized water as a coolant, a much safer and more efficient alternative to the commonly used PG25 Glycol liquid, reflecting our commitment to environmental responsibility.

Key features

The ThinkSystem SC777 V4 server uses a new design, where the servers are mounted vertically in the N1380 enclosure. Eight servers are installed in one 13U N1380 enclosure.

Revolutionary Lenovo Neptune Design

Engineered for large-scale Artificial Intelligence (AI) and High Performance Computing (HPC), Lenovo ThinkSystem SC777 V4 Neptune excels in intensive simulations and complex modeling. It is designed to handle modeling, training, simulation, rendering, financial tech, scientific research, technical computing, grid deployments, and analytics workloads in various fields such as research, life sciences, energy, engineering, and financial simulation.

At its core, Lenovo Neptune applies 100% direct warm-water cooling, maximizing performance and energy efficiency without sacrificing accessibility or serviceability. The SC777 V4 integrates seamlessly into a standard 19" rack cabinet with the ThinkSystem N1380 Neptune enclosure, featuring a patented blind-mate stainless steel dripless quick connection.

This design ensures easy serviceability and extreme performance density, making the SC777 V4 the go-to choice for compute clusters of all sizes - from departmental/workgroup levels to the world’s most powerful supercomputers – from Exascale to Everyscale®.

Lenovo Neptune utilizes superior materials, including custom copper water loops and patented CPU cold plates, for full system water-cooling. Unlike systems that use low-quality FEP plastic, Neptune features durable stainless steel and reliable EPDM hoses. The N1380 enclosure features an integrated manifold that offers a patented blind-mate mechanism with aerospace-grade drip-less connectors to the compute trays, ensuring safe and seamless operation.

Lenovo’s direct water-cooled solutions are factory-integrated and are re-tested at the rack-level to ensure that a rack can be directly deployed at the customer site. This careful and consistent quality testing has been developed as a result of over a decade of experience designing and deploying DWC solutions to the very highest standards.

Scalability and performance

The ThinkSystem SC777 V4 server and ThinkSystem N1380 enclosure offer the following features to boost performance, improve scalability, and reduce costs:

- The SC777 V4 node leverages the NVIDIA GB200 NVL4 architecture:

  - Two NVIDIA Grace processors

  - Four NVIDIA Blackwell B200 GPU modules

  - High-speed NVLink interconnects between GPUs

- The server has up to 6x PCIe Gen 5 x16 slots for high-speed networking, depending on the configuration.

- The server also supports up to 10x E3.S 1T drives, depending on the configuration, for internal storage and OS boot.

- All supported drives are E3.S NVMe drives with PCIe Gen 5 x4 host interface, to maximize I/O performance in terms of throughput, bandwidth, and latency.

- The node includes two 25 Gb Ethernet onboard ports for cost-effective networking. High-speed host networking can be added through the included PCIe slots. One of the 25 Gb ports supports NC-SI for management communication to the BMC in addition to host communication.

- The node offers PCI Express 5.0 (PCIe Gen 5) I/O expansion capabilities that double the theoretical maximum bandwidth of PCIe Gen 4 (32 GT/s in each direction for Gen 5, compared to 16 GT/s with Gen 4). A PCIe Gen 5 x16 slot provides 128 GB/s of bidirectional bandwidth, enough to support a 400GbE network connection. Two Gen 5 x16 connections (32 lanes total) provide the bandwidth needed for an 800 GbE connection using the ConnectX-8 8180 network adapter.
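The PCIe bandwidth figures quoted above can be checked with a little arithmetic. The following is a minimal sketch, not vendor tooling: the function name `pcie_bandwidth_gbytes` is made up for illustration, and the 128b/130b encoding factor comes from the PCIe specification rather than from this guide. It shows that the 128 GB/s figure corresponds to raw, bidirectional bandwidth of a Gen 5 x16 link.

```python
# Raw signalling rate per lane in GT/s (PCIe specification values).
GT_PER_SEC = {3: 8.0, 4: 16.0, 5: 32.0}

# PCIe Gen 3 through Gen 5 use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbytes(gen: int, lanes: int, encoded: bool = True) -> float:
    """Theoretical bandwidth in GB/s, per direction, of a PCIe link.

    With encoded=False the raw signalling rate is used, which is how
    headline figures such as 128 GB/s (bidirectional) are usually derived.
    """
    gbits = GT_PER_SEC[gen] * lanes
    if encoded:
        gbits *= ENCODING_EFFICIENCY
    return gbits / 8  # bits to bytes


def required_gbytes(ethernet_gbits: float) -> float:
    """Per-direction bandwidth in GB/s needed to feed an Ethernet port."""
    return ethernet_gbits / 8


raw_x16 = pcie_bandwidth_gbytes(5, 16, encoded=False)
print(f"Gen 5 x16 raw: {raw_x16:.0f} GB/s per direction, "
      f"{2 * raw_x16:.0f} GB/s bidirectional")
print(f"Gen 5 x16 after encoding: {pcie_bandwidth_gbytes(5, 16):.1f} GB/s per direction")
print(f"400GbE needs {required_gbytes(400):.0f} GB/s per direction")
print(f"800GbE needs {required_gbytes(800):.0f} GB/s per direction, "
      f"two x16 links give {2 * pcie_bandwidth_gbytes(5, 16):.1f} GB/s")
```

Under these assumptions, one x16 link leaves headroom for a 400GbE port and two x16 links leave headroom for an 800GbE port. Protocol overhead beyond line encoding is ignored here, so real throughput will be somewhat lower.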

Energy efficiency

The direct water cooled solution offers the following energy efficiency features to save energy, reduce operational costs, increase energy availability, and contribute to a green environment:

- Water cooling eliminates the power that is drawn by cooling fans in the enclosure and dramatically reduces the required air movement in the server room, which also saves power. In combination with an Energy Aware Runtime environment, savings of as much as 40% are possible in the data center due to the reduced need for air conditioning.

- Water chillers may not be required with a direct water cooled solution. Chillers are a major expense for most geographies and can be reduced or even eliminated because the water temperature can now be 45°C instead of 18°C in an air-cooled environment.

- With the new water-cooled power conversion stations and SMM3, essentially 100% system heat recovery is possible, depending on the water and ambient temperatures chosen. At 45°C water temperature and 30°C room temperature, heat recovery is typically around 95%, with the remainder lost as heat radiated from surfaces. The heat energy absorbed may be reused for heating buildings in the winter, or for generating cold through adsorption chillers, for further operating expense savings.

- The processors and other microelectronics are run at lower temperatures because they are water cooled, which uses less power, and allows for higher performance.

- The processors are run at uniform temperatures because they are cooled in parallel loops, which avoids thermal jitter and provides higher and more reliable performance at the same power.

- 80 PLUS Titanium-compliant power conversion stations ensure energy efficiency.

- Power monitoring and management capabilities through the System Management Module in the N1380 enclosure.

- The System Management Module (SMM3) automatically calculates the power boundaries at the enclosure level; the protective capping feature ensures that overall power consumption stays within limits, adjusting dynamically as the status of the servers and power conversion stations changes.

- The Lenovo power/energy meter, based on the TI INA228, measures DC power for the SC777 V4 with better than 97% accuracy at up to 100 Hz sampling frequency. It reports to the XCC and can be read both in-band and out-of-band using IPMI raw commands.

- Optional Energy Aware Runtime provides sophisticated power monitoring and energy optimization on a job-level during the application runtime without impacting performance negatively.
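To illustrate how the 100 Hz power samples mentioned above could be turned into a job-level energy figure, here is a minimal sketch. It assumes the samples have already been collected as a list of watt values at evenly spaced intervals. How the samples are retrieved (the IPMI raw commands) is not covered by this guide and is not shown. The function name `energy_joules` is illustrative only.

```python
from typing import Sequence

# Maximum sampling frequency quoted for the power meter in this guide.
SAMPLE_RATE_HZ = 100.0


def energy_joules(power_watts: Sequence[float],
                  sample_rate_hz: float = SAMPLE_RATE_HZ) -> float:
    """Integrate evenly spaced power samples using the trapezoidal rule.

    Returns energy in joules (watt-seconds). Fewer than two samples span
    no time interval, so the result is 0.0 in that case.
    """
    if len(power_watts) < 2:
        return 0.0
    dt = 1.0 / sample_rate_hz
    total = 0.0
    for earlier, later in zip(power_watts, power_watts[1:]):
        total += 0.5 * (earlier + later) * dt
    return total


def joules_to_kwh(joules: float) -> float:
    """Convert joules to kilowatt-hours (1 kWh = 3.6 MJ)."""
    return joules / 3.6e6


# Hypothetical data: one second of samples at a constant 1000 W.
samples = [1000.0] * 101
print(f"{energy_joules(samples):.1f} J")
```

The trapezoidal rule is one reasonable choice for evenly spaced samples. A production tool would also need to handle dropped samples and timestamp drift.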

Manageability and security

The following powerful systems management features simplify local and remote management of the SC777 V4 server:

- The server includes an XClarity Controller 3 (XCC3) to monitor server availability. XCC3 Premier is an included offering to provide remote control (keyboard video mouse) functions, support for the mounting of remote media files, FIPS 140-3 security, enhanced NIST 800-193 support, boot capture, power capping, and other management and security features.

- Lenovo XClarity Administrator offers comprehensive hardware management tools that help to increase uptime, reduce costs, and improve productivity through advanced server management capabilities.

- Support for industry-standard management protocols: IPMI 2.0, SNMP 3.0, Redfish REST API, and serial console via IPMI.

- The SC777 V4 is enabled with the Lenovo HPC & AI Software Stack, so you can support multiple users and scale within a single cluster environment.

- The Lenovo HPC & AI Software Stack provides HPC customers with a fully tested and supported open-source software stack that enables administrators and users to make the most effective and environmentally sustainable use of Lenovo supercomputing capabilities.

- Our Confluent management system provides an interface designed to abstract the users from the complexity of HPC cluster orchestration and AI workloads management, making open-source HPC software consumable for every customer.

- Integrated Trusted Platform Module (TPM) 2.0 support enables advanced cryptographic functionality, such as digital signatures and remote attestation.

- Supports Secure Boot to ensure only a digitally signed operating system can be used.

- Industry-standard Advanced Encryption Standard (AES) NI support for faster, stronger encryption.

- With the System Management Module (SMM) installed in the enclosure, only one Ethernet connection is needed to provide remote systems management functions for all SC777 V4 servers and the enclosure.

- The SMM3 management module has two Ethernet ports, which allow a single Ethernet connection to be daisy chained across up to 99 connections (for example, 3 enclosures and 96 servers), thereby significantly reducing the number of Ethernet switch ports needed to manage an entire rack of SC777 V4 servers and N1380 enclosures.

- The N1380 enclosure and its power conversion stations include drip sensors that monitor the inlet and outlet manifold quick connect couplers; leaks are reported via the SMM.
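As an illustration of the Redfish REST API support listed above, the following sketch shows how a client might list the systems exposed by a management controller. It relies only on conventions from the DMTF Redfish standard (a `Systems` collection under `/redfish/v1/` whose `Members` carry `@odata.id` links). The host name, credentials, and sample payload are hypothetical, and the exact resources exposed by XCC3 are not documented in this guide.

```python
import base64
import json
import ssl
import urllib.request
from typing import Any, Dict, List, Optional


def basic_auth_header(user: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def fetch_json(url: str, user: str, password: str,
               context: Optional[ssl.SSLContext] = None) -> Dict[str, Any]:
    """GET a Redfish resource and decode its JSON body."""
    request = urllib.request.Request(url, headers={
        "Authorization": basic_auth_header(user, password),
        "Accept": "application/json",
    })
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


def member_paths(collection: Dict[str, Any]) -> List[str]:
    """Extract the @odata.id link of every member of a Redfish collection."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


# Illustrative payload shaped like a Redfish collection (not captured from XCC3).
sample_collection = {
    "@odata.id": "/redfish/v1/Systems",
    "Members": [{"@odata.id": "/redfish/v1/Systems/1"}],
    "Members@odata.count": 1,
}
print(member_paths(sample_collection))
```

Against a real controller, a call such as `fetch_json("https://xcc.example.com/redfish/v1/Systems", user, password)` would supply the collection. The host name here is a placeholder, and certificate handling should follow local security policy.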

Availability and serviceability

The SC777 V4 node and N1380 enclosure provide the following features to simplify serviceability and increase system uptime:

- Designed to run 24 hours a day, 7 days a week

- With full water cooling, system fans are not required. This results in significantly reduced noise levels on the data center floor, a significant benefit to personnel having to work on site.

- Depending on the configuration and node population, the N1380 enclosure supports N+1 with oversubscription power policy for its power conversion stations, which means greater system uptime.

- Power conversion stations are hot-swappable to minimize downtime.

- Toolless cover removal on the nodes provides easy access to upgrades and serviceable parts such as adapters and drives.

- The XCC offers optional remote management capability and can enable remote keyboard, video, and mouse (KVM) control and remote media for the node.

- Built-in diagnostics in UEFI, using Lenovo XClarity Provisioning Manager, speed up troubleshooting tasks to reduce service time.

- Lenovo XClarity Provisioning Manager supports diagnostics and can save service data to a USB key drive or remote CIFS share folder to aid troubleshooting and reduce service time.

- Auto restart in the event of a momentary loss of AC power (based on power policy setting in the XClarity Controller service processor)

- Virtual reseat is a supported feature of the System Management Module that, in essence, disconnects the node from AC power and then reconnects it, all from a remote location.

- There is a three-year customer replaceable unit and onsite limited warranty, with next business day 9x5 coverage. Optional warranty upgrades and extensions are available.

View in Augmented Reality

View the SC777 V4 in augmented reality (AR) using your smartphone or tablet.

Simply follow these steps:

- Scan the QR code* with the camera app on your phone

- Point your phone at a flat surface

- Wait a few seconds for the model to appear

Once the server appears, you can move your phone around it. You can also drag or rotate the server to reposition it.

For more information about the AR viewer, see the article "Introducing the Augmented Reality Viewer for Lenovo Servers", available from https://lenovopress.lenovo.com/lp1952

* If you're viewing this document on your phone or tablet, simply tap the QR code

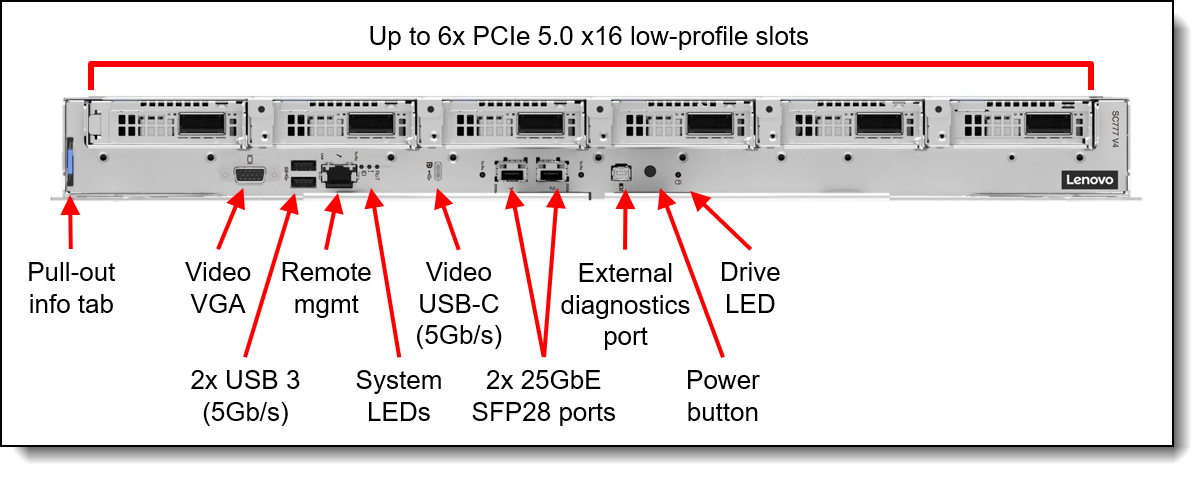

Components and connectors

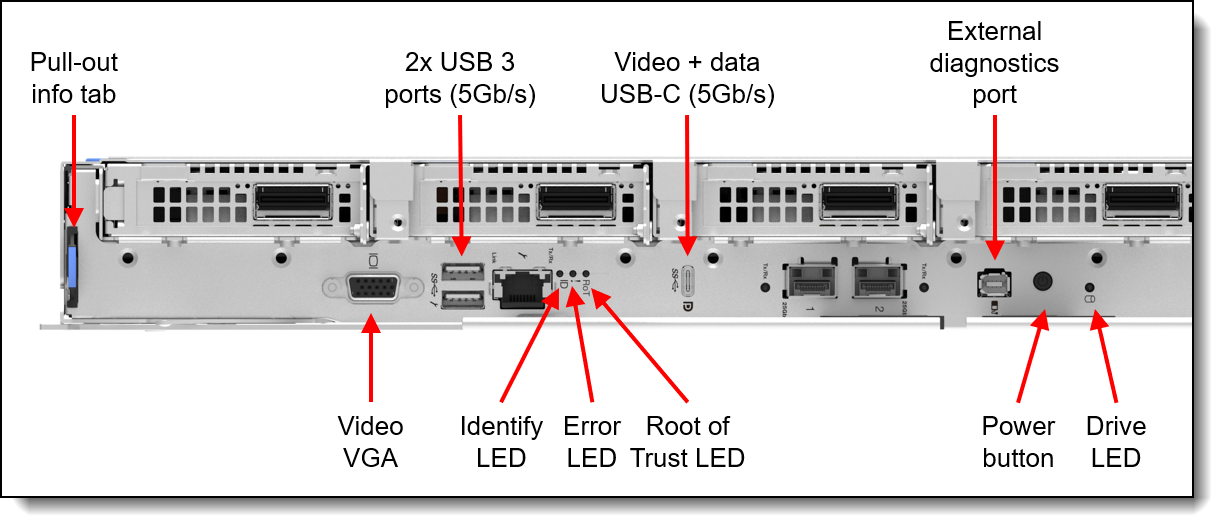

The front of the server is shown in the following figure.

Figure 2. Front view of the ThinkSystem SC777 V4

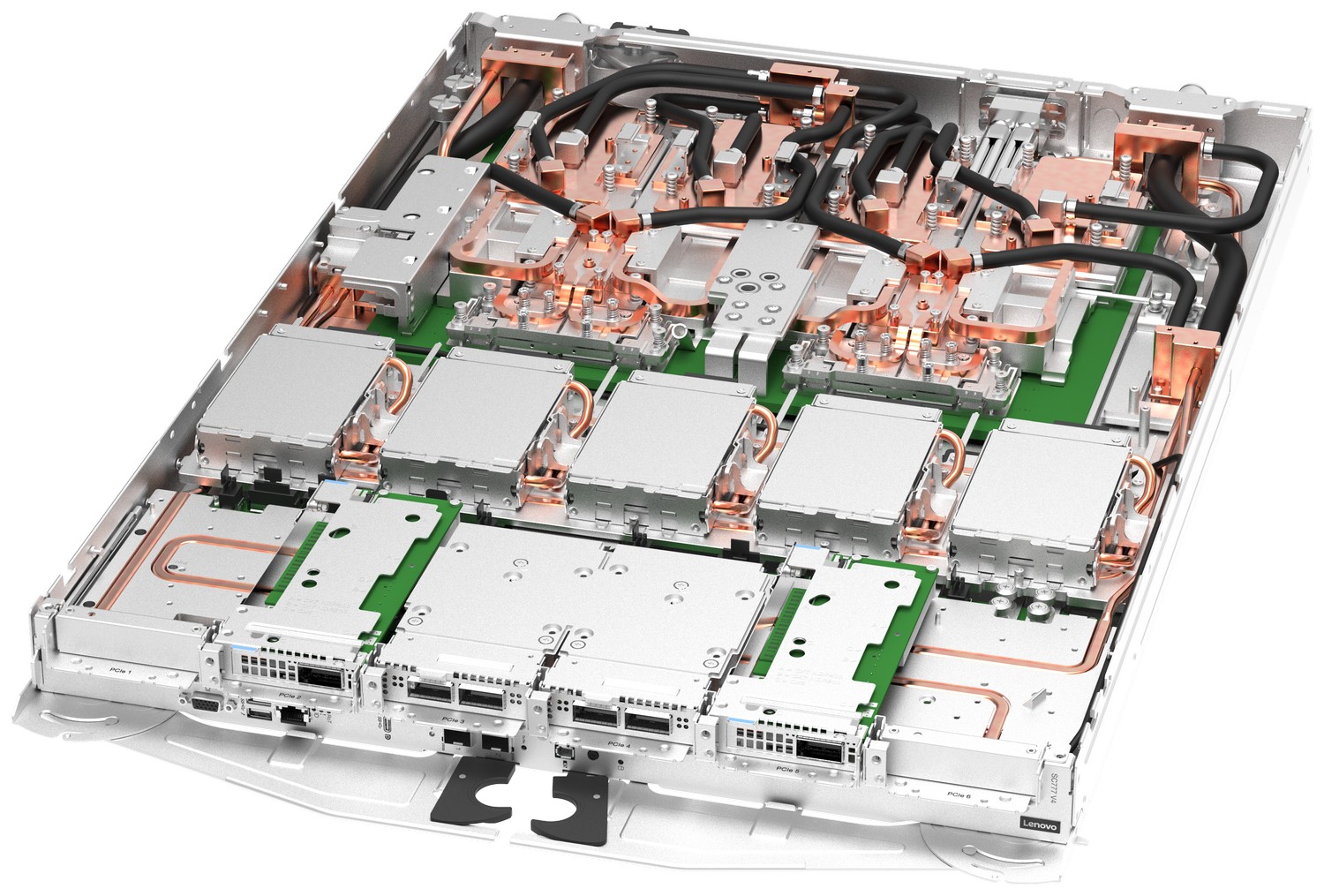

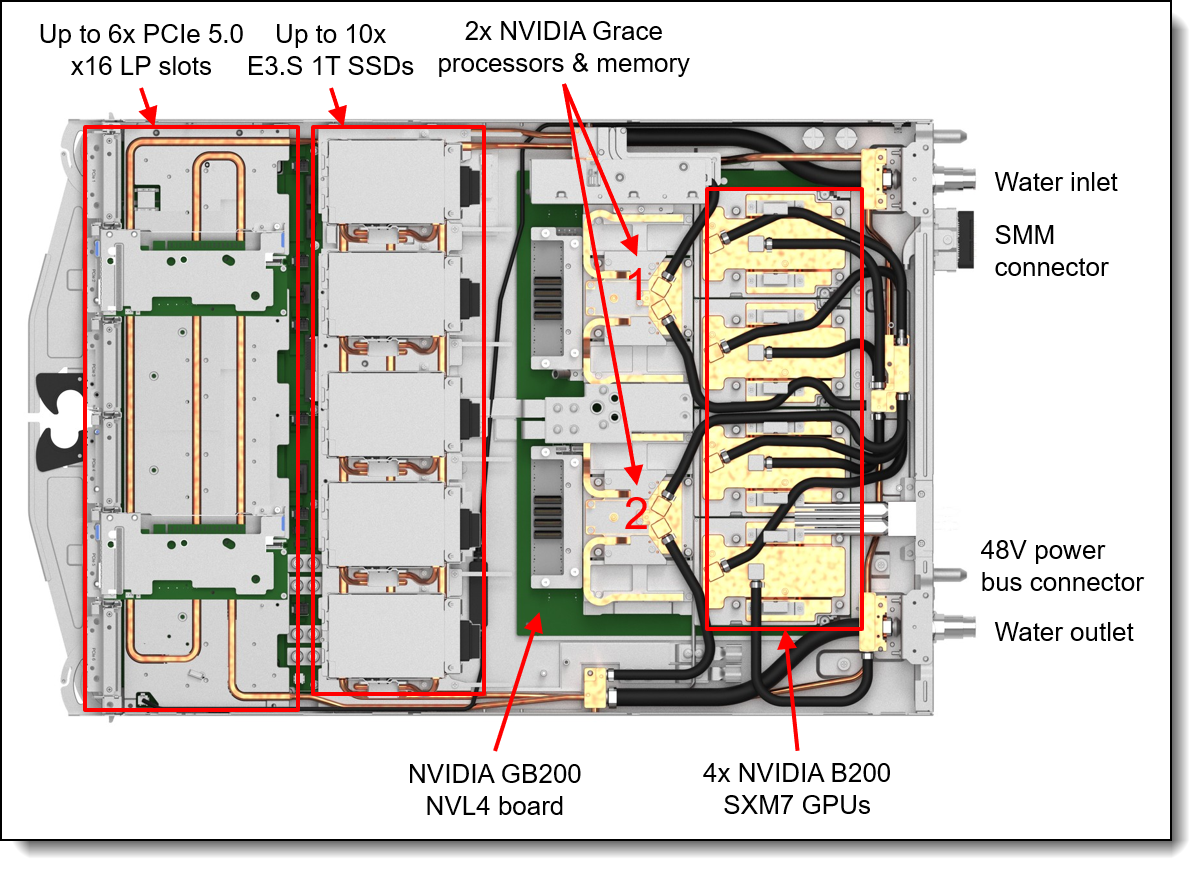

The following figure shows key components internal to the server.

Figure 3. Inside view of the SC777 V4 server in the water-cooled tray

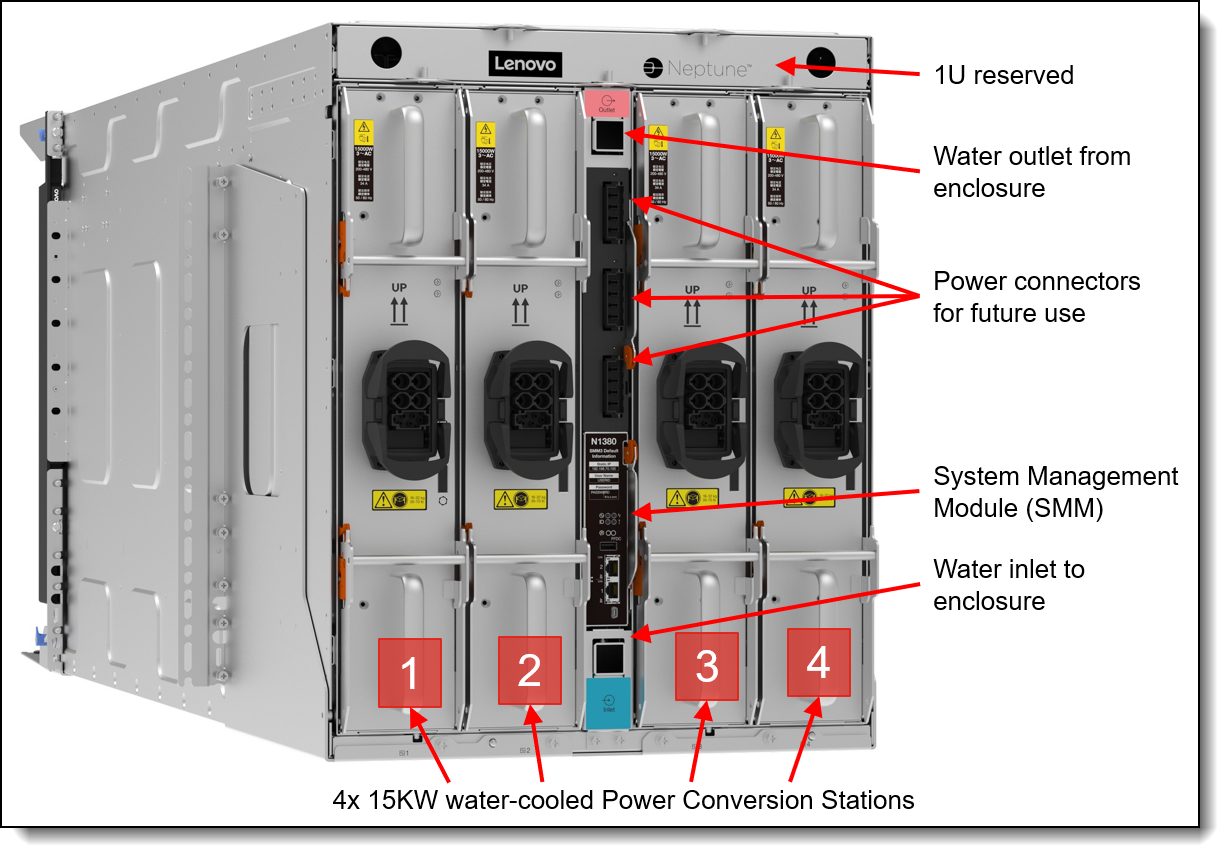

The compute nodes are installed vertically in the ThinkSystem N1380 enclosure, as shown in the following figure.

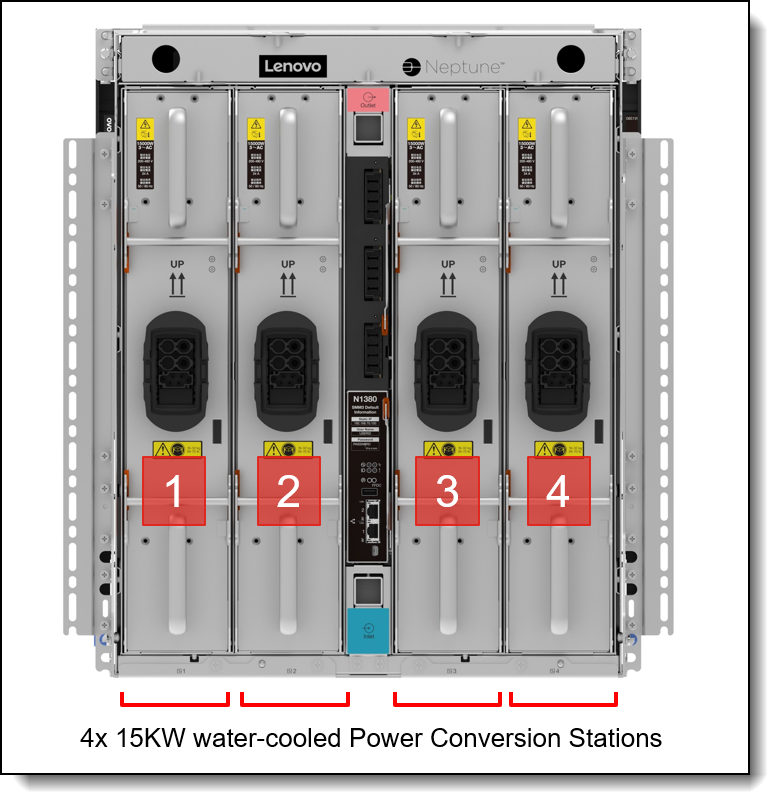

The rear of the N1380 enclosure contains the 4x water-cooled power conversion stations (PCS), water connections, and the System Management Module.

Note: The short hoses that are attached to the water inlet and water outlet have been removed from the figure for clarity.

Figure 5. Rear view of the N1380 enclosure (water hoses removed for clarity)

System architecture

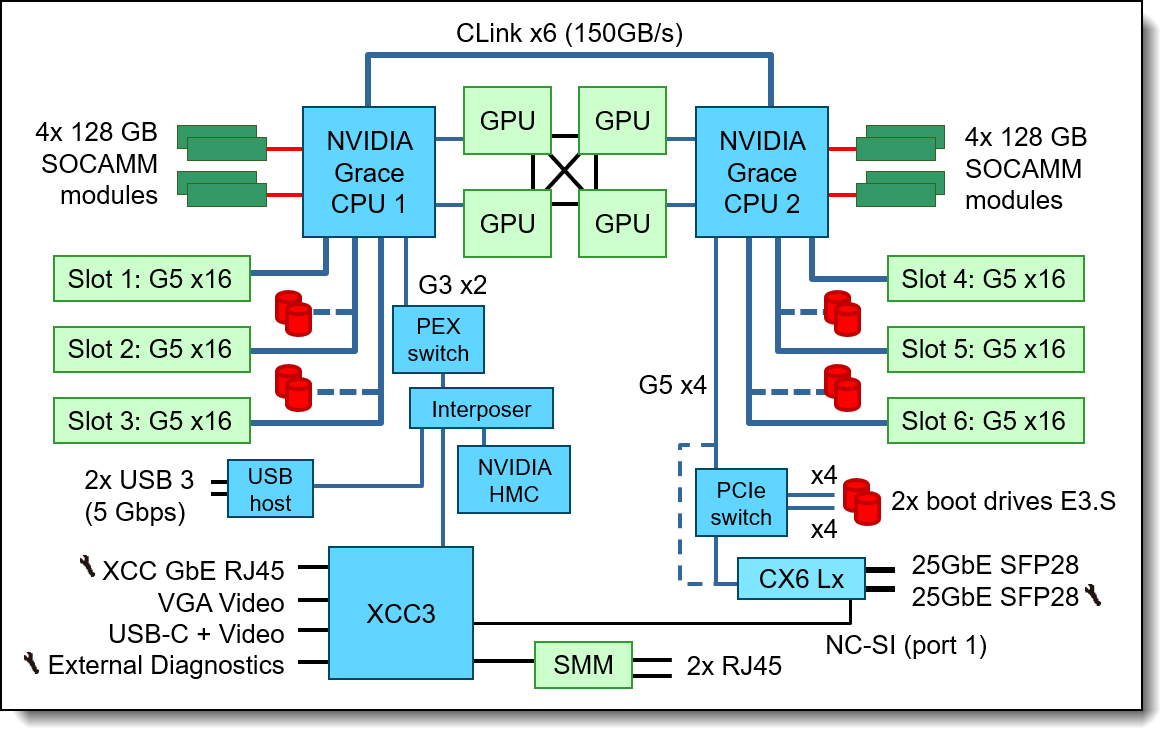

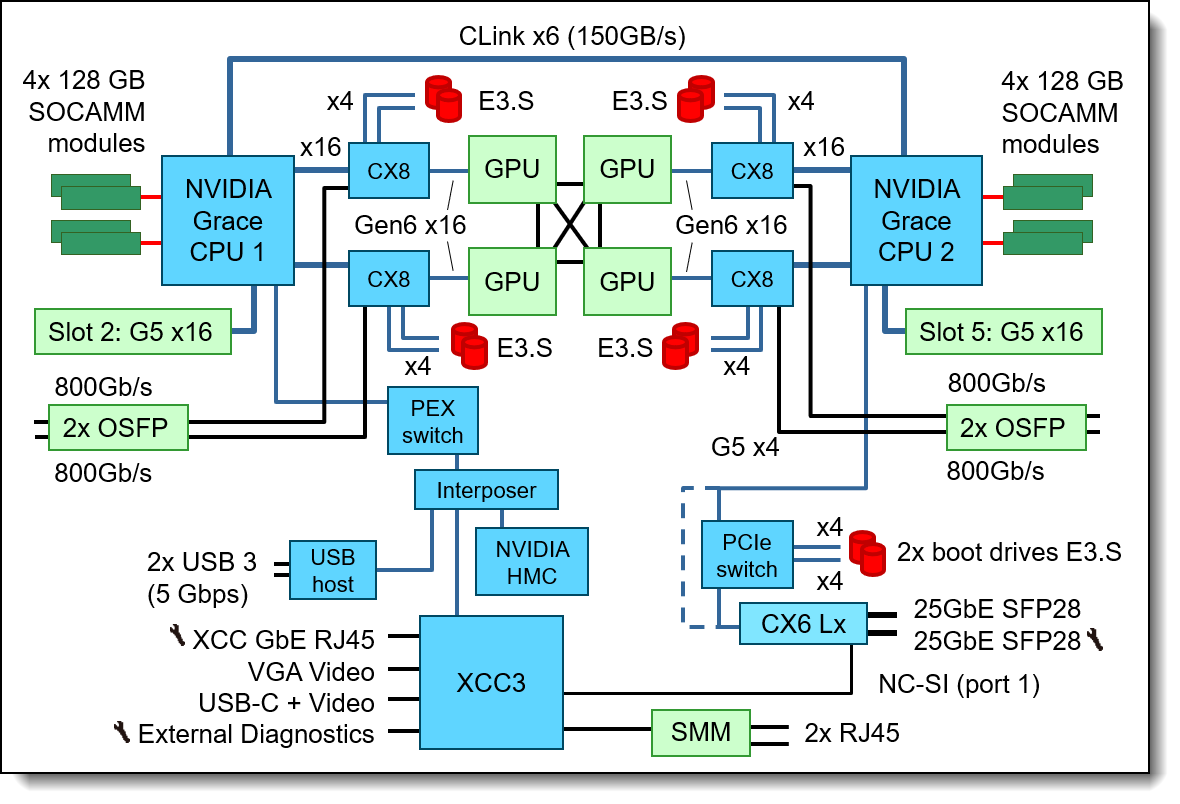

The SC777 V4 is offered in two primary configurations:

- HPC and AI for Science workloads

- AI Training workloads

The following figure shows the architectural block diagram of the SC777 V4 best suited to HPC and AI for Science workloads. This configuration offers 4x GPUs and 6x PCIe Gen5 x16 slots all directly connected to the CPUs. This configuration optimizes network connectivity while maximizing computational efficiency, ensuring cost-effectiveness in performance.

Figure 6. SC777 V4 system architectural block diagram - HPC and AI for Science workloads

The following figure shows the architectural block diagram of the SC777 V4 best suited to AI Training workloads. This configuration implements GPUDirect by connecting the GPUs, 800Gb/s OSFP network interfaces and drives all together using ConnectX-8 switches. The AI Training configuration is optimized for scale-out GPU performance which provides advantages in large AI training workloads.

Figure 7. SC777 V4 system architectural block diagram - AI Training workloads

For more information about the two configurations, see the Configurations section.

Standard specifications - SC777 V4 tray

The following table lists the standard specifications of the SC777 V4 server tray.

| Components | Specification |
|---|---|
| Machine type | 7DKA - 3-year warranty; 7DMP - 5-year warranty |
| Form factor | NVIDIA GB200-NVL4 architecture system in a water-cooled server tray, installed vertically in an enclosure |
| Enclosure support | ThinkSystem N1380 enclosure |
| Processor | Two NVIDIA Grace processors, each with 72 Arm Neoverse V2 cores, a base frequency of 3.1 GHz, and a TDP rating of 300W. Supports PCIe 5.0 for high performance connectivity to network adapters and NVMe drives. |
| Chipset | None. Integrated into the processor. |
| GPUs | Four NVIDIA Blackwell B200 GPUs per tray, each with 186 GB HBM3e memory |
| CPU memory | Up to 480GB of LPDDR5X ECC memory per processor, memory operates at 4266 MHz |
| Memory maximum | 960GB per node |
| Memory protection | ECC with Single Error Correction (SEC), Double Error Detection (DED), parity, scrubbing, poisoning, and page off-lining |
| Disk drive bays | Each node supports up to 10x EDSFF E3.S 1T NVMe SSDs, installed in 5 internal drive bays (non-hot-swap). Each drive has a PCIe x4 host interface. |
| Maximum internal storage | 153.6TB using 10x 15.36TB E3.S NVMe SSDs |
| Storage controllers | Onboard NVMe ports (RAID provided by operating system, if desired) |
| Optical drive bays | None |
| Network interfaces | 2x 25 Gb Ethernet SFP28 onboard connectors based on NVIDIA ConnectX-6 Lx controller (support 10/25Gb). 25Gb port 1 can optionally be shared with the XClarity Controller 3 (XCC3) management processor for Wake-on-LAN and NC-SI support. |
| PCIe slots | Up to 6x PCIe 5.0 x16 slots supporting low-profile PCIe adapters |
| Ports | External diagnostics port, VGA video port, USB-C port for both video (DisplayPort) and data (5 Gb/s), 2x USB 3 (5 Gb/s) ports, RJ-45 1GbE systems management port for XCC remote management. Additional ports provided by the enclosure as described in the Enclosure specifications section. |
| Video | Embedded graphics with 16 MB memory with 2D hardware accelerator, integrated into the XClarity Controller 3 management controller. Two video ports (VGA port and USB-C DisplayPort video port); both can be used simultaneously if desired. Maximum resolution is 1920x1200 32bpp at 60Hz. |
| Security features | Power-on password, administrator's password, Trusted Platform Module (TPM), supporting TPM 2.0. |
| Systems management | Operator panel with status LEDs. Optional External Diagnostics Handset with LCD display. XClarity Controller 3 (XCC3) embedded management based on the ASPEED AST2600 baseboard management controller (BMC), XClarity Administrator centralized infrastructure delivery. Optional XCC3 Premier to enable remote control functions and other features. Lenovo power/energy meter based on TI INA228 for 100Hz power measurements with >97% accuracy. System Management Module (SMM3) in the N1380 enclosure provides additional systems management functions, from power monitoring to liquid leakage detection at the chassis, tray, and power conversion station level. |
| Operating systems supported | Ubuntu is Supported & Certified. See the Operating system support section for details and specific versions. |
| Limited warranty | Three-year or five-year customer-replaceable unit and onsite limited warranty with 9x5 next business day (NBD). |
| Service and support | Optional service upgrades are available through Lenovo Services: 4-hour or 2-hour response time, 6-hour fix time, 1-year or 2-year warranty extension, software support for Lenovo hardware and some third-party applications. |
| Dimensions | Width: 546 mm (21.5 inches), height: 53 mm (2.1 inches), depth: 760 mm (29.9 inches) |
| Weight | 38 kg (83.8 lbs) |

Standard specifications - N1380 enclosure

The ThinkSystem N1380 enclosure provides shared high-power and high-efficiency power conversion stations. The SC777 V4 servers connect to the midplane of the N1380 enclosure. This midplane connection is for power (via a 48V busbar) and control only; the midplane does not provide any I/O connectivity.

The following table lists the standard specifications of the enclosure.

| Components | Specification |
|---|---|
| Machine type | 7DDH - 3-year warranty |
| Form factor | 13U rack-mounted enclosure |
| Maximum number of SC777 V4 nodes supported | Up to 8x server nodes per enclosure |
| Node support | ThinkSystem SC750 V4; ThinkSystem SC777 V4 |
| Enclosures per rack | Up to three N1380 enclosures per 42U or 48U rack |
| System Management Module (SMM) | The hot-swappable System Management Module (SMM3) is the management device for the enclosure. Provides integrated systems management functions and controls the power and cooling features of the enclosure. Provides remote browser and CLI-based user interfaces for remote access via the dedicated Gigabit Ethernet port. Remote access is to both the management functions of the enclosure as well as the XClarity Controller 3 (XCC3) in each node. The SMM has two Ethernet ports, which enable a single incoming Ethernet connection to be daisy chained across 3 enclosures and 48 nodes, thereby significantly reducing the number of Ethernet switch ports needed to manage an entire rack of SC777 V4 nodes and enclosures. |
| Ports | Two RJ45 ports on the rear of the enclosure for 10/100/1000 Ethernet connectivity to the SMM3 for power and cooling management. |
| I/O architecture | None integrated. Use top-of-rack networking and storage switches. |
| Power supplies | Up to 4x water-cooled hot-swap power conversion stations (PCS), depending on the power policy and the power requirements of the installed server node trays. PCS units are installed at the rear of the enclosure. Single power domain supplies power to all nodes. Optional redundancy (N+1 or N+N) and oversubscription, depending on configuration and node population. 80 PLUS Titanium compliant. Built-in overload and surge protection. |
| Cooling | Direct water cooling supplied by water hoses connected to the rear of the enclosure. |
| System LEDs | SMM has four LEDs: system error, identification, status, and system power. Each power conversion station (PCS) has AC, DC, and error LEDs. Nodes have more LEDs. |
| Systems management | Browser-based enclosure management through an Ethernet port on the SMM at the rear of the enclosure. Integrated Ethernet switch provides direct access to the XClarity Controller (XCC) embedded management of the installed nodes. Nodes provide more management features. |
| Temperature | See Operating Environment for more information. |
| Electrical power | 3-phase 200V-480Vac |
| Power cords | Custom power cables for direct data center attachment, 1 dedicated (32A) or 1 shared (63A) per power module |
| Limited warranty | Three-year customer-replaceable unit and onsite limited warranty with 9x5/NBD. |
| Dimensions | Width: 540 mm (21.3 in.), height: 572 mm (22.5 in.), depth: 1302 mm (51.2 in.). See Physical and electrical specifications for details. |
| Weight | |

Models

The SC777 V4 node and N1380 enclosure are configured by using the configure-to-order (CTO) process with the Lenovo Cluster Solutions configurator (x-config) or in Lenovo Data Center Solution Configurator (DCSC).

DCSC users: To configure SC777 V4 and N1380 in DCSC, you will need to be in AI & HPC – ThinkSystem Hardware mode. General Purpose mode cannot be used to configure these systems.

The following table lists the base CTO models and base feature codes.

The ThinkSystem SC777 V4 node is equipped with high-performance GPUs. It falls under classification 5A992.z per U.S. Government Export regulations, and its market and customer availability are controlled, potentially necessitating an export license.

Configurations

There are two primary configurations offered with the SC777 V4. Block diagrams for these configurations are shown in the System architecture section.

HPC and AI for Science configuration:

- 4x B200 GPU Modules directly connected to processors with x16 interface (2 to each processor)

- Network connectivity options:

  - Up to 6x ConnectX-7 (single-port 400Gb adapters or dual-port 200Gb adapters), each with a Gen5 x16 connection

  - Up to 3x ConnectX-8 (single-port 800Gb adapters), each with a Gen5 x32 connection (two x16 connections)

- Up to 8x E3.S 1T NVMe drives for data storage, directly connected to a processor (4 to each processor), with a x4 host interface

- PCIe adapters and drives are mutually exclusive; supported combinations are as follows:

  - 2x drives can be used with 5x adapters

  - 4x drives can be used with 4x adapters

  - 6x drives can be used with 3x adapters

  - 8x drives can be used with 2x adapters

- 2x E3.S 1T NVMe drives for OS boot, connected via a switched x4 host interface to CPU 2

- 2x 25Gb Ethernet SFP28 onboard connectors based on Mellanox ConnectX-6 Lx controller
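The supported drive and adapter combinations for the HPC and AI for Science configuration can be captured in a small lookup, which may be handy when sanity-checking a planned build before using the configurator. This is a sketch only: the names are illustrative, the zero-drive case is inferred from the "up to 6x" adapter limit, and treating the listed adapter counts as maximums (fewer adapters also being acceptable) is an assumption. Drive counts not listed in this guide are rejected rather than guessed.

```python
# Total PCIe x16 slots in the HPC and AI for Science configuration.
TOTAL_SLOTS = 6

# Data drives -> adapters, as listed in this guide.
MAX_ADAPTERS_BY_DATA_DRIVES = {2: 5, 4: 4, 6: 3, 8: 2}


def max_adapters(data_drives: int) -> int:
    """Return the adapter count paired with a given number of data drives."""
    if data_drives == 0:
        # Assumption: with no data drives, all six slots are available.
        return TOTAL_SLOTS
    try:
        return MAX_ADAPTERS_BY_DATA_DRIVES[data_drives]
    except KeyError:
        raise ValueError(
            f"{data_drives} data drives is not a listed combination"
        ) from None


def is_supported(data_drives: int, adapters: int) -> bool:
    """Check a planned build against the listed combinations.

    Assumption: any adapter count up to the listed value is acceptable.
    """
    return 0 <= adapters <= max_adapters(data_drives)


for drives in (0, 2, 4, 6, 8):
    print(f"{drives} data drives -> up to {max_adapters(drives)} adapters")
```

The listed pairs follow a simple pattern: each additional pair of data drives gives up one x16 slot. Note that a ConnectX-8 adapter with a Gen5 x32 connection uses two x16 connections, so it would count as two in this tally.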

AI Training configuration:

- 4x B200 GPU Modules connected to processors with x16 interface (2 to each processor)

- 4x ConnectX-8 I/O modules to enable high-speed PCIe Gen6 x16 GPUDirect connectivity to the GPUs and Gen5 x16 connectivity to the processors

- Each ConnectX-8 switch has directly connected to it:

  - 1x B200 GPU Module via Gen6 x16

  - 1x Grace processor via Gen5 x16

  - 1x 800 Gb OSFP network connection

  - 2x E3.S drives for storage

- Additional network connectivity options:

  - 2x ConnectX-7 (single-port 400Gb adapters or dual-port 200Gb adapters), each with a Gen5 x16 connection

  - 1x ConnectX-8 (single-port 800Gb adapter), with a Gen5 x32 connection (two x16 connections)

- 2x E3.S 1T NVMe drives for OS boot, connected via a switched x4 host interface to CPU 2

- 2x 25Gb Ethernet SFP28 onboard connectors based on Mellanox ConnectX-6 Lx controller

The feature codes listed in the following table form the building blocks used to create the above configurations.

Configurator tips:

- The AI Training configuration is the default in DCSC, feature C8WZ

- To configure the server for HPC and AI for Science, do the following:

  - In the PCI > Network Module section, change Network Module from C8WZ to None

  - In the PCI > InfiniBand Adapter section, select up to 6 network adapters to be installed in the PCIe slots

  - Feature C8X1 will automatically be derived

NVIDIA GB200 NVL4 HPM

The SC777 V4 leverages two NVIDIA GB200 NVL4 host processor motherboards (HPMs) per tray that integrate processors, memory and GPU accelerators in a powerful package.

Processors

Each of the NVIDIA GB200 NVL4 HPMs includes one NVIDIA Grace processor, as part of the NVIDIA GB200 NVL4 module. The processors are standard in all configurations of the SC777 V4.

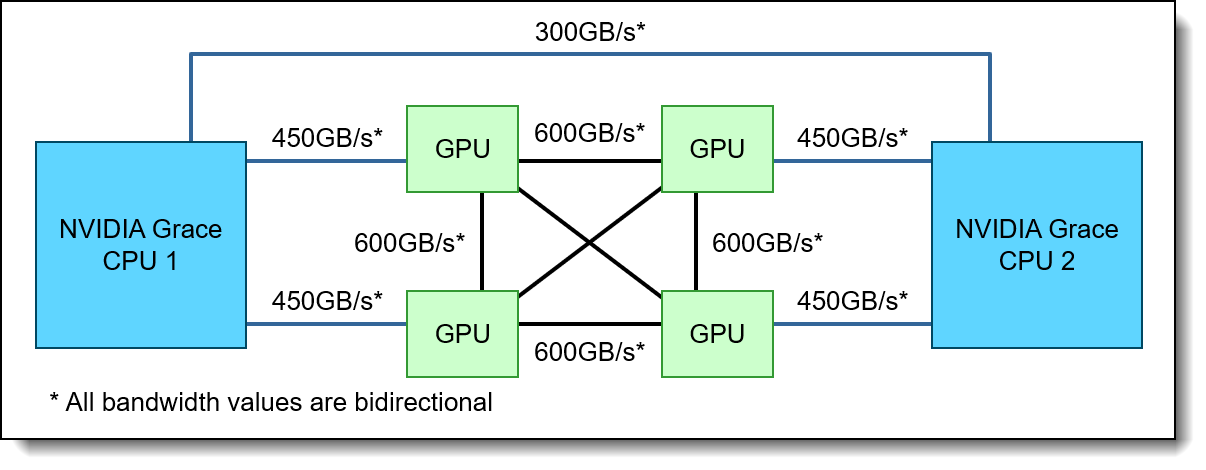

Each processor has the following features:

- 72 Arm Neoverse V2 cores with 4x 128b SVE2

- 3.1 GHz base frequency

- 3.0 GHz all-core SIMD frequency

- L1 cache: 64KB i-cache + 64KB d-cache

- L2 cache: 1MB per core

- L3 cache: 114MB

- 64 PCIe Gen5 lanes (implemented as 8x x8)

- 300 GB/s bi-directional NVLink-C2C connectivity between processors

- 450 GB/s bi-directional NVLink connectivity to two GPUs each

- NVIDIA Scalable Coherency Fabric (SCF) with 3.2 terabytes/s bisection bandwidth interconnecting Cores, Memory and IO

- 300W TDP (includes power for memory)

The NVIDIA Grace processor is integrated using Ball Grid Array (BGA) technology and is soldered onto the NVIDIA GB200 NVL4 HPM board. To replace the CPU, it is necessary to replace the entire module.

Memory

The NVIDIA GB200 NVL4 HPM boards incorporate processor memory via LPDDR5X modules.

Features of the memory subsystem are as follows:

- 960GB of LPDDR5X ECC memory in a fixed configuration, 480GB per processor

- Implemented using 8x 128GB modules (4 per CPU)

- Peak memory bandwidth per CPU: Up to 384 GB/s

- Memory clock per CPU: 4266MT/s

The Single Error Correction (SEC) and Double Error Detection (DED) algorithms as part of the ECC are designed to mitigate multi-bit aliasing and correct errors effectively. Furthermore, features such as parity, scrubbing, poisoning, and page off-lining are implemented to enhance Reliability, Availability, and Serviceability (RAS).

GPU accelerators

The NVIDIA GB200 NVL4 HPM boards support four onboard NVIDIA B200 GPUs. The following table lists the specifications for each GPU and for the full package. Measured performance will vary by application.

* With structural sparsity enabled

The following figure shows how the GPUs interconnect.

Internal storage

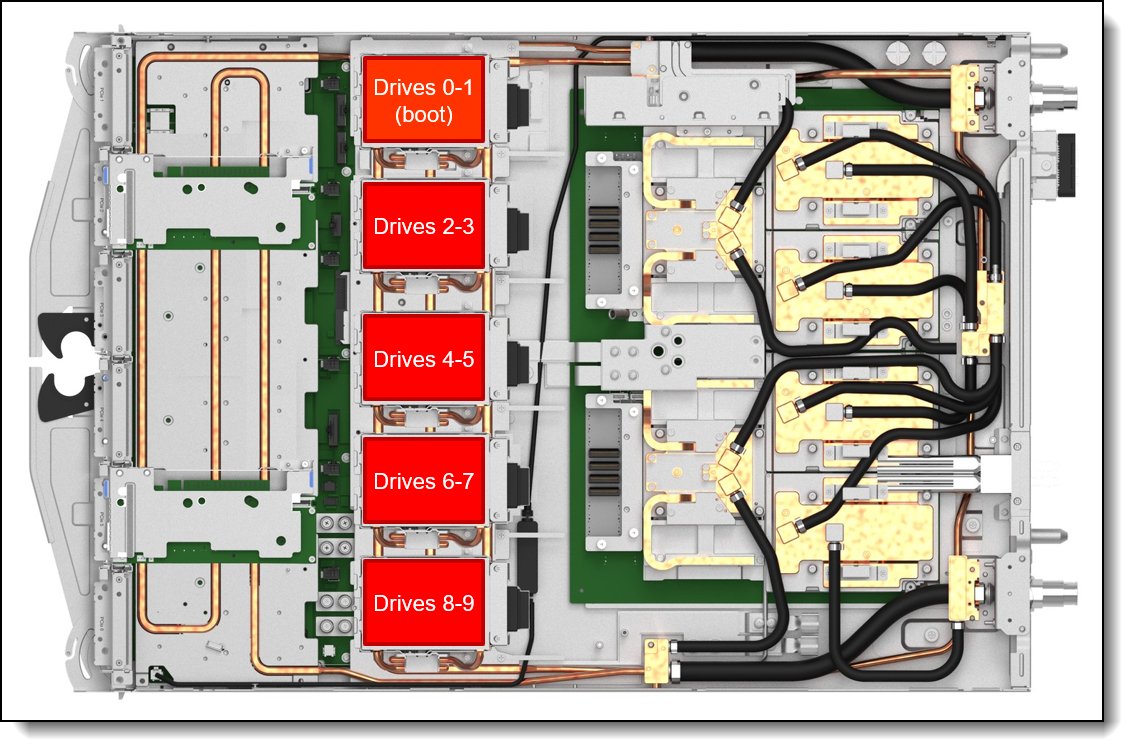

The SC777 V4 node supports up to 10x E3.S 1T SSDs. These are internal drives - they are not front accessible and are non-hot-swap.

- 8x drives for data storage

- 2x drives for OS boot, optional; feature C51T (ThinkSystem SC777 V4 Neptune PCIe Switch Board for Boot Drives) required

The drives are located as shown in the figure below.

Figure 9. SC777 V4 internal drive bays

Each of the 5 drive bays supports either of the following:

- 2x E3.S 1T drives

- 1x E3.S 2T drive

Configuration notes:

- The node supports only E3.S NVMe drives

- The drives are connected to onboard controllers; RAID functionality is provided using the operating system features

- Boot drives are the same as other drives but use switched PCIe and may have lower performance. The boot drive kit (feature C51T) is only required when a total of 10 drives are installed. The boot drive kit is not field upgradable.

- In the HPC and AI for Science configuration (see the Configurations section), the same PCIe lanes can be used either for PCIe adapters or for NVMe drives. The following table lists the supported combinations.

- In the AI Training configuration (see the Configurations section), there is no restriction regarding the numbers of drives

- NVMe drives are connected to CPUs as follows:

  - Drives 0-1 are boot drives connected to CPU 2

  - Drives 2-5 are data storage drives connected to CPU 2

  - Drives 6-9 are data storage drives connected to CPU 1
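The drive-to-CPU mapping above matters when placing I/O-heavy processes close to their storage. A minimal sketch of that mapping as a lookup follows. The type and function names are illustrative, and the drive numbers are the bay numbers used in this guide, which may not match the device names an operating system assigns.

```python
from typing import Dict, List, NamedTuple


class DriveInfo(NamedTuple):
    role: str  # "boot" or "data"
    cpu: int   # CPU the drive is connected to (1 or 2)


def drive_info(drive: int) -> DriveInfo:
    """Return the role and attached CPU for a drive number (0-9)."""
    if 0 <= drive <= 1:
        return DriveInfo("boot", 2)
    if 2 <= drive <= 5:
        return DriveInfo("data", 2)
    if 6 <= drive <= 9:
        return DriveInfo("data", 1)
    raise ValueError(f"drive number {drive} is outside the range 0-9")


def data_drives_by_cpu() -> Dict[int, List[int]]:
    """Group the data drive numbers by the CPU they are connected to."""
    groups: Dict[int, List[int]] = {1: [], 2: []}
    for drive in range(10):
        info = drive_info(drive)
        if info.role == "data":
            groups[info.cpu].append(drive)
    return groups


print(data_drives_by_cpu())
```

A scheduler or mount script could use such a table to keep a job's scratch space on drives attached to the same processor as the job, assuming the operating system's device enumeration has been matched to these bay numbers.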

The feature codes to select the appropriate storage cage are listed in the following table.

Tip: To configure boot drives, specify feature C51T plus either feature C77X (1x boot drive) or C51U (2x boot drives). See the table below. The boot drive kit is only required when 8 performance and 2 boot drives are installed.

* See the table below for guidance on quantities required

Each drive bay supports 1x or 2x E3.S 1T drives. If a bay is to have only 1 drive, order feature C77X for that bay; if a bay is to have 2 drives, order feature C51U for that bay. The following table lists the required quantities of each drive bay cage.

Controllers for internal storage

The drives of the SC777 V4 are connected to integrated NVMe storage controllers.

If desired, RAID functionality is provided by the installed operating system (software RAID).

Internal drive options

The following tables list the drive options for internal storage of the server.

Trayless drives:

SED support: The tables include a column to indicate which drives support SED encryption. The encryption functionality can be disabled if needed. Note: Not all SED-enabled drives have "SED" in the description.

I/O expansion

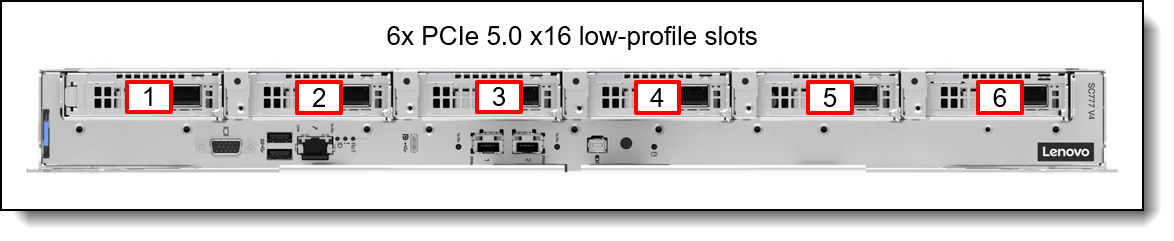

The SC777 V4 node supports up to 6x front-accessible slots, depending on the configuration:

- Slots 1, 2, 3: PCIe 5.0 x16 slots connected to CPU 1

- Slots 4, 5, 6: PCIe 5.0 x16 slots connected to CPU 2

The location of the slots is shown in the following figure.

Figure 10. SC777 V4 PCIe slots

Slot availability is based on the configuration selected.

In the HPC and AI for Science configuration (see the Configurations section), PCIe adapters and data drives share the same PCIe connections to the processors. The following table lists the supported combinations of adapters and drives.

The AI Training configuration (see the Configurations section) supports:

- 2x OSFP interfaces installed in slots 3 and 4 (feature C8X0) (see OSFP 800Gb ports section)

- 2x PCIe adapters in slots 2 and 5
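Combining the slot-to-CPU assignment with the AI Training slot usage shows how the devices are spread across the two processors. The sketch below is illustrative only; the names `slot_cpu`, `AI_TRAINING_SLOTS`, and `devices_per_cpu` are assumptions introduced for this example.

```python
from typing import Dict, List


def slot_cpu(slot: int) -> int:
    """Return the CPU that a front PCIe slot (1-6) is connected to."""
    if slot in (1, 2, 3):
        return 1
    if slot in (4, 5, 6):
        return 2
    raise ValueError(f"slot out of range: {slot}")


# Slot usage in the AI Training configuration, as listed above.
AI_TRAINING_SLOTS: Dict[int, str] = {
    2: "PCIe adapter",
    3: "OSFP interface",
    4: "OSFP interface",
    5: "PCIe adapter",
}


def devices_per_cpu(layout: Dict[int, str]) -> Dict[int, List[str]]:
    """Group the devices in a slot layout by the CPU they attach to."""
    grouped: Dict[int, List[str]] = {1: [], 2: []}
    for slot in sorted(layout):
        grouped[slot_cpu(slot)].append(layout[slot])
    return grouped
```

Under these assumptions, `devices_per_cpu(AI_TRAINING_SLOTS)` places one OSFP interface and one PCIe adapter on each CPU.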

Slots are implemented using 1-slot risers. Ordering information for the risers plus the OSFP interface cage for AI Training configurations is in the following table.

Onboard ports

The SC777 V4 has 2x 25GbE onboard ports. The ports are connected to an onboard NVIDIA ConnectX-6 Lx controller and are implemented with SFP28 cages for optical or copper connections. The ports support 1Gb, 10Gb and 25Gb connections. The locations of these ports are shown in the Components and connectors section.

25GbE Port 1 supports NC-SI for remote management. For factory orders, to specify which ports should have NC-SI enabled, use the feature codes listed in the Remote Management section. If neither is chosen, both ports will have NC-SI disabled by default.

For the specifications of the 25GbE ports including the supported transceivers and cables, see the ConnectX-6 Lx product guide:

https://lenovopress.lenovo.com/lp1364-thinksystem-mellanox-connectx-6-lx-25gbe-sfp28-ethernet-adapters

OSFP 800Gb ports

The AI Training configuration includes two OSFP interface boards (feature C8X0), each providing two OSFP ports. Supported connections are each up to 800 Gb/s (a total of 3200 Gb/s with 4x ports).

The following table lists the supported optical cables.

The following table lists the supported direct-attach copper (DAC) cables.

Network adapters

The SC777 V4 supports up to 6 network adapters installed in the front PCIe slots. The following table lists the supported adapters.

For more information, including the supported transceivers and cables, see the adapter product guides:

Cooling

One of the most notable features of the ThinkSystem SC777 V4 offering is direct water cooling. Direct water cooling (DWC) is achieved by circulating the cooling water directly through cold plates that contact the CPU thermal case, DIMMs, drives, adapters, and other high-heat-producing components in the node.

One of the main advantages of direct water cooling is that the water can be relatively warm and still be effective because water conducts heat much more effectively than air. Depending on environmental conditions such as water and air temperature, effectively 100% of the heat can be removed by water cooling; in configurations that stay slightly below that, the rest can be easily managed by a standard computer room air conditioner.

Allowable inlet temperatures for the water can be as high as 45°C (113°F) with the SC777 V4 for real-world applications. In most climates, water-side economizers can supply water at temperatures below 45°C for most of the year. This ability allows the data center chilled water system to be bypassed, which saves energy because the chiller is the most significant energy consumer in the data center. Typical economizer systems, such as dry-coolers, use only a fraction of the energy that is required by chillers, which produce 6-10°C (43-50°F) water. The facility energy savings are the largest component of the total energy savings that are realized when the SC777 V4 is deployed.

The advantages of the use of water cooling over air cooling result from water’s higher specific heat capacity, density, and thermal conductivity. These features allow water to transmit heat over greater distances with much less volumetric flow and reduced temperature difference as compared to air.

For cooling IT equipment, this heat transfer capability is its primary advantage. Water has a tremendously increased ability to transport heat away from its source to a secondary cooling surface, which allows for large, more optimally designed radiators or heat exchangers rather than small, inefficient fins that are mounted on or near a heat source, such as a CPU.
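The effect of water's higher density and specific heat capacity on required flow can be estimated with the standard heat transport relation Q = ρ · V̇ · c_p · ΔT. The sketch below is illustrative only: the fluid properties are approximate textbook values near room temperature (not taken from this guide), and the 10 kW load and 10 K temperature rise are hypothetical inputs.

```python
# Approximate fluid properties near room temperature (textbook values,
# assumed for illustration; not taken from the product guide).
WATER = {"density_kg_m3": 997.0, "cp_j_kg_k": 4180.0}
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}


def volumetric_flow_m3_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Flow needed to carry heat_w with a coolant temperature rise of delta_t_k.

    Uses Q = rho * V_dot * c_p * delta_T, solved for V_dot.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (fluid["density_kg_m3"] * fluid["cp_j_kg_k"] * delta_t_k)


if __name__ == "__main__":
    heat_w = 10_000.0   # hypothetical 10 kW heat load
    delta_t_k = 10.0    # hypothetical 10 K coolant temperature rise
    water = volumetric_flow_m3_s(heat_w, delta_t_k, WATER)
    air = volumetric_flow_m3_s(heat_w, delta_t_k, AIR)
    print(f"water: {water * 60_000:.1f} L/min")
    print(f"air:   {air * 60_000:.0f} L/min")
    print(f"air needs about {air / water:.0f}x the volumetric flow")
```

With these assumed properties, air needs a volumetric flow several thousand times larger than water to carry the same heat at the same temperature rise, which is the quantitative basis for the comparison above.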

The SC777 V4 offering uses the benefits of water by distributing it directly to the highest heat-generating node subsystem components. By doing so, the offering realizes 7% - 10% direct energy savings when compared to an air-cooled equivalent. That energy savings results from the removal of the system fans and the lower operating temperature of the direct water-cooled system components.

The direct energy savings at the enclosure level, combined with the potential for significant facility energy savings, make the SC777 V4 an excellent choice for customers that are burdened by high energy costs or that have a sustainability mandate.

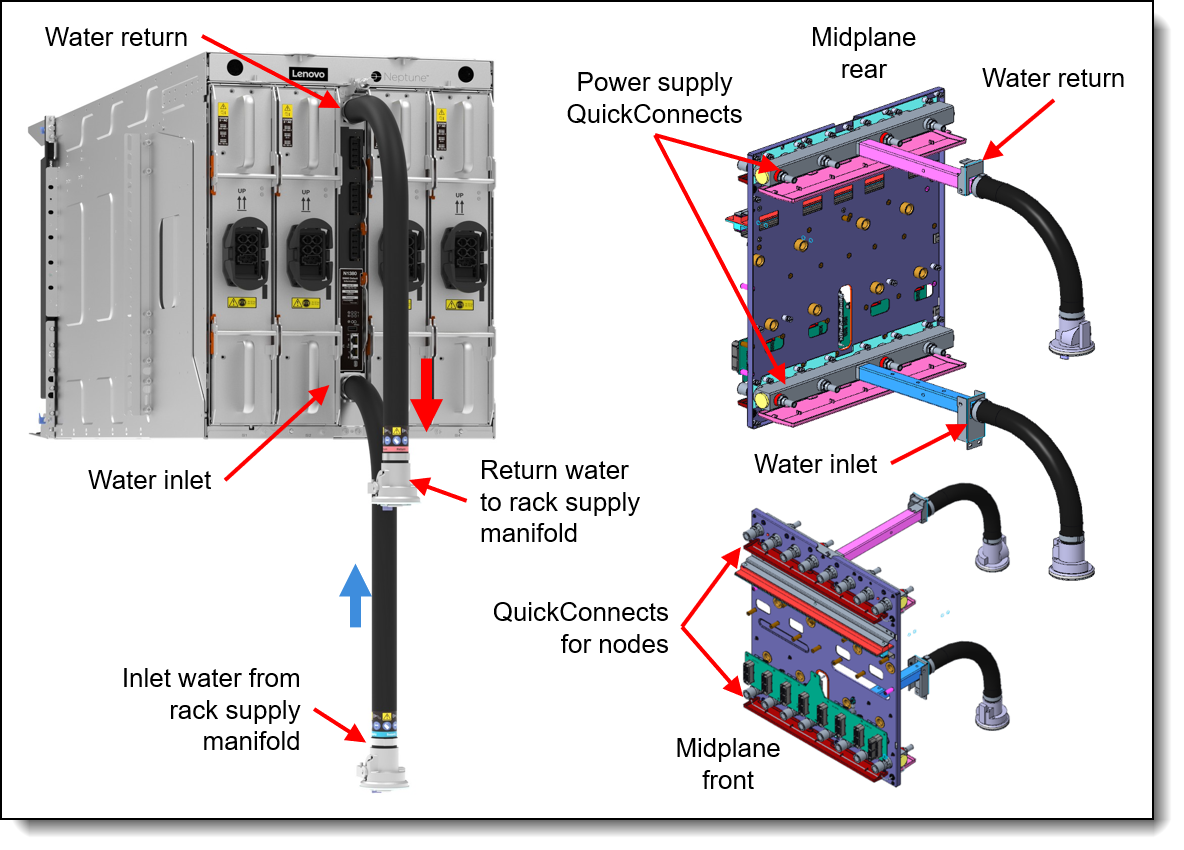

Water is delivered to the enclosure from a coolant distribution unit (CDU) via water hoses, inlet and return. As shown in the following figure, each enclosure connects to the CDU via rack supply hoses.

The midplane in the enclosure routes the water via QuickConnects to each of the eight nodes, and each of the four power conversion stations.

Figure 11. N1380 enclosure water connections

The water flows through the SC777 V4 tray to cool all major heat-producing components. The inlet water is split into two parallel paths, one for each node in the tray. Each path is then split further to cool the processors, memory, drives, and adapters.

During the manufacturing and test cycle, Lenovo’s water-cooled nodes are pressure tested with helium according to ASTM E499 / E499M – 11 (Standard Practice for Leaks Using the Mass Spectrometer Leak Detector in the Detector Probe Mode) and later again with nitrogen. Because helium and nitrogen have smaller molecule sizes, these tests can detect micro-leaks that may be undetectable by pressure testing with water or a water/glycol mixture.

This approach also allows Lenovo to ship the systems pressurized without needing to send hazardous antifreeze-components to our customers.

Onsite, the materials used within the water loop from the CDU to the nodes should be limited to copper alloys with brazed joints, stainless steels with TIG and MIG welded joints, and EPDM rubber. In some instances, PVC might be an acceptable choice within the facility.

The water the system is filled with must be reasonably clean, bacteria-free water (< 100 CFU/ml) such as de-mineralized water, reverse osmosis water, de-ionized water, or distilled water. It must be filtered with an in-line 50-micron filter. Biocide and corrosion inhibitors ensure clean operation without microbiological growth or corrosion. For details about the implementation of Lenovo Neptune water cooling, see the document Lenovo Neptune Direct Water-Cooling Standards.

Lenovo Data Center Power and Cooling Services can support you in the design, implementation and maintenance of the facility water-cooling infrastructure.

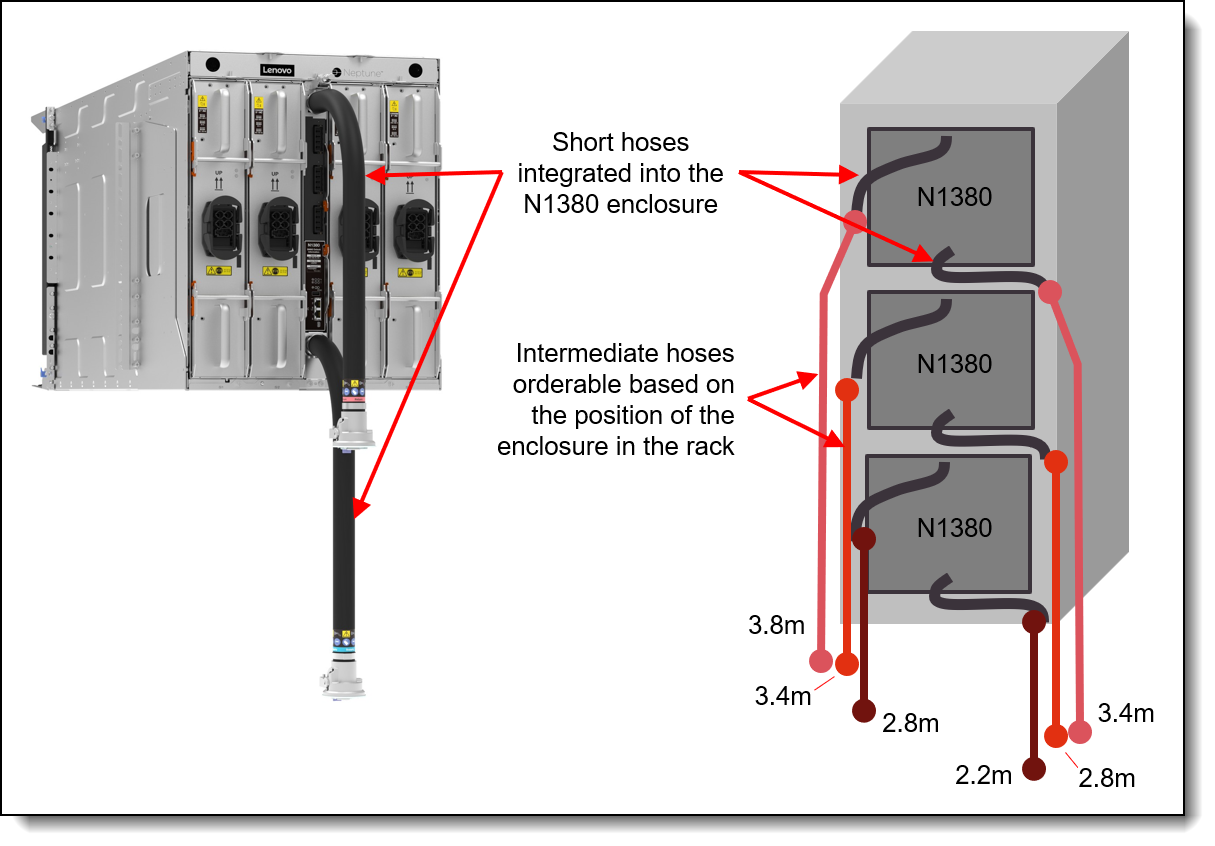

Water connections

Water connections to the N1380 enclosure are provided using hoses that connect directly to the coolant distribution unit (CDU), either an in-rack CDU or in-row CDU. As shown in the following figure, hose lengths required depend on the placement of the N1380 in the rack cabinet.

Figure 12. Hose lengths based on the position of the N1380 enclosure in the rack cabinet

Water connections are made up of the following:

- Short hoses permanently attached to the rear of the N1380 enclosure

- Intermediate hoses, orderable as three different pairs of hoses, based on the location of the N1380 enclosure in the rack. Ordering information for these hoses is listed in the table below.

- Connection to the CDU through additional hoses or valves on the data center water loop. Lenovo Datacenter Services can provide the end-to-end enablement for your water infrastructure needs.

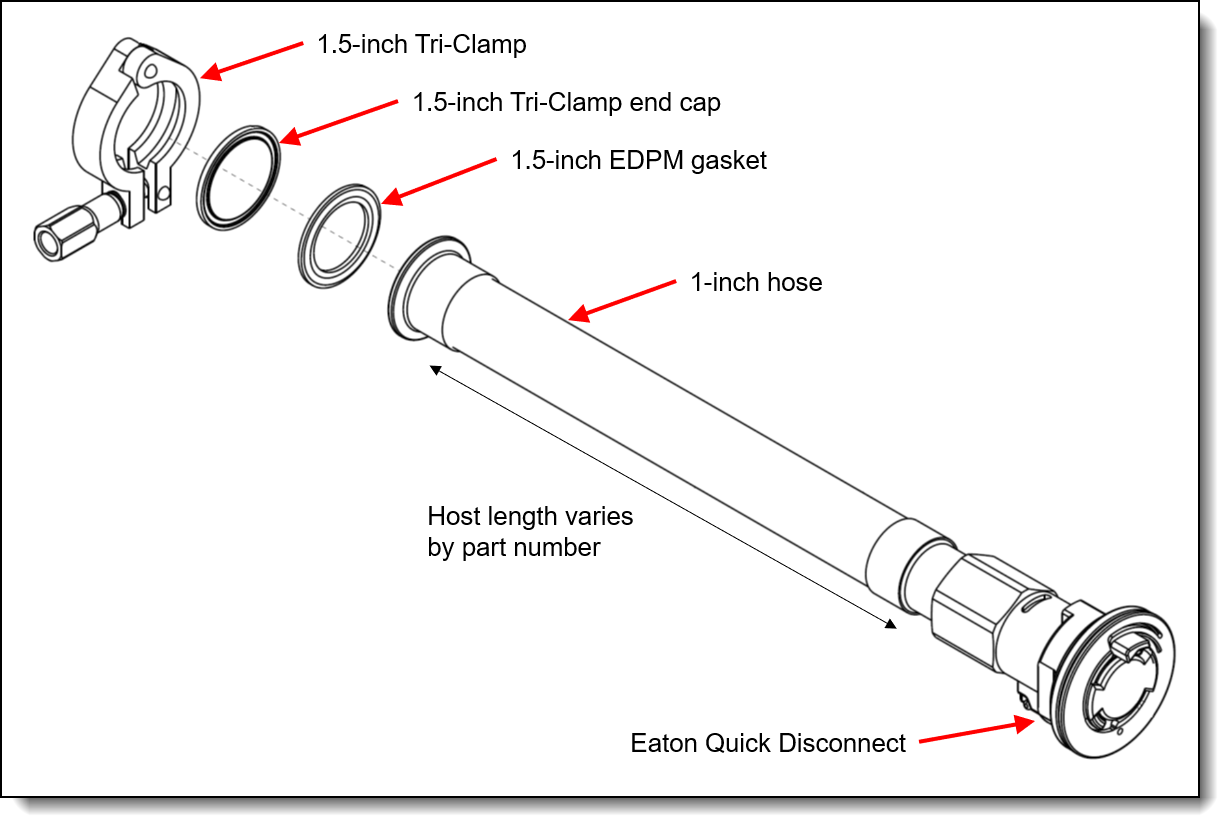

The Intermediate hoses have a Sanitary Flange with a 1.5-inch Tri-Clamp at the CDU/data center end, as shown in the figure below.

Figure 13. Connections of the Intermediate hoses

Ordering information for the Intermediate hoses to supply and return the water is listed in the following table. Hoses can be either stainless steel or EPDM, depending on the data center requirements.

For additional information, see the Cooling section.

To support the onsite setup for the direct water-cooled solution, a Commissioning Kit is available providing a flow meter, bleed hose, pressure gauge and vent valve. Ordering information is listed in the following table. Either kit supports the N1380 enclosure.

Power conversion stations

The N1380 enclosure supports up to four water-cooled Power Conversion Stations. The use of water-cooled power conversion stations enables an even greater amount of heat to be removed from the data center using water instead of air-conditioning.

The Power Conversion Stations supply internal system power to a 48V busbar. This innovative design merges power conversion, rectification, and distribution into a single unit, a departure from traditional setups that require separate units, including separate power supplies, resulting in best-in-class efficiency.

Figure 14. N1380 power conversion stations

The N1380 supports the power conversion stations listed in the following table.

Quantities of 2, 3 or 4 are supported. The power conversion stations provide N+1 redundancy with oversubscription, depending on population and configuration of the node trays. Power policies with N+N redundancy with 4x power conversion stations are supported. Power policies with no redundancy are also supported.

Tip: Use Lenovo Capacity Planner to determine the power needs for your rack installation. See the Lenovo Capacity Planner section for details.

The power conversion stations have the following features:

- 80 PLUS Titanium certified

- Input: 342~528Vac (nominal 380~480Vac), 3-phase WYE

- Input current: 30A per phase

- Supports N+N or N+1 power redundancy, or non-redundant power configurations

- Power management configured through the SMM

- Built-in overload and surge protection

- Full telemetry of PCS available through SMM GUI or Redfish including: energy, power, voltage, current, and overall conversion efficiency

Power policies and power output

The following table lists the enclosure power capacity based on the power policy and the number of power modules installed.

* The numbers in parentheses are the chassis power capacity if no throttling is required when the redundancy is degraded (e.g. 3+1 and 1 PSU fails)

Notes in the table:

- N+1+OVS (oversubscription) is the default if >1 PSU is installed. N+0 is the default with 1 PSU installed.

- If N+N is selected and there is an AC domain fault (2 PSUs go down), the power capacity is reduced to the value shown in parentheses within 1 second. Example: If the 2+2 policy is selected and all 4 PSUs are operational, the power capacity is 36 kW DC. If an AC domain faults, 2 PSUs go down and the power capacity is reduced to 30 kW within 1 second.

- If N+1 is selected and there is a PSU fault, the power capacity is reduced to no more than the value shown in parentheses within 1 second. Example: If the 3+1 policy is selected and all 4 PSUs are operational, the power capacity is 54 kW DC. If a PSU faults, the power capacity is reduced to 45 kW or less within 1 second. GPU trays are hard-throttled with PWRBRK#. Intel trays are proportionally throttled with Psys power capping.

- If the chassis is in the degraded redundancy state due to 1 or more PSU faults and an additional PSU fails, the chassis will attempt to stay alive by hard-throttling the trays (via PROCHOT# and PWRBRK#), but there is no guarantee. There is a risk that the chassis could power off if the PSU rating is exceeded.

- For the redundant power policies, only OVS modes are selectable in SMM (that is, OVS is always enabled). When a user creates a hardware configuration in DCSC, a chassis max power estimate is completed via an LCP API call. If the chassis DC max power is between the chassis degraded power capacity shown in parentheses and the chassis normal power capacity, a warning is displayed stating that the server could be throttled if a PSU fails. If the customer doesn’t want potential throttling, they need to either reduce their configuration or select a different combination of PSUs and policy to achieve a higher power budget.

- Possible future feature
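The configurator warning described in the notes above amounts to comparing an estimated chassis maximum DC power against two thresholds. The following is a minimal sketch of that comparison, not the actual DCSC or LCP logic: the function name, the return labels, the inclusive boundaries, and the "over-budget" case are assumptions made for illustration.

```python
def assess_power_budget(max_dc_kw: float, normal_kw: float, degraded_kw: float) -> str:
    """Classify an estimated chassis max DC power against a policy's capacities.

    normal_kw   -- capacity with all power conversion stations operational
    degraded_kw -- capacity after a redundancy loss (the value in parentheses)
    """
    if degraded_kw > normal_kw:
        raise ValueError("degraded capacity cannot exceed normal capacity")
    if max_dc_kw <= degraded_kw:
        return "ok"                  # no throttling expected after a fault
    if max_dc_kw <= normal_kw:
        return "warn-may-throttle"   # fits normally, may throttle if a PSU fails
    return "over-budget"             # assumed case: exceeds the normal capacity
```

Using the 3+1 example from the notes (54 kW normal, 45 kW degraded), a hypothetical 50 kW configuration would be classified as `"warn-may-throttle"`, while a 40 kW configuration would be `"ok"`.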

Power cables

The power conversion stations function at a 32A current, but the power cables enable two PCS units to share a single 63A connection from the data center. Consequently, for racks fully equipped with 12 power conversion stations, only six power connections from the data center are needed.

The N1380 enclosure supports the following power cables.

System Management

The SC777 V4 contains an integrated service processor, XClarity Controller 3 (XCC3), which provides advanced control, monitoring, and alerting functions. The XCC3 is based on the AST2600 baseboard management controller (BMC) using a dual-core ARM Cortex A7 32-bit RISC service processor running at 1.2 GHz.

Topics in this section:

- System I/O Board (DC-SCM)

- Local management

- External Diagnostics Handset

- System status with XClarity Mobile

- Remote management

- XCC3 Premier

- Remote management using the SMM3

- Lenovo XClarity Essentials

- Lenovo XClarity Administrator

- Lenovo XClarity One

- Lenovo Capacity Planner

- Lenovo HPC & AI Software Stack

- NVIDIA AI Enterprise

System I/O Board (DC-SCM)

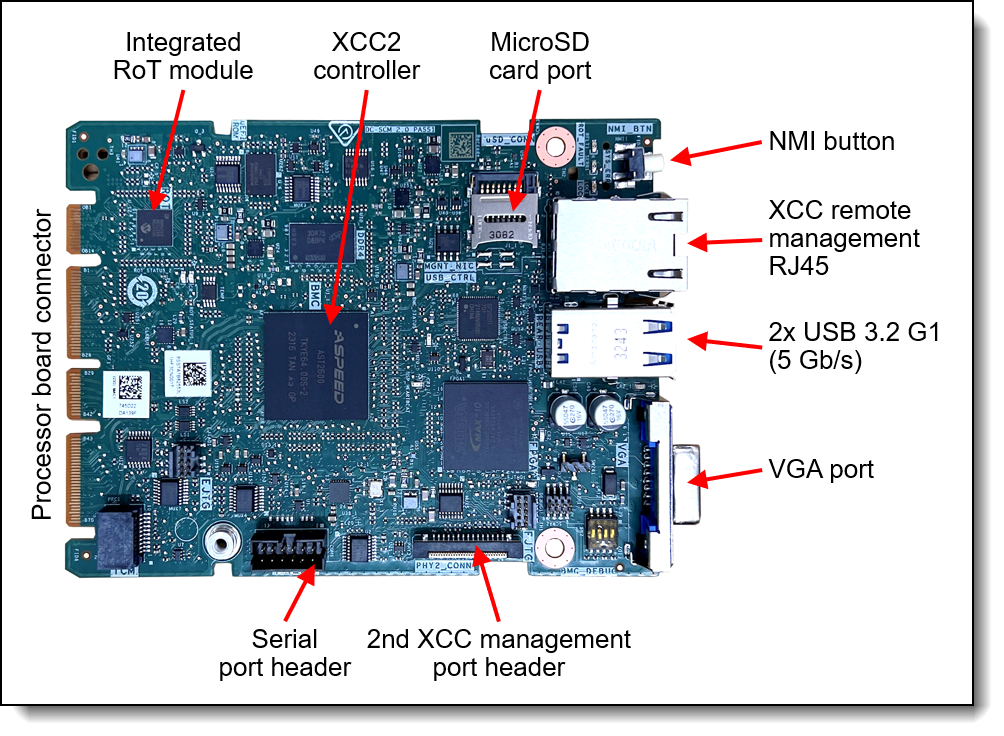

The SC777 V4 implements a separate System I/O Board, also known as the DC-SCM (Data Center Secure Control Module, DCSCM), that connects to the system board as shown in the Internal view in the Components and connectors section. The System I/O Board contains connectors that are accessible from the exterior of the server as shown in the following figure.

Note: The NMI (non-maskable interrupt) button is no longer present on the board. Lenovo recommends using the NMI function that is part of the XCC user interfaces instead.

The board also has the following components:

- XClarity Controller 3, implemented using the ASPEED AST2600 baseboard management controller (BMC).

- Root of Trust (RoT) module - implements Platform Firmware Resiliency (PFR) hardware Root of Trust (RoT) which enables the server to be NIST SP800-193 compliant. For more details about PFR, see the Security section.

- MicroSD card port to enable the use of a MicroSD card for additional storage for use with the XCC3 controller. XCC3 can use the storage as a Remote Disc on Card (RDOC) device (up to 4GB of storage). It can also be used to store firmware updates (including N-1 firmware history) for ease of deployment.

Tip: Without a MicroSD card installed, the XCC controller will have 100MB of available RDOC storage.

Ordering information for the supported MicroSD cards is listed in the MicroSD for XCC local storage section.

Local management

The following figure shows the ports and LEDs on the front of the SC777 V4.

Figure 16. SC777 V4 Front operator panel

The LEDs are as follows:

- Identification (ID) LED (blue): Activated remotely via XCC to identify a specific server to local service engineers

- Error LED (yellow): Indicates if there is a system error. The error is reported in the XCC system log.

- Root of Trust fault LED (yellow): Indicates if there is an error with the Root of Trust (RoT) module

- Drive LED: Indicates activity on any drive

Information pull-out tab

The front of the server also houses an information pull-out tab (also known as the network access tag). See Figure 2 for the location. A label on the tab shows the network information (MAC address and other data) to remotely access the service processor.

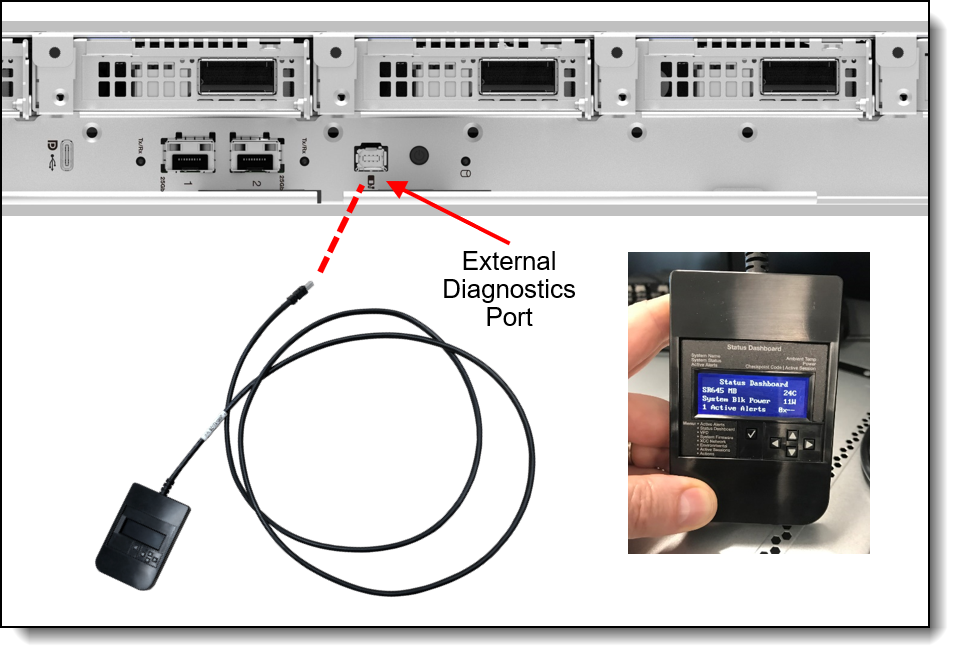

External Diagnostics Handset

The SC777 V4 has a port to connect an External Diagnostics Handset as shown in the following figure.

The External Diagnostics Handset allows quick access to system status, firmware, network, and health information. The LCD display on the panel and the function buttons give you access to the following information:

- Active alerts

- Status Dashboard

- System VPD: machine type and model, serial number, UUID string

- System firmware levels: UEFI and XCC firmware

- XCC network information: hostname, MAC address, IP address, DNS addresses

- Environmental data: Ambient temperature, CPU temperature, AC input voltage, estimated power consumption

- Active XCC sessions

- System reset action

The handset has a magnet on the back of it to allow you to easily mount it on a convenient place on any rack cabinet.

Figure 17. SC777 V4 External Diagnostics Handset

Ordering information for the External Diagnostics Handset is listed in the following table.

System status with XClarity Mobile

The XClarity Mobile app includes a tethering function where you can connect your Android or iOS device to the server via USB to see the status of the server.

The steps to connect the mobile device are as follows:

- Enable USB Management on the server, by holding down the ID button for 3 seconds (or pressing the dedicated USB management button if one is present)

- Connect the mobile device via a USB cable to the server's USB port with the management symbol

- In iOS or Android settings, enable Personal Hotspot or USB Tethering

- Launch the Lenovo XClarity Mobile app

Once connected you can see the following information:

- Server status including error logs (read only, no login required)

- Server management functions (XClarity login credentials required)

Remote management

The 1Gb onboard port and one of the 25Gb onboard ports (port 1) on the front of the SC777 V4 offer a connection to the XCC for remote management. This shared-NIC functionality allows the ports to be used both for operating system networking and for remote management.

Remote server management is provided through industry-standard interfaces:

- Intelligent Platform Management Interface (IPMI) Version 2.0

- Simple Network Management Protocol (SNMP) Version 3 (no SET commands; no SNMP v1)

- Common Information Model (CIM-XML)

- Representational State Transfer (REST) support

- Redfish support (DMTF compliant)

- Web browser - HTML 5-based browser interface (Java and ActiveX not required) using a responsive design (content optimized for device being used - laptop, tablet, phone) with NLS support
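As an illustration of the Redfish interface listed above, the sketch below builds an HTTPS request for the Redfish service root, which the DMTF specification places at `/redfish/v1/`. It is a minimal example using only the Python standard library; the host address, credentials, and helper names are hypothetical, and the resources actually exposed by the XCC3 should be checked in the Lenovo documentation.

```python
import base64
import json
import urllib.request
from typing import Optional


def build_redfish_request(host: str, path: str = "/redfish/v1/",
                          user: Optional[str] = None,
                          password: Optional[str] = None) -> urllib.request.Request:
    """Build a GET request for a Redfish resource on a management controller."""
    req = urllib.request.Request(f"https://{host}{path}", method="GET")
    req.add_header("Accept", "application/json")
    if user is not None and password is not None:
        # HTTP Basic authentication; Redfish also defines session-based login.
        token = base64.b64encode(f"{user}:{password}".encode()).decode()
        req.add_header("Authorization", f"Basic {token}")
    return req


def fetch_json(req: urllib.request.Request, timeout_s: float = 10.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # 192.0.2.10 is a documentation-only address; replace it with the XCC address.
    request = build_redfish_request("192.0.2.10")
    print(request.full_url)
```

The request builder is kept separate from the network call so that the URL and headers can be inspected without contacting a server. A controller that presents a self-signed certificate would additionally need a suitable TLS trust configuration, which is not shown here.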

The 25Gb Port 1 connector supports NC-SI. You can enable NC-SI in the factory using the feature code listed in the following table. If the feature code isn't selected, the port will have NC-SI disabled.

IPMI via the Ethernet port (IPMI over LAN) is supported; however, it is disabled by default. For CTO orders, you can specify whether you want the feature enabled or disabled in the factory, using the feature codes listed in the following table.

XCC3 Premier

In the SC777 V4, XCC3 has the Premier level of features built into the server. XCC3 Premier in ThinkSystem V4 servers is equivalent to the XCC2 Premium offering in ThinkSystem V3 servers.

Configurator tip: Even though XCC3 Premier is a standard feature of the SC777 V4, it does not appear in the list of feature codes in the configurator.

XCC3 Premier includes the following functions:

- System Guard - Monitor hardware inventory for unexpected component changes, and simply log the event or prevent booting

- Neighbor Group - Enables administrators to manage and synchronize configurations and firmware level across multiple servers

- Syslog alerting

- Lenovo SED security key management

- Boot video capture and crash video capture

- Virtual console collaboration - Ability for up to 6 remote users to be logged into the remote session simultaneously

- Remote console Java client

- System utilization data and graphic view

- Single sign on with Lenovo XClarity Administrator

- Update firmware from a repository

- Enterprise Strict Security mode - Enforces CNSA 1.0 level security

- Remotely viewing video with graphics resolutions up to 1600x1200 at 75 Hz with up to 23 bits per pixel, regardless of the system state

- Remotely accessing the server using the keyboard and mouse from a remote client

- International keyboard mapping support

- Redirecting serial console via SSH

- Component replacement log (Maintenance History log)

- Access restriction (IP address blocking)

- Displaying graphics for real-time and historical power usage data and temperature

- Mapping the ISO and image files located on the local client as virtual drives for use by the server

- Mounting the remote ISO and image files via HTTPS, SFTP, CIFS, and NFS

- Power capping

With XCC3 Premier, for CTO orders, you can request that System Guard be enabled in the factory and the first configuration snapshot be recorded. To add this to an order, select the feature code listed in the following table. The selection is made in the Security tab of the configurator.

| Feature code | Description |
|---|---|
| BUT2 | Install System Guard |

For more information about System Guard, see https://pubs.lenovo.com/xcc2/NN1ia_c_systemguard

Remote management using the SMM3

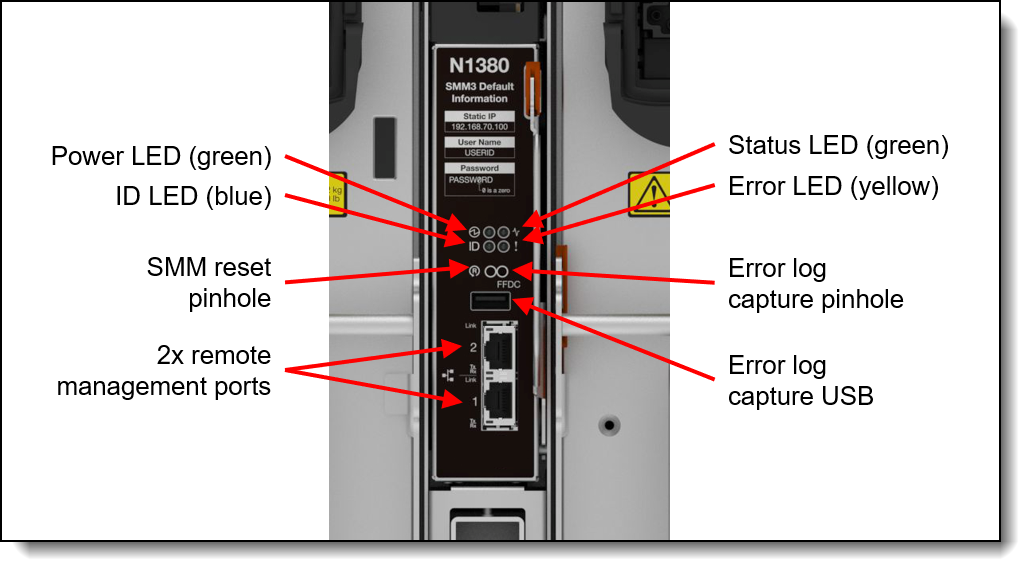

The N1380 enclosure includes a System Management Module 3 (SMM), installed in the rear of the enclosure. See Enclosure rear view for the location of the SMM. The SMM provides remote management of both the enclosure and the individual servers installed in the enclosure. The SMM can be accessed through a web browser interface and via Intelligent Platform Management Interface (IPMI) 2.0 commands.

The SMM provides the following functions:

- Remote connectivity to XCC controllers in each node in the enclosure

- Node-level reporting and control (for example, node virtual reseat/reset)

- Enclosure power management

- Enclosure thermal management

- Enclosure inventory

The following figure shows the LEDs and connectors of the SMM.

The SMM has the following ports and LEDs:

- 2x Gigabit Ethernet RJ45 ports for remote management access

- USB port and activation button for service

- SMM reset button

- System error LED (yellow)

- Identification (ID) LED (blue)

- Status LED (green)

- System power LED (green)

The USB service button and USB service port are used to gather service data in the event of an error. Pressing the service button copies First Failure Data Collection (FFDC) data to a USB key installed in the USB service port. The reset button is used to perform an SMM reset (short press) or to restore the SMM back to factory defaults (press for 4+ seconds).

The two RJ45 Ethernet ports make it possible to daisy-chain the Ethernet management connections, thereby reducing the number of ports you need in your management switches and reducing the overall cable density needed for systems management. With this feature, you can connect the first SMM to your management network, and the SMM in a second enclosure connects to the first SMM. The SMM in the third enclosure can then connect to the SMM in the second enclosure.

Up to 3 enclosures can be connected in a daisy-chain configuration and all 48 servers in those enclosures can be managed remotely via one single Ethernet connection.

Notes:

- If you are using IEEE 802.1D spanning tree protocol (STP) then at most 3 enclosures can be connected together

- Do not form a loop with the network cabling. The dual-port SMM at the end of the chain should not be connected back to the switch that is connected to the top of the SMM chain.
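The saving in management switch ports from daisy-chaining can be estimated with simple arithmetic. The sketch below assumes chains of at most 3 enclosures, as stated above; the figure of 16 servers per enclosure is inferred from the 48 servers across 3 enclosures, and the function name is hypothetical.

```python
import math

MAX_CHAIN_LENGTH = 3        # enclosures per daisy chain (from the text above)
SERVERS_PER_ENCLOSURE = 16  # inferred from 48 servers across 3 enclosures


def management_uplinks(enclosures: int, chain_length: int = MAX_CHAIN_LENGTH) -> int:
    """Return the number of switch ports needed to reach every SMM."""
    if enclosures < 0:
        raise ValueError("enclosures must not be negative")
    if not 1 <= chain_length <= MAX_CHAIN_LENGTH:
        raise ValueError("chain_length must be between 1 and 3")
    return math.ceil(enclosures / chain_length)


if __name__ == "__main__":
    for count in (3, 7, 12):
        servers = count * SERVERS_PER_ENCLOSURE
        print(f"{count} enclosures ({servers} servers): "
              f"{management_uplinks(count)} uplinks chained, "
              f"{management_uplinks(count, chain_length=1)} unchained")
```

Setting `chain_length=1` models the case without daisy-chaining, where every SMM needs its own switch port.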

Lenovo XClarity Essentials

Lenovo offers the following XClarity Essentials software tools that can help you set up, use, and maintain the server at no additional cost:

- Lenovo Essentials OneCLI

OneCLI is a collection of server management tools that uses a command line interface program to manage firmware, hardware, and operating systems. It provides functions to collect full system health information (including health status), configure system settings, and update system firmware and drivers.

- Lenovo Essentials UpdateXpress

The UpdateXpress tool is a standalone GUI application for firmware and device driver updates that enables you to keep your server firmware and device drivers up to date and helps you avoid unnecessary server outages. The tool acquires and deploys individual updates and UpdateXpress System Packs (UXSPs), which are integration-tested bundles.

- Lenovo Essentials Bootable Media Creator

The Bootable Media Creator (BOMC) tool is used to create bootable media for offline firmware update.

ThinkSystem V4 servers: The format of UEFI and BMC settings has changed for ThinkSystem V4 servers, to align with OpenBMC and Redfish requirements. See the documentation of these tools for details. As a result, the following versions are required for these servers:

- OneCLI 5.x or later

- UpdateXpress 5.x or later

- BOMC 14.x or later

For more information and downloads, visit the Lenovo XClarity Essentials web page:

http://support.lenovo.com/us/en/documents/LNVO-center

Lenovo XClarity Administrator

Lenovo XClarity Administrator is a centralized resource management solution designed to reduce complexity, speed response, and enhance the availability of Lenovo systems and solutions. It provides agent-free hardware management for ThinkSystem servers. The administration dashboard is based on HTML 5 and allows fast location of resources so tasks can be run quickly.

Because Lenovo XClarity Administrator does not require any agent software to be installed on the managed endpoints, there are no CPU cycles spent on agent execution, and no memory is used, which means that up to 1GB of RAM and 1 - 2% CPU usage is saved, compared to a typical managed system where an agent is required.

Lenovo XClarity Administrator is an optional software component for the SC777 V4. The software can be downloaded and used at no charge to discover and monitor the SC777 V4 and to manage firmware upgrades.

If software support is required for Lenovo XClarity Administrator, or premium features such as configuration management and operating system deployment are required, Lenovo XClarity Pro software subscription should be ordered. Lenovo XClarity Pro is licensed on a per managed system basis, that is, each managed Lenovo system requires a license.

The following table lists the Lenovo XClarity software license options.

Lenovo XClarity Administrator offers the following standard features that are available at no charge:

- Auto-discovery and monitoring of Lenovo systems

- Firmware updates and compliance enforcement

- External alerts and notifications via SNMP traps, syslog remote logging, and e-mail

- Secure connections to managed endpoints

- NIST 800-131A or FIPS 140-3 compliant cryptographic standards between the management solution and managed endpoints

- Integration into existing higher-level management systems such as cloud automation and orchestration tools through REST APIs, providing extensive external visibility and control over hardware resources

- An intuitive, easy-to-use GUI

- Scripting with Windows PowerShell, providing command-line visibility and control over hardware resources

Lenovo XClarity Administrator offers the following premium features that require an optional Pro license:

- Pattern-based configuration management that allows you to define configurations once and apply them repeatedly without errors when deploying new servers or redeploying existing servers, without disrupting the fabric

- Bare-metal deployment of operating systems and hypervisors to streamline infrastructure provisioning

For more information, refer to the Lenovo XClarity Administrator Product Guide:

http://lenovopress.com/tips1200

Lenovo XClarity One

Lenovo XClarity One is a hybrid cloud-based unified Management-as-a-Service (MaaS) platform, built for growing enterprises. XClarity One is powered by Lenovo Smarter Support, a powerful AI-driven platform that leverages predictive analytics to enhance the performance, reliability, and overall efficiency of Lenovo servers.

XClarity One is the next milestone in Lenovo’s portfolio of systems management products. Now you can leverage the benefits of a true next-generation, hybrid cloud-based solution for the deployment, management, and maintenance of your infrastructure through a single, centralized platform that delivers a consistent user experience across all Lenovo products.

Key features include:

- AI-powered Automation

Harnesses the power of AI and predictive analytics to enhance the performance and reliability of your infrastructure with proactive protection.

- AI-Powered Predictive Failure Analytics - predict maintenance needs before the failure occurs, with the ability to visualize aggregated actions in customer dashboard.

- AI-Powered Call-Home - A Call-Home serviceable event opens a support ticket automatically, leveraging AI technology for problem determination and fast resolution.

- AI-Powered Premier Support with Auto CRU - uses AI to automatically dispatch parts and services, reducing service costs and minimizing downtime.

- Secure Management Hub

Lenovo’s proprietary Management Hub is an on-premises virtual appliance that acts as the bridge between your infrastructure and the cloud.

- On-Premises Security with Cloud Flexibility - your infrastructure has no direct connection to the cloud, greatly reducing your attack surface from external threats while still having the deployment benefits, flexibility, and scalability of a cloud solution.

- Authentication and Authorization - built on a Zero Trust Architecture and requiring OTP Application authentication for all users to handle the support of all customers’ servers and client devices. Role-based access controls help define and restrict permissions based on user roles.

- AI-Powered Management

Go beyond standard system management leveraging AI algorithms to continuously learn from data patterns to optimize performance and predict potential issues before they impact operations.

- AI Customizable Insights and Reporting - Customize AI-generated insights and reports to align with specific business objectives, enabling data-driven decision-making and strategic planning.

- AI-driven scalability and flexibility - Guided with AI-driven predictions, the platform supports dynamic scaling of resources based on workload demands.

- Monitor and Change - AI Advanced analytics capabilities providing deep insights into server performance, resource utilization, and security threats, to detect anomalies and suggest optimizations in real-time. NLP capabilities enabling administrators to interact with the platform using voice commands or text queries.

- Upward Integration - Integrated with Lenovo Open Cloud Automation (LOC-A), Lenovo Intelligent Computing Orchestration (LiCO) and AIOps engines, providing an end-to-end management architecture across Lenovo infrastructure and devices solutions.

- Cross-Platform Compatibility - Compatibility across different server types and cloud environments

Lenovo XClarity One is an optional management component. License information for XClarity One is listed in the following table.

For more information, see these resources:

- Lenovo XClarity One datasheet:

https://lenovopress.lenovo.com/ds0188-lenovo-xclarity-one

- Lenovo XClarity One product guide:

https://lenovopress.lenovo.com/lp1992-lenovo-xclarity-one

Lenovo Capacity Planner

Lenovo Capacity Planner is a power consumption evaluation tool that enhances data center planning by enabling IT administrators and pre-sales professionals to understand various power characteristics of racks, servers, and other devices. Capacity Planner can dynamically calculate the power consumption, current, British Thermal Unit (BTU), and volt-ampere (VA) rating at the rack level, improving the planning efficiency for large scale deployments.

For more information, refer to the Capacity Planner web page:

http://datacentersupport.lenovo.com/us/en/solutions/lnvo-lcp

Lenovo HPC & AI Software Stack

The Lenovo HPC & AI Software Stack combines open-source with proprietary best-of-breed Supercomputing software to provide the most consumable open-source HPC software stack embraced by all Lenovo HPC customers.

It provides a fully tested and supported, complete but customizable HPC software stack that enables administrators and users to utilize their Lenovo supercomputers optimally and in an environmentally sustainable way.

The Lenovo HPC & AI Software Stack is built on the most widely adopted and maintained HPC community software for orchestration and management. It integrates third-party components, especially for programming environments and performance optimization, to complement and enhance these capabilities, forming a software and service umbrella that adds value for our customers.

The key open-source components of the software stack are as follows:

- Confluent Management

Confluent is Lenovo-developed open-source software designed to discover, provision, and manage HPC clusters and the nodes that comprise them. Confluent provides powerful tooling to deploy and update software and firmware to multiple nodes simultaneously, with simple and readable modern software syntax.

- Slurm Orchestration

Slurm is integrated as an open-source, flexible, and modern workload manager for complex workloads. It enables faster processing and optimal utilization of the large-scale, specialized HPC and AI resources that Lenovo systems provide for each workload. Lenovo provides support in partnership with SchedMD.

- LiCO Webportal

Lenovo Intelligent Computing Orchestration (LiCO) is a Lenovo-developed consolidated Graphical User Interface (GUI) for monitoring, managing and using cluster resources. The webportal provides workflows for both AI and HPC, and supports multiple AI frameworks, including TensorFlow, Caffe, Neon, and MXNet, allowing you to leverage a single cluster for diverse workload requirements.

- Energy Aware Runtime

EAR is a powerful European open-source energy management suite supporting everything from monitoring and power capping to live optimization during application runtime. Lenovo is collaborating with Barcelona Supercomputing Centre (BSC) and EAS4DC on its continuous development and support, and offers three versions with differing capabilities.

For more information and ordering information, see the Lenovo HPC & AI Software Stack product guide:

https://lenovopress.com/lp1651

The key non-open-source components of the software stack for the SC777 V4 are:

- NVIDIA CUDA

NVIDIA CUDA is a parallel computing platform and programming model for general computing on graphics processing units (GPUs). With CUDA, developers are able to dramatically speed up computing applications by harnessing the power of GPUs. When using CUDA, developers program in popular languages such as C, C++, Fortran, Python and MATLAB and express parallelism through extensions in the form of a few basic keywords. For more information, see the NVIDIA CUDA Zone.

- NVIDIA HPC Software Development Kit

The NVIDIA HPC SDK C, C++, and Fortran compilers support GPU acceleration of HPC modeling and simulation applications with standard C++ and Fortran, OpenACC directives, and CUDA. GPU-accelerated math libraries maximize performance on common HPC algorithms, and optimized communications libraries enable standards-based multi-GPU and scalable systems programming. Performance profiling and debugging tools simplify porting and optimization of HPC applications, and containerization tools enable easy deployment on-premises or in the cloud. For more information, see the NVIDIA HPC SDK.

NVIDIA AI Enterprise

The SC777 V4 is designed for NVIDIA AI Enterprise, which is a comprehensive suite of artificial intelligence and data analytics software designed for optimized development and deployment in enterprise settings.

NVIDIA AI Enterprise includes workload and infrastructure management software known as Base Command Manager. This software provisions the AI environment, incorporating the components such as the Operating System, Kubernetes (K8S), GPU Operator, and Network Operator to manage the AI workloads.

Additionally, NVIDIA AI Enterprise provides access to ready-to-use open-sourced containers and frameworks from NVIDIA like NVIDIA NeMo, NVIDIA RAPIDS, NVIDIA TAO Toolkit, NVIDIA TensorRT and NVIDIA Triton Inference Server.

- NVIDIA NeMo is an end-to-end framework for building, customizing, and deploying enterprise-grade generative AI models; NeMo lets organizations easily customize pretrained foundation models from NVIDIA and select community models for domain-specific use cases.

- NVIDIA RAPIDS is an open-source suite of GPU-accelerated data science and AI libraries with APIs that match the most popular open-source data tools. It accelerates performance by orders of magnitude at scale across data pipelines.

- NVIDIA TAO Toolkit simplifies model creation, training, and optimization with TensorFlow and PyTorch, and enables creating custom, production-ready AI models by fine-tuning NVIDIA pretrained models and large training datasets.

- NVIDIA TensorRT, an SDK for high-performance deep learning inference, includes a deep learning inference optimizer and runtime that delivers low latency and high throughput for inference applications. TensorRT is built on the NVIDIA CUDA parallel programming model and enables you to optimize inference using techniques such as quantization, layer and tensor fusion, kernel tuning, and others on NVIDIA GPUs. https://developer.nvidia.com/tensorrt-getting-started

- NVIDIA TensorRT-LLM is an open-source library that accelerates and optimizes inference performance of the latest large language models (LLMs). TensorRT-LLM wraps TensorRT’s deep learning compiler and includes optimized kernels from FasterTransformer, pre- and post-processing, and multi-GPU and multi-node communication. https://developer.nvidia.com/tensorrt

- NVIDIA Triton Inference Server optimizes the deployment of AI models at scale and in production for both neural networks and tree-based models on GPUs.

NVIDIA AI Enterprise also provides full access to the NVIDIA NGC catalogue, a collection of tested enterprise software, services, and tools supporting end-to-end AI and digital twin workflows, and can be integrated with MLOps platforms such as ClearML, Domino Data Lab, Run:ai, UbiOps, and Weights & Biases.

Finally, NVIDIA AI Enterprise introduced NVIDIA Inference Microservices (NIM), a set of performance-optimized, portable microservices designed to accelerate and simplify the deployment of AI models. These containerized, GPU-accelerated pretrained, fine-tuned, and customized models are ideally suited to be self-hosted and deployed on the SC777 V4.

Security

Security features

The server offers the following electronic security features:

- Support for Platform Firmware Resiliency (PFR) hardware Root of Trust (RoT) - see the Platform Firmware Resiliency section

- Firmware signature processes compliant with FIPS and NIST requirements

- System Guard (part of XCC3 Premier) - Proactive monitoring of hardware inventory for unexpected component changes

- Administrator and power-on password

- Integrated Trusted Platform Module (TPM) supporting TPM 2.0

The server is NIST SP 800-147B compliant.

The following table lists the security options for the SC777 V4.

Platform Firmware Resiliency - Lenovo ThinkShield

Lenovo's ThinkShield Security is a transparent and comprehensive approach to security that extends to all dimensions of our data center products: from development, to supply chain, and through the entire product lifecycle.

The ThinkSystem SC777 V4 includes Platform Firmware Resiliency (PFR) hardware Root of Trust (RoT), which enables the system to be NIST SP800-193 compliant. This offering further enhances key platform subsystem protections against unauthorized firmware updates and corruption, restores firmware to an integral state, and closely monitors firmware for possible compromise from cyber-attacks.

PFR operates upon the following server components:

- UEFI image – the low-level server firmware that connects the operating system to the server hardware

- XCC image – the management “engine” software that controls and reports on the server status separate from the server operating system

- FPGA image – the code that runs the server’s lowest level hardware controller on the motherboard

The Lenovo Platform Root of Trust Hardware performs the following three main functions:

- Detection – Measures the firmware and updates for authenticity

- Recovery – Recovers a corrupted image to a known-safe image

- Protection – Monitors the system to ensure the known-good firmware is not maliciously overwritten

These enhanced protection capabilities are implemented using a dedicated, discrete security processor whose implementation has been rigorously validated by leading third-party security firms. Security evaluation results and design details are available for customer review – providing unprecedented transparency and assurance.

The SC777 V4 includes support for Secure Boot, a UEFI firmware security feature defined by the UEFI Forum that ensures only immutable and signed software is loaded at boot time. The use of Secure Boot helps prevent malicious code from being loaded and helps prevent attacks, such as the installation of rootkits. Lenovo offers the capability to enable Secure Boot in the factory, to ensure end-to-end protection. Alternatively, Secure Boot can be left disabled in the factory, allowing the customer to enable it themselves at a later point, if desired.

The following table lists the relevant feature code(s).

Tip: If Secure Boot is not enabled in the factory, it can be enabled later by the customer. However, once Secure Boot is enabled, it cannot be disabled.
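On a Linux operating system booted in UEFI mode, the current Secure Boot state can usually be read from the standard `SecureBoot` UEFI variable exposed through efivarfs. The following is a minimal, generic sketch and not a Lenovo tool; it assumes efivarfs is mounted at `/sys/firmware/efi/efivars`, and the helper names `parse_secure_boot` and `secure_boot_enabled` are hypothetical.

```python
from pathlib import Path
from typing import Optional

# Standard UEFI global-variable GUID; the mount point is an assumption.
SECURE_BOOT_VAR = Path(
    "/sys/firmware/efi/efivars/SecureBoot-8be4df61-93ca-11d2-aa0d-00e098032b8c"
)


def parse_secure_boot(raw: bytes) -> bool:
    """Interpret an efivarfs read: 4 attribute bytes, then a 1-byte value."""
    if len(raw) < 5:
        raise ValueError("unexpected SecureBoot variable length")
    return raw[4] == 1  # 1 = enabled, 0 = disabled


def secure_boot_enabled(path: Path = SECURE_BOOT_VAR) -> Optional[bool]:
    """Return True/False, or None when the variable cannot be read."""
    try:
        return parse_secure_boot(path.read_bytes())
    except (FileNotFoundError, PermissionError):
        return None  # for example, efivarfs is not mounted


state = secure_boot_enabled()
print({True: "enabled", False: "disabled", None: "unknown"}[state])
```

This only reports the state seen by the running operating system; changing the setting is done through the system firmware configuration.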

Operating system support

The SC777 V4 supports the following operating systems:

- Red Hat Enterprise Linux 9.6

- Red Hat Enterprise Linux 10.0

- Ubuntu 24.04 LTS 64-bit

See the Operating System Interoperability Guide (OSIG) for the complete list of supported, certified, and tested operating systems, including version and point releases:

https://lenovopress.lenovo.com/osig#servers=sc777-v4-7dka

Also review the latest LeSI Best Recipe to see the operating systems that are supported via Lenovo Scalable Infrastructure (LeSI):

https://support.lenovo.com/us/en/solutions/HT505184#5

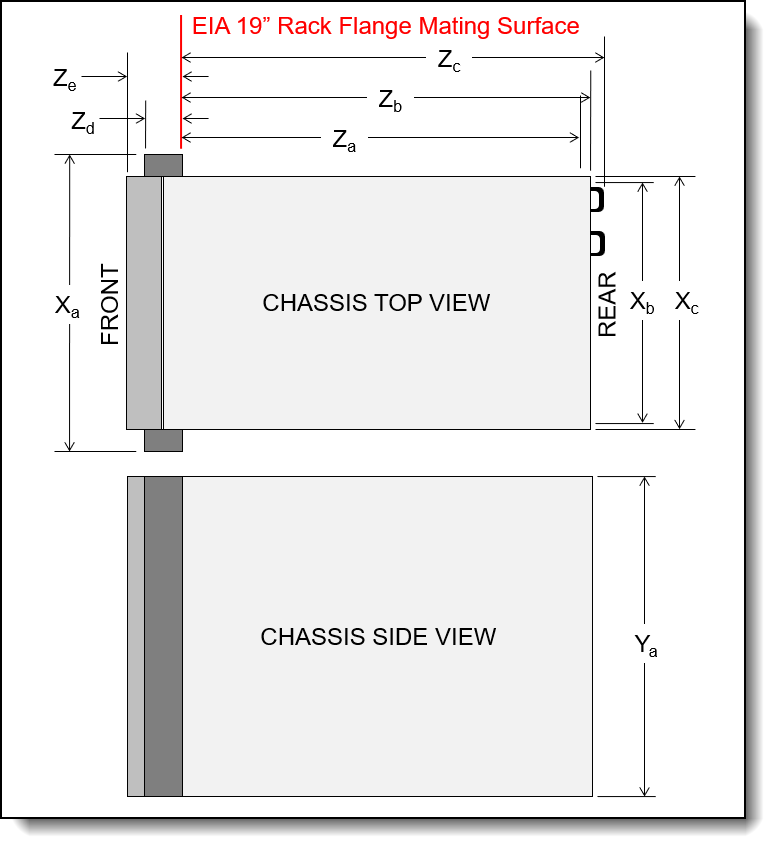

Physical and electrical specifications

Eight SC777 V4 server trays are installed in the N1380 enclosure. Each SC777 V4 tray has the following dimensions:

- Width: 546 mm (21.5 inches)

- Height: 53 mm (2.1 inches)

- Depth: 760 mm (29.9 inches) (799 mm, including the water connections at the rear of the server)

The N1380 enclosure has the following overall physical dimensions, excluding components that extend outside the standard chassis, such as EIA flanges, front security bezel (if any), and power conversion station handles:

- Width: 540 mm (21.3 inches)

- Height: 572 mm (22.5 inches)

- Depth: 1302 mm (51.2 inches)

The following table lists the detailed dimensions. See the figure below for the definition of each dimension.

Shipping (cardboard packaging) dimensions are currently not available for the SC777 V4.

The shipping (cardboard packaging) dimensions of the N1380 are as follows:

- Width: 800 mm (31.5 inches)

- Height: 1027 mm (40.4 inches)

- Depth: 1600 mm (63.0 inches)

The SC777 V4 tray has the following maximum weight:

- 38 kg (83.8 lbs)

The N1380 enclosure has the following weight:

- Empty enclosure (with midplane and cables): 94 kg (208 lbs)

- Fully configured enclosure with 4x water-cooled power conversion stations and 8x SC777 V4 server trays: 487.7 kg (1075 lbs)
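As a quick cross-check of these figures, the sketch below subtracts the empty enclosure and eight maximum-weight trays from the fully configured weight. The remainder of roughly 89.7 kg is presumably accounted for by the four power conversion stations and any other installed parts; that attribution is an assumption, because no breakdown is given here, and the function name is hypothetical.

```python
# Weights in kg, taken from the lists above
EMPTY_ENCLOSURE_KG = 94.0
TRAY_MAX_KG = 38.0
FULLY_CONFIGURED_KG = 487.7
TRAYS_PER_ENCLOSURE = 8


def remainder_after_trays_kg() -> float:
    """Weight left after removing the empty enclosure and eight full trays."""
    trays_kg = TRAYS_PER_ENCLOSURE * TRAY_MAX_KG  # 8 x 38 = 304 kg
    return FULLY_CONFIGURED_KG - EMPTY_ENCLOSURE_KG - trays_kg


print(f"Trays: {TRAYS_PER_ENCLOSURE * TRAY_MAX_KG:.1f} kg")
print(f"Remainder: {remainder_after_trays_kg():.1f} kg")
```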

The enclosure has the following electrical specifications for input power conversion stations:

- Input voltage: 180-528Vac (nominal 200-480Vac), 3-phase, WYE

- Input current (each power conversion station): 30A
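For planning purposes, the input current can be related to apparent power with the standard balanced three-phase formula S = √3 × V × I. The sketch below is a rough illustration that assumes the listed voltages are line-to-line values and that 30A is the per-phase line current; neither assumption is stated above. The result is an upper bound implied by the input current, not a power conversion station rating.

```python
import math


def three_phase_apparent_power_va(line_to_line_volts: float, line_amps: float) -> float:
    """Apparent power of a balanced three-phase feed: S = sqrt(3) * V_LL * I."""
    if line_to_line_volts <= 0 or line_amps < 0:
        raise ValueError("voltage must be positive and current non-negative")
    return math.sqrt(3) * line_to_line_volts * line_amps


# Example voltages within the nominal 200-480 Vac range, at 30 A per station
for volts in (200, 400, 480):
    kva = three_phase_apparent_power_va(volts, 30) / 1000
    print(f"{volts} Vac x 30 A -> {kva:.1f} kVA")
```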

Operating environment

The SC777 V4 server trays and N1380 enclosure are supported in the following environment.

Air temperature and humidity

Air temperature/humidity requirements:

- Operating:

  - ASHRAE A3: 5°C to 40°C (41°F to 104°F); the maximum ambient temperature decreases by 1°C for every 175 m (574 ft) increase in altitude above 900 m (2,953 ft).

- Powered off: 5°C to 45°C (41°F to 113°F)

- Shipping/storage: -40°C to 60°C (-40°F to 140°F)

- Operating humidity:

  - ASHRAE Class A3: 8% to 85%; maximum dew point: 24°C (75°F)

- Shipment/storage humidity: 8% to 90%

Altitude:

- Maximum altitude: 3048 m (10,000 ft)
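The altitude derating of the ASHRAE A3 operating limit can be worked through as follows. This is a minimal sketch that assumes the derating is applied linearly (continuously) rather than in whole-degree steps; the function name `max_ambient_c` is hypothetical.

```python
SEA_LEVEL_MAX_C = 40.0        # ASHRAE A3 upper operating limit
DERATING_START_M = 900.0      # derating applies above this altitude
METERS_PER_DEGREE = 175.0     # 1 degree C lost per 175 m
MAX_ALTITUDE_M = 3048.0       # maximum supported altitude


def max_ambient_c(altitude_m: float) -> float:
    """Maximum operating ambient temperature (degrees C) at a given altitude."""
    if altitude_m > MAX_ALTITUDE_M:
        raise ValueError("altitude exceeds the 3048 m maximum")
    excess_m = max(0.0, altitude_m - DERATING_START_M)
    return SEA_LEVEL_MAX_C - excess_m / METERS_PER_DEGREE


for altitude in (0, 900, 1075, 3048):
    print(f"{altitude} m -> {max_ambient_c(altitude):.1f} C")
```

Under this linear assumption, the limit at the maximum altitude of 3048 m works out to about 27.7°C.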

Water requirements